If you've been running Docker containers and think you're ready for Kubernetes, here's some bad news: you're not. Kubernetes is a different beast entirely. Minikube exists to bridge that gap by running a complete Kubernetes cluster on your laptop.

What Runs Inside That VM

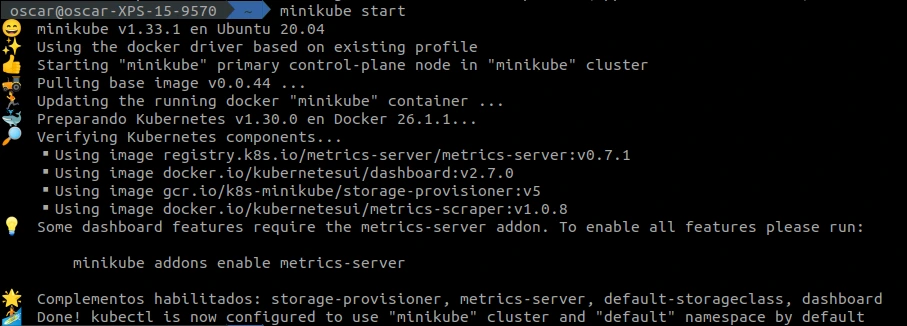

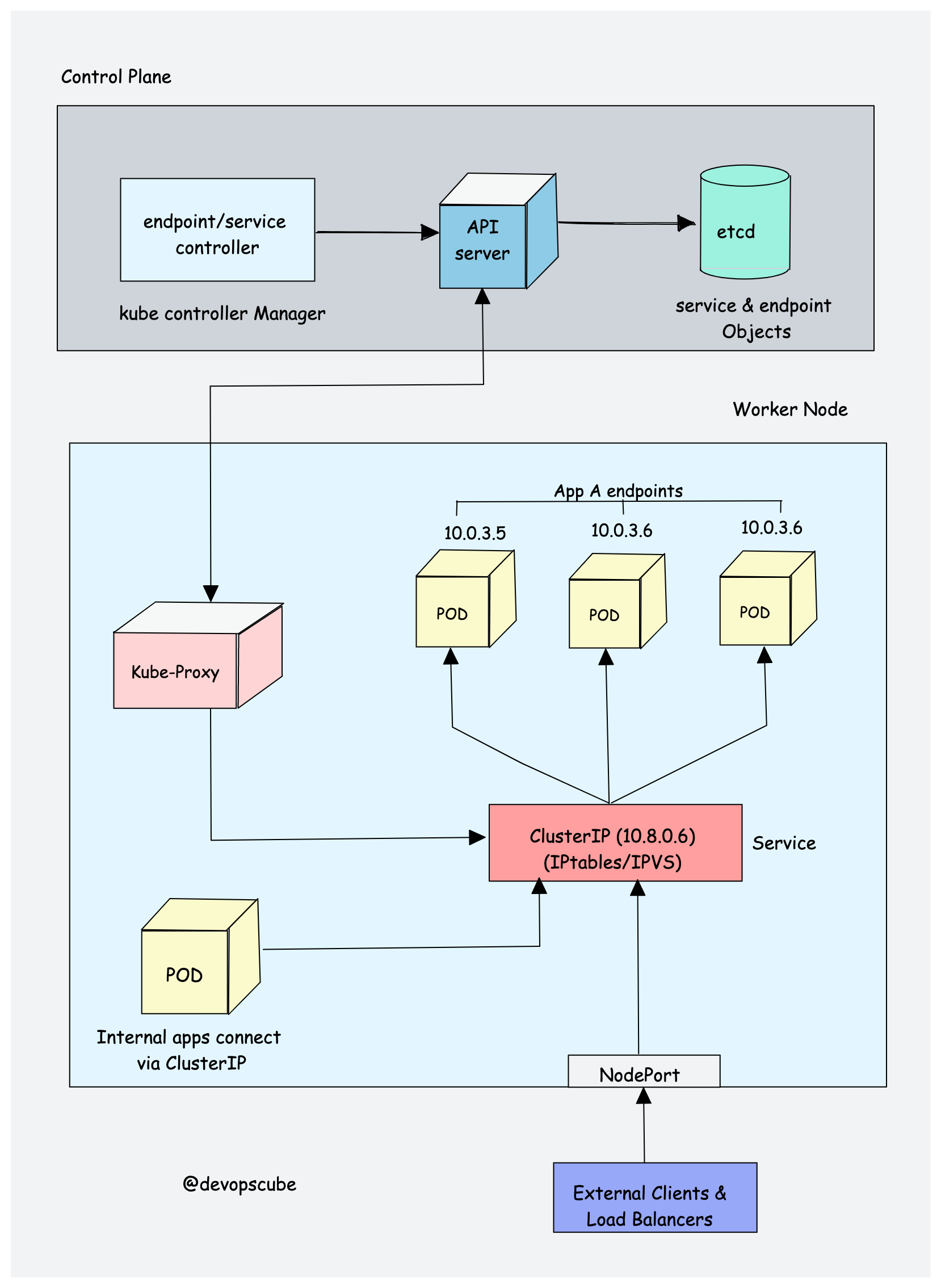

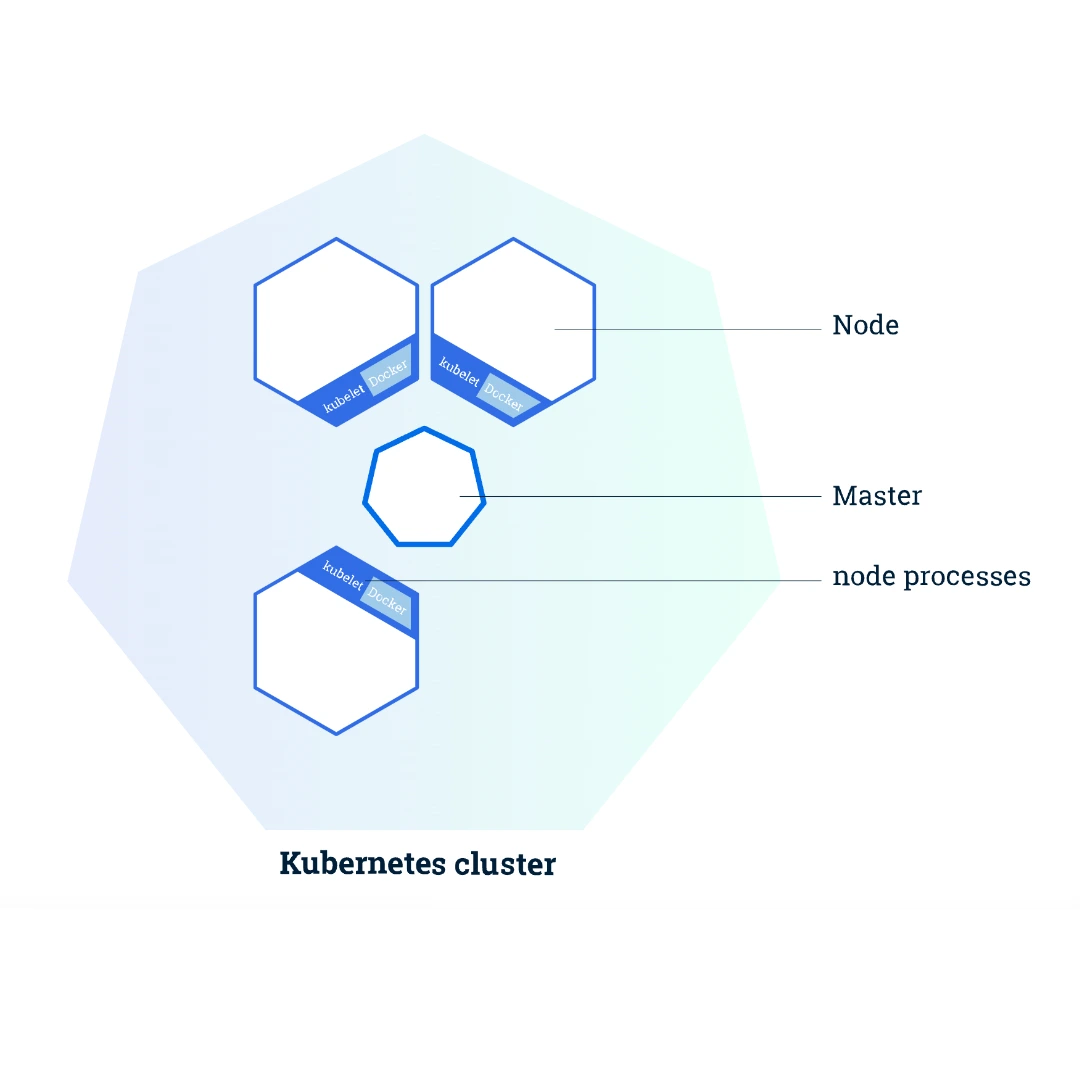

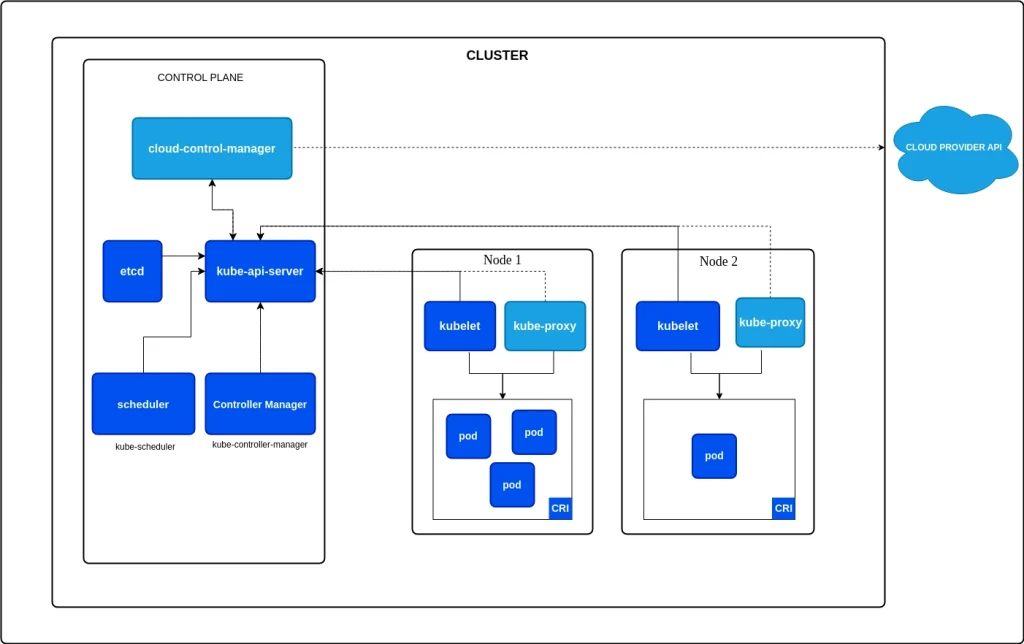

When you run minikube start, it spins up a VM (or container) with the entire Kubernetes control plane. This isn't just Docker - it's a full orchestration system with multiple components working together: the API server, etcd, scheduler, and controller manager.
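
To see those components for yourself, here is a minimal sketch. It assumes minikube and kubectl are both installed and on your PATH; the function name inspect_control_plane is just an illustrative wrapper, not part of either tool.

```shell
# Start a local cluster, then list what is running in the kube-system namespace.
inspect_control_plane() {
  minikube start || return 1
  # Expect pods named after etcd, kube-apiserver, kube-scheduler and
  # kube-controller-manager, alongside add-ons such as coredns and kube-proxy.
  kubectl get pods --namespace kube-system
}
```

Call inspect_control_plane from a terminal after sourcing the snippet. The exact pod names and add-ons can vary with the Minikube and Kubernetes versions you run.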


Why Teams Actually Use Minikube

Learning Without Breaking Things

The Kubernetes documentation points newcomers to Minikube, and for good reason: it gives you a real cluster to experiment with. You can break deployments, crash services, and mess up networking without affecting anyone else. I've seen developers go from Docker Compose to production Kubernetes in a few weeks by using Minikube to understand the concepts.

Reasonable Resource Usage

Minikube officially needs 2GB RAM and 2 CPUs, but that's optimistic. In practice, you want 4GB+ unless you enjoy watching pods take forever to start. Still, it's way lighter than running a multi-node cluster on your laptop.

CI/CD Testing

Most CI platforms support Minikube because it's cheaper than spinning up real clusters for every test run. I've worked on projects where our integration tests ran against Minikube - it's close enough to real Kubernetes for most scenarios.
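
A CI job along those lines might look like the sketch below. It assumes the CI runner already has Docker, minikube, and kubectl installed; ci_smoke_test is a hypothetical function name, and the comment marks where your own integration tests would go.

```shell
# Bring up a throwaway cluster, check that the node is Ready, then tear it down.
ci_smoke_test() {
  local rc=0
  # --wait=all blocks until the core components report healthy.
  minikube start --driver=docker --wait=all || rc=$?
  if [ "$rc" -eq 0 ]; then
    kubectl wait --for=condition=Ready nodes --all --timeout=120s || rc=$?
    # Your integration tests would run here (not shown).
  fi
  # Always delete the cluster so the next run starts clean.
  minikube delete
  return "$rc"
}
```

Deleting the cluster even after a failure keeps one broken run from poisoning the next; the function still returns the original failure code so the CI job is marked red.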

Predictable Failures

Unlike some local K8s tools, when Minikube breaks, it usually breaks in documented ways. There's a GitHub issue for almost every error you'll encounter, which beats debugging mysterious failures in production.

Resource Reality Check

The docs say 2GB RAM and 2 CPUs. That will work, but you'll hate life: on a 2GB machine, I've waited what felt like forever for it to start.

What you actually need:

- 4+ CPUs - Less than this and everything takes forever

- 4-8GB RAM - Tried 2GB once, pods kept getting killed

- 30GB+ disk space - Images pile up fast

They say you can run 100+ pods. Sure, if your definition of "running" is pretty loose. In my experience, things get slow at around 20 pods doing real work.
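
One way to ask for those resources up front is sketched below. The --cpus, --memory, and --disk-size flags belong to minikube start; the function name start_sized_cluster and its defaults (taken from the list above) are only illustrative.

```shell
# Start Minikube with explicit resources instead of the defaults.
# Arguments: CPU count, memory in MB, disk size (all optional).
start_sized_cluster() {
  local cpus="${1:-4}" memory_mb="${2:-8192}" disk="${3:-30g}"
  minikube start --cpus="$cpus" --memory="$memory_mb" --disk-size="$disk"
}
```

For example, start_sized_cluster 6 12288 40g asks for 6 CPUs, 12GB of RAM, and a 40GB disk. These sizes are generally fixed when the cluster is created, so expect to run minikube delete first if you want to change them on an existing cluster.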

Common Ways Minikube Breaks

VirtualBox dies every macOS update. Ventura broke VirtualBox 6.x for months. Spent a whole Friday trying to demo something, turns out Tuesday's update killed the kernel extensions again.

Docker driver randomly stops working. Trying to show a coworker something, minikube start just sits there. Docker Desktop updated overnight and disabled WSL2 integration. Wasted hours on that.

Forgetting minikube tunnel. Deploy a LoadBalancer service, everything looks good, spend forever debugging networking. Then realize tunnel isn't running. Every damn time.
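
A quick check can save the debugging session. The sketch below lists LoadBalancer services whose external IP is still pending, which is the usual symptom of a missing tunnel. It assumes the default column layout of kubectl get services with --all-namespaces; pending_loadbalancers is a made-up helper name.

```shell
# Print namespace/name for every LoadBalancer service without an external IP.
# Columns with --all-namespaces: NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP ...
pending_loadbalancers() {
  kubectl get services --all-namespaces --no-headers \
    | awk '$3 == "LoadBalancer" && $5 == "<pending>" { print $1 "/" $2 }'
}
```

If this prints anything, run minikube tunnel in a separate terminal and leave it open; it stays in the foreground and may ask for elevated privileges.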

Running out of space mid-demo. "No space left on device" while people are watching your screen. Docker images filled up the default 20GB. Now I run docker system prune regularly.
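
If the space is being eaten inside the Minikube node rather than on your host, something like the sketch below may help. It assumes the node uses the Docker container runtime and that /var is where image data lives, which may not match your setup; free_minikube_disk is an illustrative name.

```shell
# Check disk usage inside the Minikube node, then prune unused Docker data there.
free_minikube_disk() {
  # Show how full the node's /var filesystem is.
  minikube ssh -- df -h /var
  # Remove stopped containers, unused networks, and ALL unused images.
  minikube ssh -- docker system prune --all --force
}
```

Be aware that --all removes every image not used by a running container, so the next deployment will pull its images again.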

Recent versions get flaky after a few days if you leave them running. I suspect a CoreDNS memory leak, but I'm not sure. When kubectl starts timing out, just restart Minikube.
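
That restart habit can be turned into a small check, sketched here. The 10-second timeout is an arbitrary choice and restart_if_unresponsive is a hypothetical helper, not a minikube feature.

```shell
# Restart Minikube only when the API server stops answering.
restart_if_unresponsive() {
  if kubectl get nodes --request-timeout=10s >/dev/null 2>&1; then
    echo "cluster is responding"
  else
    echo "cluster is unresponsive, restarting minikube"
    minikube stop
    minikube start
  fi
}
```

This treats any kubectl failure as a reason to restart, which is blunt but matches the "turn it off and on again" fix described above.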

The troubleshooting guide is actually pretty good - bookmark it because you'll need it.