Look, I'll be straight with you: I was skeptical when they first announced this AI chatbot thing. Another vendor trying to jump on the AI bandwagon. But after a few months of using Grafana Assistant, I have to admit it solves real problems I run into every day.

The Shit It's Actually Good At

Writing PromQL when you can't remember the syntax. You know that feeling when you need label_replace or group_left but can't remember exactly how they work? Instead of googling for 10 minutes, you just ask "group these metrics by the first digit of the status code" and it spits out working PromQL. Dafydd Thomas from Grafana Labs uses it constantly for exactly this.
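If you want to sanity-check what comes back for that status-code request, it usually boils down to label_replace plus a sum by. Here's a rough Python model of what label_replace does to each series, so you can see why the query groups the way it does. The code and status_class label names and the sample values are made up, and this is a simplified model of the PromQL function, not Prometheus' actual implementation.

```python
import re
from collections import defaultdict

# Roughly the PromQL this models (label names are hypothetical):
#   sum by (status_class) (
#     label_replace(rate(http_requests_total[5m]),
#                   "status_class", "${1}xx", "code", "([0-9]).."))

def label_replace(labels, dst, replacement, src, regex):
    """Simplified model of PromQL label_replace() for one series' labels."""
    match = re.fullmatch(regex, labels.get(src, ""))  # PromQL anchors the regex
    if match is None:
        return dict(labels)  # no match: the series passes through unchanged
    out = dict(labels)
    value = match.expand(replacement)  # Python writes \g<1> where PromQL writes ${1}
    if value:
        out[dst] = value
    else:
        out.pop(dst, None)  # an empty result drops the label
    return out

def sum_by(series, label):
    """Sum sample values grouped by one label, like `sum by (label)`."""
    totals = defaultdict(float)
    for labels, value in series:
        totals[labels.get(label, "")] += value
    return dict(totals)

# Made-up per-second request rates, one entry per series.
per_second = [({"code": "200"}, 10.0), ({"code": "204"}, 2.0),
              ({"code": "503"}, 1.5), ({"code": "500"}, 0.5)]
relabeled = [(label_replace(labels, "status_class", r"\g<1>xx", "code", r"([0-9])..."[:-1]), v)
             for labels, v in per_second]
print(sum_by(relabeled, "status_class"))  # → {'2xx': 12.0, '5xx': 2.0}
```

One detail worth catching in generated queries: the replacement has to be ${1}xx, not $1xx. Prometheus uses Go's regexp expansion, where $1xx means "the group named 1xx", which doesn't exist, so you'd get an empty label.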

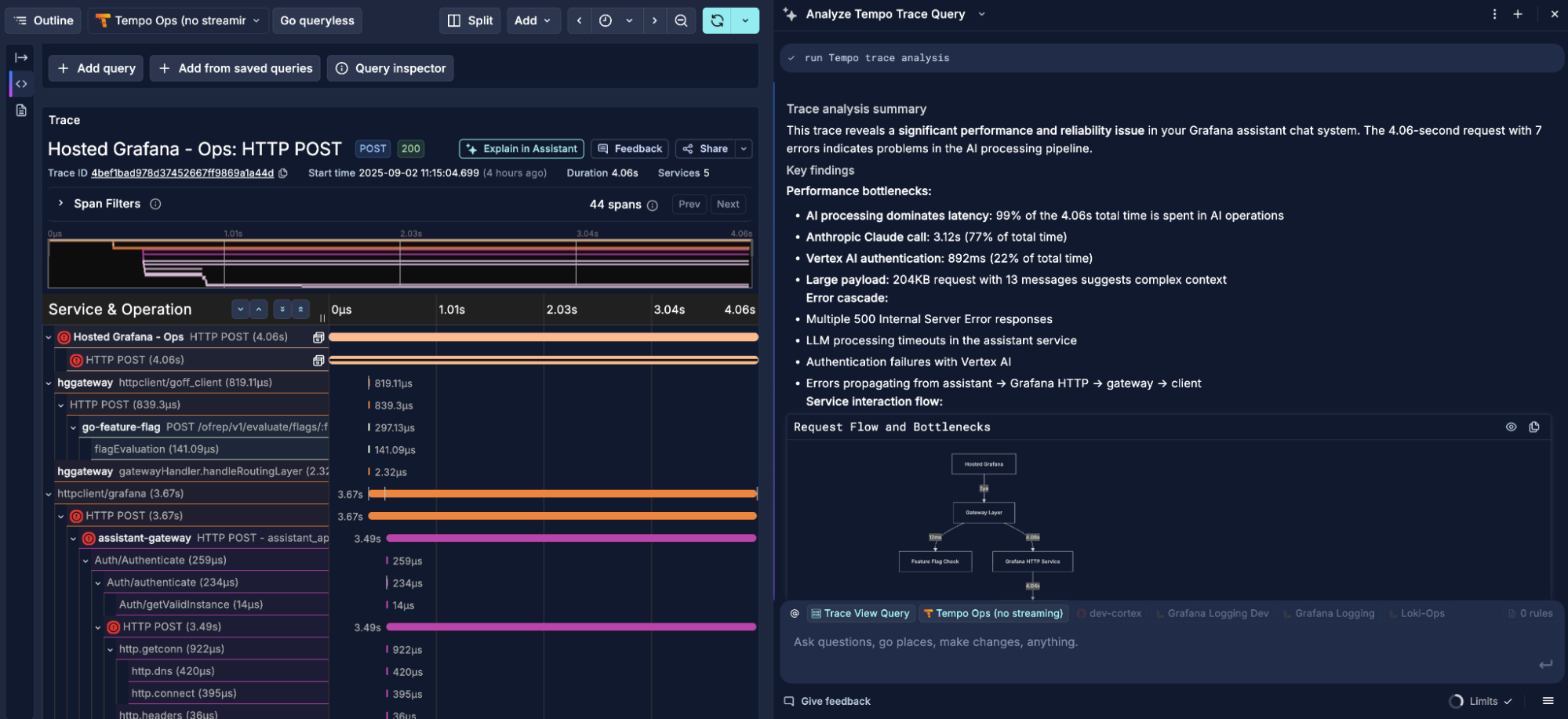

Explaining what the hell your traces mean. We had a latency spike last week, and I was staring at a distributed trace with like 50 spans trying to figure out where the time was going. I asked the Assistant to "analyze this trace" and it basically said "your database connection pool is getting hammered, here's the specific span where it's choking." Saved me from manually calculating span durations like an idiot.
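The manual version of that analysis is mostly computing each span's "self time": its own duration minus the time spent in its children, then looking for the biggest one. Here's a minimal Python sketch of that idea. The span dict shape and the span names are hypothetical, and it assumes children don't overlap (parallel children get clamped at zero), so treat it as a back-of-the-envelope check, not a trace analyzer.

```python
from collections import defaultdict

def self_times(spans):
    """Exclusive ("self") time per span: its duration minus its children's.

    Simplification: treats children as non-overlapping, and clamps at zero
    when parallel children add up to more than the parent's duration.
    """
    child_total = defaultdict(float)
    for span in spans:
        if span["parent_id"] is not None:
            child_total[span["parent_id"]] += span["duration_ms"]
    return {
        span["span_id"]: max(0.0, span["duration_ms"] - child_total[span["span_id"]])
        for span in spans
    }

def hottest_span(spans):
    """Return (name, self_time_ms) for the span with the most self time."""
    times = self_times(spans)
    by_id = {span["span_id"]: span for span in spans}
    worst = max(times, key=times.get)
    return by_id[worst]["name"], times[worst]

# Hypothetical trace: a checkout request stuck waiting on the DB pool.
trace = [
    {"span_id": "a", "parent_id": None, "name": "GET /checkout", "duration_ms": 500.0},
    {"span_id": "b", "parent_id": "a", "name": "auth", "duration_ms": 20.0},
    {"span_id": "c", "parent_id": "a", "name": "db.pool.acquire", "duration_ms": 400.0},
    {"span_id": "d", "parent_id": "a", "name": "db.query", "duration_ms": 30.0},
]
print(hottest_span(trace))  # → ('db.pool.acquire', 400.0)
```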

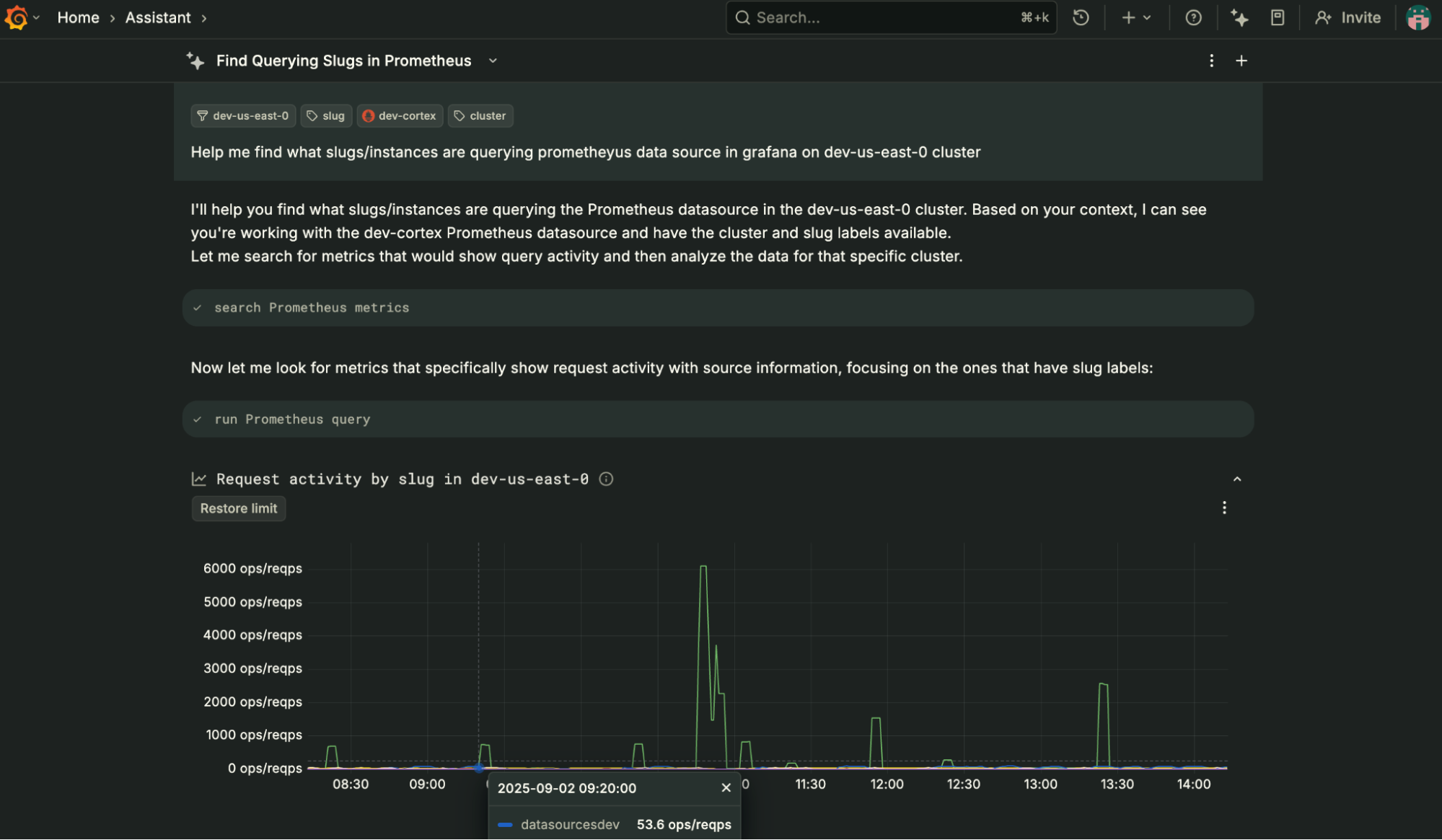

Finding data when you know it exists but forgot the labels. Sarah Zinger mentioned she needed to find customers in a specific region running a certain Grafana version but couldn't remember the exact LogQL query. The Assistant figured out the right LogQL in seconds, instead of her burning 30 minutes trial-and-erroring through label names.
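Most of a query like that starts with a LogQL stream selector, the {label="value"} part that picks which log streams to read. Below is a small Python helper that assembles one from label/value pairs, which is handy when you're checking or templating what the Assistant gave you. The region and grafana_version label names are hypothetical (yours depend on how your logs are shipped to Loki), and the helper only covers exact-match = matchers, not !=, =~, !~ or the pipeline stages LogQL also supports.

```python
def logql_selector(matchers):
    """Build a LogQL stream selector like {label="value", ...}.

    Only equality matchers; values are escaped for a double-quoted string.
    Labels are sorted so the output is stable.
    """
    def quote(value):
        escaped = value.replace("\\", "\\\\").replace('"', '\\"')
        return f'"{escaped}"'

    return "{" + ", ".join(f"{name}={quote(value)}"
                           for name, value in sorted(matchers.items())) + "}"

# Hypothetical label names and values.
print(logql_selector({"region": "eu-west", "grafana_version": "10.2.0"}))
# → {grafana_version="10.2.0", region="eu-west"}
```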

Real-World Query Generation

When you're staring at a dashboard trying to remember PromQL syntax, this is where the Assistant shines. You type something like "show HTTP errors by service" and get back working PromQL you can use right away.
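For "show HTTP errors by service", a typical answer looks like the query string below. If you want to test a generated query outside Grafana, you can hit Prometheus' instant-query endpoint, /api/v1/query, directly. This sketch only builds the request URL; the service and code label names and the localhost:9090 address are assumptions about your setup.

```python
from urllib.parse import urlencode

# Hypothetical metric and labels: http_requests_total with `service` and `code`.
ERRORS_BY_SERVICE = 'sum by (service) (rate(http_requests_total{code=~"5.."}[5m]))'

def instant_query_url(base_url, promql):
    """GET URL for Prometheus' instant-query endpoint, /api/v1/query."""
    return base_url.rstrip("/") + "/api/v1/query?" + urlencode({"query": promql})

url = instant_query_url("http://localhost:9090/", ERRORS_BY_SERVICE)
print(url)
# Against a live server, fetch it with e.g. urllib.request.urlopen(url).
```

URL-encoding the query matters here: braces, quotes and the =~ operator would otherwise mangle the request.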

When It Gets Confused

Complex multi-step correlations. If you're trying to do something really fancy, like correlating network errors with specific Kubernetes node restarts during deployment windows, it sometimes generates queries that look right but miss edge cases. You still need to understand what you're actually monitoring.

Brand new features or your weird custom shit. The model doesn't know about the latest Grafana features or your janky custom exporters. Just last week it suggested absent_over_time(), which isn't available on every Prometheus version (it was only added in 2.16), and I wasted 20 minutes figuring out why my query kept failing with a cryptic parse error.

Debugging the AI's own mistakes. Sometimes it generates syntactically valid PromQL that's logically wrong for what you asked. It'll give you rate() (a per-second average) when you actually wanted increase() (the total over the window), and you have to catch that yourself. Worse, it once generated a query that looked perfect but was missing the [5m] range selector, so I got this cryptic error: invalid parameter 'query': 1:1: parse error: unexpected identifier "http_requests_total" and spent forever figuring out what was wrong.
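The rate() vs increase() mixup is easy to miss because both take the same range vector, and per the Prometheus docs increase() is just rate() multiplied by the window length in seconds. Here's a simplified Python model over raw counter samples that shows the relationship. It handles counter resets the way Prometheus does (a drop means the counter restarted from zero) but deliberately skips Prometheus' extrapolation to the window edges, so real query results will differ a bit. The sample data is made up.

```python
def counter_increase(samples):
    """Total increase of a counter over (timestamp_s, value) samples.

    A drop in value is treated as a counter reset (restart from zero).
    No extrapolation to window boundaries, unlike Prometheus' increase().
    """
    total = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        total += cur if cur < prev else cur - prev
    return total

def counter_rate(samples):
    """Per-second average rate: the increase divided by the sampled time span."""
    span_s = samples[-1][0] - samples[0][0]
    return counter_increase(samples) / span_s

# Made-up samples scraped every 60s, with a counter reset at t=120.
samples = [(0, 100.0), (60, 160.0), (120, 40.0), (180, 100.0)]
print(counter_increase(samples))        # → 160.0  (what increase() answers)
print(round(counter_rate(samples), 3))  # → 0.889  (what rate() answers)
```

Same data, very different numbers on a panel: a per-second rate under 1 versus 160 requests over three minutes. That's why a swapped function can look "plausible" and still be wrong.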

Real Problems It Solves


The biggest win is onboarding new people. Kevin Adams said he got productive way faster by asking the Assistant questions instead of reading generic docs for hours or bothering teammates every 5 minutes. That's actually huge for teams.

Dashboard maintenance becomes less tedious. Piotr Jamróz needed to update thresholds across multiple panels and just described the change instead of manually editing each one. The Assistant generated the bulk updates, which is pretty neat when you have 50+ panels to modify.
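Under the hood, that kind of bulk edit is a walk over the dashboard JSON model. The sketch below assumes the fieldConfig.defaults.thresholds.steps layout that current Grafana panels use, treats "the threshold" as the step with a given color (here "red"), and descends into collapsed rows, which keep their children in a nested panels list. The function name and the dashboard snippet are hypothetical, and panels that set thresholds through field overrides or use hex colors won't be caught.

```python
def set_threshold(dashboard, color, new_value):
    """Set every threshold step of the given color to new_value.

    Walks top-level panels plus panels nested inside collapsed rows.
    Mutates the dashboard dict in place; returns how many steps changed.
    """
    changed = 0
    stack = list(dashboard.get("panels", []))
    while stack:
        panel = stack.pop()
        stack.extend(panel.get("panels", []))  # collapsed rows nest their panels
        steps = (panel.get("fieldConfig", {}).get("defaults", {})
                 .get("thresholds", {}).get("steps", []))
        for step in steps:
            if step.get("color") == color:
                step["value"] = new_value
                changed += 1
    return changed

def red_at(value):
    """Hypothetical helper: a fieldConfig with a green base and a red step."""
    return {"fieldConfig": {"defaults": {"thresholds": {"mode": "absolute", "steps": [
        {"color": "green", "value": None}, {"color": "red", "value": value}]}}}}

dashboard = {"panels": [
    {"type": "timeseries", **red_at(80)},
    {"type": "stat", **red_at(80)},
    {"type": "row", "collapsed": True, "panels": [{"type": "gauge", **red_at(80)}]},
    {"type": "text"},
]}
print(set_threshold(dashboard, "red", 90))  # → 3
```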

The Security Angle

They claim your data doesn't get stored or used for training, which is good because we've all seen what happens when AI companies hoover up everything. Each conversation is supposedly isolated, and Grafana says it meets the usual compliance checkboxes (SOC 2 Type II, GDPR, etc.).

The Assistant only accesses your data through the permissions you already have, so it's not like the AI can see stuff you can't. Still, if you're paranoid about sending telemetry to an AI, you might want to stick to the open-source LLM plugin, where you control the AI provider.

Bottom Line

Is it perfect? Hell no. Does it hallucinate and generate broken queries sometimes? Yeah. But I use it multiple times a day instead of googling PromQL syntax or asking "how do I write this query" on Slack for the hundredth time.

The key thing is it's built into where you're already working instead of being another tool you have to context-switch to. When you're debugging at 3am trying to figure out why your API is slow, having an AI that knows your data sources right there beats opening 15 Stack Overflow tabs.