The biggest problem with AI coding assistants has always been context isolation. GitHub Copilot spits out decent code snippets, but it doesn't know shit about your database schema, can't check your Azure resources, and has no fucking clue about your current GitHub issues. Agent Mode with MCP (Model Context Protocol) support changes this fundamentally.

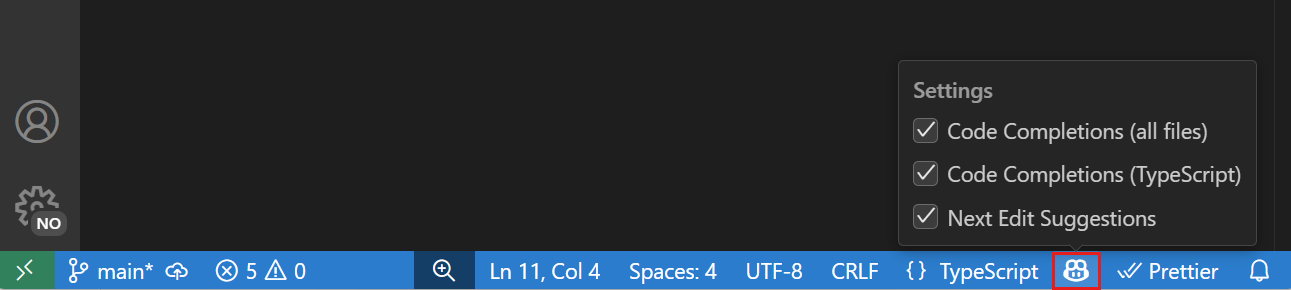

Agent Mode has been in stable VS Code since 1.99 (March 2025), and MCP support became generally available in 1.102 (June 2025). It isn't just another chat interface that pretends to understand your codebase - it actually connects AI to your dev tools instead of just barfing out generic code.

What Agent Mode Actually Does

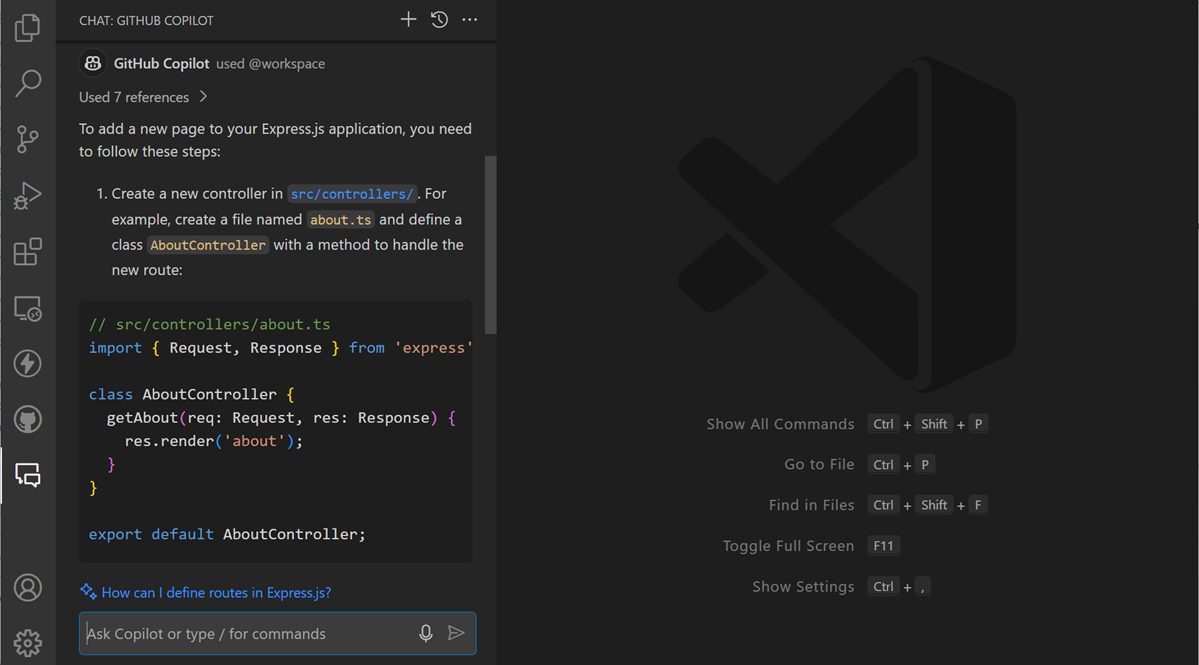

Instead of isolated code suggestions, Agent Mode turns your VS Code AI into a task delegation system. You can say "create a GitHub issue for the bug I found in the authentication flow" and the AI actually connects to GitHub, creates the issue, and reports back. Or "check if my Azure database has enough capacity for the weekend traffic spike" and it queries your actual Azure resources with real data.

This isn't theoretical bullshit. I've been testing this for two months, and the difference is fucking dramatic. Instead of asking "can you help me write a SQL query" and getting some generic Stack Overflow garbage, I say "show me which users haven't logged in for 30 days" and the AI connects to my actual database, sees the real schema, and returns actual results from production data.
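To make the "30 days" request concrete, here is a minimal sketch of the kind of query an agent might end up running. Everything about the data is an assumption for illustration: the `users` table, the `username` and `last_login` columns, and the in-memory SQLite database standing in for a real one. Your schema will differ, which is the whole reason the agent needs to see it.

```python
import sqlite3
from datetime import datetime, timedelta


def inactive_users(conn, now, days=30):
    """Return usernames whose last login is more than `days` days before `now`."""
    # ISO-8601 timestamps in one consistent format sort correctly as plain strings.
    cutoff = (now - timedelta(days=days)).isoformat()
    rows = conn.execute(
        "SELECT username FROM users WHERE last_login < ? ORDER BY username",
        (cutoff,),
    ).fetchall()
    return [name for (name,) in rows]


# Hypothetical schema and rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, last_login TEXT)")
now = datetime(2025, 8, 1)
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [
        ("alice", (now - timedelta(days=2)).isoformat()),
        ("bob", (now - timedelta(days=45)).isoformat()),
        ("carol", (now - timedelta(days=31)).isoformat()),
    ],
)
print(inactive_users(conn, now))  # ['bob', 'carol']
```

Note that users who have never logged in (a NULL `last_login`) are silently skipped by this query, which is exactly the kind of detail you still have to check yourself.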

MCP Servers: The Real Game Changer

Model Context Protocol (MCP) is what makes Agent Mode actually useful. Instead of one monolithic AI that knows nothing about your environment, MCP creates a standardized way for AI to connect to external tools and data sources.
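Under the hood, MCP messages are JSON-RPC 2.0, and the spec defines `tools/list` and `tools/call` methods for discovering and invoking tools. The sketch below only builds the message payloads so you can see their shape. The tool name `create_issue` and its arguments are hypothetical, and a real client also performs an initialization handshake and handles transport, both omitted here.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched up


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request object as a plain dict."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. The name and arguments are made up for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {
        "name": "create_issue",
        "arguments": {"title": "Login fails after token refresh"},
    },
)

print(json.dumps(call_tool, indent=2))
```

The point of the standardization is that the editor never needs GitHub-specific or Azure-specific code: every server answers the same two questions, "what can you do" and "do this".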

Think of MCP servers as specialized plugins that give your AI specific capabilities:

Azure MCP Server: Connect to your actual Azure resources. Query databases, check container status, review log analytics - with real data from your production environment.

GitHub MCP Server: Full GitHub integration beyond basic git. Create issues, manage PRs, check CI/CD status, analyze security alerts - all from within VS Code.

Microsoft Learn Docs MCP: Access to current Microsoft documentation. Finally, AI suggestions that know about the latest .NET features, Azure updates, and C# 13 changes.

Playwright MCP Server: Web testing automation. "Generate tests for the login flow" becomes executable Playwright code that actually works with your app.
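For a sense of what wiring these up looks like, VS Code reads MCP servers from a workspace `.vscode/mcp.json` file with a top-level `servers` object. The sketch below generates such a config. Treat the specifics as assumptions to verify against each server's own README before use: the endpoint URLs and the npm package name are written from memory, and the server keys (`github`, `microsoft-docs`, `playwright`) are arbitrary labels.

```python
import json


def build_mcp_config():
    """Return a dict shaped like a VS Code .vscode/mcp.json file."""
    return {
        "servers": {
            # Remote servers are reached over HTTP. URLs are assumptions; verify them.
            "github": {
                "type": "http",
                "url": "https://api.githubcopilot.com/mcp/",
            },
            "microsoft-docs": {
                "type": "http",
                "url": "https://learn.microsoft.com/api/mcp",
            },
            # Local servers are launched as a child process speaking over stdio.
            # The package name is an assumption; check the Playwright MCP README.
            "playwright": {
                "type": "stdio",
                "command": "npx",
                "args": ["@playwright/mcp@latest"],
            },
        }
    }


config = build_mcp_config()
print(json.dumps(config, indent=2))  # paste the output into .vscode/mcp.json
```

The Azure server is left out on purpose, since its launch command depends on how you authenticate, but it slots into the same `servers` object.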

Real-World Agent Mode Workflows That Actually Work

Database-driven development: "Show me all failed payment transactions from the last hour and create GitHub issues for any new error patterns." The AI queries your production database through the Azure MCP server, analyzes the results, and automatically creates GitHub issues with relevant error details and stack traces.

Infrastructure debugging: "Check if our staging environment has the same configuration as production for the authentication service." Agent Mode compares actual Azure configurations, highlights differences, and suggests fixes.

Documentation-driven coding: "Build a new API endpoint for user profile updates following our team's standards." The AI references your team's documented patterns, checks your current database schema through MCP, and generates code that actually fits your existing architecture.

Automated testing workflows: "Generate Playwright tests for all the user flows mentioned in our recent GitHub issues tagged 'frontend'." The AI pulls real issues from GitHub, analyzes the described workflows, and creates executable tests.

This is the first time AI coding assistance feels like it understands context instead of just pattern matching against training data.

The Productivity Numbers Don't Add Up

GitHub's own research claimed developers finished a coding task 55% faster with Copilot, but I've seen mixed results in practice. Some teams love it, others say it slows them down with extra review time and debugging overhead.

The problem with productivity metrics is that they measure speed of initial code generation, not total software development lifecycle. If Copilot helps you write code 50% faster but introduces subtle bugs that take 3x longer to debug, your net productivity actually decreased.
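The arithmetic is easy to check. The sketch below reads "50% faster" as a 1.5x writing speed and "3x longer" as tripled debugging time. The hour figures are invented for illustration, not measurements.

```python
def total_hours(write_hours, debug_hours, write_speedup=1.0, debug_multiplier=1.0):
    """Total time for a task: writing gets faster, debugging gets slower."""
    return write_hours / write_speedup + debug_hours * debug_multiplier


# Hypothetical task: 12 hours of writing, 4 hours of debugging without AI.
baseline = total_hours(12, 4)
assisted = total_hours(12, 4, write_speedup=1.5, debug_multiplier=3.0)
print(baseline, assisted)  # 16.0 20.0

# Same multipliers, but only 2 hours of baseline debugging: break-even.
print(total_hours(12, 2), total_hours(12, 2, 1.5, 3.0))  # 14.0 14.0
```

Under these multipliers the AI-assisted path loses whenever baseline debugging is more than one sixth of baseline writing time. For code that is hard to debug in the first place, that threshold is easy to cross.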

Real-world productivity blockers:

Code review overhead increases because reviewers need to verify AI-generated code more carefully. When you write code manually, reviewers can assume basic competence. When AI writes code, reviewers need to check for logical errors, security issues, and architectural mismatches that humans typically avoid automatically.

Technical debt accumulates faster when developers accept AI suggestions without fully understanding them. Copy-pasted code from Stack Overflow was bad enough; now we have AI-generated code that developers didn't write, don't understand, and can't easily modify later.

Debugging becomes harder when the code you're debugging wasn't written by you or anyone else on your team. AI-generated code often uses patterns that work but aren't idiomatic for your codebase, making maintenance more difficult.

The Cost Reality Check

GitHub Copilot costs $10/month for individuals and $19/month per user for businesses. For our 10-person team, we're paying $190/month. Sounds reasonable until you realize half the team barely uses it.

ROI calculation depends on what you actually measure.

Yeah, the ROI math looks pretty on a PowerPoint slide. Whether it works out in practice? Ask me in a year when I've burned through another laptop upgrade because VS Code is eating 2GB of RAM. The calculations assume Copilot saves 30 minutes per day, which is fucking optimistic based on what I've seen.

But even if those savings are real, there are hidden costs nobody talks about:

Increased code review time: Reviewers spend more time validating AI-generated code, reducing the net time savings.

Training and learning curve: Developers need to learn how to prompt AI effectively and recognize when suggestions are wrong.

Infrastructure overhead: AI suggestions require compute resources, network bandwidth, and increase VS Code's memory usage.

Technical debt interest: AI-generated code that's "good enough" to ship but suboptimal for long-term maintenance creates compound costs over time.
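Here is a rough way to put numbers on this. The seat price, team size, "half the team barely uses it", and the 30-minutes-per-day figure come from above. The $60 hourly rate, 21 workdays per month, and the review-overhead minutes are hypothetical placeholders, so plug in your own.

```python
def monthly_seat_cost(seats, price_per_seat=19.0):
    """Total subscription bill at the $19/user/month business price."""
    return seats * price_per_seat


def monthly_net_value(active_users, minutes_saved_per_day, overhead_minutes_per_day,
                      hourly_rate, workdays_per_month=21):
    """Dollar value of time saved, minus time lost to review and other overhead."""
    net_minutes = minutes_saved_per_day - overhead_minutes_per_day
    hours = active_users * net_minutes * workdays_per_month / 60
    return hours * hourly_rate


seats, active_users = 10, 5  # half the team barely uses it
cost = monthly_seat_cost(seats)
print(cost, cost / active_users)  # 190.0 38.0 (effective cost per active user)

# The slide-deck version: 30 minutes saved per day, no hidden costs.
print(monthly_net_value(active_users, 30, 0, hourly_rate=60))   # 3150.0
# With 20 minutes per day of extra review and validation.
print(monthly_net_value(active_users, 30, 20, hourly_rate=60))  # 1050.0
# With overhead exceeding the savings.
print(monthly_net_value(active_users, 30, 35, hourly_rate=60))  # -525.0
```

The subscription fee is small next to developer time, so the result swings almost entirely on the net minutes saved. That is the number nobody measures.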

The GPT-5 Integration Reality

VS Code 1.103 (July 2025) added GPT-5 support for GitHub Copilot users, along with chat checkpoints, improved model selection, and enhanced AI chat features.

GPT-5 improvements over GPT-4:

- Better code reasoning and context understanding

- More accurate suggestions for complex programming tasks

- Improved support for newer programming languages and frameworks

- Enhanced ability to work with large codebases

But fundamental limitations remain:

- Still doesn't understand your specific business context

- Can't access external systems or APIs to verify suggestions on its own; that only happens through MCP servers you've configured

- Doesn't know your team's coding standards or architectural decisions

- Updates to the model can change behavior unpredictably

Chat features and MCP servers add new interaction patterns, but also new ways for AI assistance to fail. The AI chat is great for explaining code concepts but terrible at understanding project-specific requirements or debugging environment-specific issues.

Performance Problems Nobody Mentions

AI features make VS Code slower and more resource-intensive. GitHub issues document users experiencing "painfully slow" response times, with Copilot taking 10+ seconds to generate suggestions.

Why AI features slow down VS Code:

Network latency: Every suggestion requires a round-trip to Microsoft's AI servers. Copilot is unusably slow on hotel WiFi, and when GitHub's servers take a shit (which happens more often than Microsoft admits), you're stuck waiting 10+ seconds for suggestions that never come.

Memory usage: AI extensions make VS Code feel sluggish on my 2019 MacBook. Activity Monitor shows VS Code using 200-500MB more RAM with Copilot enabled. That might not sound like much, but when you're already running Docker, Slack, and Chrome with 47 tabs, every MB matters.

CPU overhead: Processing AI suggestions pegs one CPU core constantly. My laptop fan runs more often, battery life is shorter, and typing in large files gets laggy.

Extension conflicts: I had to disable 3 other extensions because they interfered with Copilot suggestions. Vim mode, auto-formatting, and bracket colorization all started misbehaving once Copilot was enabled.

I've seen developers disable Copilot entirely because it turned their development environment into a sluggish mess. One guy on our team went back to manual coding after Copilot made his 2018 laptop unusable.

The Team Management Nightmare

Here's what nobody tells you: when half your team uses Copilot and half doesn't, everything becomes a mess.

I've watched teams where code reviews turned into debates about whether AI-generated functions were "good enough." Junior developers couldn't code without AI assistance. Senior developers refused to touch AI-generated code because they didn't trust it.

The policy questions that will bite you:

Which AI tools are allowed? We started with just GitHub Copilot, then people installed Continue, then someone started using ChatGPT for code reviews. Now we have three different AI tools generating three different coding styles, and nobody knows what the hell we're supposed to be using.

How do you identify AI code? We tried requiring `// AI-generated` comments. Lasted about 3 weeks before everyone ignored it. Code reviews became guessing games: "Did the AI write this, or does Sarah just have weird naming conventions now?"
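If you want to at least track how much code carries the tag, a script like the one below can report it. This is a sketch, not what we ran: the marker pattern and the file names are made up for illustration, and it obviously can't see AI code that nobody tagged, which was our actual problem.

```python
import re

# Matches "// AI-generated" with optional whitespace, case-insensitively.
MARKER = re.compile(r"//\s*AI-generated\b", re.IGNORECASE)


def count_marked_lines(source: str) -> int:
    """Count lines in a source string that carry the AI-generated marker."""
    return sum(1 for line in source.splitlines() if MARKER.search(line))


def marker_report(files: dict[str, str]) -> dict[str, int]:
    """Map each file path to its marker count, skipping files with none."""
    return {path: n for path, text in files.items() if (n := count_marked_lines(text))}


# Hypothetical file contents keyed by path.
files = {
    "auth.js": "// AI-generated\nfunction login() {}\n",
    "util.js": "function add(a, b) { return a + b; }\n",
    "pay.js": "const x = 1; //ai-generated\n// AI-generated helper\n",
}
print(marker_report(files))  # {'auth.js': 1, 'pay.js': 2}
```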

Who's responsible when AI code breaks prod? This isn't theoretical. A memory leak in AI-generated code took down prod for 2 hours, and we spent a week arguing about whose fault it was. I also watched a junior dev accept every AI suggestion for 6 months; when Copilot went down, they couldn't write a for loop.

Most teams haven't thought through these questions because they're too busy dealing with the immediate chaos.

The Agent Mode Reality Check: Actually Useful This Time

Agent Mode with MCP servers is the first AI coding assistant that feels like it understands your actual development environment instead of just pattern matching against training data.

What Agent Mode changes:

- Context awareness: AI knows your real database schema, current GitHub issues, actual Azure configurations

- Task delegation: "Create a GitHub issue for this bug" actually creates the issue instead of generating example code

- Environment integration: No more alt-tabbing between VS Code, browser, Azure portal, GitHub

- Data-driven decisions: "Which users are affected by this bug?" gets real query results, not generic examples

What still sucks:

- Setup complexity: Getting all MCP servers configured and connected takes time

- Security concerns: AI now has real access to real systems with real consequences

- Resource usage: More memory, more network, more battery drain

- Vendor dependencies: Works best with Microsoft's ecosystem

The honest assessment after 4 months: Agent Mode is the first AI coding feature that actually saves time instead of just changing how you spend time. The context switching reduction alone makes it worth the setup overhead.

But it's not magic. You still need to understand your systems, validate AI suggestions, and think through the logic. The difference is that now your AI assistant has access to the same information you do, which makes the collaboration actually useful instead of frustrating.

Bottom line: If you're already using GitHub Copilot and work with Azure/GitHub regularly, Agent Mode with MCP servers is a legitimate productivity upgrade. If you're not using AI coding assistants yet, this is a much better entry point than traditional Copilot ever was.