Qodo used to be called CodiumAI until it rebranded in 2024, reportedly in part because people kept mixing it up with the similarly named Codeium. It's an AI coding tool, but instead of just throwing code completions at you like every other tool, it focuses on generating tests and reviewing code.

Look, I'll be straight with you - I was skeptical as hell when I first tried it. Another AI tool promising to revolutionize development? Please. But after using it for a few months, it's actually not terrible for test generation.

While GitHub Copilot suggests your next line of code, Qodo indexes your project first (files, dependencies, patterns) and then generates tests that reflect how your codebase is actually put together. The company raised a $40M Series A in 2024, so it has the runway to keep improving the product.

The Three Main Tools They Built

Qodo Gen - the IDE extension everyone talks about, available for VS Code and JetBrains. You highlight some code and it generates unit tests. What makes it different is that it reads your project structure and existing test patterns and tries to match your coding style. I've seen it catch edge cases I would have missed, but it also sometimes generates overly verbose tests.

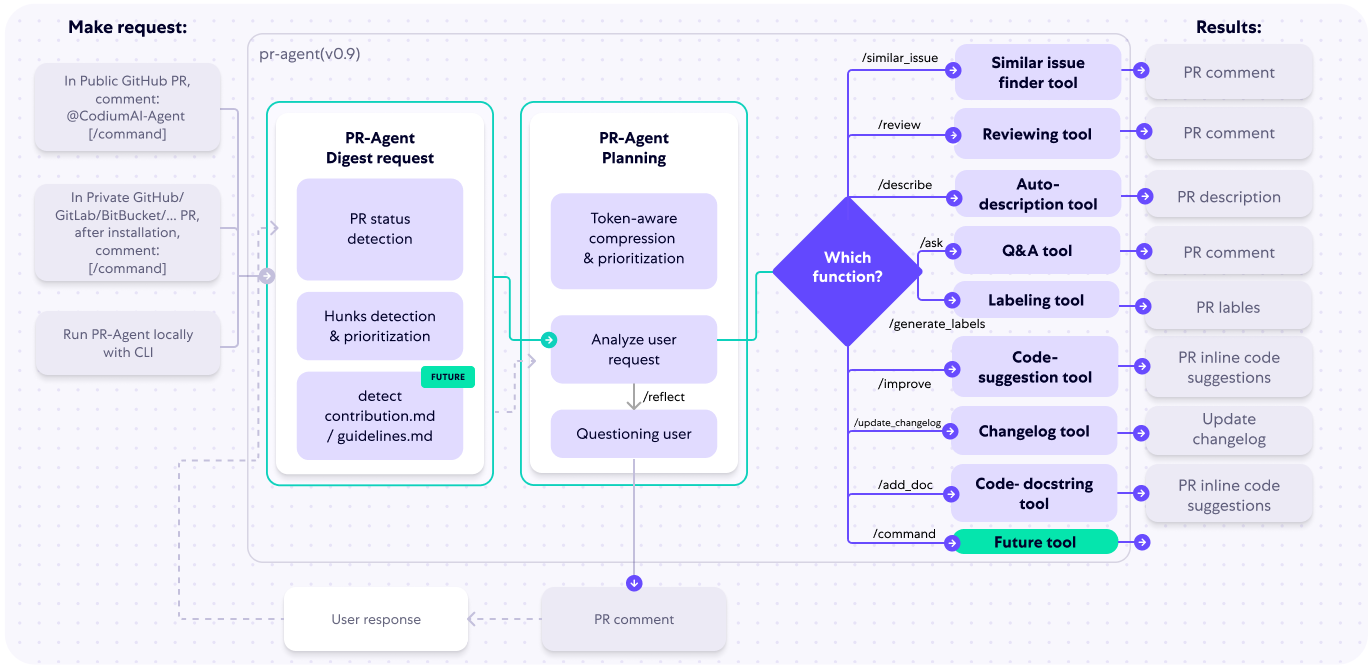

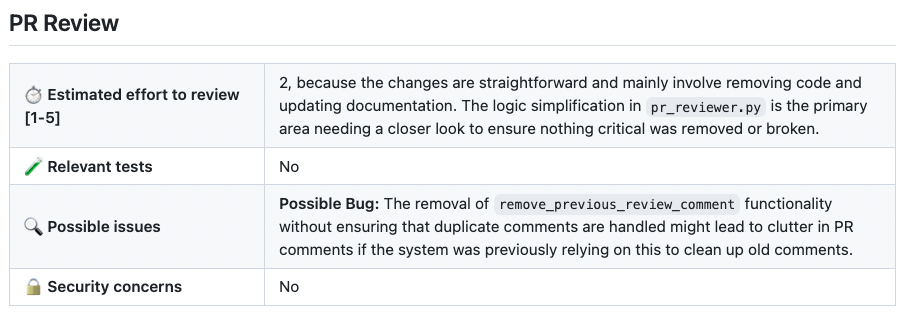

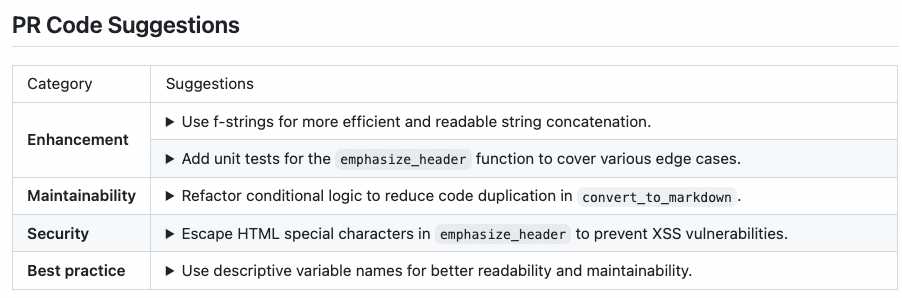
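To make "catching edge cases" concrete, here is a hand-written sketch of the kind of test suite such tools aim for. It is not actual Qodo Gen output, and `parse_port` is a hypothetical function invented for the example. The point is the spread of cases: one happy path, then whitespace, boundaries, out-of-range values and garbage input.

```python
import unittest


def parse_port(value: str) -> int:
    """Hypothetical function under test: parse a TCP port number from a string."""
    port = int(value.strip())  # int() raises ValueError for non-numeric input
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return port


class TestParsePort(unittest.TestCase):
    # Happy path: the case most people write first.
    def test_valid_port(self):
        self.assertEqual(parse_port("8080"), 8080)

    # Edge cases of the sort that are easy to forget.
    def test_surrounding_whitespace_is_ignored(self):
        self.assertEqual(parse_port(" 443\n"), 443)

    def test_boundaries(self):
        self.assertEqual(parse_port("1"), 1)
        self.assertEqual(parse_port("65535"), 65535)

    def test_zero_and_overflow_rejected(self):
        for bad in ("0", "65536", "-1"):
            with self.assertRaises(ValueError):
                parse_port(bad)

    def test_non_numeric_rejected(self):
        with self.assertRaises(ValueError):
            parse_port("http")
```

Saved as `test_parse_port.py`, this runs with `python -m unittest test_parse_port`. The "overly verbose" complaint usually shows up as one near-identical test method per value where a single loop like the one in `test_zero_and_overflow_rejected` would do.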

Qodo Merge - PR review automation that plugs into GitHub and actually provides useful feedback instead of just nitpicking whitespace. Setup is straightforward if you don't hit any OAuth issues. I've had teams use this to catch potential bugs before they hit production, though you still need human reviewers for architecture decisions.

Qodo Command - their newer CLI tool for building custom AI agents. It's still in alpha, but the idea is that you can build your own AI workflows without being stuck in their UI. I haven't used it much myself since it's early days.

Why It's Actually Useful (Sometimes)

The context awareness is where it shines. Copilot's inline suggestions lean mostly on the file you're editing and a few open tabs, while Qodo indexes your entire repository: dependencies, patterns, naming conventions, everything. So when it generates tests, they follow your existing patterns instead of looking like generic tutorial examples.
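As a toy illustration of what "following your existing patterns" can involve, the sketch below scans test files with Python's `ast` module and reports whether a project mostly uses pytest-style test functions or `unittest.TestCase` classes. This is not how Qodo's indexer works (its internals aren't described here); `detect_test_style` is a hypothetical helper, and it takes a dict of path-to-source strings so it can be fed from a real repository walk or from memory.

```python
import ast
from collections import Counter


def detect_test_style(sources: dict[str, str]) -> str:
    """Return 'pytest', 'unittest', or 'unknown' depending on which test style dominates.

    `sources` maps file paths (forward slashes) to Python source text.
    """
    counts: Counter = Counter()
    for path, text in sources.items():
        filename = path.rsplit("/", 1)[-1]
        if not filename.startswith("test"):
            continue  # only inspect files that follow the test_*.py naming convention
        tree = ast.parse(text, filename=path)
        for node in tree.body:  # top-level definitions only
            if isinstance(node, ast.FunctionDef) and node.name.startswith("test"):
                counts["pytest"] += 1  # bare test function, pytest style
            elif isinstance(node, ast.ClassDef) and any(
                ast.unparse(base).endswith("TestCase") for base in node.bases
            ):
                counts["unittest"] += 1  # class deriving from (unittest.)TestCase
    if not counts:
        return "unknown"
    style, _ = counts.most_common(1)[0]
    return style


repo = {
    "tests/test_api.py": "def test_get():\n    assert True\n\ndef test_post():\n    assert True\n",
    "tests/test_models.py": "import unittest\nclass TestUser(unittest.TestCase):\n    def test_name(self):\n        pass\n",
    "src/app.py": "def test_helper():\n    pass\n",  # skipped: not a test file
}
print(detect_test_style(repo))  # pytest
```

A real tool would look at far more than this (fixtures, mocking libraries, assertion helpers, naming), but the principle is the same: infer the convention from the code you already have before generating anything new. `ast.unparse` needs Python 3.9 or later.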

It builds on the company's own AlphaCodium research, which showed that a test-driven, iterative flow raised GPT-4's pass@5 accuracy on the CodeContests benchmark from 19% to 44%. Instead of just prompting an LLM to write code in one shot, the flow generates tests first, then writes code and iterates on it until it passes them. Makes sense in theory, works decently in practice.

What It Actually Supports

Works with the usual languages - Python, TypeScript, JavaScript, Java, Go, C++, and a few others. You can use it through their VS Code extension, JetBrains plugins, or directly in GitHub via PR reviews.

Integrates with GitHub, GitLab, and Bitbucket without breaking your existing workflow, assuming you don't run into OAuth problems during setup. I've set it up on maybe a dozen repos and only had auth issues once.

Companies like Intel and Monday.com use it for automated code reviews, probably in part because it has SOC 2 certification and enterprise security features.

The free tier gives you 250 credits per month, but those burn through pretty quickly if you're actually using it. The Teams plan runs around $30 per developer per month for 2,500 credits, which is reasonable if you're generating a lot of tests.
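For budgeting, the quoted figures work out to roughly 1.2 cents per credit. The sketch below does that arithmetic using only the numbers above; it assumes credits are allotted per developer, and `credits_per_request` is a pure assumption, since how many credits a given action consumes depends on Qodo's current pricing rules and isn't stated here.

```python
# Figures quoted in this article; check Qodo's pricing page for current numbers.
FREE_CREDITS_PER_MONTH = 250
TEAMS_PRICE_PER_DEV = 30.0     # USD per developer per month (approximate)
TEAMS_CREDITS_PER_DEV = 2_500  # credits per developer per month


def cost_per_credit() -> float:
    """Effective USD price of one Teams-plan credit."""
    return TEAMS_PRICE_PER_DEV / TEAMS_CREDITS_PER_DEV


def team_budget(developers: int) -> tuple[float, int]:
    """Monthly cost and total credits, ASSUMING credits are allotted per developer."""
    return developers * TEAMS_PRICE_PER_DEV, developers * TEAMS_CREDITS_PER_DEV


def requests_per_month(credits: int, credits_per_request: int) -> int:
    """How many requests fit in an allowance.

    credits_per_request is an ASSUMPTION: the real cost of an action depends
    on Qodo's pricing rules and the model used.
    """
    return credits // credits_per_request


print(cost_per_credit())  # 0.012
print(team_budget(10))    # (300.0, 25000)
print(requests_per_month(FREE_CREDITS_PER_MONTH, credits_per_request=1))  # 250
```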