The official installation guide makes it look simple: pip install vectordb-bench[all] and you're done. That's bullshit.

The Dependency Hell You'll Actually Face

Here's what happened when I tried to set this up on three different machines last month:

Ubuntu 22.04 (Clean): Worked on the second try. The first install failed because of conflicting protobuf versions - apparently VectorDBBench wants protobuf 4.x but half the database clients in [all] want 3.x. Solution: install specific database clients one at a time instead of using [all].

    # Don't do this (it will break):
    pip install 'vectordb-bench[all]'

    # Do this instead (quotes keep zsh from globbing the brackets):
    pip install vectordb-bench
    pip install 'vectordb-bench[pinecone]'
    pip install 'vectordb-bench[qdrant]'

    # Test each one individually before adding the next
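If you'd rather script the one-at-a-time install than babysit it, here's a minimal sketch. It assumes the extras are named `pinecone` and `qdrant` as above, and it leans on `pip check`, which only reports incompatible requirements among installed packages. It won't catch runtime breakage, so treat a clean result as "probably fine", not proof.

```python
import subprocess
import sys


def pip_commands(extras, package="vectordb-bench"):
    """Return the pip invocations to run: base package first, then one per extra."""
    base = [sys.executable, "-m", "pip", "install"]
    commands = [base + [package]]
    commands += [base + [f"{package}[{extra}]"] for extra in extras]
    return commands


def install_one_at_a_time(extras):
    """Install each extra separately and stop at the first dependency conflict."""
    for command in pip_commands(extras):
        subprocess.run(command, check=True)
        # `pip check` exits non-zero when installed packages declare
        # requirements that the current environment does not satisfy.
        result = subprocess.run([sys.executable, "-m", "pip", "check"])
        if result.returncode != 0:
            raise SystemExit(f"Dependency conflict after: {' '.join(command)}")


# Preview the plan without installing anything.
for command in pip_commands(["pinecone", "qdrant"]):
    print(" ".join(command[1:]))
```

Calling `install_one_at_a_time(["pinecone", "qdrant"])` runs the plan for real. Because it stops at the first conflict, you know exactly which extra dragged in the bad pin.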

macOS Monterey (M1): Complete disaster. The pgvector client doesn't have ARM64 wheels for some versions. Spent 2 hours compiling PostgreSQL from source just to test one database. The GitHub issues are full of people hitting the same problems.

Docker (Recommended): Actually works reliably, which was a relief. The official Docker setup allocates 4GB of RAM, but the container immediately wants about 6GB, so I had to bump the limit to 16GB for anything useful. The container takes about 10 minutes to start because it downloads datasets on first run.
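For the memory bump, one option is to set the limit explicitly when starting the container. A rough sketch that just builds the command: the image name is a placeholder I made up, not a verified one, so substitute whatever the project actually publishes. The `--memory` flag itself is standard `docker run`.

```python
import shlex


def docker_run_command(image, memory_gb=16, name="vectordb-bench"):
    """Build a `docker run` command with an explicit memory limit."""
    return [
        "docker", "run", "--rm",
        "--name", name,
        "--memory", f"{memory_gb}g",  # hard memory limit for the container
        image,
    ]


# Placeholder image name (assumption); replace with the real image and tag.
IMAGE = "your-registry/vectordb-bench:your-tag"
print(shlex.join(docker_run_command(IMAGE)))
```

On Docker Desktop the container limit can't exceed the memory assigned to the Docker VM, so on a Mac you may need to raise that setting as well.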

The Version Hell Problem

VectorDBBench is moving fast - too fast. Version 1.0.8 came out recently but broke compatibility with some Weaviate configurations that worked in 1.0.6. Meanwhile, version 1.0.6 had a memory leak that killed long-running benchmarks.

You're basically choosing between "stable but leaky" or "fixed but might not work with your setup." Check the release notes before upgrading - the project doesn't follow semantic versioning at all, so even a patch release can break things.
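Whichever version you settle on, pin it (`pip install vectordb-bench==1.0.6`, say) and have your benchmark script refuse to run on anything else. A minimal sketch, using the version numbers from above purely as examples:

```python
from importlib.metadata import PackageNotFoundError, version


def check_pin(package, expected, installed=None):
    """Return (ok, message) comparing the installed version with the pinned one."""
    if installed is None:
        try:
            installed = version(package)
        except PackageNotFoundError:
            return False, f"{package} is not installed"
    if installed != expected:
        return False, f"{package} {installed} installed, but {expected} is pinned"
    return True, f"{package} {installed} matches the pin"


# "1.0.6" is only an example pin; use the version you actually validated.
ok, message = check_pin("vectordb-bench", "1.0.6")
print(message)
```

Running this at the top of a benchmark script means a silent upgrade shows up as an error message instead of as mysteriously different numbers.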

Configuration: More Complex Than They Admit

The YAML configuration looks clean in the docs. Reality: you'll spend hours tweaking database-specific parameters that aren't documented anywhere.

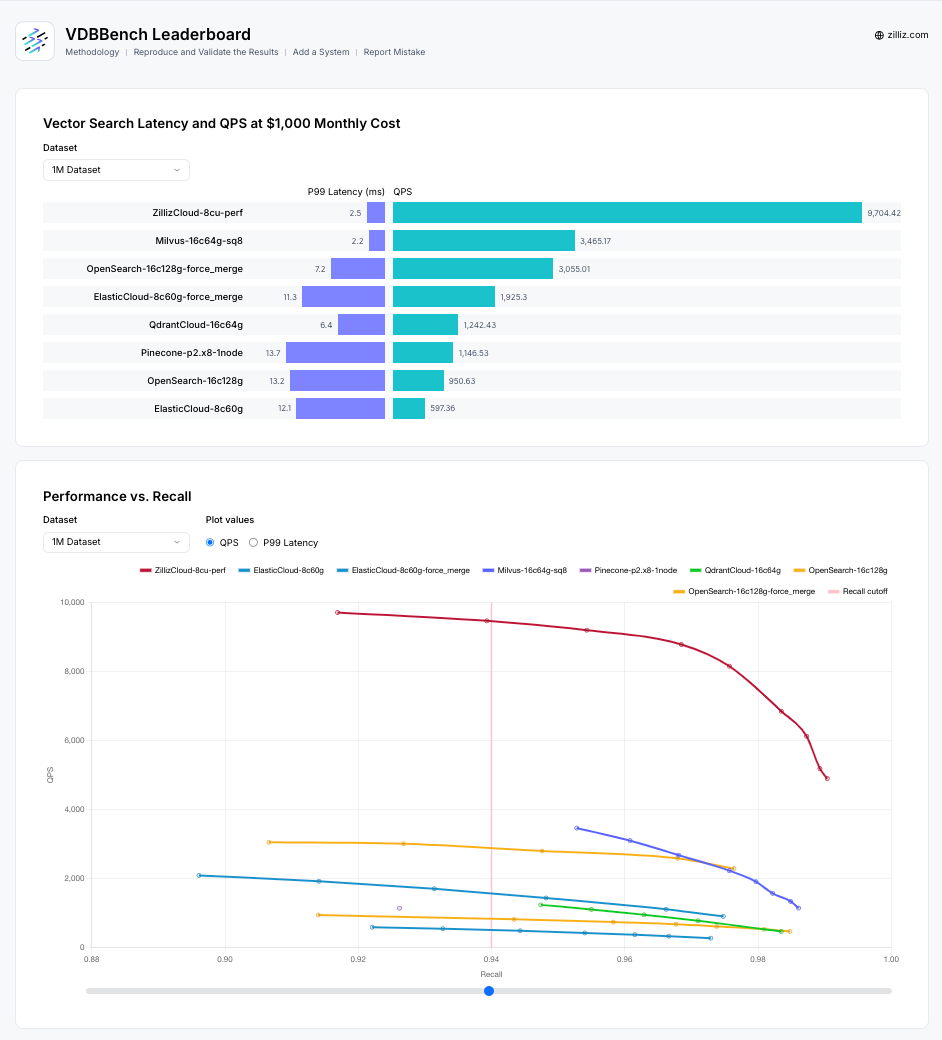

Example pain point: Milvus HNSW settings. The defaults in VectorDBBench give you terrible performance on anything over 1M vectors. You need M=64 and efConstruction=500 for decent results, but that's nowhere in their guides. Found this buried in a GitHub discussion after my benchmarks were 10x slower than expected.

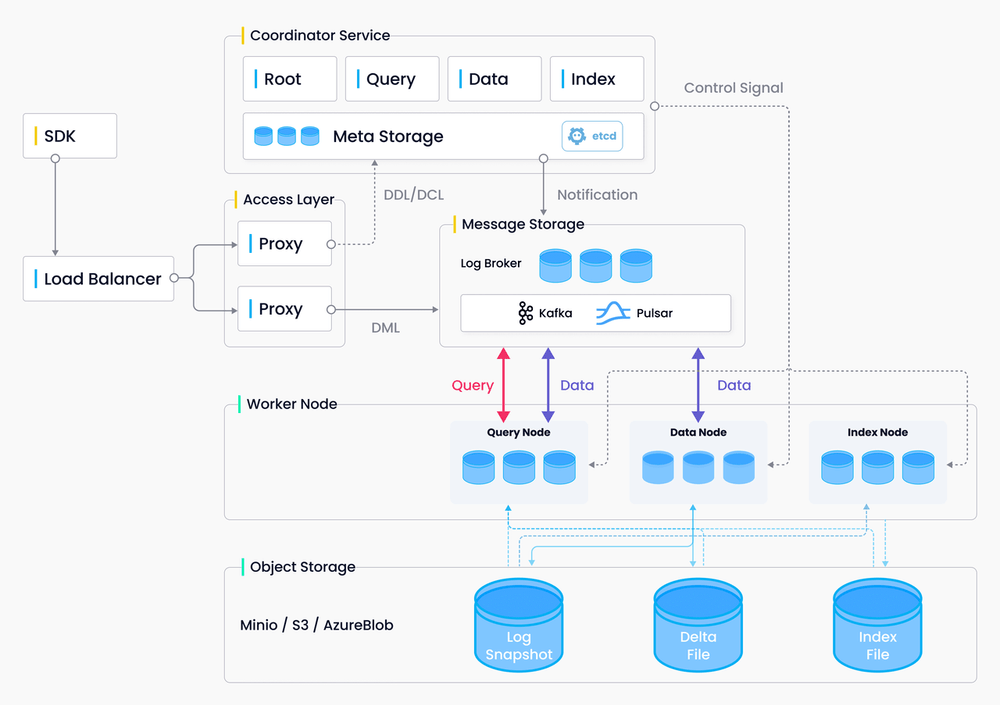

The official Milvus performance tuning guide explains the impact of these parameters, and you'll need to understand vector index types to configure VectorDBBench properly for your use case.
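For reference, this is roughly what those HNSW settings look like as index parameters in the dict shape pymilvus accepts. It's a sketch, not VectorDBBench's own config format: how the tool exposes these values isn't shown here, and the metric type and field name are my assumptions.

```python
def hnsw_index_params(m=64, ef_construction=500, metric_type="L2"):
    """Build HNSW index parameters in the dict shape pymilvus accepts."""
    return {
        "index_type": "HNSW",
        "metric_type": metric_type,  # assumption: L2; switch to "IP" if your data needs it
        "params": {"M": m, "efConstruction": ef_construction},
    }


index_params = hnsw_index_params()
print(index_params)

# With pymilvus installed and a Collection object in hand, this would be applied as:
#   collection.create_index(field_name="vector", index_params=index_params)
# where "vector" is a placeholder field name.
```

Higher `M` and `efConstruction` buy recall and query speed at the cost of memory and index build time, so expect builds to take noticeably longer than with the defaults.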

The web interface configuration is prettier than the CLI, but it hides important options and makes it harder to reproduce your exact setup later.
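If you do use the web interface, write down what you clicked. One way is to dump the settings and the environment into a JSON record next to your results. A minimal sketch: the settings keys and the package list are hypothetical, so record whatever you actually configured.

```python
import json
import platform
from importlib.metadata import PackageNotFoundError, version


def snapshot(settings, packages=("vectordb-bench", "pymilvus")):
    """Collect run settings plus environment details into one JSON-ready dict."""
    versions = {}
    for name in packages:
        try:
            versions[name] = version(name)
        except PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": versions,
        "settings": settings,
    }


# Hypothetical settings; copy in the values you entered in the UI.
record = snapshot({"db": "milvus", "index": "HNSW", "M": 64, "efConstruction": 500})
print(json.dumps(record, indent=2, sort_keys=True))
```

Save the output alongside each result file and you can tell months later which version and which parameters produced a given number.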