Look, you're not the first person to get stuck converting between frameworks. Your research team loves PyTorch because you can actually debug it - print statements work, stack traces make sense, and dynamic graphs don't fight you every step of the way. But now production wants TensorFlow because "it's enterprise ready" or whatever.

ONNX Export: When It Works, When It Doesn't

ONNX conversion works great for basic models. ResNets, VGG, standard transformers? Usually fine. But the moment you use anything slightly custom, you're in for a world of hurt.

What Breaks ONNX Export (Real Examples)

Grid Sampling Operations:

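```python
import torch
import torch.nn.functional as F

x = torch.randn(1, 3, 32, 32)            # N, C, H, W
grid = torch.rand(1, 32, 32, 2) * 2 - 1  # sampling coords in [-1, 1]

# This will kill your ONNX export (on ONNX opsets below 16, which lack GridSample)
out = F.grid_sample(x, grid, mode='bilinear', align_corners=False)
# Error: ONNX export failed: Unsupported operator 'aten::grid_sampler_2d'
```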

Found this out the hard way on a computer vision project. Our attention mechanism used grid sampling for spatial transformation, and ONNX just said "nope." Spent two days trying different workarounds before giving up.

Dynamic Control Flow:

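Any model with if statements based on input data will break ONNX export, just not always loudly. The default exporter traces the model with one example input, so a branch that depends on actual tensor values gets frozen to whichever path that input happened to take. Your for loops that depend on sequence length? Unrolled to whatever length the example had. Export can "succeed" with nothing but a TracerWarning and still give wrong answers on every other input.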
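To see the failure mode yourself, compare eager execution with a traced copy. This is a minimal sketch using torch.jit.trace, the same record-one-run approach the TorchScript-based torch.onnx.export uses; the Gate module is hypothetical. torch.jit.script, by contrast, compiles the if/else as real control flow, which is the usual route when the branch has to survive into the exported graph.

```python
import warnings

import torch


class Gate(torch.nn.Module):
    """Hypothetical module with a data-dependent branch."""

    def forward(self, x):
        if x.sum() > 0:       # branch depends on tensor *values*
            return x * 2
        return x - 100


model = Gate().eval()
example = torch.ones(3)       # sum > 0, so tracing records only the `x * 2` path

with warnings.catch_warnings():
    warnings.simplefilter("ignore")  # tracing emits a TracerWarning here
    traced = torch.jit.trace(model, example)

scripted = torch.jit.script(model)   # scripting keeps the if/else

neg = -torch.ones(3)          # sum < 0: should take the other branch
eager_out = model(neg)        # x - 100
traced_out = traced(neg)      # still x * 2: the branch was frozen at trace time
scripted_out = scripted(neg)  # x - 100, matches eager
```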

Custom Operators:

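Built a custom layer for your specific use case? Good luck getting ONNX to understand it. You'd need to register a symbolic function that maps your op onto ONNX nodes (torch.onnx.register_custom_op_symbolic), and then whatever consumes the ONNX file needs its own implementation of that op too. About as fun as writing assembly.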

Version Hell is Real

PyTorch 2.1 broke ONNX export for certain models and nobody documented it for weeks. Here's the compatibility chain that actually works as of August 2025:

- PyTorch 2.0.1 → ONNX 1.14.0 → TensorFlow 2.13.0

Stray from this path at your own risk. Pin your versions or you'll spend your weekend debugging why everything suddenly stopped working.
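Pinning is easier to enforce when the service refuses to start on a drifted environment. This is a minimal sketch using only the standard library's importlib.metadata; the distribution names (torch, onnx, tensorflow) are the usual PyPI names but are an assumption about how your environment installs them, and PINNED, installed_versions and find_version_drift are hypothetical names.

```python
from importlib import metadata

# The chain above, keyed by PyPI distribution name (an assumption about your install).
PINNED = {"torch": "2.0.1", "onnx": "1.14.0", "tensorflow": "2.13.0"}


def installed_versions(names):
    """Return {name: version string or None} for the given distributions."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


def find_version_drift(pinned, installed):
    """List human-readable mismatches between pinned and installed versions."""
    problems = []
    for name, want in pinned.items():
        have = installed.get(name)
        if have is None:
            problems.append(f"{name}: not installed (want {want})")
        elif have.split("+")[0] != want:  # ignore local tags such as '2.0.1+cu118'
            problems.append(f"{name}: {have} installed, want {want}")
    return problems
```

At startup you would call find_version_drift(PINNED, installed_versions(PINNED)) and exit with the joined messages if the list is non-empty.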

The Batch Normalization Nightmare

This one's a classic. Spent 3 days debugging why my 92% accurate PyTorch model became 67% accurate after TensorFlow conversion. Turns out batch norm parameters use different conventions between frameworks:

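- PyTorch momentum: weight on the new batch statistic, running = (1 - momentum) * running + momentum * batch (default 0.1)

- TensorFlow/Keras momentum: the same exponential moving average, but the weight sits on the old value, moving = momentum * moving + (1 - momentum) * batch (default 0.99)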

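The fix? Convert momentum values manually: tf_momentum = 1 - pytorch_momentum, so PyTorch's default 0.1 becomes 0.9. Check epsilon while you're at it: PyTorch defaults to 1e-5, Keras to 1e-3. And you MUST call model.eval() on your PyTorch model before export, or the running statistics won't transfer correctly.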
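Writing the two update rules side by side makes the 1 - momentum conversion concrete. This is a minimal pure-Python sketch on scalar statistics, with hypothetical function names. The defaults in the signatures mirror the frameworks' documented defaults (PyTorch momentum=0.1, eps=1e-5; Keras momentum=0.99, epsilon=1e-3), and bn_inference shows why the epsilon mismatch matters at inference time even once the running statistics transfer correctly.

```python
import math


def torch_bn_update(running, batch_stat, momentum=0.1):
    # PyTorch: momentum weights the NEW batch statistic.
    return (1 - momentum) * running + momentum * batch_stat


def keras_bn_update(moving, batch_stat, momentum=0.99):
    # Keras/TensorFlow: momentum weights the OLD moving value.
    return momentum * moving + (1 - momentum) * batch_stat


def keras_bn_kwargs_from_torch(momentum=0.1, eps=1e-5):
    """Hypothetical helper: PyTorch BatchNorm settings -> Keras BatchNormalization kwargs."""
    return {"momentum": 1 - momentum, "epsilon": eps}


def bn_inference(x, mean, var, gamma, beta, eps):
    # Eval-mode batch norm: identical formula in both frameworks, so eps must match too.
    return gamma * (x - mean) / math.sqrt(var + eps) + beta
```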

Memory Usage Doubles in Hybrid Setups

Running both frameworks in the same container? Expect your memory usage to roughly double. By default TensorFlow reserves most of the GPU memory it can see at startup, while PyTorch's caching allocator grows on demand, so the two fight over the same pool and you end up with memory fragmentation that'll kill your production server.

Learned this when our recommendation system brought down the entire inference cluster. Turns out 16GB wasn't enough when you're loading both PyTorch embeddings and TensorFlow serving components.
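If you do end up co-hosting both frameworks on a GPU, one mitigation is to stop TensorFlow from grabbing the whole device up front. This is a minimal sketch assuming a GPU deployment: TF_FORCE_GPU_ALLOW_GROWTH and PYTORCH_CUDA_ALLOC_CONF are environment variables read by TensorFlow and PyTorch respectively, but they only take effect if set before each framework initializes CUDA, and the max_split_size_mb:128 value is an illustrative guess, not a tuned setting. configure_hybrid_gpu_memory is a hypothetical helper. This reduces contention; it won't make two frameworks' worth of weights fit into memory they don't fit in.

```python
import os

# Illustrative values; tune for your workload.
HYBRID_GPU_ENV = {
    # Let TensorFlow allocate GPU memory on demand instead of reserving most of it at startup.
    "TF_FORCE_GPU_ALLOW_GROWTH": "true",
    # Stop PyTorch's caching allocator from splitting cached blocks larger than 128 MB.
    "PYTORCH_CUDA_ALLOC_CONF": "max_split_size_mb:128",
}


def configure_hybrid_gpu_memory(env=None):
    """Apply the settings without overriding values the deployment already set.

    Must run before tensorflow or torch initialize the GPU.
    """
    env = os.environ if env is None else env
    for key, value in HYBRID_GPU_ENV.items():
        env.setdefault(key, value)
    return env
```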

What Actually Works in Production

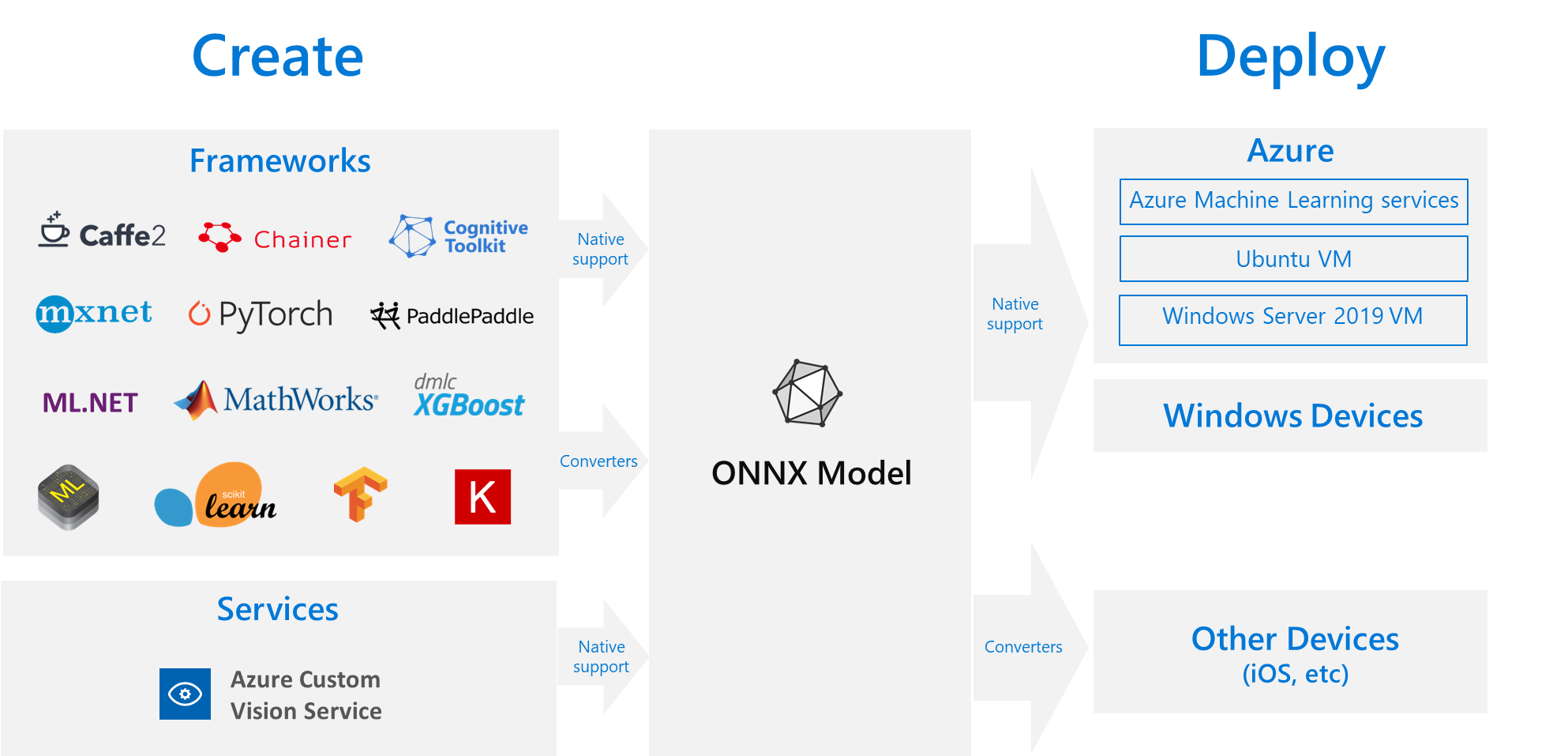

Option 1: ONNX Runtime

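Serving the exported ONNX file directly with ONNX Runtime usually works better than converting onward to the target framework: one conversion step instead of two, less debugging, more consistent performance. The catch is that when something does go wrong, debugging inside an ONNX graph is a nightmare - good luck figuring out why your accuracy dropped.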
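Since the accuracy drop is the hard part, it pays to check parity numerically before anything ships: run the same batch through the PyTorch model and the ONNX Runtime session, then compare outputs. This is a minimal sketch; InferenceSession(...).run(None, feeds) is the standard ONNX Runtime Python call, while run_onnx and parity_report are hypothetical helpers and the 1e-4 tolerance is an assumption to adjust for your model.

```python
def run_onnx(model_path, feeds):
    """Run an exported model with ONNX Runtime; feeds maps input names to numpy arrays."""
    import onnxruntime as ort  # imported lazily so parity_report works without it

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    return session.run(None, feeds)  # list of outputs, in graph order


def parity_report(reference, candidate, atol=1e-4):
    """Compare two batches of logits (rows = samples); accepts lists or numpy arrays."""
    if len(reference) != len(candidate):
        raise ValueError("batch sizes differ")
    max_diff, top1_agree = 0.0, 0
    for ref_row, cand_row in zip(reference, candidate):
        row_diff = max(abs(float(r) - float(c)) for r, c in zip(ref_row, cand_row))
        max_diff = max(max_diff, row_diff)
        ref_top = max(range(len(ref_row)), key=lambda i: ref_row[i])
        cand_top = max(range(len(cand_row)), key=lambda i: cand_row[i])
        top1_agree += ref_top == cand_top
    rows = len(reference)
    return {
        "max_abs_diff": max_diff,
        "within_tol": max_diff <= atol,
        "top1_agreement": top1_agree / rows if rows else 1.0,
    }
```

Typical use: take torch_out = model(batch).detach().numpy() and onnx_out = run_onnx("model.onnx", {"input": batch.numpy()})[0], where the file name and input name are placeholders for whatever you passed to the exporter, then call parity_report(torch_out, onnx_out). A large max_abs_diff with high top-1 agreement points at numerics; low agreement points at a real conversion bug.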

Option 2: Manual Recreation

Painful but bulletproof. Takes 2-4 weeks for complex models, but at least you know it'll work exactly the same. Copy the architecture layer by layer, transfer weights manually, pray you got the parameter mapping right.
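Most of the parameter-mapping risk comes down to axis order. This is a minimal NumPy sketch, assuming Keras Dense and Conv2D as the targets: PyTorch stores a Linear weight as (out, in) and a Conv2d weight as (out, in, kH, kW), while Keras expects (in, out) and (kH, kW, in, out). TORCH_TO_KERAS_AXES and torch_weight_to_keras are hypothetical names, and grouped or depthwise convolutions need different handling that this does not cover.

```python
import numpy as np

# Axis permutations from PyTorch weight layout to Keras kernel layout.
TORCH_TO_KERAS_AXES = {
    "linear": (1, 0),        # (out, in) -> (in, out)
    "conv2d": (2, 3, 1, 0),  # (out, in, kH, kW) -> (kH, kW, in, out)
}


def torch_weight_to_keras(weight, kind):
    """Return a Keras-layout copy of a PyTorch weight array (biases need no change)."""
    axes = TORCH_TO_KERAS_AXES[kind]
    return np.ascontiguousarray(np.transpose(np.asarray(weight), axes))
```

On the Keras side you would then pass the converted kernel plus the unchanged bias to the layer's set_weights, e.g. dense.set_weights([kernel, bias]).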

Option 3: Keep Both Frameworks

Expensive but pragmatic. Use PyTorch for research, TensorFlow for serving. Deploy them as separate microservices and deal with the network latency. At least you won't lose your mind debugging conversion errors.

The truth is, there's no perfect solution. Pick your poison based on your team's patience level and production requirements.