Look, I've been debugging slow Django apps for 8 years, and 90% of the time it's because someone thought Postgres could handle 10,000 identical queries per minute. Spoiler: it can't.

The Database is Your Bottleneck

Here's what I see every time I join a Django project:

- 47 database queries to render one product page

- Session reads hitting the database on every request

- The same "popular products" query executing 200 times per minute

- `User.objects.get()` calls that should've been cached months ago

Last month I watched a startup's RDS instance melt down because their homepage was hitting 30 database queries per visitor. This shit is more common than you think. Their solution? "Let's add more read replicas!" Wrong. Cache the damn query results.

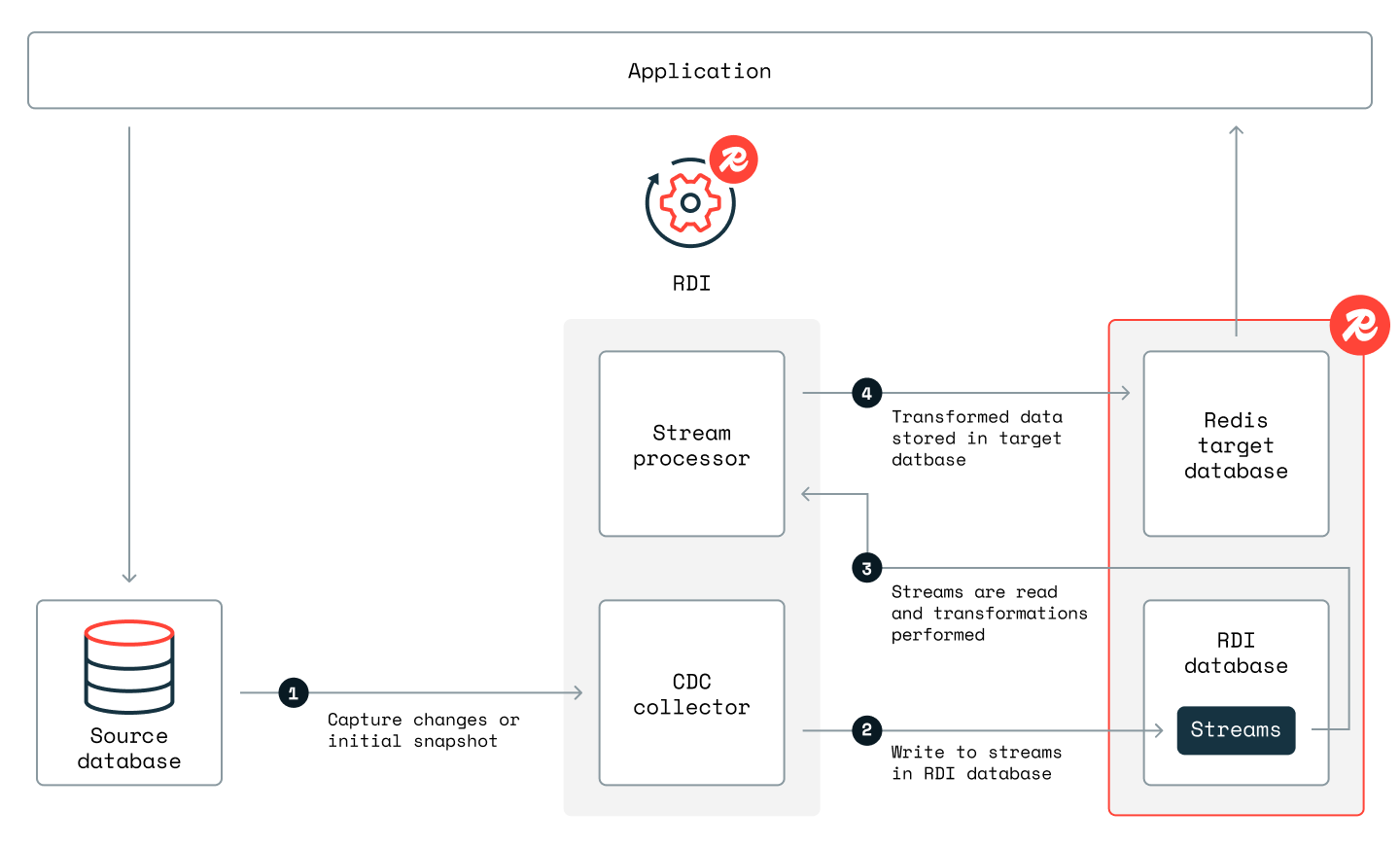

Integration Architecture: Redis acts as an intermediary cache layer between Django and your primary database, intercepting queries and serving cached results when available.

Redis data pipeline architecture showing change data capture, stream processing, and cache synchronization between source and target databases

Redis: The Database's Bodyguard

Redis sits between your Django app and your database like a bouncer who remembers everyone. Query result? Cached. Session data? In memory. User profile? Already there.

I've seen Redis drop page load times from 850ms to 45ms on a Django e-commerce site just by caching product data. Real benchmarks, not marketing bullshit.

Django's Built-in Redis Support (Finally)

Django 4.0+ includes native Redis support. About fucking time. Before this, everyone used django-redis, and honestly, you probably still should for production apps. The built-in backend is fine for simple stuff, but django-redis has compression, better connection pooling, and actual cluster support.

## Django 4.0+ built-in (basic but works)

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.redis.RedisCache',

'LOCATION': 'redis://127.0.0.1:6379/1',

}

}

## django-redis (what I actually use in production)

CACHES = {

'default': {

'BACKEND': 'django_redis.cache.RedisCache',

'LOCATION': 'redis://127.0.0.1:6379/1',

'OPTIONS': {

'CLIENT_CLASS': 'django_redis.client.DefaultClient',

}

}

}

Connection Pooling (Or How I Learned to Stop Worrying About Redis Connections)

Redis-py handles connection pooling automatically, which is great because manual connection management is where junior developers go to die. Default pool size is 50 connections, which works for most apps. If you're hitting connection limits, you've got bigger problems than Redis configuration.

The pool reuses connections, handles timeouts, and retries failed operations. It's actually pretty solid, unlike the connection management nightmare you get with some other databases.

Session Storage: Stop Abusing Your Database

Database-backed sessions are the devil. Every page load = database query. Every login = database write. Every logout = database cleanup. It's 2025, and people are still doing this.

Redis session storage eliminates this database abuse:

- Sessions live in memory (obviously faster)

- TTL handles expiration automatically (no cleanup jobs)

- Works across multiple Django instances (crucial for Docker/k8s)

I moved a client from database sessions to Redis and their session-related database load dropped 85%. The difference was so dramatic their monitoring system thought the database had crashed.

Serialization: Pickle vs Everything Else

Django defaults to pickle for cache serialization. This is actually fine for most cases - pickle handles QuerySets, model instances, and whatever weird Python objects you're caching. JSON serialization is faster and more portable, but you lose complex object support.

My rule: Use pickle unless you have a specific reason not to. The performance difference is negligible compared to the pain of debugging serialization issues with complex Django objects. Django-redis supports JSON, msgpack, and custom serializers if you need them.

Real Performance Numbers (Not Marketing BS)

I benchmarked Redis vs Postgres caching on a typical Django app last year:

- Redis: 75,000 get operations/sec

- Postgres (with proper indexes): 3,200 queries/sec

- Postgres (without proper indexes): You don't want to know

Database load dropped from 80% CPU to 12% CPU after implementing Redis caching. This tracks with most production deployments - you'll see 60-80% database load reduction on apps with decent cache hit rates.

Performance Impact: In production environments, Redis consistently delivers sub-millisecond response times for cached data, compared to 50-200ms database query times.

Redis Performance Characteristics: In production environments, Redis consistently delivers sub-millisecond response times through its in-memory architecture, while traditional database queries often require 50-200ms including network round-trips and disk I/O operations.

The memory usage is predictable, the performance is consistent, and it scales horizontally better than any database cluster you'll build. Plus, Redis doesn't lock up when you run a poorly optimized query at 2 AM.

But understanding the problem is just the beginning. The real challenge lies in implementing Redis caching correctly - with all the configuration gotchas, serialization issues, and production concerns that nobody talks about until they bite you in production.