
Cursor has four different ways to configure prompts and they all conflict with each other. I've spent countless hours debugging why my rules weren't working only to discover I had the wrong file format or the glob pattern was fucked.

Project Rules live in .cursor/rules/ directories and use this janky MDC format that's barely documented. They're supposed to be the "powerful" option but the syntax is finicky as hell. One missing dash in the metadata header and your rule becomes decoration.
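You can catch the missing-dash problem yourself before Cursor quietly ignores the rule. The sketch below is a hypothetical linter, not Cursor's own parser. It assumes the header is frontmatter fenced by `---` lines and that the keys you care about are `description`, `globs`, and `alwaysApply`, the fields that show up in most community examples. It only checks structure and key spelling. It cannot tell you whether Cursor will actually apply the rule.

```python
# Hypothetical linter for Cursor .mdc rule headers. Assumes '---'-fenced
# frontmatter with description / globs / alwaysApply keys; this is a
# structural check only, not Cursor's own parser.

EXPECTED_KEYS = {"description", "globs", "alwaysApply"}


def check_mdc_header(text: str) -> list[str]:
    """Return a list of problems found in the header; empty means it looks OK."""
    lines = text.splitlines()

    # The very first line must be the opening fence of three dashes.
    if not lines or lines[0].strip() != "---":
        return ["first line is not '---' (missing or malformed opening fence)"]

    # Locate the closing fence; without it there is no well-formed header.
    end = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
    if end is None:
        return ["no closing '---' fence found"]

    problems: list[str] = []
    for lineno, line in enumerate(lines[1:end], start=2):
        if not line.strip():
            continue  # blank lines inside the header are harmless
        key, sep, _value = line.partition(":")
        if not sep:
            problems.append(f"line {lineno}: expected 'key: value', got {line!r}")
        elif key.strip() not in EXPECTED_KEYS:
            # Catches typos such as 'alwaysapply' or 'glob'.
            problems.append(f"line {lineno}: unexpected key {key.strip()!r}")
    return problems


if __name__ == "__main__":
    good = "---\ndescription: TypeScript style\nglobs: src/**/*.ts\nalwaysApply: false\n---\nPrefer strict mode.\n"
    broken = "--\ndescription: TypeScript style\n---\n"
    print(check_mdc_header(good))    # []
    print(check_mdc_header(broken))  # one problem: malformed opening fence
```

Run it over every file in .cursor/rules/ in CI. A malformed header then fails loudly instead of silently turning your rule into decoration.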

User Rules are global settings that sometimes work and sometimes don't. They're buried in Cursor Settings and the interface for editing them changes every update. Half the time I forget they exist until they conflict with project rules.

AGENTS.md files were supposed to be the "simple" option - just markdown in your project root. Except they don't work with glob patterns and the AI sometimes ignores them completely.

Rules for AI in the settings panel is for quick testing but it gets wiped when you restart Cursor. Perfect for temporary fixes that become permanent because you forgot to move them somewhere persistent.

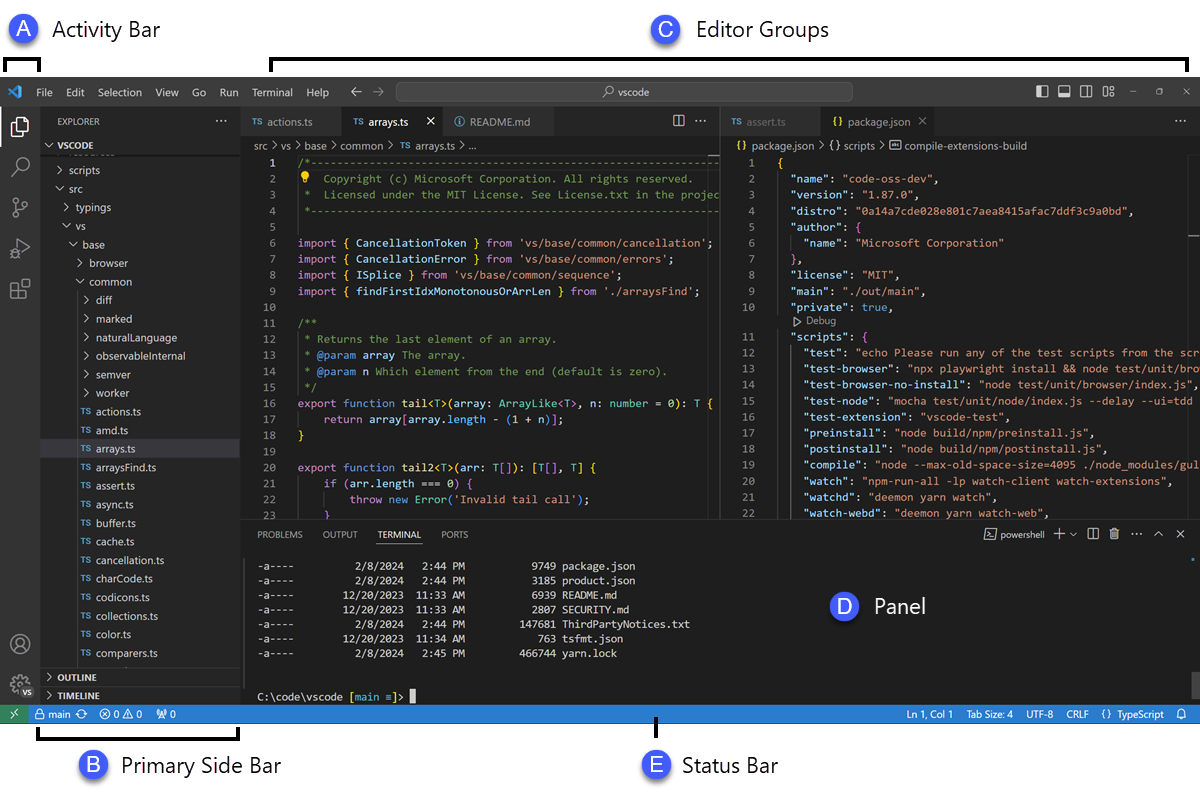

The Real Problem: Context Chaos

The core issue isn't the configuration options - it's that Cursor's context handling is unpredictable. Sometimes it sees your rules, sometimes it doesn't. Long conversations cause "context degradation" where it starts ignoring your carefully crafted prompts.

I learned this the hard way after spending 3 hours writing detailed MDC rules only to have Cursor generate code that completely ignored them. Turns out the conversation was too long and I needed to start a new chat.

The MDC format documentation is community-driven because the official docs are basically nonexistent. You'll be reverse-engineering examples from GitHub repos, forum posts, blog tutorials, Medium articles, Dev.to guides, LinkedIn tutorials, Reddit discussions, Discord conversations, and API documentation guides to figure out the syntax.

Memory Features Are Creepy But Useful

Cursor's memory functionality requires disabling privacy mode, which means your code gets sent to their servers for "learning." It's creepy but actually works once you get over the privacy concerns. The AI remembers your preferences across sessions if you end statements with "Keep that in mind."

Auto-Run mode (formerly called YOLO mode, which was more honest) lets Cursor execute commands automatically. It's convenient until it runs rm -rf node_modules when you didn't expect it. Configure allowed/denied commands carefully or you'll have a bad time.
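The allow/deny advice is easier to get right if you treat it as a function that maps a command string to yes or no. This is a hypothetical sketch of such a policy, not Cursor's implementation. The ALLOWED set and the DENIED_ARGS table are made-up examples. Anything containing shell metacharacters (chaining, pipes, redirection, substitution) is refused outright, because an allowlist entry only vouches for a single program.

```python
# Hypothetical auto-run gate: the lists below are illustrative examples,
# not Cursor's defaults or its actual allow/deny implementation.
import shlex

ALLOWED = {"ls", "cat", "git", "npm", "pytest"}
# Program -> arguments that make an otherwise-allowed program off-limits.
DENIED_ARGS = {"git": {"push", "reset", "clean"}, "npm": {"publish"}}
# Chaining, pipes, redirection and substitution let one "allowed" command
# smuggle in another, so any of these characters means "ask the human".
SHELL_METACHARS = set(";|&`$<>\n")


def should_auto_run(command: str) -> bool:
    """Return True only if the command is safe to run without confirmation."""
    if any(ch in SHELL_METACHARS for ch in command):
        return False
    try:
        tokens = shlex.split(command)
    except ValueError:  # e.g. unbalanced quotes
        return False
    if not tokens or tokens[0] not in ALLOWED:
        return False
    # Deny if a forbidden argument appears anywhere, so "git -C repo push" is caught too.
    return not (DENIED_ARGS.get(tokens[0], set()) & set(tokens[1:]))


if __name__ == "__main__":
    for cmd in ["git status", "npm test", "rm -rf node_modules", "git -C repo push origin main"]:
        print(f"{cmd!r}: {'auto-run' if should_auto_run(cmd) else 'ask first'}")
```

The design errs toward refusing. For example, git log --grep push gets blocked too. That is an annoyance, but a false "yes" is how node_modules disappears.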

New in Cursor 1.5 (August 2025): The latest release added native OS notifications for agent runs, improved Agent terminal UX with clear backdrop and border animations, and Linear integration for starting Background Agents directly from Linear issues. The terminal now auto-focuses input on rejection so you can respond immediately.