The Problem with Hammering APIs Every Second

Look, I get it. You wrote a nice little script that calls requests.get() every second to check if TSLA moved. Congrats, you've built the digital equivalent of asking "Are we there yet?" on repeat. That worked fine when you were trading your lunch money, but now you're missing moves faster than your ex ghosted you.

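Here's what actually happens with REST API polling: the stock jumps 2%, and you find out whenever your next API call fires. Poll once a second and you're burning your rate limit on a single symbol; stretch the interval to 30 seconds to cover a watchlist and that's exactly how late you are. By then, the move's over and you're buying at the top like everyone else who discovered the news on Twitter.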

A WebSocket pushes the data to you as soon as the feed publishes it. No polling interval, no moves missed between calls, no more refreshing your screen like a psychopath watching your portfolio die.

The WebSocket Connection That Actually Stays Connected

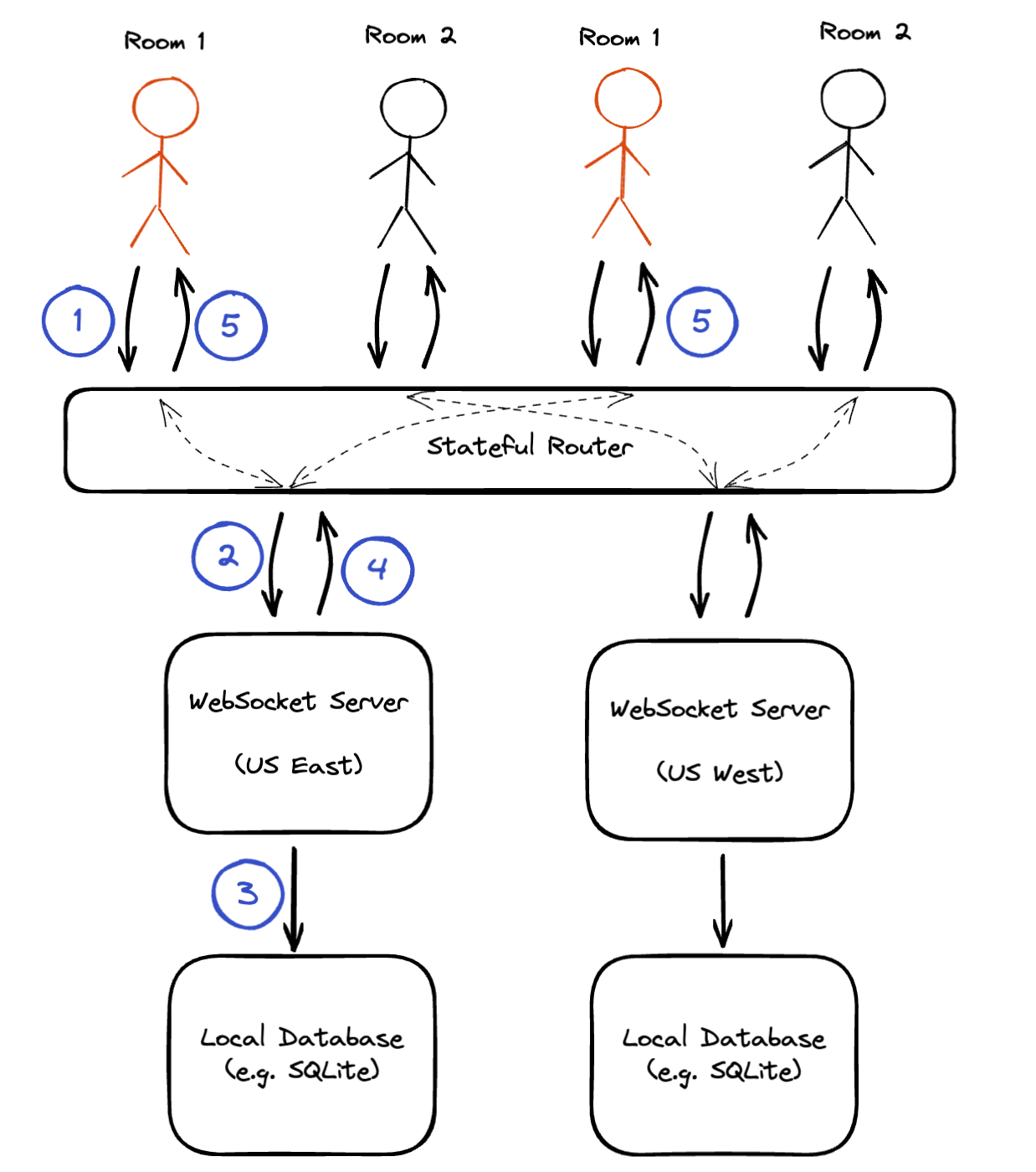

Alpaca's WebSocket feeds work like this: you connect once, and the server pushes data to you in real time. Think push notifications, but for stock prices and without the battery drain.

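The endpoints you need:

- Market data: wss://stream.data.alpaca.markets/v2/iex (swap iex for sip on the paid feed)
- Your account updates: wss://paper-api.alpaca.markets/stream (paper trading; live accounts use wss://api.alpaca.markets/stream)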

Don't try to be clever and run both over one connection. They're separate services, so open one socket per endpoint.
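Once the market data socket is open, you authenticate and then subscribe by sending JSON frames. Here's a minimal sketch of building those frames. The message shapes reflect my understanding of Alpaca's v2 market data stream, and the helper names are my own, so check the current docs before trusting them. The account-updates stream may use a different message format.

```python
import json

def auth_message(key_id: str, secret_key: str) -> str:
    """Build the JSON auth frame sent right after the socket opens."""
    return json.dumps({"action": "auth", "key": key_id, "secret": secret_key})

def subscribe_message(trades=(), quotes=(), bars=()) -> str:
    """Build a subscribe frame; only non-empty channels are included."""
    payload = {"action": "subscribe"}
    for channel, symbols in (("trades", trades), ("quotes", quotes), ("bars", bars)):
        if symbols:
            payload[channel] = list(symbols)
    return json.dumps(payload)

if __name__ == "__main__":
    # Frames you would pass to your WebSocket client's send() call
    print(subscribe_message(trades=["TSLA"], quotes=["TSLA", "AAPL"]))
```

Keeping the frame builders separate from the socket code means you can unit test them without touching the network.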

What Breaks and How to Fix It

Connection drops every 10 minutes? That's usually a firewall or NAT timing out what looks like an idle connection. Send WebSocket pings as a keep-alive, or use a VPS that doesn't hate persistent connections.

Auth failures? You're probably using paper trading keys on the live endpoint or vice versa. Yes, this breaks in production at the worst possible moment.
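One way to make the key/endpoint mismatch harder to commit is to keep the keys and the environment in a single object, so the URL is always derived from the same place the keys live. This is a sketch, not Alpaca's SDK: the class name and fields are made up for illustration, and the live URL is my assumption based on the paper one.

```python
from dataclasses import dataclass

# Account-stream URLs. The paper URL is the one listed earlier; the live URL is assumed.
STREAM_URLS = {
    "paper": "wss://paper-api.alpaca.markets/stream",
    "live": "wss://api.alpaca.markets/stream",
}

@dataclass(frozen=True)
class AlpacaCredentials:
    env: str         # "paper" or "live"
    key_id: str
    secret_key: str

    def __post_init__(self):
        # Fail at startup, not at the first auth attempt in production
        if self.env not in STREAM_URLS:
            raise ValueError(f"env must be one of {sorted(STREAM_URLS)}, got {self.env!r}")

    @property
    def stream_url(self) -> str:
        """The endpoint that matches these keys' environment."""
        return STREAM_URLS[self.env]
```

Load one of these per environment from your secrets store and pass it around whole, rather than passing a URL and a key pair separately.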

Data stops flowing during volatility? Your message handler is too slow. When another "funding secured" tweet hits TSLA, you can get thousands of messages per second. If your handler can't keep up, the backlog grows until messages get dropped, or the connection does, and you miss the move.

The fix is simple: Queue the data, process it asynchronously. Don't try to calculate your entire portfolio's net worth inside the message handler.

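    import asyncio

    trade_queue = asyncio.Queue()

    async def handle_trade(trade):
        # Don't do this: slow work here falls behind during volatility
        # complex_calculation(trade)

        # Do this instead: hand the trade off and return immediately
        await trade_queue.put(trade)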
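The queue only helps if something drains it. Here's a minimal sketch of the consumer side, with a simulated burst standing in for the real feed. The trade dict fields and the price-times-size "work" are placeholders I made up, and this version passes the queue explicitly instead of using a module-level one.

```python
import asyncio

async def handle_trade(queue: asyncio.Queue, trade: dict) -> None:
    # Message handler: enqueue and return immediately
    await queue.put(trade)

async def worker(queue: asyncio.Queue, results: list) -> None:
    # Consumer: the slow work happens here, off the receive path
    while True:
        trade = await queue.get()
        try:
            results.append(trade["price"] * trade["size"])  # stand-in for real work
        finally:
            queue.task_done()

async def main() -> list:
    # Bounded queue: a stuck worker shows up as backpressure, not unbounded memory
    queue = asyncio.Queue(maxsize=10_000)
    results = []
    task = asyncio.create_task(worker(queue, results))
    for i in range(5):  # simulated burst of trades
        await handle_trade(queue, {"symbol": "TSLA", "price": 100.0 + i, "size": 10})
    await queue.join()  # wait until every queued trade has been processed
    task.cancel()
    await asyncio.gather(task, return_exceptions=True)
    return results

if __name__ == "__main__":
    print(asyncio.run(main()))
```

With a bounded queue, a full queue makes the handler wait, so you still need to decide what to do when the worker can't keep up: drop old trades, sample, or add workers.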

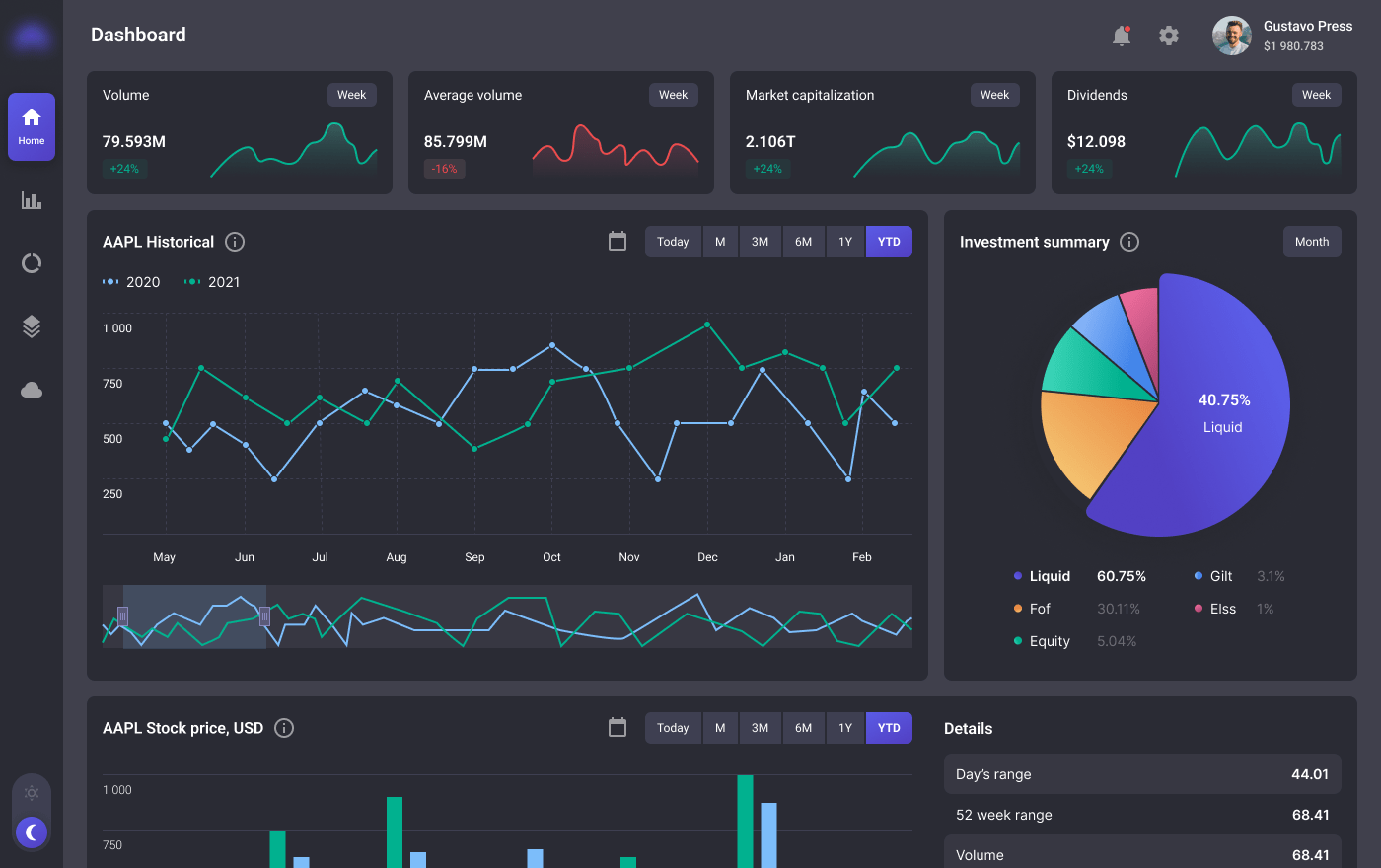

Free vs $99/month Data: Choose Your Pain

IEX (Free): Only gets you data from one exchange, IEX, which handles a small slice of total volume. Great for testing, useless for serious trading. You'll miss moves that print on NYSE or NASDAQ while you're only watching IEX.

SIP ($99/month): All exchanges, all the time. Used to be $49 but they jacked up the price. Still worth it if you're trading more than coffee money - the extra data coverage pays for itself the first time you catch a move that IEX users missed.

Crypto runs 24/7 and never sleeps. Your connection management needs to handle weekends, holidays, and that random Tuesday when Bitcoin decides to crash at 3am because someone mentioned "regulation" in a tweet.

When It All Goes to Hell

Your WebSocket will die. Not if, when. Networks fail, servers restart, cosmic rays flip bits. Plan for it.

The connection will drop right before the most important announcement of the year. It's like Murphy's Law but specifically designed to cost you money.
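Planning for it mostly means a reconnect loop with exponential backoff and jitter, so you don't hammer the server the moment it comes back. A rough sketch follows. Here connect_and_stream is a hypothetical coroutine you supply that connects, authenticates, subscribes, and runs until the connection ends, and the 60-second thresholds are arbitrary.

```python
import asyncio
import random
import time

def backoff_delays(base=1.0, cap=60.0, factor=2.0):
    """Yield exponentially growing delays, capped at `cap`, forever."""
    delay = base
    while True:
        yield delay
        delay = min(cap, delay * factor)

async def run_forever(connect_and_stream, sleep=asyncio.sleep, max_attempts=None):
    """Run connect_and_stream() repeatedly, backing off between attempts."""
    delays = backoff_delays()
    attempts = 0
    while max_attempts is None or attempts < max_attempts:
        attempts += 1
        started = time.monotonic()
        try:
            await connect_and_stream()
        except (OSError, asyncio.TimeoutError) as exc:
            # Add your WebSocket library's connection-closed exception here too
            print(f"connection lost: {exc!r}")
        if time.monotonic() - started > 60:
            delays = backoff_delays()  # session was healthy for a while: start small again
        # Jitter keeps many clients from reconnecting in lockstep
        await sleep(next(delays) * random.uniform(0.5, 1.0))
```

The sleep and max_attempts parameters exist mainly so you can test the loop without waiting on real timers; in production you would leave both at their defaults.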

Set up monitoring that screams at you when data stops flowing. I learned this the hard way when my bot sat silent for 2 hours during an earnings announcement because the connection died and I was too busy feeling smart about my "automated" system.
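The cheapest version of that monitoring is a watchdog that remembers when the last message arrived. This is a sketch with names and thresholds I picked for illustration; wire the alert to whatever actually wakes you up.

```python
import time

class FeedWatchdog:
    """Tracks when the last message arrived and reports staleness."""

    def __init__(self, max_silence_s: float = 30.0, clock=time.monotonic):
        self.max_silence_s = max_silence_s
        self._clock = clock          # injectable so tests don't need real time
        self._last_seen = clock()

    def beat(self) -> None:
        """Call this from the message handler on every message."""
        self._last_seen = self._clock()

    def silence(self) -> float:
        """Seconds since the last message."""
        return self._clock() - self._last_seen

    def is_stale(self) -> bool:
        return self.silence() > self.max_silence_s
```

Check is_stale() from a periodic task and alert or force a reconnect when it trips. Equities go quiet outside market hours, so the threshold probably needs to depend on the session; crypto has no such excuse.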

Keep historical data APIs handy for backfilling gaps when you reconnect. Yes, this means more code complexity. No, you can't skip it and hope for the best.
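The backfill logic boils down to two steps: work out the window you missed, then merge what the historical API returns with what you already have. A sketch under assumptions: trades are dicts with hypothetical "id" and "ts" keys, and the actual historical request is left to whatever client you use.

```python
from datetime import datetime, timedelta

def backfill_window(last_seen: datetime, reconnected_at: datetime,
                    overlap: timedelta = timedelta(seconds=5)):
    """Return the (start, end) range to request from a historical API.

    The window starts slightly before the last message we saw, so trades
    near the boundary are not lost; duplicates are removed when merging.
    """
    if reconnected_at < last_seen:
        raise ValueError("reconnected_at is earlier than last_seen")
    return last_seen - overlap, reconnected_at

def merge_backfill(live: list, backfilled: list) -> list:
    """Merge two trade lists, dropping duplicates by id, ordered by timestamp."""
    by_id = {t["id"]: t for t in backfilled}
    by_id.update({t["id"]: t for t in live})  # live copy wins on conflict
    return sorted(by_id.values(), key=lambda t: t["ts"])
```

The overlap is deliberate: requesting a few seconds too much and deduplicating is cheaper than discovering a hole in your data later.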

Now that you understand what can go wrong, let's build something that actually handles these problems properly. The difference between a broken bot that loses money and one that stays profitable comes down to handling the edge cases that everyone else ignores.