etcd dies, everything dies. When etcd corrupts, your kubectl returns "unable to connect to server" and you're fucked.

Most official troubleshooting guides assume your cluster is healthy enough to run diagnostics. Reality: when clusters fail, the tools you need to debug them stop working.

Here's what actually happens when clusters fail:

etcd Dies: kubectl returns ECONNREFUSED. Pods keep running but you can't manage anything. Had this happen when someone ran apt upgrade on a master node and etcd went from 3.4.22 to 3.5.1 without the data migration.

Multiple Masters Fail: Lost quorum means rebuilding from scratch unless your backup script actually worked. Spoiler: it probably didn't write to the right directory.

Power Failures: UPS died at 3am, corrupted etcd and the filesystem. Lost 3 months of config because backups were going to the same failed NFS mount.

Skip the theory - here's what works when nothing else does.

The Three Ways Kubernetes Breaks

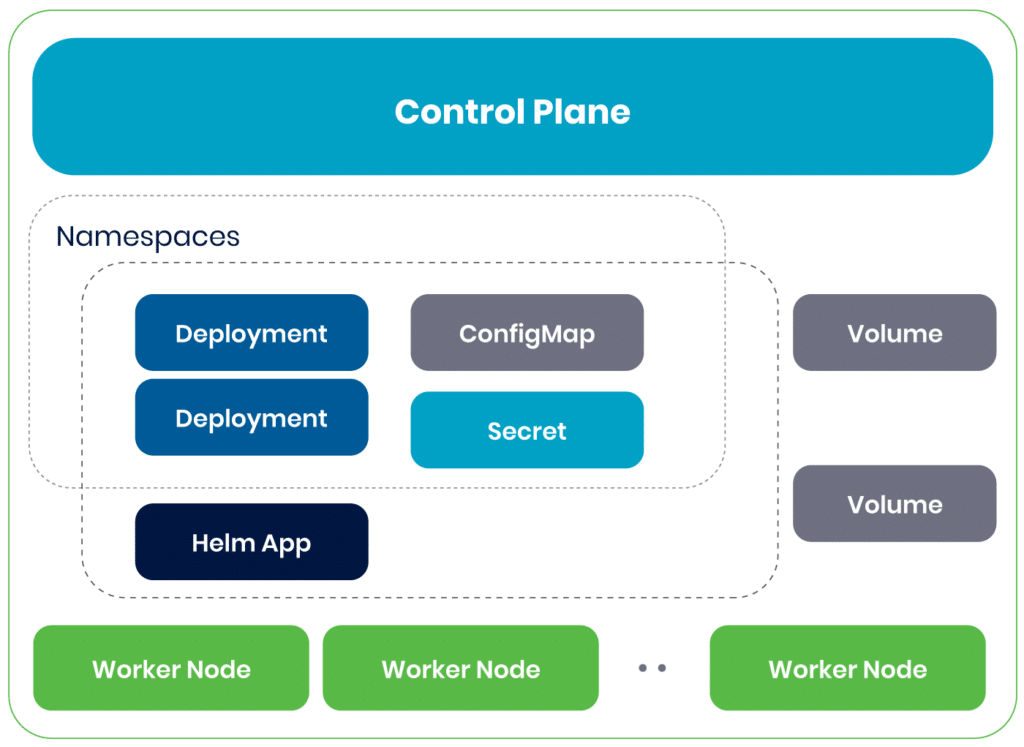

Complete Control Plane Death

This is the nightmare scenario. API server won't start, etcd is corrupted, kubectl throws connection errors. Usually happens because:

- Someone fucked up a cluster upgrade

- Disk space ran out on master nodes (happens more than you'd think)

- Network issues between etcd members

- Power outage without proper UPS

Fixed this twice. First time took 8 hours because the etcd user needed read access to the backup directory. Second time took 3 hours because the restore worked but I forgot to update the systemd service file with the new data directory path.

Resource Cascade Failures

Starts with one OOMKilled pod, ends with the entire cluster grinding to a halt. Memory pressure spreads like cancer until nothing can schedule.

How it cascades: Database pod gets OOMKilled → Apps can't connect → Restart storm → Node runs out of memory → kubelet dies → API server can't reach nodes → Everything fails.

The fix is brutal: kill everything non-essential immediately using resource quotas.

The Slow Death Spiral

Worst because it's hard to catch. Node starts having issues, pods slowly migrate, other nodes get overloaded, more nodes fail. Eventually you're running production on one overloaded node.

Signs: Random pod evictions, slow kubectl responses, deployments timing out.

Why kubectl Becomes Useless

When clusters are dying, the tools you need to debug them stop working. kubectl needs the API server. The API server needs etcd. etcd needs healthy nodes and disk space.

So when things go sideways, your primary diagnostic tool becomes a paperweight.

Instead you need:

- `crictl` (works when docker doesn't)

- Direct SSH to nodes

- `systemctl` commands

- Raw `etcdctl` if you can reach it

Learned this during a 6-hour outage where kubectl was dead the entire time and I kept trying kubectl get nodes like an idiot instead of just SSHing to the boxes.

The Real Recovery Process

Forget the clean step-by-step guides. Real recovery is messy:

- Panic for 5 minutes while you figure out how bad it is

- SSH directly to nodes because kubectl is dead

- Check if etcd is alive - if not, you're in deep shit

- Kill everything non-essential to free up resources

- Restore from backup or rebuild

The hardest part isn't technical - it's staying calm while everyone asks for ETAs you can't give.