Been using wasm-opt for about two years now, maybe more. It does work: it took our bundle from 2.3MB down to 1.8MB, maybe 1.7MB, I don't remember exactly. Point is, way smaller. Mobile users stopped bitching about load times, so that's something. But damn, this thing can be a pain sometimes.

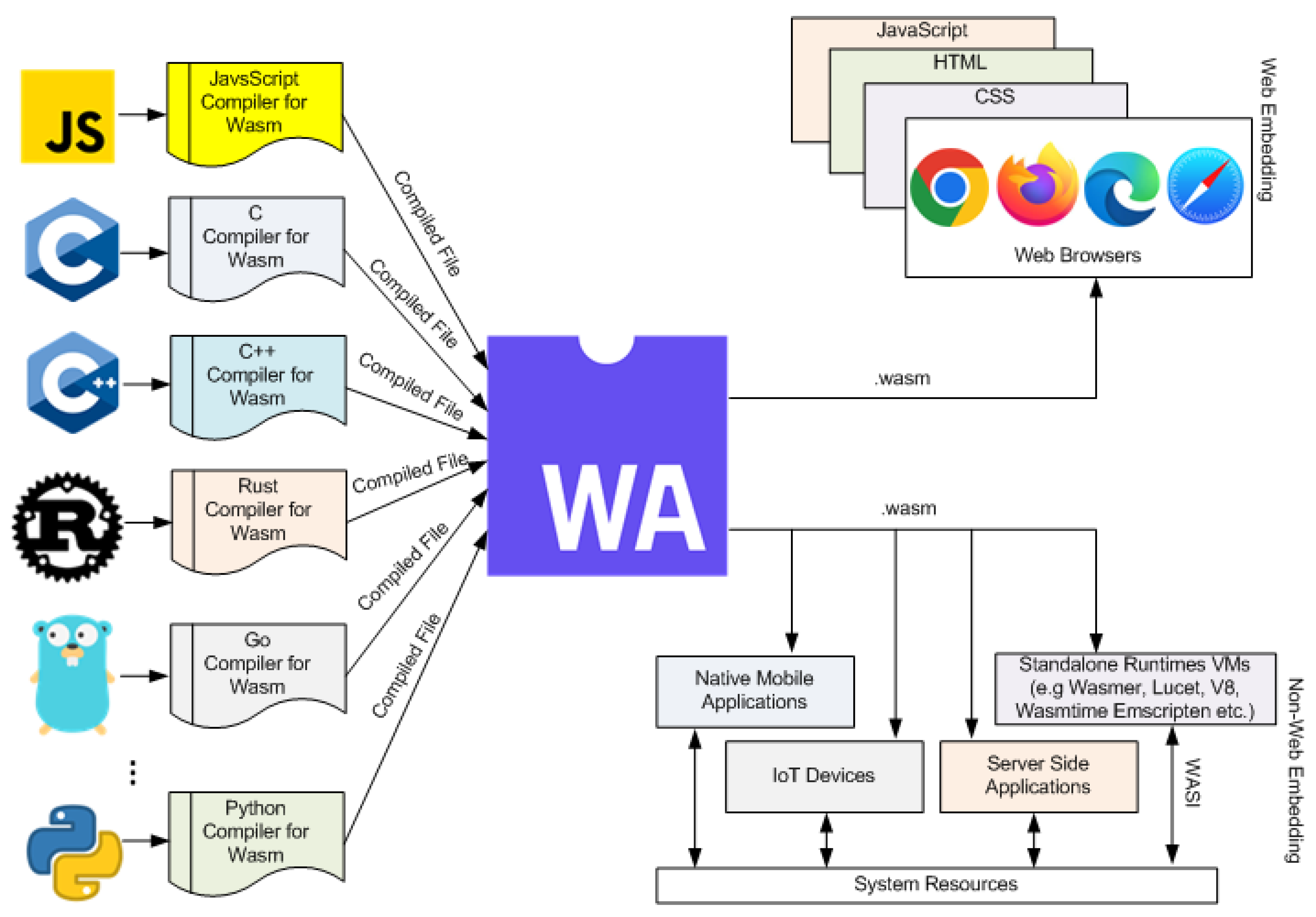

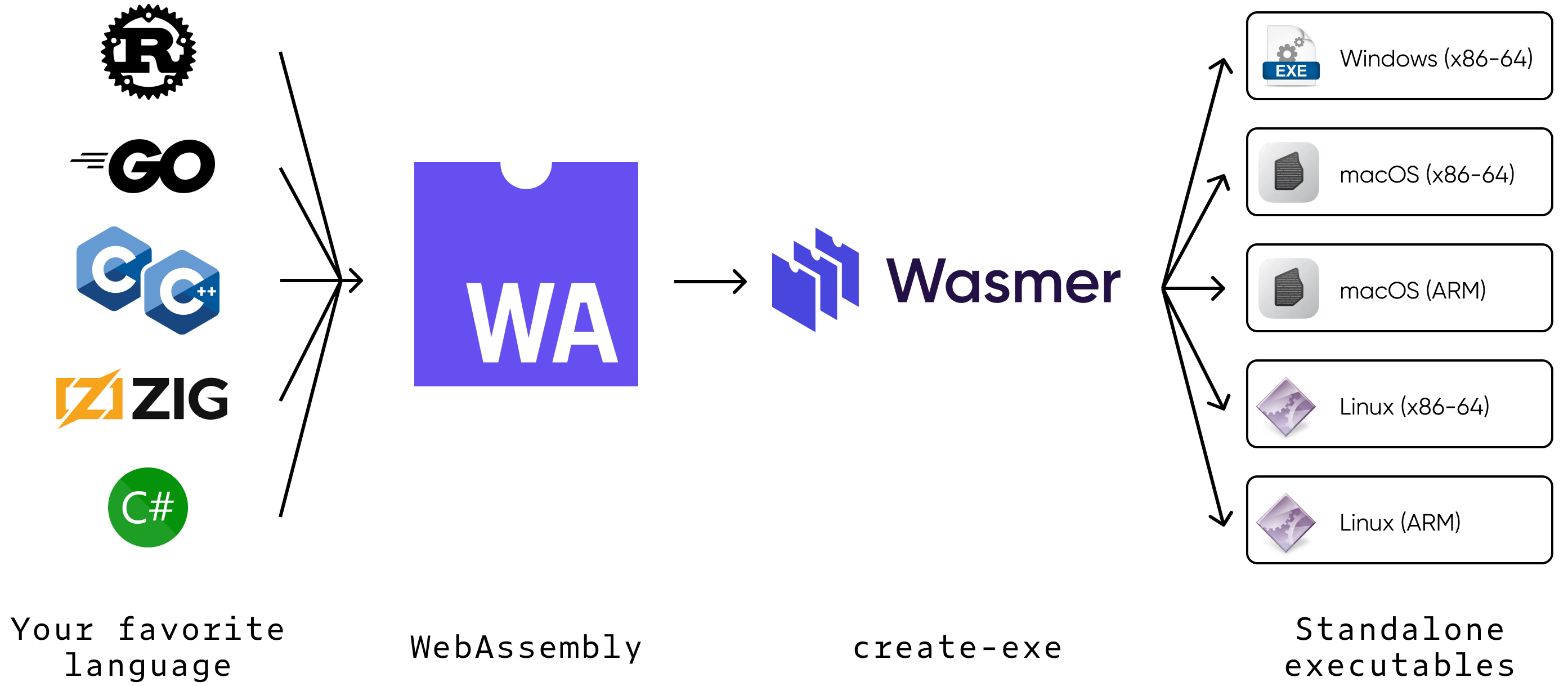

As far as I can tell it's the only WASM optimizer that actually does anything useful. Emscripten, wasm-pack, everyone just calls wasm-opt (it's part of Binaryen) under the hood, because what else are you gonna do? It reads your .wasm file, runs a bunch of optimization passes over it, and hopefully gives you back something smaller without breaking anything.

The Reality Check

Performance claims online are hit or miss. With -O3 I usually get files about 20% smaller, sometimes better, depending on how much your compiler already optimized. On the runtime side, the 2023 benchmarks I've seen put WASM at about 2.3x slower than native: not amazing, but not terrible.
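For a sense of scale, here's the arithmetic on the bundle sizes from the top of this post. It's just (before - after) / before; the sizes are the ones I remembered, not fresh measurements.

```rust
/// Percentage of bytes removed going from `before` to `after` (any unit, as long as both match).
fn percent_reduction(before: f64, after: f64) -> f64 {
    assert!(before > 0.0, "original size must be positive");
    (before - after) / before * 100.0
}

fn main() {
    // Bundle sizes in MB, as remembered at the top of this post.
    for after in [1.8_f64, 1.7] {
        println!("2.3MB -> {after}MB: {:.1}% smaller", percent_reduction(2.3, after));
    }
}
```

That works out to roughly 22% and 26%, so "about 20% with -O3" is in the right ballpark.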

Here's what I learned the hard way: -O4 is a trap. It takes way longer to run for maybe 3% extra savings. Stick with -O3 unless every byte actually matters. Someone on our team switched us to -O4 once and our CI builds crawled. Like, we'd go grab coffee and it still wasn't done. We switched back to -O3.
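If you drive wasm-opt yourself instead of through wasm-pack, one way to keep -O4 from sneaking into CI is to make -O3 the default and -O4 explicitly opt-in. Here's a minimal Rust sketch of that. The WASM_OPT_O4 environment variable and the pkg/app_bg.wasm paths are hypothetical, and it only builds the command (wasm-opt <level> <input> -o <output>) without running it.

```rust
use std::ffi::OsStr;
use std::process::Command;

/// The two levels discussed above; O4 is only used when explicitly requested.
#[derive(Clone, Copy, Debug)]
enum OptLevel {
    O3,
    O4,
}

impl OptLevel {
    fn flag(self) -> &'static str {
        match self {
            OptLevel::O3 => "-O3",
            OptLevel::O4 => "-O4",
        }
    }
}

/// Build (but don't run) `wasm-opt <level> <input> -o <output>`.
fn wasm_opt_command(input: &str, output: &str, level: OptLevel) -> Command {
    let mut cmd = Command::new("wasm-opt");
    cmd.arg(level.flag()).arg(input).arg("-o").arg(output);
    cmd
}

fn main() {
    // Hypothetical opt-in switch: -O4 only when WASM_OPT_O4=1, otherwise -O3.
    let level = if matches!(std::env::var("WASM_OPT_O4").as_deref(), Ok("1")) {
        OptLevel::O4
    } else {
        OptLevel::O3
    };
    // Hypothetical paths; wasm-pack names its output after your crate.
    let cmd = wasm_opt_command("pkg/app_bg.wasm", "pkg/app_bg.opt.wasm", level);
    let args: Vec<&OsStr> = cmd.get_args().collect();
    println!("{:?} {:?}", cmd.get_program(), args);
}
```

Calling .status() on the returned Command is what would actually run it; keeping construction separate just makes the chosen flags easy to log in CI.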

When It All Goes to Hell

Yeah, it crashes sometimes. It's died on me twice with big modules: once with some memory overflow bullshit, and once it just said "validation error" and gave up. Check the Binaryen GitHub issues if you want to see how many other people hit the same problems.

The real pain in the ass is when wasm-pack tries to auto-download wasm-opt and your corporate network blocks it. You get a cryptic error about download failures and your build is fucked. Solution: run cargo install wasm-opt first, so wasm-pack finds a local copy on your PATH instead of trying to download one.
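On a locked-down network it helps to fail fast with a readable message when there's no local wasm-opt, instead of waiting for the download error. Here's a hypothetical pre-flight helper (not something wasm-pack ships) that scans PATH the way a shell would. On Windows you'd also have to look for wasm-opt.exe, which this sketch skips.

```rust
use std::env;
use std::path::PathBuf;

/// Return the first file called `name` found in a directory listed on PATH, if any.
/// Note: doesn't try Windows extensions like `.exe`.
fn find_on_path(name: &str) -> Option<PathBuf> {
    let path_var = env::var_os("PATH")?;
    env::split_paths(&path_var)
        .map(|dir| dir.join(name))
        .find(|candidate| candidate.is_file())
}

fn main() {
    match find_on_path("wasm-opt") {
        Some(path) => println!("using local wasm-opt at {}", path.display()),
        None => eprintln!("wasm-opt not on PATH; run `cargo install wasm-opt` before wasm-pack"),
    }
}
```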

Most of the time it works fine, but when it doesn't, you'll end up disabling it with wasm-opt = false under [package.metadata.wasm-pack.profile.release] in your Cargo.toml just to ship something that day.