Look, nobody starts out wanting to run four different language runtimes in production. You end up here because you have actual problems that no single language can solve well. Maybe your Python ML models are eating all your CPU, your JavaScript API can't handle the concurrent load, and you need Rust because nothing else is fast enough for your core algorithms.

Here's the reality: Rust will make your high-performance code scream fast, but compile times will make coffee breaks mandatory. Python's ML ecosystem is unmatched, but the GIL will ruin your dreams of CPU-bound threading. JavaScript with Node.js handles async I/O beautifully, but npm will randomly break your builds. WebAssembly promises universal deployment, but debugging it feels like reading assembly with a blindfold on.

The dirty secret? This architecture works when you're desperate enough to accept the operational complexity in exchange for solving specific, painful performance bottlenecks.

When Each Language Actually Makes Sense (And When It Doesn't)

You don't go polyglot because it's trendy. You go polyglot because one language is genuinely failing you and you're willing to eat the complexity cost.

Rust for the stuff that has to be fast: Discord rewrote its Read States service from Go to Rust and saw a 40% CPU reduction. But they spent 6 months dealing with borrow checker fights and dependency hell. Figma cut its load times by 3x by compiling its C++ editor to WebAssembly, the same route Rust code takes into the browser, but their build pipeline went from 30 seconds to 5 minutes.

Python when you need ML libraries that don't exist anywhere else: Every data scientist wants TensorFlow and PyTorch, but your Python service will become the bottleneck when you scale. One team I know spent three months trying to make their Python API handle 1000 RPS before giving up and rewriting the hot path in Go.

JavaScript for APIs that just need to work: Express.js on Node.js handles typical web API loads fine, until you need CPU-intensive work. Then you'll watch your event loop block and your response times explode, because every request in that process shares one JavaScript thread. Perfect for most CRUD, terrible for anything computationally heavy.
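The same failure mode is easy to reproduce with Python's asyncio, which also runs every coroutine on a single thread. A minimal sketch, assuming a pure-Python loop stands in for your CPU-heavy work: a heartbeat task stops ticking entirely while the work runs inline on the loop thread, and keeps ticking when the work is handed to a worker thread. The names cpu_heavy, heartbeat, and measure are hypothetical. In Node the analogous escape hatch is the worker_threads module; in CPython, because of the GIL, a thread keeps the loop responsive but adds no CPU throughput, so genuinely heavy work belongs in a process pool or a native extension.

```python
import asyncio
import contextlib
import time


def cpu_heavy(n: int) -> int:
    """Pure-Python CPU-bound work, standing in for hashing, parsing, resizing, etc."""
    total = 0
    for i in range(n):
        total += i * i
    return total


async def heartbeat(ticks: list, interval: float = 0.01) -> None:
    """Append a timestamp every `interval` seconds, whenever the loop lets us run."""
    while True:
        ticks.append(time.perf_counter())
        await asyncio.sleep(interval)


async def measure(offload: bool, n: int = 3_000_000) -> tuple:
    """Run cpu_heavy(n) inline or in a worker thread.

    Returns (result, number of heartbeat ticks recorded while the work ran).
    """
    ticks: list = []
    hb = asyncio.create_task(heartbeat(ticks))
    await asyncio.sleep(0.05)  # let the heartbeat start ticking
    start = time.perf_counter()
    if offload:
        # The loop thread stays free; in CPython the GIL is handed back and forth,
        # so the heartbeat keeps firing (latency improves, throughput doesn't).
        result = await asyncio.to_thread(cpu_heavy, n)
    else:
        # Runs on the loop thread itself: no other task can run until it returns.
        result = cpu_heavy(n)
    end = time.perf_counter()
    hb.cancel()
    with contextlib.suppress(asyncio.CancelledError):
        await hb
    during = sum(1 for t in ticks if start < t < end)
    return result, during


if __name__ == "__main__":
    for offload in (False, True):
        _, during = asyncio.run(measure(offload))
        print(f"offload={offload}: heartbeat ticks during the work = {during}")
```

The inline run reports zero ticks no matter how long the work takes, which is exactly what an Express handler doing CPU work does to every other request on the process.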

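WebAssembly for when you want to run the same code everywhere: Great in theory. In practice, debugging WASM feels like archaeology. When your WASM module crashes with RuntimeError: unreachable, good luck figuring out what actually went wrong in your original Rust code. A Rust panic in a browser-targeted build typically ends by executing an unreachable instruction, so the panic message is already gone by the time the host sees the error.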

What Actually Works (And What Fails Spectacularly)

Here's what you'll learn the hard way about polyglot microservices:

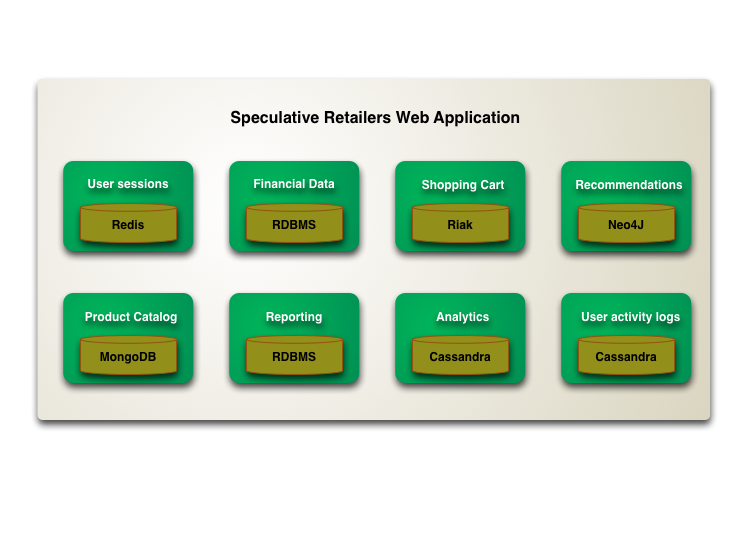

Don't split services by language, for fuck's sake. Split by business domain. The tempting approach is "Rust service for performance, Python service for ML, JavaScript service for APIs." This is a recipe for disaster. You'll spend more time debugging communication between services than actually solving business problems. Instead, each service should own a complete business capability and choose languages internally.

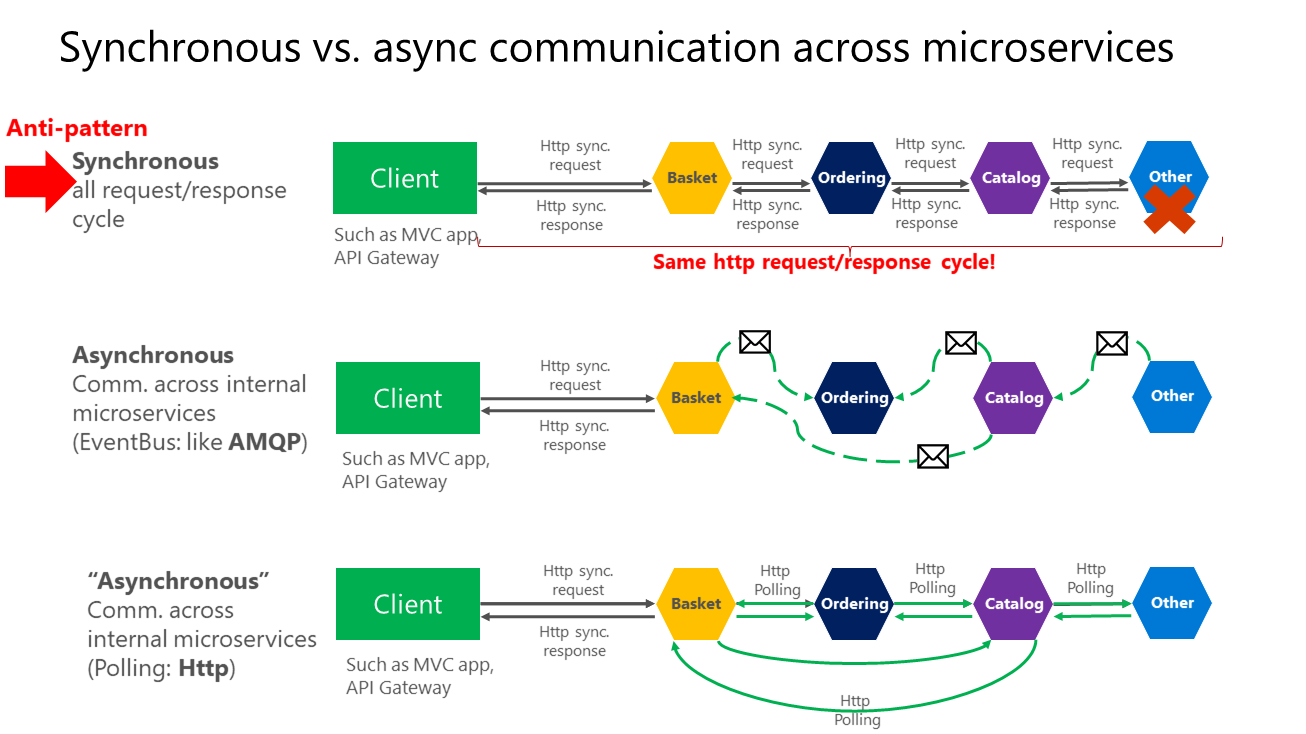

Communication is where everything breaks. gRPC works great until network partitions cause cascading timeouts across your polyglot services. Protocol Buffers keep your schemas consistent until someone deploys a breaking change and you realize your error handling sucks. That perfect GraphQL federation setup becomes a nightmare when your Rust service panics and takes down your Python ML pipeline.
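One common mitigation for cascading timeouts is deadline propagation: the edge service sets a single time budget for the request, and every hop passes along whatever is left instead of starting a fresh fixed timeout. That way a slow Rust service can't keep a Python caller busy after the original client has already given up. A minimal sketch, assuming a plain-Python helper; Deadline, DeadlineExceeded, and handle_request are hypothetical names, and with gRPC Python you would pass the computed value as a call's timeout argument.

```python
import time
from typing import Callable


class DeadlineExceeded(Exception):
    """Raised instead of starting a downstream call with no budget left."""


class Deadline:
    """An absolute deadline shared by every hop of one request (hypothetical helper)."""

    def __init__(self, budget_s: float, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._expires_at = clock() + budget_s

    def remaining(self) -> float:
        return max(0.0, self._expires_at - self._clock())

    def timeout_for_next_hop(self, reserve_s: float = 0.0) -> float:
        """Timeout to give the next call, keeping `reserve_s` for our own cleanup."""
        left = self.remaining() - reserve_s
        if left <= 0:
            raise DeadlineExceeded("budget exhausted; fail fast instead of piling on load")
        return left


def handle_request(deadline: Deadline, call_features, call_model):
    """Hypothetical handler: each downstream call gets what's left, not a fresh timeout."""
    features = call_features(timeout=deadline.timeout_for_next_hop(reserve_s=0.02))
    return call_model(features, timeout=deadline.timeout_for_next_hop(reserve_s=0.02))
```

Failing fast with DeadlineExceeded is the point: a request that can no longer succeed stops consuming capacity on every service behind it.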

Deployment is the easy part; debugging is hell. Docker and Kubernetes make deployment language-agnostic, which is great. But when something goes wrong and you need to trace a request through four different language runtimes, you'll be praying to the observability gods. Use OpenTelemetry, and still plan on spending entire weekends debugging mysterious performance issues.
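Tracing across four runtimes only works if every hop forwards the same trace ID. OpenTelemetry's default propagator carries it in the W3C Trace Context traceparent header, formatted as version-traceid-parentid-flags in lowercase hex. The SDKs handle this for you; this hand-rolled sketch only shows what actually crosses the wire, which helps when you're checking by hand whether a Rust or WASM hop dropped the header. parse_traceparent, child_traceparent, and SpanContext are hypothetical names, not the OpenTelemetry API.

```python
import re
import secrets
from typing import NamedTuple, Optional

# Version 00 layout: 2-hex version, 32-hex trace id, 16-hex parent span id, 2-hex flags.
_TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


class SpanContext(NamedTuple):
    trace_id: str
    span_id: str
    sampled: bool


def parse_traceparent(header: str) -> Optional[SpanContext]:
    """Return the caller's span context, or None if the header is unusable."""
    m = _TRACEPARENT.match(header.strip())
    if not m:
        return None
    version, trace_id, span_id, flags = m.groups()
    if version == "ff" or trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # invalid per the spec; start a new trace instead
    return SpanContext(trace_id, span_id, bool(int(flags, 16) & 0x01))


def child_traceparent(parent: SpanContext) -> str:
    """Header for an outgoing call: same trace id, fresh span id, same sampling bit."""
    span_id = secrets.token_hex(8)  # 8 random bytes -> 16 hex chars
    flags = "01" if parent.sampled else "00"
    return f"00-{parent.trace_id}-{span_id}-{flags}"
```

If the trace ID changes between two services in your logs, the hop in between is starting a new trace instead of propagating the incoming header.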

Version mismatches will eat your weekends. Node.js 18.2.0 breaks your WebAssembly module, Python 3.11 changes the C API your Rust extension uses, and cargo decides to recompile half the internet because someone updated a dependency. Container images help, but they don't prevent the pain when you need to upgrade.
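Containers pin whatever is in the image, but not the base-image tag someone bumps next quarter. A cheap complement is a startup guard that refuses to run on a runtime outside the range you actually tested. A minimal sketch, assuming you record a tested range per runtime; parse_version and check_runtime are hypothetical helpers, and known_bad is meant for specific releases you've been burned by.

```python
import re
from typing import Iterable, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """'v18.2.0' -> (18, 2, 0); 'node --version' output starts with a 'v'."""
    m = re.match(r"v?(\d+(?:\.\d+)*)", text.strip())
    if not m:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in m.group(1).split("."))


def check_runtime(name: str, found: str, minimum: str, below: str,
                  known_bad: Iterable[str] = ()) -> None:
    """Raise at startup if `found` is outside [minimum, below) or explicitly blocklisted.

    Compares integer tuples, never raw strings: as strings, "3.9" sorts after "3.11".
    """
    version = parse_version(found)
    if not parse_version(minimum) <= version < parse_version(below):
        raise RuntimeError(f"{name} {found} is outside the tested range [{minimum}, {below})")
    if version in {parse_version(v) for v in known_bad}:
        raise RuntimeError(f"{name} {found} is on the known-bad list")
```

At startup you might call check_runtime("Python", platform.python_version(), minimum="3.11", below="3.12") before importing your Rust extension, and do the same for Node using the output of node --version. A loud failure at boot beats a segfault three requests later.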

The WebAssembly Promise vs Reality

WebAssembly is supposed to be the universal runtime that solves all your polyglot deployment problems. In practice, it's more like "universal headaches with a side of debugging nightmares."

The good: WASM modules are genuinely isolated. Your Rust image processing code can't corrupt your host's memory or crash your Python host. WASI gives you standardized file I/O and networking. You can theoretically hot-swap modules without downtime.

The ugly: Debugging WASM is like trying to fix a car with a blindfold on. Stack traces are useless. Performance profiling tools don't work. When something goes wrong, you'll spend hours trying to figure out if the problem is in your Rust code, the WASM compiler, the runtime, or somewhere in between.

The really ugly: Every WASM runtime has quirks. Wasmtime behaves differently from the browser engines. Your code works fine in Node.js but crashes when a Python host loads it. Memory leaks in WASM modules are a nightmare to track down because the host language's profiling tools can't see inside the WASM boundary.

Look, WASM works for some stuff (like running the same Rust algorithm in browsers and servers), but it's not a magic bullet for polyglot complexity. You'll trade language-specific deployment complexity for universal debugging complexity.

So now that you know what you're getting into, let's talk about what happens when you actually try to build something real with this polyglot nightmare.