If you've ever tried to build a text editor or data processor with WebAssembly, you know the pain. String handling was so bad that a lot of people just gave up and went back to plain JavaScript. WebAssembly 3.0's JS string builtins finally fix the nightmare that was passing text between JS and WASM.

The Old Way Was Fucking Terrible

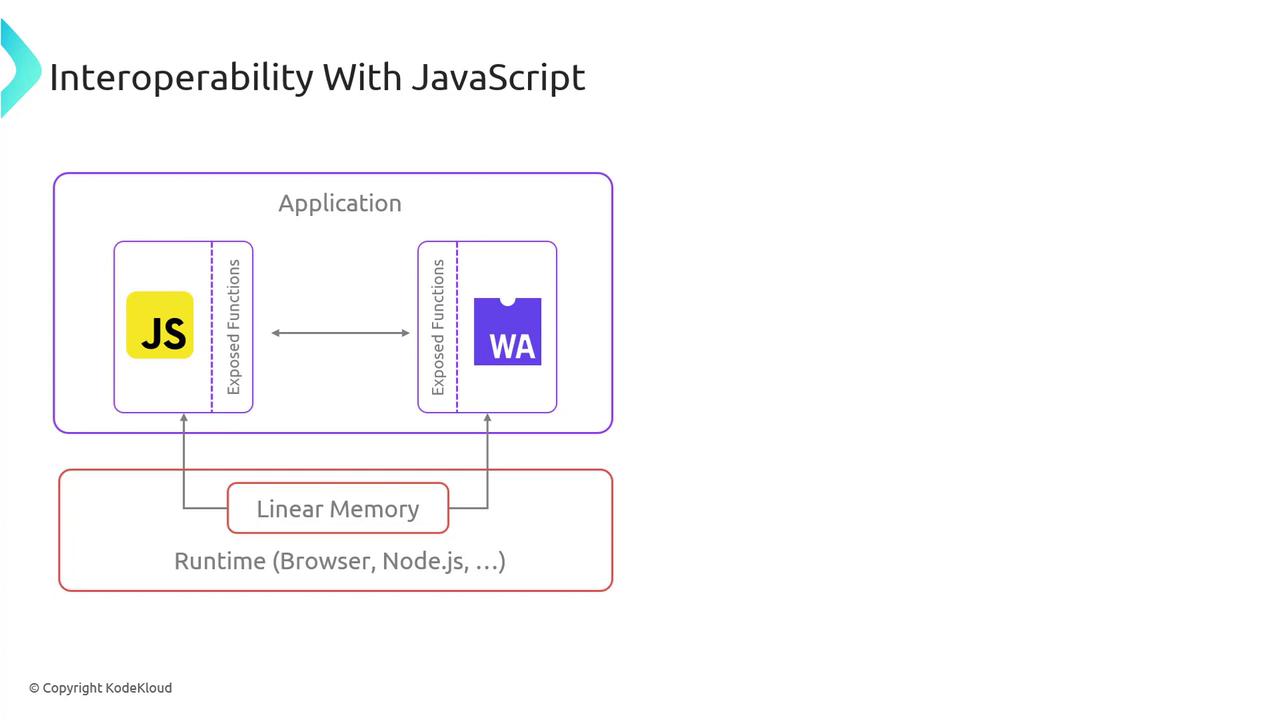

Before WebAssembly 3.0, JS strings came into WASM as externref values: opaque handles you could pass around but couldn't actually read. You had three equally shitty options:

Copy everything into linear memory: This doubled your memory usage and meant transcoding every string from JS's UTF-16 to UTF-8 on the way in, then back again on the way out. Our text editor was using 2GB just to process a 1GB file. Ridiculous.

Call back to JavaScript for everything: Every string operation, even getting a length, became a slow-ass call across the WASM/JS boundary. You might as well not use WebAssembly at all.

Split processing between JS and WASM: You end up copying data back and forth constantly, which makes everything slower than just using JavaScript.
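To make the cost of the first option concrete, here's a TypeScript sketch (not our editor's actual code) of roughly what the JS side of a copy-in/copy-out bridge looks like. A plain `Uint8Array` stands in for WASM linear memory, and `copyIn`/`copyOut` are hypothetical helper names. Every string gets transcoded to UTF-8 and copied on the way in, and decoded into a brand-new JS string on the way out:

```typescript
// Hypothetical model of the old "copy into linear memory" approach.
// A Uint8Array stands in for the module's linear memory (one 64 KiB Wasm page).
const linearMemory = new Uint8Array(64 * 1024);
const encoder = new TextEncoder();
const decoder = new TextDecoder();

// JS -> Wasm: transcode UTF-16 to UTF-8, then copy the bytes into memory.
// Returns the byte length, which the Wasm side needs alongside the offset.
function copyIn(text: string, offset: number): number {
  const bytes = encoder.encode(text); // first allocation + transcoding
  if (offset + bytes.length > linearMemory.length) {
    throw new RangeError("string does not fit in linear memory");
  }
  linearMemory.set(bytes, offset); // second copy, now living in "Wasm" memory
  return bytes.length;
}

// Wasm -> JS: decode the bytes back into a brand-new JS string.
function copyOut(offset: number, byteLength: number): string {
  return decoder.decode(linearMemory.subarray(offset, offset + byteLength));
}

const original = "na\u00EFve \u{1F600}"; // "naïve" plus an emoji
const byteLength = copyIn(original, 0);
const roundTripped = copyOut(0, byteLength);
console.log(original.length, byteLength, roundTripped === original); // 8 11 true
```

Note that the JS string is 8 UTF-16 code units but 11 UTF-8 bytes, and the original string still exists on the JS side the whole time, which is where the doubled memory comes from.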

I spent like 2 weeks fucking around with a CSV parser, trying to get decent performance. Finally gave up and rewrote the whole thing in JS. Made WebAssembly feel like a waste of time.

What Actually Works Now

WebAssembly 3.0 adds builtin string functions that your module imports from the `wasm:js-string` namespace and that operate on JS strings directly. No more marshaling bullshit, no more copying everything around.

You get functions to:

Read string properties: Get the length, character codes, and substrings without copying anything. Our syntax highlighter went from taking forever to actually being usable.

Compare strings properly: Native equality and ordering checks that work on JS strings directly instead of converting everything to byte arrays first.

Build new strings efficiently: Concatenation, substrings, and strings built from character codes, without a round trip through your own JS glue code.

Handle UTF-16 correctly: Direct access to the UTF-16 code units JS strings are made of (plus whole code points via `codePointAt`), without the UTF-8 conversion nightmare that used to break on emoji.
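Here's roughly how the wiring looks from the JS side. The builtins are imported from the `wasm:js-string` namespace under names like `length`, `charCodeAt`, `substring`, `concat`, `equals`, and `compare`. As we read the proposal, an engine with support fills these in when you pass `{ builtins: ["js-string"] }` as compile options to `WebAssembly.compile` or `WebAssembly.instantiate`; without that, the same imports can be satisfied by ordinary JS functions. The sketch below is a hypothetical polyfill for a subset of them. It approximates the proposed semantics, with a thrown error standing in for a WASM trap, and `jsStringPolyfill` is just a name we made up:

```typescript
// Hypothetical polyfill for a subset of the "wasm:js-string" builtins.
// Semantics approximate our reading of the JS String Builtins proposal;
// a Wasm trap is modelled as a thrown Error.

function trap(reason: string): never {
  throw new Error(`wasm trap: ${reason}`);
}

function asString(value: unknown): string {
  if (typeof value !== "string") trap("expected a JS string");
  return value;
}

const jsStringPolyfill = {
  // test: 1 if the externref is a JS string, else 0 (never traps).
  test(value: unknown): number {
    return typeof value === "string" ? 1 : 0;
  },
  // cast: return the value unchanged, trapping on anything that isn't a string.
  cast(value: unknown): string {
    return asString(value);
  },
  // length: counted in UTF-16 code units, exactly like String.prototype.length.
  length(s: unknown): number {
    return asString(s).length;
  },
  charCodeAt(s: unknown, index: number): number {
    const str = asString(s);
    const i = index >>> 0; // treat the i32 index as unsigned so negatives fail the bounds check
    if (i >= str.length) trap("index out of bounds");
    return str.charCodeAt(i);
  },
  substring(s: unknown, start: number, end: number): string {
    const str = asString(s);
    const from = start >>> 0;
    const to = end >>> 0;
    // Out-of-range or inverted bounds yield "" rather than trapping.
    if (from > str.length || to > str.length || to < from) return "";
    return str.substring(from, to);
  },
  concat(a: unknown, b: unknown): string {
    return asString(a) + asString(b);
  },
  equals(a: unknown, b: unknown): number {
    // equals also accepts null on either side.
    if (a !== null && typeof a !== "string") trap("expected a JS string or null");
    if (b !== null && typeof b !== "string") trap("expected a JS string or null");
    return a === b ? 1 : 0;
  },
  compare(a: unknown, b: unknown): number {
    const x = asString(a);
    const y = asString(b);
    if (x === y) return 0;
    return x < y ? -1 : 1; // JS orders strings by UTF-16 code unit
  },
};

// With engine support (our reading of the JS API), no polyfill is needed:
//   WebAssembly.instantiate(bytes, {}, { builtins: ["js-string"] })
// Without it, the module's imports can be supplied by hand:
//   WebAssembly.instantiate(bytes, { "wasm:js-string": jsStringPolyfill })
```

As far as we can tell, that's the nice part of the design: the same module runs everywhere, and it just gets faster where the engine recognizes the imports.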

How Much Faster Is It Really?

Our text processing pipeline got way faster. Haven't done any formal benchmarks because who has time for that shit, but the difference is obvious when you're not constantly copying strings around.

The wins come from:

- No malloc/free dance: String data stays in JS's optimized storage. No more allocating WASM memory just to hold text temporarily.

- No UTF-8/UTF-16 conversion hell: Remember spending hours debugging why emoji broke your parser? That's gone.

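- Actual function calls instead of JS callbacks: The engine knows exactly what these imports do, so it can compile calls to them into fast inline code instead of taking the generic WASM-to-JS call path. Calling back into JS every time you needed a string's length was killing performance.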
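Here's the emoji problem in miniature, as a small TypeScript illustration (the helper and variable names are made up for the example). JS strings, and the string builtins, index in UTF-16 code units, while a UTF-8 copy in linear memory indexes in bytes, so any offset computed past an emoji points somewhere else:

```typescript
// Illustration: the same delimiter sits at different offsets depending on
// whether you count UTF-16 code units (JS strings, wasm:js-string builtins)
// or UTF-8 bytes (a linear-memory copy made with TextEncoder).
const encoder = new TextEncoder();

// Hypothetical helper: number of UTF-8 bytes needed for everything before utf16Index.
function utf8ByteOffset(text: string, utf16Index: number): number {
  return encoder.encode(text.slice(0, utf16Index)).length;
}

const line = "\u{1F600},hello"; // an emoji followed by a CSV field
const commaUnits = line.indexOf(","); // offset in UTF-16 code units
const commaBytes = utf8ByteOffset(line, commaUnits); // offset in UTF-8 bytes

// Correct: slice the JS string with a UTF-16 offset.
const fieldOk = line.slice(commaUnits + 1);
// The classic bug: a byte offset computed on the Wasm side, applied to the JS string.
const fieldBroken = line.slice(commaBytes + 1);

console.log(commaUnits, commaBytes, fieldOk, fieldBroken); // 2 4 hello llo
```

When the WASM side works on the JS string itself through the builtins, there's only one kind of offset, so this whole class of bug goes away.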

The bigger your strings, the bigger the improvement, because the copying and transcoding you're skipping scales with string size. Our log processor used to choke on large files; now it doesn't die immediately.

Security That Doesn't Get in Your Way

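The builtins keep WebAssembly's security model intact. JS strings are immutable, so WASM can read them but never write into them; a "modified" string is always a new one created through the builtins, like concat or substring. Pass something that isn't a string where one is expected and the builtin traps instead of guessing.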

This means WASM modules can't fuck up your JavaScript strings or access shit they shouldn't see. The interface is locked down but actually useful, unlike the old externref approach that was secure but useless.

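JS engines keep their own string optimizations (V8, for example, represents concatenated strings as ropes and stores Latin-1 text in a compact one-byte layout) without exposing those internals to WASM. It just works without making you choose between security and performance, which is nice for once.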