Building production-scale embedding systems is where shit gets real. I've spent the last 18 months building these systems for a fintech with 50M+ users and learned these lessons the hard way. Your embedding costs will spiral out of control faster than you think.

Multi-Tier Vector Architecture (Or: How I Learned to Stop Worrying and Love My GCP Bill)

Pattern: Hierarchical Embedding Storage

Your first instinct will be to dump everything into Vertex AI Vector Search. Don't. Our Vector Search bill hit $18K in month two before we figured this out.

Here's what actually works in production:

- Hot Tier: Vector Search for real-time queries (<100ms latency) - only your most accessed 10% of embeddings

- Warm Tier: BigQuery Vector Search for analytical workloads - cheaper but 1-5 second latency will piss off users

- Cold Tier: Cloud Storage with compressed embeddings - batch retrieval takes 10+ seconds but costs pennies

This tiered approach cut our costs from $18K to around $4K monthly. The savings are real, but implementing the tier management logic took 6 weeks longer than planned.
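Most of that tier management logic is bookkeeping. Here's a minimal sketch of just the placement decision, assuming you keep a per-embedding access counter over a rolling window (the `accesses_last_30d` field, the 30-day window, and the rule that never-accessed embeddings go cold are illustrative assumptions, not our exact setup). Actually moving vectors between Vector Search, BigQuery, and Cloud Storage is not shown.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    HOT = "vector_search"    # Vertex AI Vector Search, real-time queries
    WARM = "bigquery"        # BigQuery vector search, analytical workloads
    COLD = "cloud_storage"   # compressed embeddings in Cloud Storage


@dataclass
class EmbeddingStats:
    embedding_id: str
    accesses_last_30d: int  # hypothetical counter you have to maintain yourself


def assign_tiers(stats: list[EmbeddingStats], hot_fraction: float = 0.10) -> dict[str, Tier]:
    """Top `hot_fraction` by access count -> HOT, other accessed -> WARM, never accessed -> COLD."""
    ranked = sorted(stats, key=lambda s: s.accesses_last_30d, reverse=True)
    hot_count = int(len(ranked) * hot_fraction)
    tiers: dict[str, Tier] = {}
    for rank, s in enumerate(ranked):
        if rank < hot_count and s.accesses_last_30d > 0:
            tiers[s.embedding_id] = Tier.HOT
        elif s.accesses_last_30d > 0:
            tiers[s.embedding_id] = Tier.WARM
        else:
            tiers[s.embedding_id] = Tier.COLD
    return tiers
```

One option is to run this as a nightly job and diff the result against current placement, so you only migrate embeddings whose tier actually changed.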

Federated Vector Systems (Because Politics)

Pattern: Domain-Specific Embedding Clusters

Every large company has departments that refuse to share infrastructure. Legal wants their own everything, marketing needs multilingual support, and compliance demands regional data residency. Fighting this is pointless - embrace the chaos.

Document Embeddings (Legal Dept)         ┐
├── Vertex AI text-embedding-005         │
├── Dedicated Vector Search Index        │ ──── Federated Query Router
└── Regional Data Residency              │
                                         │
Product Embeddings (Marketing)           │
├── Gemini Embedding (multilingual)      │
├── Pinecone Integration                 │
└── Global Distribution                  ┘

The federated approach costs 3x more than a unified system, but trying to convince legal to use shared infrastructure is a losing battle. Build the router layer behind Cloud Load Balancing and accept that politics trumps efficiency.
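Cloud Load Balancing can split traffic by host or path, but the per-department rules (which index serves which domain, which regions may see legal data) still end up in application code. A minimal sketch of that router layer, assuming each department's store is wrapped as a plain search callable; the domain names, regions, and `DomainBackend` shape are hypothetical:

```python
from dataclasses import dataclass
from typing import Callable

# A backend takes (query_vector, top_k) and returns [(doc_id, score), ...].
# Real ones would wrap Vector Search, Pinecone, etc.; here they're plain callables.
SearchFn = Callable[[list[float], int], list[tuple[str, float]]]


@dataclass
class DomainBackend:
    search: SearchFn
    allowed_regions: set[str]  # data-residency constraint for this domain


class FederatedQueryRouter:
    def __init__(self) -> None:
        self._backends: dict[str, DomainBackend] = {}

    def register(self, domain: str, backend: DomainBackend) -> None:
        self._backends[domain] = backend

    def query(self, domain: str, vector: list[float], caller_region: str, top_k: int = 10):
        backend = self._backends.get(domain)
        if backend is None:
            raise KeyError(f"no vector backend registered for domain {domain!r}")
        if caller_region not in backend.allowed_regions:
            # Residency rules: refuse instead of silently serving data cross-region
            raise PermissionError(f"{domain} data cannot be served to {caller_region}")
        return backend.search(vector, top_k)
```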

Event-Driven Embedding Pipeline (The Right Way to Go Broke)

Pattern: Reactive Vector Updates

Batch processing sucks because your embeddings get stale. But real-time updates will absolutely murder your API quotas. I learned this when our event-driven pipeline triggered 50,000 embedding calls in 10 minutes after a content import bug. $800 gone in one afternoon.

Here's the pipeline that works (with proper rate limiting):

- Document Change Events → Cloud Pub/Sub (set up dead letter queues or you'll lose events)

- Embedding Generation → Cloud Functions with text-embedding-005 (batch size: 25 max)

- Vector Updates → Atomic upserts to vector databases (use transactions or accept data corruption)

- Cache Invalidation → Redis cache warming (Redis will go down at 2AM, plan accordingly)
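A sketch of the batching step inside the embedding function, assuming Pub/Sub messages have already been decoded into dicts with hypothetical `doc_id` and `version` fields. It collapses repeated edits of the same document into one, caps batches at 25, and defers anything beyond a per-run limit instead of embedding it, which bounds how many calls a runaway import can trigger in one go. The 40-batch cap is an arbitrary illustration, and re-queueing the deferred events is left out.

```python
from itertools import islice
from typing import Iterable, Iterator

MAX_BATCH = 25            # batch size cap from the pipeline above
MAX_BATCHES_PER_RUN = 40  # hypothetical blast-radius cap (1,000 docs per run)


def dedupe_latest(events: Iterable[dict]) -> list[dict]:
    """Keep only the newest change event per document ID."""
    latest: dict[str, dict] = {}
    for event in events:
        prev = latest.get(event["doc_id"])
        if prev is None or event["version"] > prev["version"]:
            latest[event["doc_id"]] = event
    return list(latest.values())


def batches(items: list[dict], size: int = MAX_BATCH) -> Iterator[list[dict]]:
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


def plan_embedding_batches(events: Iterable[dict]) -> tuple[list[list[dict]], list[dict]]:
    """Split deduped events into <=25-doc batches; overflow past the cap is deferred."""
    planned: list[list[dict]] = []
    deferred: list[dict] = []
    for i, chunk in enumerate(batches(dedupe_latest(events))):
        if i < MAX_BATCHES_PER_RUN:
            planned.append(chunk)
        else:
            deferred.extend(chunk)
    return planned, deferred
```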

Critical gotcha: Vertex AI quotas are 600 requests/minute by default. You'll hit this limit immediately in production. Request increases early and expect a 2-3 week approval process.
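While you wait on the quota increase, a client-side limiter keeps a burst from burning through 600 requests/minute in the first few seconds. A minimal token-bucket sketch follows; the class is illustrative and not part of any Google SDK, and running it a bit below the quota (say 540/minute) is a suggested margin, not a documented number.

```python
import threading
import time
from typing import Callable, Optional


class TokenBucket:
    """Refills `rate_per_minute` tokens per minute and allows bursts up to `capacity`."""

    def __init__(self, rate_per_minute: float, capacity: Optional[float] = None,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_minute / 60.0  # tokens per second
        self.capacity = capacity if capacity is not None else rate_per_minute
        self.tokens = self.capacity
        self.clock = clock
        self.last = clock()
        self.lock = threading.Lock()

    def try_acquire(self, n: float = 1.0) -> bool:
        with self.lock:
            now = self.clock()
            # Refill proportionally to elapsed time, never above capacity
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= n:
                self.tokens -= n
                return True
            return False

    def acquire(self, n: float = 1.0) -> None:
        """Block until n tokens are available."""
        while not self.try_acquire(n):
            time.sleep(0.05)
```

The bucket is per process: if Cloud Functions scales out to ten instances, each one gets its own bucket, so either divide the budget by your max instance count or keep the counter somewhere shared like Redis.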

Multi-Model Embedding Strategy (Or: How to Overcomplicate Everything)

Pattern: Ensemble Vector Representations

Using multiple embedding models sounds smart until you're debugging why search results are inconsistent. Each model has different vector dimensions and similarity patterns. We tried this "best of breed" approach and spent 3 months just on the query routing logic.
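The underlying problem: a similarity score only means something between two vectors produced by the same model. A cheap guard, sketched below under the assumption that every stored vector is tagged with the name of the model that produced it, turns a cross-model comparison into an error instead of a silently wrong ranking:

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Embedding:
    model: str                 # e.g. "text-embedding-005"
    values: tuple[float, ...]


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    # Vectors from different models live in unrelated spaces; even if the
    # dimensions happened to match, the score would be meaningless.
    if a.model != b.model:
        raise ValueError(f"cannot compare {a.model} vector with {b.model} vector")
    if len(a.values) != len(b.values):
        raise ValueError("dimension mismatch within the same model")
    dot = sum(x * y for x, y in zip(a.values, b.values))
    norm = math.sqrt(sum(x * x for x in a.values)) * math.sqrt(sum(y * y for y in b.values))
    return dot / norm if norm else 0.0
```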

What actually works:

- text-embedding-005: English content and code documentation (768 dimensions, consistent performance)

- Gemini Embedding: Multilingual content - but 1408 dimensions means storage costs spike 85%

- Fine-tuned embeddings: Domain-specific terminology - takes 2-4 weeks to train properly and costs $500-2000 per model
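The storage penalty is plain arithmetic on raw float32 vectors. The 10M-vector count below is a made-up example, and index structures and metadata add more on top:

```python
BYTES_PER_FLOAT32 = 4


def storage_gb(num_vectors: int, dims: int) -> float:
    """Raw float32 vector storage in GB, ignoring index overhead and metadata."""
    return num_vectors * dims * BYTES_PER_FLOAT32 / 1e9


base = storage_gb(10_000_000, 768)   # text-embedding-005
wide = storage_gb(10_000_000, 1408)  # 1408-dimension model
print(f"{base:.2f} GB vs {wide:.2f} GB (+{wide / base - 1:.0%})")
# prints: 30.72 GB vs 56.32 GB (+83%)
```

Since 1408 / 768 ≈ 1.83, every vector costs about 83% more raw storage, in the same ballpark as the spike above.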

Pro tip: Pick one model and stick with it. The consistency gains from a unified approach outweigh the theoretical benefits of model ensembles.

Fail-Safe Vector Infrastructure (When Everything Goes to Hell)

Pattern: Multi-Region Redundancy with Graceful Degradation

Vector Search will go down. Not if, when. I've seen it fail during GCP regional outages twice in 8 months. Your disaster recovery better be more sophisticated than "restart everything and pray."

What actually keeps you running:

Active-Passive Setup:

- Primary: us-central1 (Vertex AI + Vector Search) - works great until GCP has "networking issues"

- Fallback: us-east1 (cached embeddings + Pinecone as backup) - costs extra but saves your ass

- Degraded Mode: Keyword search with Elasticsearch - users hate it but better than 500 errors
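The active-passive chain above boils down to "try each tier in order and report which one answered". A minimal sketch, assuming each tier is wrapped as a hypothetical callable (primary Vector Search, the us-east1 Pinecone fallback, Elasticsearch keyword search) and, for simplicity, that all of them take the raw query string:

```python
import logging
from typing import Callable, Sequence

logger = logging.getLogger("vector_search")

SearchFn = Callable[[str], list[str]]


def search_with_fallback(query: str, tiers: Sequence[tuple[str, SearchFn]]) -> tuple[str, list[str]]:
    """Try each (name, search_fn) in order; return the first tier that answers."""
    errors = []
    for name, search in tiers:
        try:
            return name, search(query)
        except Exception as exc:  # any backend failure drops us one tier
            logger.warning("tier %s failed: %s", name, exc)
            errors.append((name, exc))
    raise RuntimeError(f"all search tiers failed: {errors}")
```

Returning the tier name lets the UI flag degraded results. In production you'd want a circuit breaker in front of each tier so a dead primary fails fast instead of eating a full timeout on every request.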

Cross-Region Synchronization (The Expensive Parts):

- Storage Transfer Service for embedding backups - $200/month for our 500GB of vectors

- Cloud SQL cross-region replicas for metadata - another $150/month

- Global Load Balancer with health checks - works but takes 45 seconds to failover

Real uptime: 99.7% including maintenance windows. The 0.3% downtime still costs us $50K in lost revenue per incident.
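To put 99.7% in hours: the remaining 0.3% works out to roughly 26 hours a year, or a bit over 2 hours a month.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760
HOURS_PER_MONTH = HOURS_PER_YEAR / 12


def allowed_downtime_hours(availability: float, period_hours: float) -> float:
    return (1 - availability) * period_hours


print(f"{allowed_downtime_hours(0.997, HOURS_PER_YEAR):.1f} h/year")    # prints: 26.3 h/year
print(f"{allowed_downtime_hours(0.997, HOURS_PER_MONTH):.2f} h/month")  # prints: 2.19 h/month
```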

Performance Optimization Patterns (Debugging at 3AM)

Embedding Caching Strategy That Actually Works

Caching reduces API calls by 70-90%, but implementing it properly is a nightmare. This pattern saved our ass when we hit Vertex AI quotas during a traffic spike:

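```python
# Multi-level caching pattern - learned the hard way
import hashlib
import json

import redis
from google.api_core.exceptions import ResourceExhausted
from vertexai.language_models import TextEmbeddingInput, TextEmbeddingModel

MODEL_NAME = "text-embedding-005"
CACHE_TTL_SECONDS = 3600

# Assumes vertexai.init(project=..., location=...) has already run.
model = TextEmbeddingModel.from_pretrained(MODEL_NAME)
redis_client = redis.Redis(host="localhost", port=6379, socket_timeout=0.2)
# embedding_cache_db: your persistent cache (e.g. a Cloud SQL table) exposing
# get(key) -> list[float] | None and store(key, vector); not shown here.


def get_embedding_with_cache(text: str, task_type: str):
    # Stable key: built-in hash() is randomized per process, so its keys
    # can't be shared across workers through Redis or Cloud SQL.
    cache_key = hashlib.sha256(f"{text}:{task_type}:{MODEL_NAME}".encode()).hexdigest()

    # L1: In-memory cache (Redis) - will time out randomly
    try:
        if cached := redis_client.get(cache_key):
            return json.loads(cached)
    except redis.RedisError:  # covers ConnectionError *and* TimeoutError
        pass  # Redis is down again, skip to L2

    # L2: Persistent cache (Cloud SQL) - slower but reliable
    if db_cached := embedding_cache_db.get(cache_key):
        try:
            redis_client.setex(cache_key, CACHE_TTL_SECONDS, json.dumps(db_cached))
        except redis.RedisError:
            pass  # Redis still broken, whatever
        return db_cached

    # L3: Generate new embedding - pray we're under quota
    try:
        result = model.get_embeddings([TextEmbeddingInput(text=text, task_type=task_type)])
    except ResourceExhausted:  # HTTP 429 / RESOURCE_EXHAUSTED
        # You're fucked, return None and handle it upstream
        return None
    embedding = result[0].values

    # Cache at both levels (if they're working)
    embedding_cache_db.store(cache_key, embedding)
    try:
        redis_client.setex(cache_key, CACHE_TTL_SECONDS, json.dumps(embedding))
    except redis.RedisError:
        pass
    return embedding
```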

Batch Processing That Won't Explode

Parallel batching sounds great until you trigger rate limits and everything crashes:

- Batch size: Start with 25 documents - Vertex AI docs lie about the optimal size

- Concurrent requests: 5 max - anything higher triggers RESOURCE_EXHAUSTED errors

- Exponential backoff: Built-in retry with jitter, or you'll DDoS yourself
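A minimal asyncio sketch of those three rules, assuming an async `embed_batch` callable that wraps your embedding client. `RateLimited` is a placeholder exception; with the Vertex AI Python SDK the 429 surfaces as `google.api_core.exceptions.ResourceExhausted`. The retry budget of 6 attempts is an arbitrary choice.

```python
import asyncio
import random
from typing import Awaitable, Callable, Sequence

BATCH_SIZE = 25       # starting point from the list above
MAX_CONCURRENCY = 5   # requests in flight at once
MAX_ATTEMPTS = 6      # hypothetical retry budget per batch


class RateLimited(Exception):
    """Placeholder for whatever your client raises on RESOURCE_EXHAUSTED / HTTP 429."""


async def with_backoff(call: Callable[[], Awaitable[list]],
                       base_delay: float = 1.0, max_delay: float = 60.0) -> list:
    attempt = 0
    while True:
        try:
            return await call()
        except RateLimited:
            attempt += 1
            if attempt >= MAX_ATTEMPTS:
                raise
            # Full jitter: sleep a random time between 0 and the exponential cap
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            await asyncio.sleep(random.uniform(0, cap))


async def embed_all(texts: Sequence[str],
                    embed_batch: Callable[[list[str]], Awaitable[list]],
                    base_delay: float = 1.0) -> list:
    sem = asyncio.Semaphore(MAX_CONCURRENCY)

    async def run(batch: list[str]) -> list:
        async with sem:  # backoff sleeps keep the slot, throttling the whole run
            return await with_backoff(lambda: embed_batch(batch), base_delay)

    batches = [list(texts[i:i + BATCH_SIZE]) for i in range(0, len(texts), BATCH_SIZE)]
    results = await asyncio.gather(*(run(b) for b in batches))
    # gather preserves order, so the flattened output lines up with `texts`
    return [vec for batch_result in results for vec in batch_result]
```

The retry sleep happens while the batch still holds its semaphore slot, which deliberately slows the whole pipeline down when the API pushes back instead of letting other batches pile on.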

Reality check: Processing 1M documents takes 6-8 hours, not 4. Budget accordingly.

These patterns work in production, but debugging embedding pipelines at 3AM when everything's broken is still a special kind of hell.

Essential References for Production Embeddings:

- Vertex AI Text Embeddings Documentation

- Vector Search Setup Guide

- Google Cloud Storage Pricing

- BigQuery Vector Search

- Cloud Functions for Events

- Redis Memorystore Configuration

- Pinecone Vector Database

- Elasticsearch Vector Search

- Vertex AI Quotas Reference

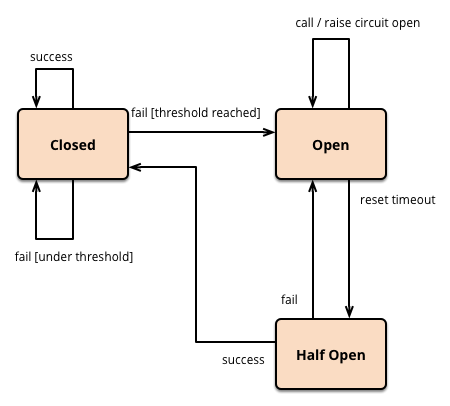

- Circuit Breaker Pattern

- Google Cloud Load Balancing

- Cloud SQL Cross-Region Replicas