Building voice apps with the OpenAI Realtime API looks like a weekend project until you hit mobile Safari. What starts as "just send audio over WebSocket" becomes debugging why your app works perfectly on Chrome but sounds like Karen is talking through a fish tank on her iPhone 11. WebSocket audio debugging will eat your soul and shit out your sanity, then come back for seconds.

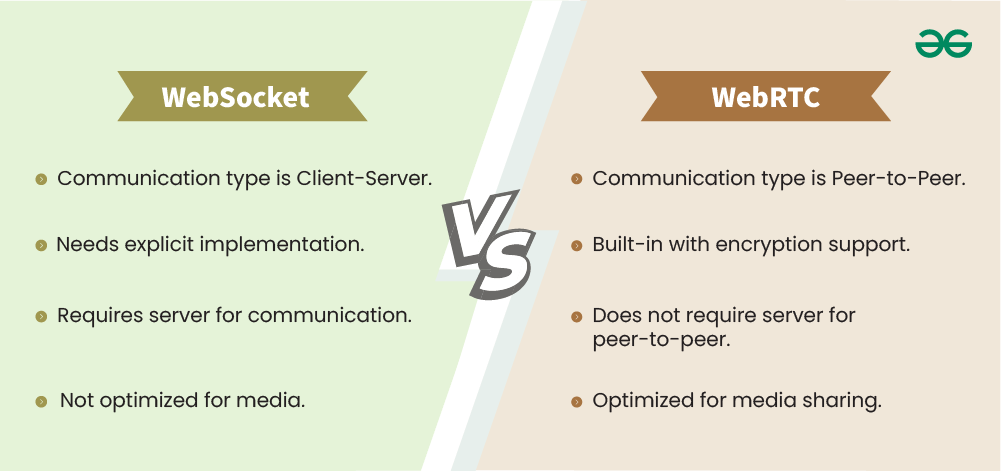

WebSocket vs WebRTC: Pick your nightmare

WebSocket Implementation (Looks easy, isn't):

- ✅ Simple to start - just send JSON over a connection

- ✅ Server logs actually make sense when debugging

- ❌ Audio format conversion is difficult

- ❌ Mobile browsers kill connections frequently

- ❌ iOS Safari audio permissions take forever

WebRTC Implementation (Complex as hell but works):

- ✅ Browsers actually handle audio properly for once

- ✅ Mobile doesn't kill connections as aggressively

- ✅ Echo cancellation works without hacks

- ❌ Setting up STUN/TURN servers is a pain

- ❌ Debugging WebRTC feels like archaeology

WebRTC support landed in December 2024, a couple of months after the API launched, and the August 2025 general-availability release pushed it as the way to connect from browsers. Thank fucking god. The gpt-realtime-2025-08-28 release is the first one that doesn't hate mobile browsers.

The audio format conversion nightmare

The Realtime API wants PCM16 at 24kHz, but browsers shit out whatever format they feel like. Chrome Desktop usually gives you 48kHz float32, Safari does 44.1kHz because Apple hates standards, and mobile varies by device like it's playing roulette.

Took me 3 weeks to figure out why my app worked fine in development but made users sound like they were talking through a fish tank in production. Turns out it only happened on iPhone 11 running iOS 14.2.1 with AirPods connected. Specific as hell, impossible to reproduce locally.

// What OpenAI actually wants

const targetFormat = {

sampleRate: 24000, // Always 24kHz, no exceptions

channels: 1, // Mono only

bitsPerSample: 16, // PCM16 format

encoding: 'pcm16'

};

// What you actually get from browsers (good luck!)

const realityCheck = {

chrome: { sampleRate: 48000, format: 'float32' }, // At least consistent

safari: { sampleRate: 44100, format: 'float32' }, // Different, naturally

firefox: { sampleRate: 48000, format: 'float32' }, // Same as Chrome, miraculously

mobile: { sampleRate: 'LOL who knows', format: 'depends on moon phase' }

};
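Before converting anything, it helps to ask the browser what it actually handed you instead of trusting a table like the one above. Here's a minimal sketch, assuming you already have an AudioContext and a MediaStream from getUserMedia. `getCaptureFormat` and `TARGET_SAMPLE_RATE` are names I made up for illustration, and some browsers leave `sampleRate` or `channelCount` out of `getSettings()` entirely, hence the null fallbacks.

```javascript
// Hypothetical helper: report what the browser actually gave us.
const TARGET_SAMPLE_RATE = 24000; // the PCM16 rate the Realtime API wants

function getCaptureFormat(audioContext, mediaStream) {
  const [track] = mediaStream.getAudioTracks();
  // getSettings() may omit sampleRate/channelCount on some browsers
  const settings = track && typeof track.getSettings === 'function'
    ? track.getSettings()
    : {};
  const contextSampleRate = audioContext.sampleRate;
  return {
    contextSampleRate,
    trackSampleRate: settings.sampleRate ?? null,
    channelCount: settings.channelCount ?? null,
    // Anything that isn't already 24kHz mono needs work before sending
    needsResample: contextSampleRate !== TARGET_SAMPLE_RATE,
    needsDownmix: (settings.channelCount ?? 1) > 1,
  };
}
```

Logging this object once per session is cheap, and it's the first thing you'll want when a user reports fish-tank audio.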

Format conversion that actually works (after 50 Stack Overflow tabs and 2 mental breakdowns):

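class AudioConverter {

constructor(sourceSampleRate) {

// Rate the browser actually captured at, e.g. audioContext.sampleRate

this.sourceSampleRate = sourceSampleRate;

}

convertFloat32ToPCM16(float32Array, targetSampleRate = 24000) {

// Resampling hell - 3 hours of my life I'll never get back

// (resample() is not shown here; you have to bring your own)

const resampled = this.resample(float32Array, this.sourceSampleRate, targetSampleRate);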

// Float32 to int16 conversion - "simple" my ass

const pcm16 = new Int16Array(resampled.length);

for (let i = 0; i < resampled.length; i++) {

// Don't clamp this and enjoy destroying your eardrums

const clamped = Math.max(-1, Math.min(1, resampled[i]));

pcm16[i] = Math.round(clamped * 32767);

}

return pcm16;

}

// Base64 encoding because the Realtime API takes audio as base64 strings inside JSON events

encodeToBase64(pcm16Array) {

const buffer = new ArrayBuffer(pcm16Array.length * 2);

const view = new DataView(buffer);

for (let i = 0; i < pcm16Array.length; i++) {

view.setInt16(i * 2, pcm16Array[i], true); // little-endian or die

}

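// Convert to base64 for WebSocket transmission, in chunks:

// spreading a big buffer into fromCharCode blows the call stack

const bytes = new Uint8Array(buffer);

let binary = '';

for (let i = 0; i < bytes.length; i += 0x8000) {

binary += String.fromCharCode.apply(null, bytes.subarray(i, i + 0x8000));

}

return btoa(binary);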

}

}
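The class above calls `this.resample()` but never defines it. Here's a minimal sketch of one way to fill that hole, using plain linear interpolation. This is my stand-in, not necessarily what the original code used, and `resampleLinear` is a made-up name. It has no low-pass filter, so going from 48kHz down to 24kHz can alias on high-frequency content. It's usually tolerable for speech, but treat it as a starting point.

```javascript
// Hypothetical resampler: linear interpolation, no anti-aliasing filter.
function resampleLinear(input, fromRate, toRate) {
  if (fromRate === toRate) return Float32Array.from(input);

  // Number of output samples covering the same duration as the input
  const outLength = Math.floor((input.length * toRate) / fromRate);
  const ratio = fromRate / toRate; // input samples per output sample
  const output = new Float32Array(outLength);

  for (let i = 0; i < outLength; i++) {
    const pos = i * ratio;                  // fractional position in input
    const left = Math.floor(pos);
    const right = Math.min(left + 1, input.length - 1);
    const frac = pos - left;
    // Blend the two nearest input samples
    output[i] = input[left] * (1 - frac) + input[right] * frac;
  }
  return output;
}
```

To wire it into the converter, one option is `AudioConverter.prototype.resample = resampleLinear;`. If the aliasing bothers you, resampling through an `OfflineAudioContext` is the heavier but cleaner route.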

Browser-specific pain points and workarounds

Chrome Desktop (Actually works, shocking!)

Chrome is the only browser that doesn't actively hate developers. It still has quirks that'll ruin your Tuesday.

// Chrome config that doesn't completely suck

const chromeConfig = {

audio: {

echoCancellation: true,

noiseSuppression: true,

autoGainControl: false, // Turn this off or sound goes to shit

sampleRate: 48000

}

};

// Chrome reconnection configuration

const reconnectConfig = {

maxRetries: 10,

baseDelay: 1000,

maxDelay: 30000 // Usually reconnects quickly, sometimes takes longer

};
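That config only names the limits. It doesn't say how to space the retries. One common way to use it is exponential backoff with jitter, so a thousand phones that lost WiFi at the same moment don't all reconnect on the same tick. The jitter is my addition, not something from the config above, and `backoffDelay` and `shouldRetry` are hypothetical helper names. The `random` parameter exists only so the function can be tested deterministically.

```javascript
// Hypothetical helpers built around a config shaped like reconnectConfig.
function backoffDelay(attempt, config, random = Math.random) {
  const { baseDelay, maxDelay } = config;
  // Exponential growth: baseDelay, 2x, 4x, ... capped at maxDelay
  const ceiling = Math.min(baseDelay * 2 ** attempt, maxDelay);
  // "Equal jitter": keep half the delay fixed, randomize the other half
  const half = ceiling / 2;
  return Math.floor(half + random() * half);
}

function shouldRetry(attempt, config) {
  return attempt < config.maxRetries;
}
```

With `baseDelay: 1000` and `maxDelay: 30000`, the first retry waits somewhere between 500ms and 1s, and from the fifth retry on the wait sits between 15s and 30s.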

Safari Desktop/iOS audio handling

Safari audio permissions are more unpredictable than the weather in Seattle. Sometimes they work instantly, sometimes Safari sits there for 45 seconds pretending to think about it, sometimes the permission dialog never appears and you're left wondering if Safari is actually broken or just fucking with you personally.

// Safari permission dance that may or may not work

class SafariAudioHandler {

async initializeAudio() {

// Safari needs hand-holding for everything

const AudioContext = window.AudioContext || window.webkitAudioContext;

this.audioContext = new AudioContext({

sampleRate: 44100 // Safari throws tantrums with other rates

});

// Safari: "No audio until user clicks something", so call initializeAudio() from a click/tap handler

if (this.audioContext.state === 'suspended') {

await this.audioContext.resume(); // Please work this time

}

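// iOS Safari requires longer permission timeout

// (iPadOS 13+ reports itself as a Mac, hence the touch-point check)

const isSafariMobile = /iPad|iPhone|iPod/.test(navigator.userAgent) ||

(navigator.platform === 'MacIntel' && navigator.maxTouchPoints > 1);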

const permissionTimeout = isSafariMobile ? 15000 : 5000;

return this.requestMicrophoneAccess(permissionTimeout);

}

}
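The handler above ends by calling `requestMicrophoneAccess(permissionTimeout)`, which is never shown. Here's a minimal sketch of what it could look like, written as standalone functions. The names `withTimeout` and `defaultGetUserMedia`, and the injectable `getUserMedia` parameter, are my assumptions for illustration. Note that a timeout doesn't dismiss Safari's prompt. If the user says yes after you've given up, you get a live mic you no longer want, so the sketch stops those tracks.

```javascript
// Hypothetical default: the real browser API, looked up lazily.
const defaultGetUserMedia = (constraints) =>
  navigator.mediaDevices.getUserMedia(constraints);

// Reject if `promise` hasn't settled within `ms` milliseconds.
function withTimeout(promise, ms, message) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(message)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

function requestMicrophoneAccess(timeoutMs, getUserMedia = defaultGetUserMedia) {
  let gaveUp = false;
  const pending = getUserMedia({ audio: true });

  // If permission arrives after we gave up, release the mic right away.
  pending.then(
    (stream) => { if (gaveUp) stream.getTracks().forEach((t) => t.stop()); },
    () => {} // a denial is already reported through the returned promise
  );

  return withTimeout(pending, timeoutMs, `No microphone permission after ${timeoutMs}ms`)
    .catch((err) => { gaveUp = true; throw err; });
}
```

A caller can then tell "user said no" (a `NotAllowedError` from the browser) apart from "Safari never answered" (the timeout error) and show different recovery UI for each.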

Mobile Implementation challenges

React Native Integration (abandon hope):

React Native audio makes grown developers cry. iOS kills WebSocket connections the nanosecond a user switches apps, Android randomly switches audio routes mid-conversation because fuck consistency, and both platforms conspire to make your life miserable in increasingly creative ways.

// React Native nightmare fuel

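import { AppState } from 'react-native'; // used by handleBackgroundState below

import RNSoundLevel from 'react-native-sound-level'; // default export, not a named one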

class MobileRealtimeClient {

async initializeMobile() {

// Permissions dance - prepare for rejection

const hasPermissions = await this.requestAudioPermissions();

if (!hasPermissions) {

throw new Error('User said no, app is now useless');

}

// Native audio processing - works 70% of the time

// (NativeAudioProcessor is a stand-in for whatever native audio module you use)

this.audioProcessor = new NativeAudioProcessor({

sampleRate: 24000, // Will probably get 44100 anyway

channels: 1, // Pray for mono

bitDepth: 16 // Fingers crossed

});

return this.connectWebSocket(); // Good luck

}

handleBackgroundState() {

// Mobile OS: "Background app? KILL THE AUDIO!"

AppState.addEventListener('change', (nextAppState) => {

if (nextAppState === 'background') {

this.pauseAudioProcessing(); // Audio dies anyway

} else if (nextAppState === 'active') {

this.resumeAudioProcessing(); // Maybe works

}

});

}

}
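One gap in the background handler above: when the app comes back to `active`, it resumes audio without checking whether the socket survived. Since iOS tends to kill the connection on app switch, it's worth deciding between "resume" and "reconnect" explicitly. Here's a minimal sketch of that decision as a pure function. `nextAudioAction` and the action strings are hypothetical names, and treating `inactive` as a no-op is a judgment call on my part.

```javascript
const WS_OPEN = 1; // same value as WebSocket.OPEN

// Hypothetical decision helper for an AppState 'change' listener.
function nextAudioAction(nextAppState, socketReadyState) {
  if (nextAppState === 'background') {
    return 'pause';
  }
  if (nextAppState === 'active') {
    // The socket may have died while we were away; don't assume it's alive
    return socketReadyState === WS_OPEN ? 'resume' : 'reconnect';
  }
  // 'inactive' (iOS transitions, incoming calls): leave things alone
  return 'none';
}
```

Inside the listener you would call `nextAudioAction(nextAppState, this.ws.readyState)` and branch on the result, which also makes the logic testable without a phone in your hand.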

Connection reliability (because everything will break)

Reconnection logic that assumes the worst:

Here's the thing about WebSocket connections - they will drop. Not if, when. Mobile browsers will kill them when users switch apps, WiFi will hiccup, and your connection will die at the worst possible moment.

class ReliableWebSocketClient {

constructor(apiKey) {

this.apiKey = apiKey;

this.reconnectAttempts = 0;

this.maxReconnects = 10; // Usually gives up before this

this.isIntentionallyClosed = false;

this.connectionDeaths = 0; // For debugging your sanity

}

connect() {

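// Headers as a third argument only work in Node's ws package.

// The browser WebSocket constructor takes (url, protocols) and nothing else,

// so run this server-side and keep the API key out of the browser.

this.ws = new WebSocket(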

`wss://api.openai.com/v1/realtime?model=gpt-realtime`,

[],

{

headers: {

'Authorization': `Bearer ${this.apiKey}`,

'OpenAI-Beta': 'realtime=v1'

}

}

);

this.setupEventHandlers();

}

setupEventHandlers() {

this.ws.onopen = () => {

console.log('Connected to OpenAI (miracle!)');

this.reconnectAttempts = 0;

this.startHeartbeat();

};

this.ws.onclose = (event) => {

this.connectionDeaths++;

this.stopHeartbeat();

console.log(`Connection died #${this.connectionDeaths}. Code: ${event.code}`);

if (!this.isIntentionallyClosed && this.reconnectAttempts < this.maxReconnects) {

const delay = Math.min(1000 * Math.pow(2, this.reconnectAttempts), 30000);

console.log(`Reconnecting in ${delay}ms... (attempt ${this.reconnectAttempts + 1})`);

setTimeout(() => {

this.reconnectAttempts++;

this.connect(); // Here we go again

}, delay);

} else {

console.log('Giving up. Connection is cursed.');

}

};

this.ws.onerror = (error) => {

console.error('WebSocket error (surprise!):', error);

};

}

startHeartbeat() {

// Keep connection alive or browsers will kill it

this.heartbeatInterval = setInterval(() => {

if (this.ws.readyState === WebSocket.OPEN) {

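// The Realtime API has no documented 'ping' client event, so a JSON ping may come back as an error event.

// Node's ws package can send a protocol-level ping instead; browsers can't.

if (typeof this.ws.ping === 'function') {

this.ws.ping();

} else {

this.ws.send(JSON.stringify({ type: 'ping' }));

}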

}

}, 30000); // 30 seconds - any longer and mobile kills it

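}

stopHeartbeat() {

// onclose calls this; without it the old timer keeps firing after every reconnect

clearInterval(this.heartbeatInterval);

}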

}
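The client above connects and reconnects but never actually sends audio. Here's a minimal sketch of that last step, assuming audio goes out as `input_audio_buffer.append` events with a base64 `audio` field, which is how the Realtime API's client events describe it. The helper names (`pcm16ToBytes`, `bytesToBase64`, `buildAppendEvent`, `sendAudioChunk`) are made up for illustration, and silently dropping chunks while the socket is down is a policy assumption. You may prefer to buffer them.

```javascript
// Write samples as little-endian bytes regardless of platform endianness.
function pcm16ToBytes(pcm16) {
  const bytes = new Uint8Array(pcm16.length * 2);
  const view = new DataView(bytes.buffer);
  for (let i = 0; i < pcm16.length; i++) {
    view.setInt16(i * 2, pcm16[i], true);
  }
  return bytes;
}

// Chunked so large buffers don't overflow the call stack.
function bytesToBase64(bytes) {
  const CHUNK = 0x8000;
  let binary = '';
  for (let i = 0; i < bytes.length; i += CHUNK) {
    binary += String.fromCharCode.apply(null, bytes.subarray(i, i + CHUNK));
  }
  return btoa(binary);
}

function buildAppendEvent(pcm16) {
  return JSON.stringify({
    type: 'input_audio_buffer.append',
    audio: bytesToBase64(pcm16ToBytes(pcm16)),
  });
}

// Returns false (and drops the chunk) if the socket isn't open.
function sendAudioChunk(ws, pcm16) {
  if (ws.readyState !== 1) return false; // 1 === WebSocket.OPEN
  ws.send(buildAppendEvent(pcm16));
  return true;
}
```

Keep in mind that a reconnect gives you a brand-new session, so any session configuration you sent earlier has to be sent again before audio starts flowing.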

Hard truth: build your WebSocket client like you're preparing for nuclear war. Connections will drop at the worst possible moment, audio will break when users need it most, permissions will get revoked randomly, and mobile browsers will actively work against you. Plan for everything to fail spectacularly.
