Integrating Claude API with React sounds simple until you deploy and everything turns to shit. The official @anthropic-ai/sdk version 0.60.0+ is actually decent (shocking for an API wrapper), but the real fuckery starts once actual users hit your app.

The Security Nightmare Nobody Warns You About

Here's what happened to me twice: You stick your Claude API key in React environment variables because "it's just for testing." That key gets bundled into your /static/js/main.abc123.js file. Some script kiddie runs automated tools against GitHub Pages deployments and finds it in 90 minutes. Your $300/month API key is now generating Claude responses for their crypto pump-and-dump Discord bot.

Direct frontend integration looks tempting:

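// This will bankrupt you
import Anthropic from '@anthropic-ai/sdk';

const anthropic = new Anthropic({
  apiKey: process.env.REACT_APP_CLAUDE_KEY, // Now in your bundle
  dangerouslyAllowBrowser: true // The SDK won't even run in a browser without this. Read the name again.
});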

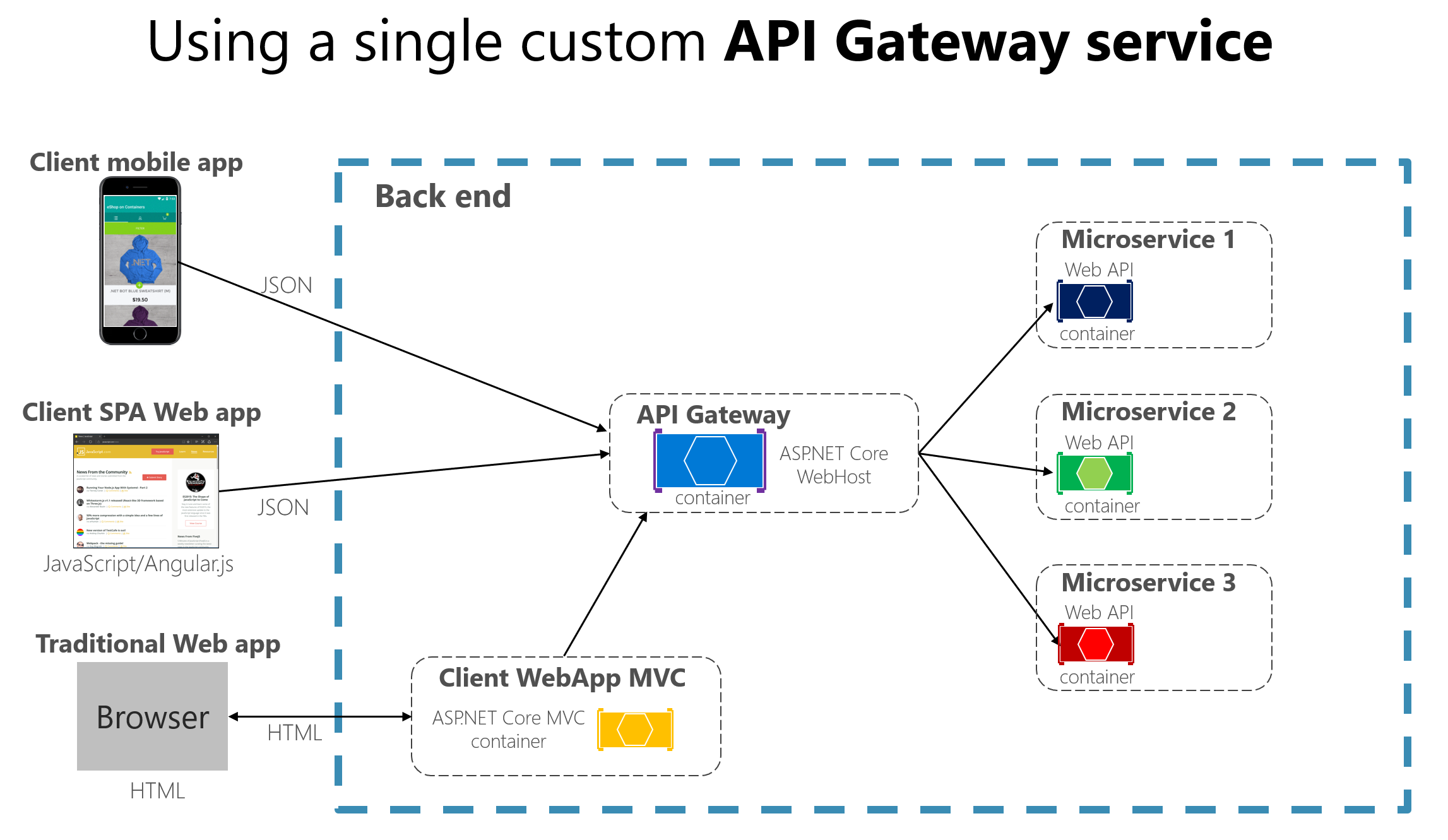

The proxy pattern is the only sane approach for production:

- React talks to your backend

- Backend talks to Claude

- API key stays server-side where it belongs

- You don't wake up to a $2000 Anthropic bill
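Here's a minimal sketch of that proxy in plain Node 18+ (for the built-in fetch), calling the Messages REST endpoint directly so there's no framework to install. The /api/claude route and the { message } request shape match the hook below; the { message: { role, content } } response shape, handleChatRequest, createServer, the 4000-character cap, and max_tokens: 1024 are my own assumptions, not anything official.

```javascript
const http = require('http');

const ANTHROPIC_URL = 'https://api.anthropic.com/v1/messages';
const MAX_INPUT_CHARS = 4000; // hypothetical cap on how much text one request may send

// Core proxy logic, kept apart from the HTTP server so it can be tested with a fake fetch.
async function handleChatRequest(body, { apiKey, model, fetchImpl = fetch }) {
  const text = body && typeof body.message === 'string' ? body.message.trim() : '';
  if (!text || text.length > MAX_INPUT_CHARS) {
    return { status: 400, json: { error: `message must be 1-${MAX_INPUT_CHARS} characters` } };
  }

  const upstream = await fetchImpl(ANTHROPIC_URL, {
    method: 'POST',
    headers: {
      'content-type': 'application/json',
      'x-api-key': apiKey, // the key only ever exists on the server
      'anthropic-version': '2023-06-01',
    },
    body: JSON.stringify({
      model,
      max_tokens: 1024, // arbitrary; caps the length of a single reply
      messages: [{ role: 'user', content: text }],
    }),
  });

  if (!upstream.ok) {
    // Don't forward Anthropic's error details to the browser
    return { status: 502, json: { error: `upstream returned ${upstream.status}` } };
  }

  const data = await upstream.json();
  // The Messages API returns a list of content blocks; keep the text ones
  const reply = data.content
    .filter((block) => block.type === 'text')
    .map((block) => block.text)
    .join('');
  return { status: 200, json: { message: { role: 'assistant', content: reply } } };
}

// Thin HTTP wrapper that only answers POST /api/claude
function createServer({ apiKey, model }) {
  return http.createServer(async (req, res) => {
    if (req.method !== 'POST' || req.url !== '/api/claude') {
      res.writeHead(404);
      res.end();
      return;
    }
    const chunks = [];
    for await (const chunk of req) chunks.push(chunk);
    let body = null;
    try {
      body = JSON.parse(Buffer.concat(chunks).toString('utf8'));
    } catch {
      // Malformed JSON falls through to the 400 in handleChatRequest
    }
    let result;
    try {
      result = await handleChatRequest(body, { apiKey, model });
    } catch {
      result = { status: 502, json: { error: 'could not reach Claude' } };
    }
    res.writeHead(result.status, { 'content-type': 'application/json' });
    res.end(JSON.stringify(result.json));
  });
}
```

Start it with createServer({ apiKey: process.env.ANTHROPIC_API_KEY, model: process.env.CLAUDE_MODEL }).listen(3001); both environment variable names are placeholders, and they live only on the server. Splitting handleChatRequest out of the server is what makes it testable without a real key.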

Here's what'll ruin your weekend: any jackass with DevTools can pull your API key straight out of your bundle. That $300/month key you've been rationing? Now it belongs to some bot farmer generating Claude responses for OnlyFans chatbots. The @anthropic-ai/sdk even makes you opt in to browser use with a flag literally named dangerouslyAllowBrowser, but I'll scream it anyway: NEVER PUT API KEYS IN CLIENT CODE. Not in .env.local, not in REACT_APP_ (or VITE_, or NEXT_PUBLIC_) environment variables, not anywhere that gets bundled.
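A cheap backstop is to fail CI whenever something key-shaped shows up in your build output. A minimal Node sketch with two assumptions to check against your setup: the build lands in a Create React App-style build/static/js folder, and Anthropic keys start with sk-ant- (look at your own key). findLeakedKeys is a hypothetical helper name.

```javascript
const fs = require('fs');
const path = require('path');

// Assumption: Anthropic keys start with "sk-ant-". Adjust the pattern if yours don't.
const KEY_PATTERN = /sk-ant-[A-Za-z0-9_-]{10,}/;

// Recursively collect files under dir whose contents match KEY_PATTERN.
function findLeakedKeys(dir) {
  const hits = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      hits.push(...findLeakedKeys(full));
    } else if (KEY_PATTERN.test(fs.readFileSync(full, 'utf8'))) {
      hits.push(full);
    }
  }
  return hits;
}

// Usage in CI, after `npm run build`:
//   const leaks = findLeakedKeys('build/static/js');
//   if (leaks.length) { console.error('API key in bundle:', leaks); process.exit(1); }
```

It only catches keys that made it into the build verbatim, so treat it as a tripwire, not a substitute for keeping the key server-side.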

What you actually need to read:

- The OWASP API security guide, if you don't want to get owned

- GitGuardian's blog post on how secrets leak (spoiler: usually through git commits)

- The 12-factor app methodology, for proper config management

- Auth0's security guide, for OAuth patterns

- Mozilla's web security guidelines, which are comprehensive

- Snyk's vulnerability database, for known issues

- The NIST cybersecurity framework, for enterprise standards

- GitHub's security advisories, which track package vulnerabilities

Everything else is just security theater unless you're building fintech.

Integration Patterns That Actually Work

Custom Hook Pattern (what you'll actually use):

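import { useState, useRef } from 'react';

const useClaudeChat = () => {
  const [messages, setMessages] = useState([]);
  const [isLoading, setIsLoading] = useState(false);
  const [error, setError] = useState(null);
  const [retryCount, setRetryCount] = useState(0);
  // A ref updates synchronously, so it also catches clicks that land before the next render
  const inFlight = useRef(false);

  // The real implementation handles failures
  const sendMessage = async (content) => {
    if (inFlight.current) return; // Stop button mashers
    inFlight.current = true;
    setIsLoading(true);
    setError(null);
    // Show the user's own message right away
    setMessages(prev => [...prev, { role: 'user', content }]);

    try {
      // Call your backend proxy, not Claude directly.
      // Only the latest message is sent; the Messages API is stateless,
      // so the proxy needs the history if you want multi-turn context.
      const response = await fetch('/api/claude', {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' }, // JSON body parsers typically key off this
        body: JSON.stringify({ message: content })
      });
      if (!response.ok) throw new Error(`HTTP ${response.status}`);
      const data = await response.json();
      // Assumes the proxy responds with { message: { role: 'assistant', content } }
      setMessages(prev => [...prev, data.message]);
    } catch (err) {
      setError("Something broke. Try again or blame the network.");
      setRetryCount(prev => prev + 1);
    } finally {
      inFlight.current = false;
      setIsLoading(false);
    }
  };

  return { messages, sendMessage, isLoading, error, retryCount };
};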
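The hook above counts failures in retryCount but never actually retries. If you want automatic retries, here's a minimal exponential-backoff sketch you could swap in for the bare fetch call (it works on either side of the proxy). fetchWithRetry, the retry limit, and the delays are my own choices. It retries rate limits (429), Anthropic's 529 "overloaded" status, and other 5xx errors; a 400 won't fix itself, so it returns those immediately.

```javascript
// Statuses worth retrying: rate limits, Anthropic's 529 "overloaded", and other server errors.
const isRetryable = (status) => status === 429 || status >= 500;

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: fetch with exponential backoff plus jitter.
async function fetchWithRetry(url, init, { retries = 3, baseDelayMs = 500, fetchImpl = fetch } = {}) {
  for (let attempt = 0; ; attempt++) {
    let response;
    try {
      response = await fetchImpl(url, init);
    } catch (err) {
      // Network failure: give up only when we're out of attempts
      if (attempt >= retries) throw err;
    }
    if (response && (response.ok || !isRetryable(response.status) || attempt >= retries)) {
      return response; // success, a non-retryable error, or out of attempts
    }
    // 500ms, 1s, 2s, ... plus up to 100ms of jitter so clients don't retry in lockstep
    await sleep(baseDelayMs * 2 ** attempt + Math.random() * 100);
  }
}
```

The jitter matters more than it looks: without it, every client that failed at the same moment hits the API again at the same moment.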

Context Provider Pattern (for when you need global state):

Works fine until you have multiple conversation threads. Then you discover why Redux exists.
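If you do go the Context route, storing messages per thread ID instead of in one global array postpones the Redux moment: a reply that lands after the user switches threads still goes to the right place. A sketch of a reducer you could pass to useReducer inside your provider; the action names and state shape are hypothetical.

```javascript
// State shape (hypothetical): { activeThreadId, threads: { [id]: { messages: [], isLoading } } }
const initialState = { activeThreadId: null, threads: {} };

function threadsReducer(state, action) {
  switch (action.type) {
    case 'thread/created':
      return {
        ...state,
        activeThreadId: action.threadId,
        threads: { ...state.threads, [action.threadId]: { messages: [], isLoading: false } },
      };
    case 'thread/selected':
      return { ...state, activeThreadId: action.threadId };
    case 'message/added': {
      // The action names its thread, so late replies can't land in whatever thread is active
      const thread = state.threads[action.threadId];
      if (!thread) return state; // thread was deleted while the request was in flight
      return {
        ...state,
        threads: {
          ...state.threads,
          [action.threadId]: { ...thread, messages: [...thread.messages, action.message] },
        },
      };
    }
    case 'thread/loading': {
      const thread = state.threads[action.threadId];
      if (!thread) return state;
      return {
        ...state,
        threads: { ...state.threads, [action.threadId]: { ...thread, isLoading: action.isLoading } },
      };
    }
    default:
      return state;
  }
}
```

In the provider, const [state, dispatch] = useReducer(threadsReducer, initialState) and put { state, dispatch } in context.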

Streaming: Cool When It Works, Frustrating When It Doesn't

The streaming API is genuinely useful, when it works. Claude responses arrive progressively instead of all at once after 8 seconds of nothing.

What they don't tell you:

- Streaming connections die mid-response (users love half-sentences)

- Network proxies sometimes buffer the entire response (defeats the purpose)

- Error handling gets complex when you're reassembling partial messages

- Streaming connections (server-sent events, which is what Claude's API uses, not WebSockets) drop on mobile when the app goes to the background
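Claude's streaming responses are server-sent events: text arrives in content_block_delta events and a finished stream ends with message_stop. A minimal sketch of reassembling the text yourself, assuming your proxy relays those SSE bytes unchanged. It handles the two cases that bite: an event split across network chunks, and a stream that ends without message_stop, which is how you tell a finished answer from a half-sentence. createStreamAccumulator is a hypothetical name.

```javascript
// Hypothetical helper: feed it decoded text chunks; it reassembles Claude's SSE
// events (which can be split anywhere across chunks) and collects the text deltas.
function createStreamAccumulator() {
  let buffer = '';
  let text = '';
  let done = false;

  function handleEvent(rawEvent) {
    // An event is a block of lines; only its data: line(s) matter here
    const data = rawEvent
      .split('\n')
      .filter((line) => line.startsWith('data:'))
      .map((line) => line.slice(5).replace(/^ /, ''))
      .join('\n');
    if (!data) return;
    const event = JSON.parse(data);
    if (event.type === 'content_block_delta' && event.delta.type === 'text_delta') {
      text += event.delta.text;
    } else if (event.type === 'message_stop') {
      done = true;
    } else if (event.type === 'error') {
      throw new Error(`stream error: ${event.error.message}`);
    }
  }

  return {
    // Call with each decoded chunk; returns the text received so far
    push(chunk) {
      // Normalize CRLF, including a \r and \n that arrived in different chunks
      buffer = (buffer + chunk).replace(/\r\n/g, '\n');
      let boundary;
      // A blank line ends an event; anything after the last one stays buffered
      while ((boundary = buffer.indexOf('\n\n')) !== -1) {
        handleEvent(buffer.slice(0, boundary));
        buffer = buffer.slice(boundary + 2);
      }
      return text;
    },
    // Call when the connection closes; throws if the answer was cut off
    finish() {
      if (!done) throw new Error('stream ended before message_stop');
      return text;
    },
  };
}
```

In the browser, decode response.body chunks with a TextDecoder using { stream: true } (which keeps multi-byte characters intact across chunk boundaries) and pass each result to push.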

Real streaming implementation looks like:

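import { useState, useEffect } from 'react';

// Inside your chat hook. sendRegularMessage (the non-streaming request) and
// lastMessage (the user's last input) are placeholders for your own code.
const [streamedContent, setStreamedContent] = useState('');
const [isStreaming, setIsStreaming] = useState(false);
const [streamError, setStreamError] = useState(null); // set this when the stream dies

// Handle the inevitable stream failures
useEffect(() => {
  if (!streamError) return;
  setStreamedContent(''); // drop the half-sentence instead of showing it
  setIsStreaming(false);
  sendRegularMessage(lastMessage); // fall back to non-streaming
}, [streamError]);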
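The proxy-buffering problem is usually fixed on the server. A sketch of a streaming variant of the proxy in plain Node 18+, under my own assumptions (relayClaudeStream is a hypothetical name; res is Node's http.ServerResponse): it asks the Messages API for stream: true, relays the SSE bytes unchanged, and sets headers that discourage buffering. X-Accel-Buffering: no is the header nginx honors; other proxies and compression middleware have their own settings, so check yours.

```javascript
// Hypothetical streaming proxy: relay Claude's SSE bytes to the browser unchanged.
async function relayClaudeStream(userText, res, { apiKey, model, fetchImpl = fetch }) {
  const upstream = await fetchImpl('https://api.anthropic.com/v1/messages', {
    method: 'POST',
    headers: {
      'content-type': 'application/json',
      'x-api-key': apiKey,
      'anthropic-version': '2023-06-01',
    },
    body: JSON.stringify({
      model,
      max_tokens: 1024, // arbitrary, as in any proxy sketch
      stream: true, // Claude sends server-sent events instead of one JSON body
      messages: [{ role: 'user', content: userText }],
    }),
  });

  if (!upstream.ok || !upstream.body) {
    // A plain error response lets the client fall back to a regular request
    res.writeHead(502, { 'content-type': 'application/json' });
    res.end(JSON.stringify({ error: `upstream returned ${upstream.status}` }));
    return;
  }

  res.writeHead(200, {
    'content-type': 'text/event-stream',
    'cache-control': 'no-cache',
    'x-accel-buffering': 'no', // tells nginx not to buffer this response
  });

  const reader = upstream.body.getReader();
  // If the browser goes away mid-answer, stop reading from Claude too
  res.on('close', () => {
    reader.cancel().catch(() => {});
  });
  try {
    for (;;) {
      const { done, value } = await reader.read();
      if (done) break;
      res.write(value); // forward each chunk as soon as it arrives
    }
  } finally {
    // Ends the response even if Claude's side died mid-stream; the client then
    // sees a stream without message_stop and can fall back
    res.end();
  }
}
```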

Performance "optimization" in practice means handling the chaos when users mash the send button 10 times while Claude is still thinking about their first message.
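Outside React, or anywhere you can't lean on component state, the same guard can be a single-flight wrapper: while one request is pending, repeat calls get the pending promise instead of firing new requests. A minimal sketch; singleFlight is a hypothetical name.

```javascript
// Wrap an async function so that concurrent calls share one in-flight promise.
function singleFlight(fn) {
  let pending = null;
  return (...args) => {
    if (!pending) {
      // Clear the slot whether the call succeeds or fails, so the next click works
      pending = Promise.resolve()
        .then(() => fn(...args))
        .finally(() => {
          pending = null;
        });
    }
    return pending;
  };
}
```

Every call made while a request is pending gets the first call's result, arguments included. That's what you want for a mashed send button and wrong for a queue of distinct messages.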