A fintech startup learned the hard way why authentication matters. They deployed a "quick prototype" language model endpoint for document analysis without proper authentication. Six months later, their endpoint was processing 50,000 requests per hour from bots scraping sensitive customer documents. The aftermath: regulatory fines, customer lawsuits, and over $2 million in legal settlements.

This shit happens because most teams focus on getting models working and forget that production AI systems are still web services that need proper security controls. The difference between a prototype and production isn't just performance - it's whether you get fired for exposing customer data.

Security Levels: Pick Your Poison

Hugging Face offers three security levels for Inference Endpoints, and choosing wrong will bite you in production:

Public Endpoints - Available from the internet with just TLS/SSL. No authentication required. This is fine for demo apps or internal tools processing public data. Not fine for anything that touches customer data or costs real money. I've seen startups rack up thousands in inference costs because their public endpoints got discovered by crawlers.

Protected Endpoints - Internet-accessible but require a Hugging Face token for authentication. This is the sweet spot for most production deployments. Your backend authenticates with an HF token, so as long as requests go through your own service, users never see the endpoint URL or the token, and you get access logs for monitoring.
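Here's a minimal sketch of what calling a protected endpoint looks like from your backend. The token goes in an `Authorization: Bearer` header. The URL, the `build_request` helper, and the `{"inputs": ...}` payload shape are assumptions for illustration; check your own endpoint's overview page for the real URL and the payload your model's task expects.

```python
import json
import urllib.request

# Hypothetical placeholder; the real URL comes from your endpoint's overview page.
ENDPOINT_URL = "https://example.invalid/my-endpoint"


def build_request(token: str, payload: dict, url: str = ENDPOINT_URL) -> urllib.request.Request:
    """Build a POST request that carries the token as a Bearer credential."""
    if not token:
        raise ValueError("refusing to call a protected endpoint without a token")
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_request("hf_example_token", {"inputs": "Summarize this document."})
    print(req.get_method(), req.full_url)
    # Sending is left commented out because the URL above is a placeholder:
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(json.load(resp))
```

The request is built server-side, so neither the token nor the endpoint URL ever reaches a browser or mobile client.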

Private Endpoints - Only accessible through AWS PrivateLink or Azure private connections. These never touch the public internet. Enterprise deployments love these for compliance reasons, but they're overkill unless you're handling financial data or healthcare records.

Authentication Implementation That Actually Works

The token-based authentication is straightforward, but implementation matters. Here's what breaks in production:

Token Rotation Nightmare: Your application needs to handle token expiration gracefully. I've seen services crash every 90 days when tokens expired and nobody remembered to rotate them. Set up automated token rotation or use fine-grained tokens with longer expiration.
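One way to survive a rotation without a restart is to reload the token when the endpoint rejects it. This is a rough sketch, not a prescribed pattern: `TokenProvider`, `AuthError`, and `call_with_refresh` are hypothetical names, and `load_token` stands in for whatever reads your secret store.

```python
from typing import Callable


class AuthError(Exception):
    """Raised by the caller's request function when the endpoint answers 401."""


class TokenProvider:
    """Caches a token and reloads it from the secret store on demand."""

    def __init__(self, load_token: Callable[[], str]) -> None:
        self._load_token = load_token
        self._token = load_token()

    def current(self) -> str:
        return self._token

    def refresh(self) -> str:
        self._token = self._load_token()
        return self._token


def call_with_refresh(provider: TokenProvider, send: Callable[[str], str]) -> str:
    """Call send(token); on an auth failure, reload the token and retry once."""
    try:
        return send(provider.current())
    except AuthError:
        # One retry only: if the fresh token also fails, surface the error.
        return send(provider.refresh())
```

The single retry is deliberate. If the freshly loaded token is also rejected, the problem is not a stale cache, and looping would only add load.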

Secret Management Disasters: Never hardcode tokens in your application code. One startup pushed their production HF token to a public GitHub repo and had $3,000 in unauthorized usage within hours. Use proper secret management - AWS Secrets Manager, Kubernetes secrets, or environment variables at minimum.
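At the "environment variables at minimum" end of the spectrum, a sketch might look like this. The variable name `HF_TOKEN` and both helper functions are assumptions for illustration; a secrets manager client would replace the `os.environ` lookup.

```python
import os


def load_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Read the token from the environment and fail fast if it is missing."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"{env_var} is not set; refusing to start without a token")
    return token


def redact(token: str) -> str:
    """Return a log-safe form of the token showing only the last 4 characters."""
    return "****" + token[-4:] if len(token) > 8 else "****"
```

Failing at startup beats failing on the first customer request, and redacting before logging keeps the token out of log aggregators.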

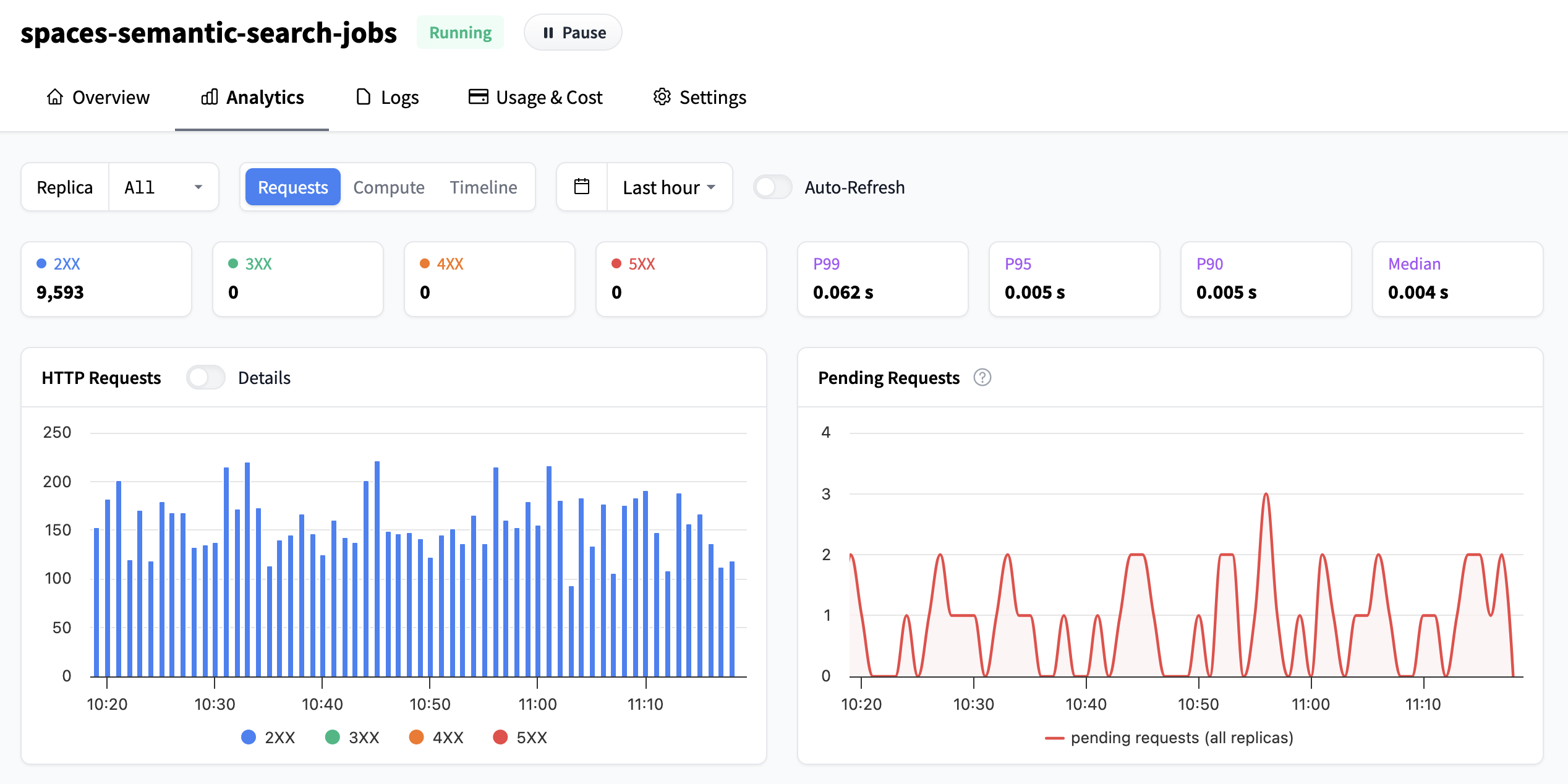

Rate Limiting Reality: Even with authentication, you can still DoS yourself. HF has rate limits, but they're generous. The real problem is when your own application logic creates request loops by retrying failures immediately, with no exponential backoff and no cap on attempts. One misconfigured retry mechanism sent 10,000 identical requests in 30 seconds and got the endpoint temporarily blocked.
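A retry wrapper that can't turn into a request loop needs two things: a hard cap on attempts and a growing, randomized delay between them. Here's a minimal sketch using capped exponential backoff with full jitter. `RetryableError`, `call_with_backoff`, and the default numbers are illustrative assumptions, not tuned values.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Raised by the caller's function for failures worth retrying (e.g. 429/503)."""


def call_with_backoff(
    func: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying on RetryableError with capped exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return func()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: give up instead of looping forever.
            # Full jitter: wait a random time up to the capped exponential delay.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
    raise ValueError("max_attempts must be at least 1")
```

With the defaults, a persistently failing call makes 5 requests and then gives up, rather than thousands in a few seconds.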

Network-Level Security Controls

Authentication is just the first layer. Production deployments need defense in depth:

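VPC Integration: If you're on AWS, connect through a VPC endpoint over PrivateLink (the Private security level described above) to keep traffic off the public internet. Even workloads that would be acceptable on a protected endpoint benefit: the attack surface shrinks, and latency often improves.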

API Gateway Protection: Don't expose your endpoints directly to clients. Use AWS API Gateway, Azure API Management, or similar services to add:

- Request validation and sanitization

- Rate limiting per customer/IP

- Request/response logging

- Circuit breaker patterns
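Managed gateways give you per-customer rate limiting as configuration, but the idea underneath is simple enough to sketch. Below is a token bucket keyed by customer or IP. `PerClientRateLimiter` is a hypothetical in-memory illustration, not a gateway API, and a real deployment would need shared state across instances.

```python
import time
from typing import Callable, Dict, Tuple


class PerClientRateLimiter:
    """Token bucket per client key: `rate` requests/second, bursts up to `burst`."""

    def __init__(
        self,
        rate: float,
        burst: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate
        self.burst = burst
        self.clock = clock
        # client key -> (tokens remaining, time of last request)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client_key: str) -> bool:
        """Return True and spend one token if the client is under its limit."""
        now = self.clock()
        tokens, last = self._buckets.get(client_key, (float(self.burst), now))
        # Refill for the time elapsed since the last request, capped at burst size.
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[client_key] = (tokens - 1.0, now)
            return True
        self._buckets[client_key] = (tokens, now)
        return False
```

Each key gets its own bucket, so one noisy customer runs dry without affecting anyone else.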

IP Whitelisting: For B2B applications, whitelist your application server IPs. Most corporate deployments require this anyway. It's an extra layer that costs very little and limits the damage from a leaked token, because requests from unknown addresses are rejected even when the credential is valid.
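If your gateway doesn't handle the check for you, it fits in a few lines with the standard library's `ipaddress` module. The helper names and the CIDR ranges in the usage note are placeholders (documentation-reserved addresses), not real server IPs.

```python
import ipaddress
from typing import Iterable, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def parse_allowlist(cidrs: Iterable[str]) -> List[Network]:
    """Parse CIDR strings such as '203.0.113.0/24' into network objects."""
    return [ipaddress.ip_network(cidr, strict=False) for cidr in cidrs]


def is_allowed(client_ip: str, allowlist: List[Network]) -> bool:
    """Return True only if client_ip parses and falls inside an allowed network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # Malformed addresses are rejected, not trusted.
    return any(addr in net for net in allowlist)
```

For example, with `parse_allowlist(["203.0.113.0/24", "198.51.100.7/32"])`, a request from `203.0.113.42` passes and one from `198.51.100.8` does not. Behind a load balancer, make sure the address you check is the real client address and not a header the client can set.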

The security team at one Fortune 500 company told me their policy is simple: "If it processes customer data and touches the internet, it needs three layers of authentication." Sounds paranoid until you see the breach notifications that result from skipping these controls.

Production AI isn't just about model accuracy - it's about not getting your company sued or fined because you deployed insecure endpoints. The security controls exist, they're well-documented, and they work. Use them before you become another cautionary tale.