Azure Stack Edge Pro 2 is Microsoft's edge computing device, the one they want you to deploy everywhere while making it damn near impossible to actually get one. It's their latest attempt at solving the "my data is too slow getting to the cloud" problem by putting a 2U rack server at your location instead.

This isn't revolutionary - it's just Microsoft's version of AWS Outposts and Google Anthos. But Microsoft's approach has a special twist: you can't just buy one to test. Because why would Microsoft make anything simple?

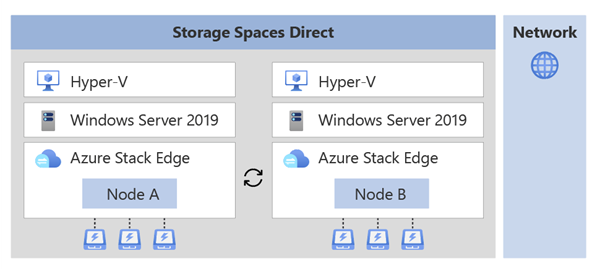

How This Thing Actually Works

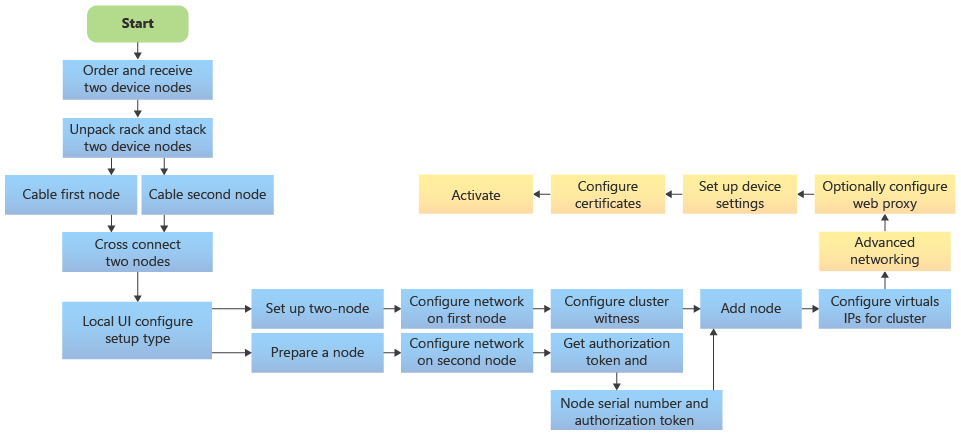

The setup would be straightforward if Microsoft's documentation weren't scattered across 47 different pages:

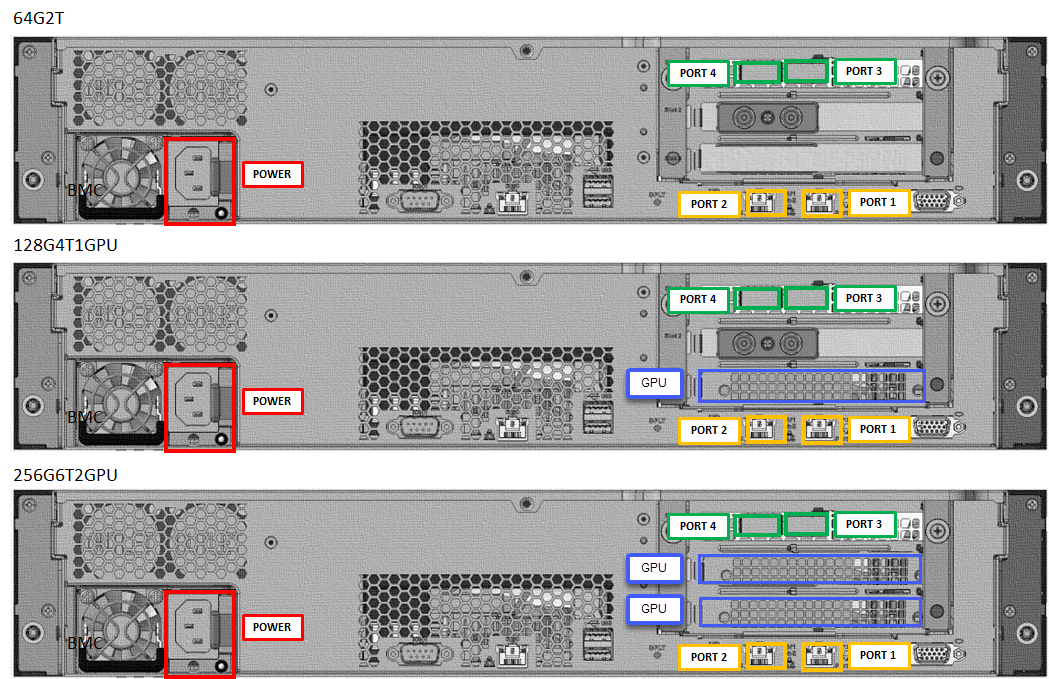

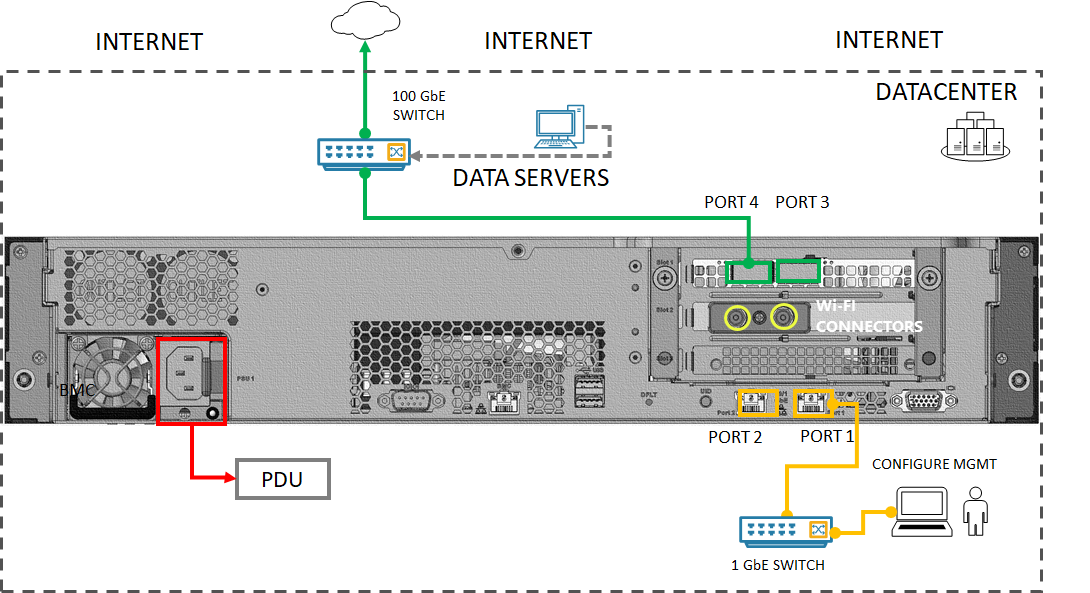

The Physical Box: A 2U rack server with optional GPUs for machine learning. Wall mounting sounds great until you spend 3 hours wrestling with cable management and realize you need power exactly where the mount is. If you're doing AI inference, pay for the GPU models. If you're just processing data, don't waste money on graphics cards you'll never use.

Azure Portal Management: You configure everything through Azure's web interface. Works fine if your edge locations have decent internet. Spoiler: they don't. Our Fresno location has been "managing device connectivity" for 6 weeks because their ISP treats anything over 10 Mbps as a premium service.

Local Web Interface: There's a local admin web interface for when Azure inevitably craps out. Bookmark its IP address, because you'll need it every time Microsoft has their monthly "degraded service" incident and your devices start acting weird.

What You'd Actually Use This For

There are three main reasons you'd deploy these expensive boxes:

Running AI Models Locally: Machine learning inference without sending everything to the cloud. Retail stores doing real-time inventory analysis, manufacturing floors running predictive maintenance. Works great until your model needs retraining and you discover updating ML models across 200+ locations is a special kind of hell. Took our team 6 weeks to push a minor model update because 23 devices were "offline" and couldn't be reached.
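Most of that pain is bookkeeping: knowing which devices got the new model and which are still waiting. Here's a minimal Python sketch of a retry-friendly rollout pass. The `is_online` and `push_model` callbacks are hypothetical stand-ins for whatever your deployment tooling actually exposes; this is not an Azure API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RolloutState:
    """Tracks which devices still need the new model and which already have it."""
    pending: set[str]
    updated: set[str] = field(default_factory=set)
    attempts: dict[str, int] = field(default_factory=dict)


def rollout_pass(
    state: RolloutState,
    is_online: Callable[[str], bool],   # hypothetical reachability check
    push_model: Callable[[str], bool],  # hypothetical push; True on success
) -> None:
    """One pass: push to every reachable pending device, keep the rest queued."""
    for device in sorted(state.pending):  # iterate a copy so we can mutate the set
        state.attempts[device] = state.attempts.get(device, 0) + 1
        if is_online(device) and push_model(device):
            state.updated.add(device)
            state.pending.discard(device)


# Hypothetical fleet: 200 stores, 23 of them unreachable on the first pass.
devices = {f"store-{i:03d}" for i in range(200)}
offline = {f"store-{i:03d}" for i in range(23)}

state = RolloutState(pending=set(devices))
rollout_pass(state, is_online=lambda d: d not in offline, push_model=lambda d: True)
print(len(state.updated), len(state.pending))  # 177 23
```

Rerun `rollout_pass` on a schedule and the offline stragglers get picked up whenever they come back, while `attempts` tells you which devices have been dark the longest.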

Data Filtering Before Cloud Upload: Process data locally before sending to Azure Storage. Makes sense - why upload 10GB of sensor data when you only need 100MB of processed results? Just don't underestimate bandwidth needs for model updates and management. Microsoft says "10 Mbps minimum" but good luck managing anything with less than 50 Mbps dedicated.
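The back-of-the-envelope arithmetic shows why filtering pays off and why a thin link hurts. This Python sketch assumes decimal units and a link fully dedicated to the transfer with zero protocol overhead, so real uploads will be slower:

```python
def transfer_seconds(size_gb: float, link_mbps: float) -> float:
    """Ideal transfer time in seconds.

    Assumes decimal units (1 GB = 8000 megabits), a link dedicated to this
    transfer, and no protocol overhead or retries.
    """
    return size_gb * 8000 / link_mbps


for label, size_gb in (("raw sensor data", 10), ("filtered results", 0.1)):
    for mbps in (10, 50):
        secs = transfer_seconds(size_gb, mbps)
        print(f"{label} ({size_gb} GB) at {mbps} Mbps: {secs / 60:.1f} min")
```

At an ideal 10 Mbps the raw 10 GB takes about 2.2 hours, versus about 80 seconds for 100 MB of processed results. At 50 Mbps the raw upload drops to roughly 27 minutes, and that's before model updates and management traffic compete for the same pipe.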

Local File Caching: Cache frequently accessed files locally while syncing to blob storage. Useful if your locations have garbage internet but need fast file access. Sync works when it works, but troubleshooting conflicts across dozens of devices will make you question why you didn't just become a plumber. We've got 12 devices stuck in "sync pending" status for 3 months.
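If you end up in the same spot, at least catch stuck devices in days rather than months. A minimal Python sketch, assuming you can export per-device sync state with a "since" timestamp from your own monitoring. The record shape, the sample values, and the 7-day threshold are all hypothetical, not an Azure Stack Edge format:

```python
from datetime import datetime, timedelta, timezone


def stuck_devices(records, now, threshold=timedelta(days=7)):
    """Return device names that have sat in 'sync pending' longer than threshold."""
    return sorted(
        r["device"]
        for r in records
        if r["state"] == "sync pending" and now - r["since"] > threshold
    )


# Hypothetical monitoring export; field names and values are illustrative only.
records = [
    {"device": "store-014", "state": "sync pending", "since": datetime(2024, 1, 5, tzinfo=timezone.utc)},
    {"device": "store-020", "state": "sync pending", "since": datetime(2024, 4, 3, tzinfo=timezone.utc)},
    {"device": "store-031", "state": "synced", "since": datetime(2024, 4, 4, tzinfo=timezone.utc)},
]
now = datetime(2024, 4, 5, tzinfo=timezone.utc)
print(stuck_devices(records, now))  # ['store-014']
```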

The Catch: Microsoft's Minimum Order Bullshit

Here's where Microsoft reveals their true colors - you can't just buy one of these to test or for small deployments:

100-Unit Minimum: Microsoft requires a minimum order of 100 devices through their Azure Edge Hardware Center. Can't test with 5 units? Too bad. Want to slowly roll out to 20 locations? Microsoft says fuck you, buy 100 or nothing. This is purely about revenue: they want enterprise money, not small business deployments.

"Validated Partner" Requirements: Translation - you need to be big enough for Microsoft to care about you, or you need to be deploying someone else's pre-approved solution. Government customers get special treatment because that's where the real money is.

Microsoft designed this for Fortune 500 companies with hundreds of locations. If you're not deploying at massive scale, AWS Wavelength or Google Distributed Cloud Edge won't force a minimum order that costs more than most people's houses.

Current Generation Hardware Reality

The Pro 2 series has been around since 2022, not 2025 like some sources claim. Here's what actually matters:

GPU Options: Single-GPU, dual-GPU, or no-GPU models. The GPU models (NVIDIA A2 Tensor Core GPUs) cost significantly more but are necessary if you're doing GPU-accelerated AI inference. Non-GPU models are fine for basic data processing, but don't expect miracles.

Noise Levels: Microsoft claims these are "office-friendly" but "low noise" is relative when you're dealing with server fans. Plan for a server closet unless you enjoy industrial fan noise during meetings. I made the mistake of putting one in our open office - sounds like a jet engine spooling up every time it gets warm.

Integration Reality: Runs Kubernetes v1.24+ and Windows Server VMs, which works fine for standard deployments. The SD-WAN and mobile packet core features are for telecom providers, not retail deployments. Don't get excited about 5G features you'll never use unless you're running a private cellular network.

Microsoft pushes updates whether you like it or not. Unlike real servers where you control updates, these get patched on Microsoft's schedule. You can set maintenance windows, but expect "critical security updates" that reboot your device during business hours anyway. One patch killed our primary store's inventory system for 2 hours during Black Friday prep. Microsoft's response? "Security updates cannot be delayed for business convenience."
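Before trusting the maintenance window, check your own reboot logs against it. A small Python sketch, assuming you already have reboot timestamps in the device's local time. The 22:00 to 04:00 window and the sample timestamps are hypothetical:

```python
from datetime import datetime, time


def in_window(ts: datetime, start: time, end: time) -> bool:
    """True if ts's time of day falls in [start, end).

    Handles windows that wrap past midnight (start > end).
    """
    t = ts.time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end  # e.g. 22:00 to 04:00


window = (time(22, 0), time(4, 0))  # hypothetical maintenance window
reboots = [  # hypothetical reboot timestamps, device-local time
    datetime(2024, 11, 20, 23, 15),
    datetime(2024, 11, 21, 2, 40),
    datetime(2024, 11, 21, 14, 5),
]
outside = [ts for ts in reboots if not in_window(ts, *window)]
print([ts.isoformat() for ts in outside])  # ['2024-11-21T14:05:00']
```

Anything that lands in `outside` is a reboot that ignored your window, which is exactly the evidence you want when you open that support ticket.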