I've lost more work to kernel deaths than I care to admit. You're analyzing a dataset, everything's running smooth, then BAM - kernel dies. No warning, no error message, just a dead kernel and 3 hours of work gone. If this sounds familiar, welcome to the club of developers who've learned to fear the dreaded "Kernel has died. Restarting..." message.

The Memory Management Nightmare That Is JupyterLab

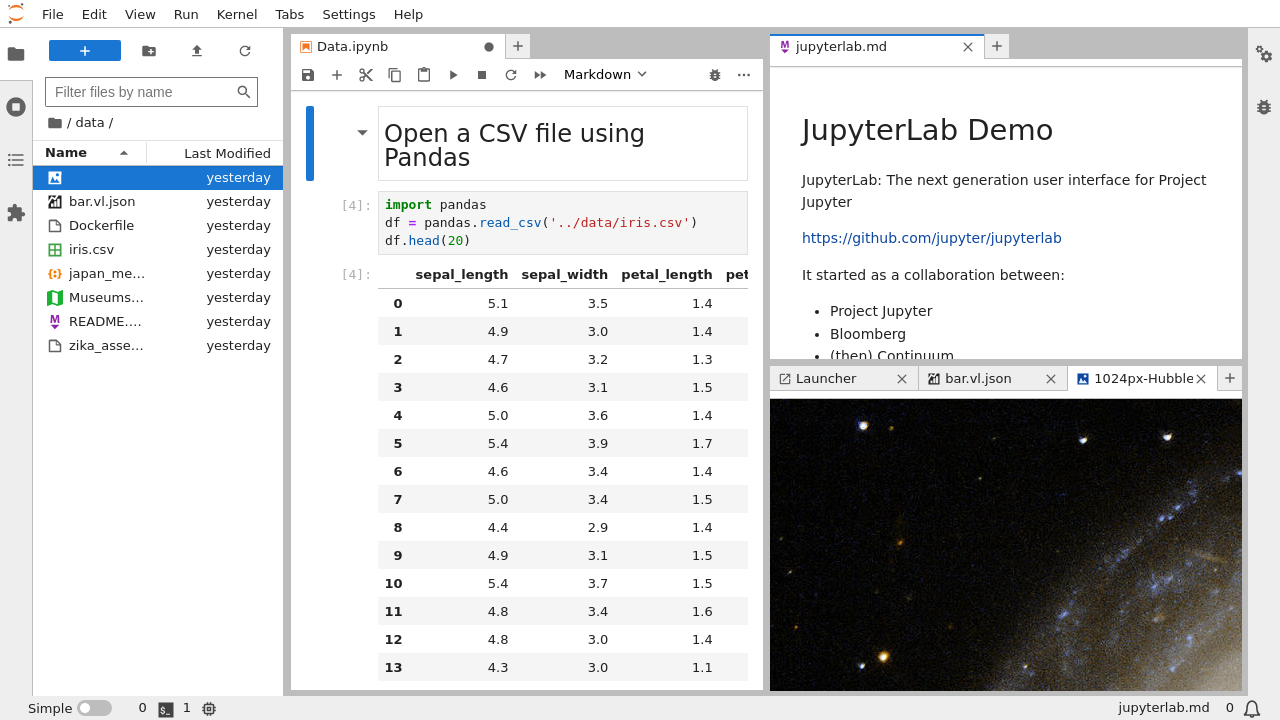

Here's what nobody tells you: JupyterLab is a full web app running in your browser, talking to a separate server and kernel process, and every layer of it is hungry as hell. You've got:

- Browser memory: Your interface, plots, and every cell output eating RAM

- Kernel memory: Your actual Python process with variables and data

- System overhead: Extensions, server processes, and other background crap

I learned this the hard way when my "small" 2GB CSV crashed my 16GB laptop and froze the entire system. Turns out pandas can need 5-10x the file size in RAM just to load a CSV, because it's doing type inference, creating indexes, and making temporary copies all over the place.
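
To see the blow-up on your own data, compare a CSV's size with what pandas reports once it's loaded. This is a minimal sketch, assuming pandas is installed; the synthetic three-column CSV and the `loaded_bytes` helper are made up for illustration, and the exact ratio depends heavily on your dtypes (string columns stored as Python objects are usually the worst offenders). The second read shows the usual counter-move: telling `read_csv` the dtypes up front instead of letting it infer them.

```python
import io

import pandas as pd

# Hypothetical data: 10,000 rows of an int id, a float value, and a low-cardinality label.
lines = ["id,value,label"] + [f"{i},{i * 0.5},label_{i % 3}" for i in range(10_000)]
csv_text = "\n".join(lines)


def loaded_bytes(**read_kwargs) -> int:
    """Parse csv_text and return the DataFrame's deep in-memory size in bytes."""
    df = pd.read_csv(io.StringIO(csv_text), **read_kwargs)
    # deep=True counts the actual string data, not just the pointers to it.
    return int(df.memory_usage(deep=True).sum())


on_disk = len(csv_text.encode("utf-8"))
default_size = loaded_bytes()  # pandas infers the dtypes itself
compact_size = loaded_bytes(
    dtype={"id": "int32", "value": "float32", "label": "category"}
)

print(f"CSV text:        {on_disk:>10,} bytes")
print(f"default dtypes:  {default_size:>10,} bytes")
print(f"explicit dtypes: {compact_size:>10,} bytes")
```

Run the same comparison on a sample of your real file (for example with `nrows=`) before committing to a full load.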

JupyterLab 4.4's Performance Fixes (Released May 21, 2025)

The JupyterLab 4.4 release fixed some issues but not the core problem:

- CSS optimization: Browser doesn't choke as much with many cells

- Memory leak fixes: Extensions clean up better (finally)

- Better startup: Loads faster, fails faster too

But here's the brutal truth: these improvements won't save you from the fundamental issue that pandas tries to load everything into memory at once.
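
That said, pandas itself can stream: pass `chunksize` to `read_csv` and it yields DataFrames of that many rows instead of one giant frame. Below is a minimal sketch of a chunked group-by sum, assuming the aggregation can be rebuilt from per-chunk partial results (sums and counts can; medians can't). The function name and the column names in the usage comment are hypothetical.

```python
import io

import pandas as pd


def sum_by_key_chunked(source, key: str, value: str, chunksize: int = 100_000) -> pd.Series:
    """Group-by-sum over a CSV while parsing at most `chunksize` rows at a time.

    `source` is anything pd.read_csv accepts (a path, URL, or file-like object).
    """
    partials = []
    # With chunksize set, read_csv returns an iterator of DataFrames, not one DataFrame.
    with pd.read_csv(source, usecols=[key, value], chunksize=chunksize) as reader:
        for chunk in reader:
            # One row per key seen in this chunk: small compared to the chunk itself.
            partials.append(chunk.groupby(key)[value].sum())
    # The same key can appear in several chunks, so add the partial sums together.
    return pd.concat(partials).groupby(level=0).sum()


# On a real file this would look like (hypothetical names):
# totals = sum_by_key_chunked("big.csv", "user_id", "amount")
```

Peak memory is now roughly one chunk plus the partial results, rather than the whole file times the 5-10x overhead.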

The Size Categories That Will Screw You Over

Small datasets (< 1GB): These seem fine until you accidentally render a massive matplotlib plot that crashes your browser. I once spent 2 hours debugging why my notebook froze, only to realize a single plot was consuming 8GB of browser memory.

Medium datasets (1-5GB): This is where shit hits the fan. Your innocent 2GB CSV becomes a 12GB memory monster when pandas starts doing its thing. Your 16GB laptop? Good luck - the OS will start swapping and everything becomes unusable.
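
A cheap habit that avoids this: check the file size against available RAM before calling `read_csv`. This is a minimal sketch, assuming the 5-10x rule of thumb from earlier (the default multiplier and the 80% headroom are guesses to tune, not measured constants) and the third-party psutil package for reading available memory. Both function names are hypothetical, and the "10 GB actually free" figure is illustrative.

```python
import os


def fits_in_memory(file_bytes: int, available_bytes: int,
                   multiplier: float = 10.0, headroom: float = 0.8) -> bool:
    """Rough check: does file_bytes * multiplier fit in `headroom` of available RAM?

    multiplier: assumed RAM-to-file-size ratio for pandas (5-10x rule of thumb).
    headroom: fraction of currently available memory we allow ourselves to use.
    """
    return file_bytes * multiplier <= available_bytes * headroom


def safe_to_read_csv(path: str, multiplier: float = 10.0) -> bool:
    """Apply fits_in_memory to a real file using psutil's view of available RAM."""
    import psutil  # third-party: pip install psutil

    available = psutil.virtual_memory().available
    return fits_in_memory(os.path.getsize(path), available, multiplier)


GB = 1024 ** 3
# A 2 GB CSV on a 16 GB laptop with roughly 10 GB actually free:
print(fits_in_memory(2 * GB, 10 * GB))                # 20 GB needed vs 8 GB allowed -> False
print(fits_in_memory(2 * GB, 10 * GB, multiplier=5))  # 10 GB needed vs 8 GB allowed -> False
```

Even the optimistic 5x multiplier says no here, which matches the swapping described above.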

Large datasets (> 5GB): Forget pandas. Just forget it. If you try to pd.read_csv() anything this size, you deserve the kernel death you're about to get.

Why Standard JupyterLab Monitoring Sucks

JupyterLab won't tell you about memory problems until it's too late. No warning, no "hey you're about to run out of memory" - just sudden death. You need jupyter-resource-usage installed ASAP or you're flying blind:

```bash
pip install jupyter-resource-usage
# Restart JupyterLab to see the memory indicator
```

Even then, it only reports memory at the process level (the Jupyter server and its kernels), not which variables are eating your RAM. For that nightmare, you need memory_profiler or the Fil profiler. You can also try psutil for system monitoring, tracemalloc for Python memory tracking, or Memray for comprehensive memory profiling.
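
For a quick "which variable is it?" answer without installing anything, you can rank the objects in the notebook's namespace. This is a minimal sketch: `approx_size` and `top_variables` are hypothetical helpers, pandas and NumPy objects are detected by duck typing (`memory_usage` / `nbytes`), and everything else falls back to `sys.getsizeof`, which is shallow, so a list of big objects will look smaller than it really is. It also skips anything callable, which hides callable objects such as some model classes.

```python
import sys
import types


def approx_size(obj) -> int:
    """Best-effort byte count for one object.

    pandas objects report their deep size; NumPy arrays report their buffer size;
    everything else uses sys.getsizeof, which does NOT include contained objects.
    """
    if hasattr(obj, "memory_usage"):  # pandas DataFrame / Series / Index
        usage = obj.memory_usage(deep=True)
        # DataFrame returns a per-column Series; Series and Index return one number.
        return int(usage.sum()) if hasattr(usage, "sum") else int(usage)
    if hasattr(obj, "nbytes"):  # NumPy arrays and similar buffers
        return int(obj.nbytes)
    return sys.getsizeof(obj)


def top_variables(namespace: dict, n: int = 10) -> list[tuple[str, int]]:
    """Return the n largest user variables in `namespace` as (name, bytes), biggest first."""
    sizes = []
    for name, obj in namespace.items():
        # Skip private names, modules, classes, and functions: they aren't your data.
        if name.startswith("_") or isinstance(obj, types.ModuleType) or callable(obj):
            continue
        sizes.append((name, approx_size(obj)))
    return sorted(sizes, key=lambda item: item[1], reverse=True)[:n]
```

In a notebook cell, `top_variables(globals())` lists the biggest offenders, and `del` on the top entry is often enough to get out of the danger zone.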

The "Solutions" That Don't Actually Work

"Buy more RAM": Yeah, because everyone has $2000 lying around for 64GB. Plus your datasets will just grow to fill whatever you have.

"Use a remote server": Great, now your notebook is slow as hell AND you can't see what's happening when it crashes. Network timeouts become your new best friend.

"Optimize your pandas code": Sure, but pandas still needs to make temporary copies during operations. Your "optimized" groupby still spikes to 40GB before settling down.

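You can watch that kind of spike happen with the standard library's tracemalloc, which tracks both the memory still allocated and the peak reached along the way. This is a minimal sketch: `measure_peak` is a hypothetical helper that assumes tracemalloc isn't already running, and the toy function just builds throwaway intermediate lists to mimic temporary copies. NumPy reports its array buffers to tracemalloc, so NumPy-backed pandas operations show up too, but memory allocated by native code that doesn't report to it won't be counted, and tracing slows things down, so use it for diagnosis rather than leaving it on.

```python
import tracemalloc


def measure_peak(fn, *args, **kwargs):
    """Run fn(*args, **kwargs) and return (result, bytes_still_allocated, peak_bytes).

    Both numbers are measured from the moment tracing starts inside this call.
    """
    tracemalloc.start()
    try:
        result = fn(*args, **kwargs)
        current, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, current, peak


def total_with_temporaries(n: int) -> int:
    """Toy stand-in for an operation that makes intermediate copies."""
    data = list(range(n))
    doubled = [x * 2 for x in data]     # temporary copy #1
    shifted = [x + 1 for x in doubled]  # temporary copy #2
    return sum(shifted)                 # only a single int survives the call


result, current, peak = measure_peak(total_with_temporaries, 500_000)
print(f"result={result}, still allocated={current:,} B, peak={peak:,} B")
```

The gap between `peak` and `current` is the spike the kernel has to survive, even though the final result is tiny.
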
The actual solution is to stop trying to load everything into memory at once. Use Dask for familiar pandas syntax with lazy evaluation, Vaex for billion-row interactive exploration, Polars for speed (its lazy scan_csv API avoids loading the whole file up front), or Ray for distributed data processing. If you'd rather write SQL, DuckDB is an in-process analytical database that can query CSV and Parquet files directly.

How Your Kernel Will Die (A Field Guide)

I've seen these failure patterns countless times:

- Silent death: Kernel just stops. No error message, no traceback, nothing in the notebook. On Linux, check the kernel log (dmesg or journalctl -k) for a "Killed process" line from the OOM killer.

- Browser freeze: Everything locks up, can't even save your work. Force-quit and hope autosave worked.

- System lockup: Your entire computer becomes unusable as the OS thrashes swap. Hard reset incoming.

- Timeout death: Long-running operations just... stop. No completion, no error, just eternal waiting.
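
For the silent-death case, one Linux-only mitigation is to cap the kernel process's address space with the standard library's resource module, so an oversized allocation raises MemoryError inside Python (a traceback you can read, and usually a kernel that survives) instead of the process growing until the OOM killer steps in. This is a minimal sketch: `cap_address_space` is a hypothetical helper, and the 12 GB / 20 GB numbers in the comments are illustrative. Caveats: RLIMIT_AS limits virtual address space, not resident memory, and some libraries reserve far more virtual memory than they use, so set the cap generously; the resource module doesn't exist on Windows, and macOS doesn't honor this limit the same way.

```python
import resource


def cap_address_space(max_bytes: int) -> tuple[int, int]:
    """Set this process's soft RLIMIT_AS to max_bytes; return the previous (soft, hard).

    Once the cap is hit, allocations fail and Python raises MemoryError
    instead of the process growing until the OS kills it.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_AS)
    # An unprivileged process can't raise its soft limit above the hard limit.
    if hard != resource.RLIM_INFINITY:
        max_bytes = min(max_bytes, hard)
    resource.setrlimit(resource.RLIMIT_AS, (max_bytes, hard))
    return soft, hard


# In the first cell of a notebook, e.g. on a 16 GB machine (numbers are illustrative):
# previous = cap_address_space(12 * 1024**3)
# ...later, a too-large allocation raises MemoryError instead of killing the kernel:
# try:
#     buffer = bytearray(20 * 1024**3)
# except MemoryError:
#     print("allocation refused; kernel still alive")
# resource.setrlimit(resource.RLIMIT_AS, previous)  # undo the cap
```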

Each one requires different survival strategies, which we'll cover in the tools section.