Here's what nobody tells you: most AWS AI deployments are security disasters waiting to happen. Wiz Research found some nasty cross-tenant vulnerabilities in 2024, including LLM hijacking scenarios in real AWS environments. From what I've seen auditing production environments, easily 90%+ of SageMaker deployments are running with overprivileged execution roles - because copying examples from AWS docs is easier than understanding IAM.

The Three Security Catastrophes That Will Ruin Your Day

1. IAM Permission Hell (90% of Breaches Start Here)

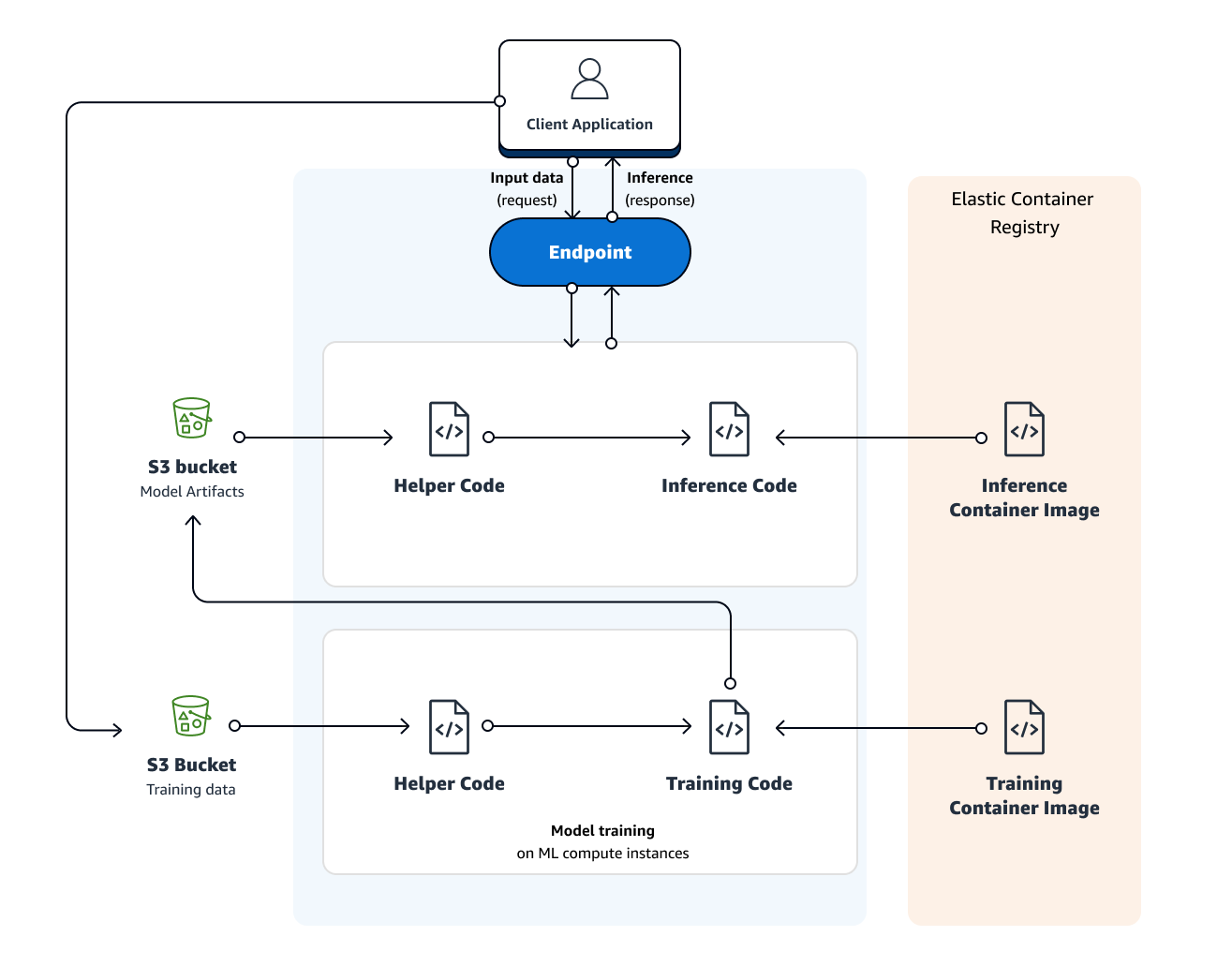

Most developers copy-paste the SageMaker execution role from AWS examples, which grants AmazonSageMakerFullAccess - essentially God mode for your ML environment. This policy allows:

- Broad S3 read/write/delete on any bucket or object with "sagemaker" in its name, which is usually exactly where the training data lives

- CloudWatch log creation and reading (including sensitive debug info)

- ECR repository access for container images

- VPC configuration changes

- KMS key usage for encryption/decryption

War story: Had this one company where their "ML developer" role basically had god mode because SageMaker kept throwing AccessDeniedException errors and they got tired of debugging IAM policies. Their training data was full of customer PII, proprietary algorithms in the model artifacts, and any dickhead with a compromised laptop could access everything. Took them 3 months and two security consultants to unfuck it because they had to audit every single permission and rebuild their entire RBAC system from scratch using permission boundaries and AWS Organizations SCPs. Meanwhile, their models kept failing in production because nobody knew which permissions were actually required vs just convenient. They had to maintain two separate environments - one for "getting shit done" and another for compliance theater.
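If you want to know which permissions are "required vs just convenient", start by writing down the narrow version and linting whatever is deployed today. Here's a minimal sketch of both halves. The bucket name, prefix, and both function names are made up for illustration, and the two S3 actions are just an example of a scoped grant, not the full set a real training job needs.

```python
# Hypothetical names for illustration; substitute your own bucket and prefix.
TRAINING_BUCKET = "example-ml-training-data"
TRAINING_PREFIX = "fraud-model/*"


def scoped_training_policy(bucket: str, prefix: str) -> dict:
    """Build an identity policy limited to reading one bucket prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadTrainingData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}",
            },
            {
                "Sid": "ListTrainingBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
        ],
    }


def find_wildcards(policy: dict) -> list[str]:
    """Return findings for Allow statements with wildcard actions or resources."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        label = stmt.get("Sid", f"statement {i}")
        for action in actions:
            if action == "*" or action.endswith(":*"):
                findings.append(f"{label}: wildcard action {action}")
        if "*" in resources:
            findings.append(f"{label}: wildcard resource")
    return findings
```

Run `find_wildcards` over every policy document attached to your execution roles and treat a non-empty result as a ticket, not a suggestion. It only catches the lazy cases (`s3:*`, `Resource: "*"`), but those are the ones that burn people.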

2. VPC Misconfigurations That Expose Everything

Amazon SageMaker VPC configuration is where good intentions meet terrible execution. Most organizations either:

- Skip VPC entirely (training jobs run in AWS-managed infrastructure with internet access)

- Configure VPC incorrectly (NAT gateway misconfiguration exposes internal resources)

- Grant excessive security group permissions (0.0.0.0/0 access to debugging ports)

Real incident that still gives me nightmares: Had a client who opened port 8888 for Jupyter notebooks "just for a quick demo" and forgot about it for like 6 months. Some asshole found it through Shodan (because of course they did), waltzed right in, and grabbed their entire fraud detection model plus training data stuffed with financial records. Cost them around $1.8 million in regulatory fines plus another year of legal bullshit. Took 4 months to unfuck because nobody documented what they were actually using, and turns out SageMaker notebook instances don't log access by default unless you configure CloudTrail data events. Plus their security team had to manually comb through 6 months of CloudWatch logs to figure out what data got accessed - because when you don't have proper logging, you're basically flying blind. Fucking nightmare.
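That forgotten port 8888 is trivially detectable before Shodan finds it. A rough sketch of the check, assuming each `security_group` dict has the shape of one entry in the `SecurityGroups` list returned by the EC2 `DescribeSecurityGroups` API. The function name `open_to_world` is mine, and fetching the groups is left out on purpose.

```python
def open_to_world(security_group: dict, port: int = 8888) -> bool:
    """True if any ingress rule exposes `port` to 0.0.0.0/0 or ::/0."""
    for rule in security_group.get("IpPermissions", []):
        protocol = rule.get("IpProtocol")
        if protocol == "-1":
            covers_port = True  # "-1" means all protocols and all ports
        elif protocol == "tcp":
            covers_port = rule.get("FromPort", 0) <= port <= rule.get("ToPort", 65535)
        else:
            covers_port = False
        if not covers_port:
            continue
        v4 = any(r.get("CidrIp") == "0.0.0.0/0" for r in rule.get("IpRanges", []))
        v6 = any(r.get("CidrIpv6") == "::/0" for r in rule.get("Ipv6Ranges", []))
        if v4 or v6:
            return True
    return False
```

Run it on a schedule against every group attached to notebook instances and training subnets. A "quick demo" rule that survives more than a day should page someone.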

3. Encryption Keys Managed by Toddlers

AWS KMS integration with AI services is effectively mandatory for compliance, but it is usually implemented poorly. Common mistakes:

- Using AWS-managed keys instead of customer-managed keys (no rotation control)

- Sharing KMS keys across environments (dev keys used in production)

- Granting overly broad KMS permissions (kms:* instead of specific actions)

- No audit trail for key usage
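Two of those mistakes are easy to catch in code. The sketch below builds an identity-policy statement pinned to one key with a short action list, and flags keys that show up in more than one environment. The action list is illustrative (check what your jobs actually call), and the function names and the `usage` mapping are assumptions for the example.

```python
from collections import defaultdict

# Illustrative action list; verify what your own jobs actually call.
ALLOWED_KMS_ACTIONS = ["kms:Decrypt", "kms:GenerateDataKey", "kms:DescribeKey"]


def scoped_kms_statement(key_arn: str) -> dict:
    """Allow a short list of KMS actions on exactly one key."""
    return {
        "Sid": "UseOneKey",
        "Effect": "Allow",
        "Action": list(ALLOWED_KMS_ACTIONS),
        "Resource": key_arn,
    }


def keys_shared_across_envs(usage: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each key ARN used by more than one environment to those environments."""
    envs_by_key = defaultdict(set)
    for env, key_arns in usage.items():
        for arn in key_arns:
            envs_by_key[arn].add(env)
    return {arn: sorted(envs) for arn, envs in envs_by_key.items() if len(envs) > 1}
```

Feed `keys_shared_across_envs` an inventory like `{"dev": {...}, "prod": {...}}` built from your own resource tags or IaC state. Anything it returns is a dev key doing production work.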

The Vulnerability Research That Should Scare You

In February 2024, Aqua Security researchers identified critical vulnerabilities in six AWS services including SageMaker and other AI-adjacent services like Amazon EMR and AWS Glue. The vulnerabilities included:

- Remote Code Execution: Attackers could execute arbitrary code in SageMaker environments

- Full Service Takeover: Complete control over AI training and inference infrastructure

- AI Module Manipulation: Ability to modify ML models and training processes

- Data Exfiltration: Access to training datasets and model artifacts

AWS patched these specific vulnerabilities, but the research highlighted systemic issues in how AWS AI services handle authentication, authorization, and network isolation.

Model Security: The Blindspot Everyone Ignores

Model Poisoning and Theft: Your trained models are intellectual property that can be worth millions, yet they routinely end up in S3 buckets with overly permissive, sometimes public, read access. Amazon SageMaker Model Registry provides versioning and approval workflows, but it doesn't stop an authorized user from downloading a model and walking off with it.
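The public-bucket half of that problem has a cheap guardrail. This sketch assumes `config` is the `PublicAccessBlockConfiguration` dict that the S3 `GetPublicAccessBlock` API returns for a bucket; the helper name is made up, and the actual API call is left to you.

```python
REQUIRED_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def missing_public_access_blocks(config: dict) -> list[str]:
    """Return the Block Public Access flags that are absent or switched off."""
    return [flag for flag in REQUIRED_FLAGS if not config.get(flag, False)]
```

An empty list means all four flags are on for that bucket. It says nothing about insiders with legitimate access, which is the harder half of the problem.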

Training Data Contamination: If attackers can inject malicious data into your training pipeline, they can poison your models. This is especially dangerous for Amazon Bedrock custom fine-tuning, where contaminated training data compromises the customized model you then ship to production. Implement data validation pipelines, and use AWS Glue DataBrew profiling and data quality rules to flag anomalies before training.
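A validation gate doesn't have to be fancy to be useful. Here's a bare-bones sketch that rejects records with the wrong type or an out-of-range value before they reach training. The schema format (field name mapped to type, minimum, maximum) and the field names in the usage note are invented for the example; a real pipeline would add distribution checks on top.

```python
def validate_records(
    records: list[dict], schema: dict
) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split records into (accepted, rejected-with-reason) using type and range rules."""
    accepted, rejected = [], []
    for record in records:
        reason = None
        for field, (expected_type, low, high) in schema.items():
            value = record.get(field)
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, bool) or not isinstance(value, expected_type):
                reason = f"{field}: expected {expected_type.__name__}"
            elif not low <= value <= high:
                reason = f"{field}: {value} outside [{low}, {high}]"
            if reason:
                break
        if reason:
            rejected.append((record, reason))
        else:
            accepted.append(record)
    return accepted, rejected
```

With a schema like `{"amount": (float, 0.0, 10000.0), "label": (int, 0, 1)}`, anything missing a field, carrying a string where a number belongs, or sitting outside the range gets quarantined with a reason you can log. Watch the rejection rate: a sudden spike is its own alarm.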

Inference Time Attacks: Production inference endpoints can leak training data through carefully crafted queries. Amazon SageMaker endpoints need rate limiting, input validation, and monitoring to prevent extraction attacks. Use Amazon API Gateway with custom authorizers and AWS WAF for additional protection.
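Extraction attacks need a lot of queries, so per-client rate limiting is the first thing to put in front of an endpoint. Below is a minimal token-bucket sketch of the idea. It's a generic in-process limiter, not how API Gateway or WAF implement throttling, and the class name and parameters are my own.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the caller should be throttled."""
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Keep one bucket per API key or caller identity, and log every `False`. A client that keeps slamming into the limit with slightly varied inputs is exactly the pattern you're looking for.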

The Compliance Nightmare: GDPR, HIPAA, and SOC2 Reality

GDPR Article 25 (Data Protection by Design and by Default): AWS AI services can be GDPR-compliant, but not by default. You must:

- Implement data minimization in training pipelines

- Enable automatic data deletion after retention periods

- Provide data subject access request capabilities

- Document all data processing activities
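For the "automatic data deletion" item, S3 lifecycle rules do most of the work if your training data lives under predictable prefixes. The sketch below only builds the configuration dict, in the shape the S3 `PutBucketLifecycleConfiguration` API accepts. The helper name, rule ID format, and the prefix in the usage note are assumptions, and applying it is up to you.

```python
def retention_lifecycle(prefix: str, retention_days: int) -> dict:
    """Build a lifecycle configuration that expires objects under `prefix`."""
    if retention_days < 1:
        raise ValueError("retention_days must be at least 1")
    return {
        "Rules": [
            {
                "ID": f"expire-{prefix.strip('/').replace('/', '-')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Current object versions are deleted after the retention period.
                "Expiration": {"Days": retention_days},
                # On versioned buckets, old versions must expire too,
                # or the "deleted" data is still sitting there.
                "NoncurrentVersionExpiration": {"NoncurrentDays": retention_days},
            }
        ]
    }
```

Something like `retention_lifecycle("training/customer-events/", 365)` gives you a rule you can review in a pull request. Remember that copies in feature stores, notebooks, and model artifacts need their own deletion story.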

HIPAA Business Associate Agreements: Amazon Bedrock is a HIPAA-eligible service, but that only helps if you have a BAA in place with AWS and configure it correctly:

- Enable encryption at rest and in transit

- Use VPC endpoints to avoid internet routing

- Implement audit logging for all PHI access

- Run regular access reviews and permission audits

SOC2 Type II Controls: AI workloads require additional controls beyond standard AWS SOC2:

- Model drift monitoring and alerting

- Training data lineage and provenance tracking

- Automated vulnerability scanning of ML containers

- Incident response procedures for model failures
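"Model drift monitoring" sounds abstract until an auditor asks how you measure it. One common option is the Population Stability Index (PSI) between a baseline sample and recent production values of a feature or score. To be clear, PSI is my pick for illustration, not something SOC 2 or AWS prescribes, and the binning scheme and the 1e-6 floor below are assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Identical distributions score 0, and the score grows as production data moves away from the baseline. Pick an alert threshold, write it down, and keep the alert history: that paper trail is what the auditor actually wants to see.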

The harsh reality: most organizations fail their first compliance audit because they treat AI workloads like traditional applications. AI systems require specialized controls that auditors are just starting to understand.