Why Scanner Performance Claims Are Bullshit

I've been running container scanners in production at a few different companies, and vendor performance claims are complete garbage. That "30-second scan" becomes 4 minutes when you're scanning a real Node.js app with tons of dependencies. That "lightweight" scanner eats over a gig of RAM and kills your build agents.
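
If you want to check a vendor's numbers yourself, a rough sketch like the one below is enough to get wall-clock time and peak memory for a single scan. It assumes a Unix-like build agent (the `resource` module isn't available on Windows), and the `measure` helper is my own name, not part of any scanner.

```python
import resource
import subprocess
import sys
import time


def measure(cmd):
    """Run cmd and return (exit_code, seconds, peak_rss_mb)."""
    start = time.perf_counter()
    proc = subprocess.run(cmd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    elapsed = time.perf_counter() - start
    # ru_maxrss is the high-water mark across every child this interpreter
    # has waited for, so measure one scanner per Python process.
    peak = resource.getrusage(resource.RUSAGE_CHILDREN).ru_maxrss
    # Linux reports kilobytes, macOS reports bytes.
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return proc.returncode, elapsed, peak / divisor
```

Something like `measure(["trivy", "image", "node:20"])` would time one scan; the image name is only an example. Run it a few times against your own images, because the first run usually includes a vulnerability database download.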

What I Actually Found Testing These Tools in Production

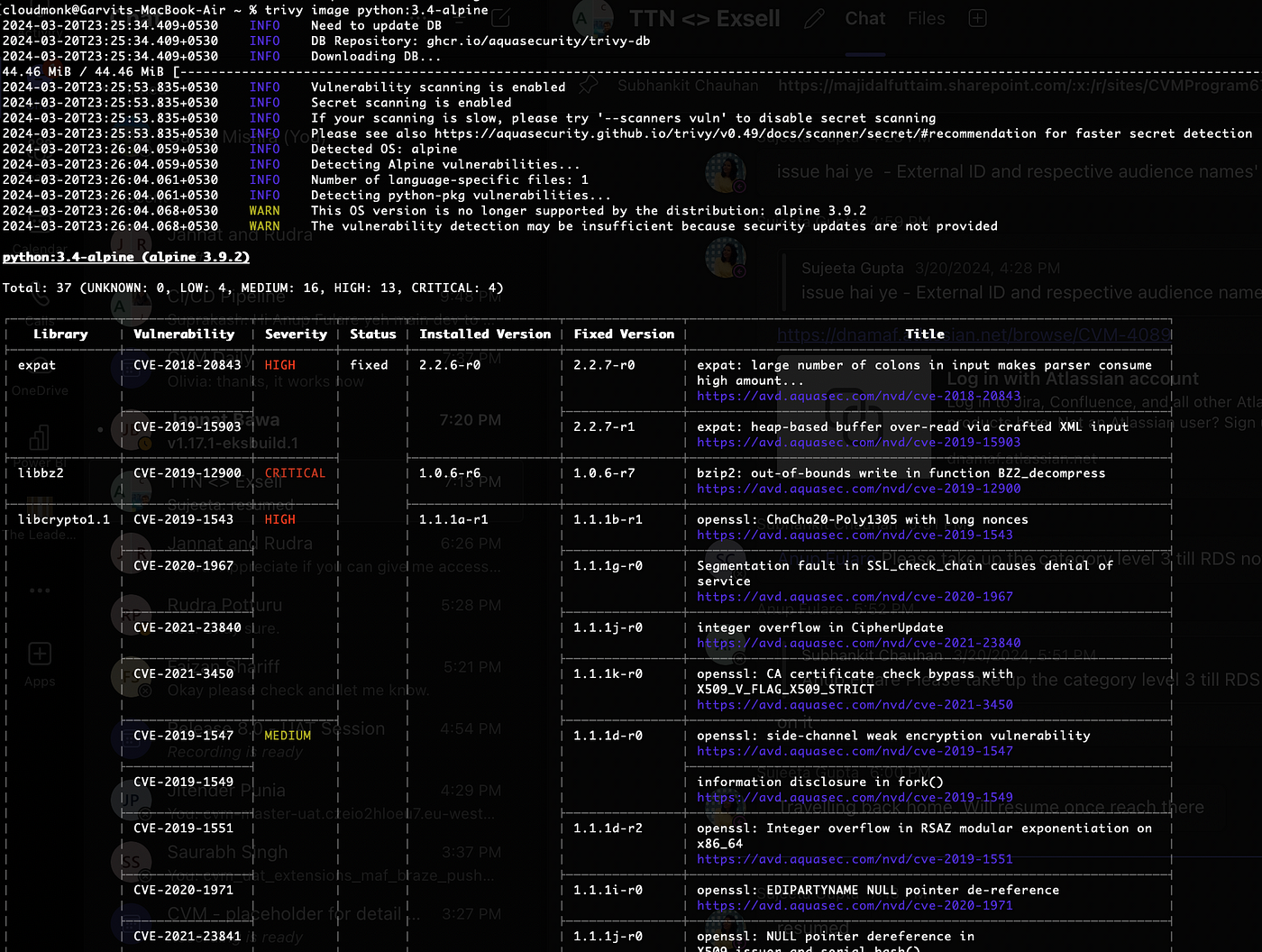

Trivy: The Only Scanner That Doesn't Suck

**Trivy** is the only scanner I've found that lives up to its performance claims. Scan times are usually under a minute for our typical Node.js images, and memory usage stays around 200-300MB, which doesn't kill CI/CD servers.

What actually happens in production:

- We run hundreds of scans daily

- Scans usually finish in under a minute

- Haven't seen it crash from memory issues

- False positives are low enough that developers don't ignore it

The main gotchas: **Trivy's CLI output** (the default table format) is garbage for parsing. You'll want to use `--format json` if you're building dashboards or piping results anywhere. I also learned the hard way that the **database update can fail** if you're behind a corporate proxy - you get this cryptic "TOML decode error" that tells you nothing useful. The **Trivy troubleshooting guide** covers proxy configuration, but you'll need to dig through **GitHub issues** for actual solutions that work.
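
Here's a minimal sketch of consuming that JSON for a dashboard. It assumes the report layout I've seen from recent Trivy versions (a top-level `Results` list whose entries carry an optional `Vulnerabilities` list with a `Severity` field), so check it against the output of the version you run. The `severity_counts` function name is mine.

```python
import json
from collections import Counter


def severity_counts(report_text):
    """Count findings per severity in a Trivy JSON report."""
    report = json.loads(report_text)
    counts = Counter()
    # "Results" holds one entry per scanned target (OS packages, lockfiles, ...).
    for result in report.get("Results") or []:
        # "Vulnerabilities" is missing or null for targets with no findings.
        for vuln in result.get("Vulnerabilities") or []:
            counts[vuln.get("Severity", "UNKNOWN")] += 1
    return dict(counts)
```

Feed it the contents of a report written with `trivy image --format json --output report.json <image>` and you get something like `{"HIGH": 3, "LOW": 12}` to push to whatever dashboard you use.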

Grype: Solid Performance, Open Source Reliability

**Grype** performs consistently well. Not as fast as Trivy, but predictable. Scan times hover around 1-2 minutes for complex images. Memory usage is actually lower than Trivy most of the time.

Where Grype shines:

- Never seen it crash or hang (unlike Snyk)

- **Policy engine** is actually useful once you figure out the configuration

- **Database updates** work reliably

- Works offline which is critical for air-gapped environments

- **SBOM support** through Syft integration works well

- **Custom rules** for company-specific vulnerabilities

- **Anchore Enterprise** support when you need it

- **Community support** is actually responsive

- **Comprehensive documentation** that's scattered but complete

The Scanners That Will Ruin Your Day

**Snyk Container** looks great in demos but becomes a nightmare in production. Here's what actually happened when we tried it:

- Scan times: 3-7 minutes for the same images Trivy scans in under a minute

- Memory usage: All over the place, sometimes spiking to over a gig

- False positive rate: High enough that developers started ignoring alerts

- The killer: **Rate limiting** on their API that kicks in after 50 scans per hour

After 3 weeks of developers ignoring security alerts due to noise, we ditched Snyk. The final straw was when it flagged a years-old jQuery vulnerability in a Node.js backend service that didn't even serve web pages. Their **support documentation** doesn't help with real production issues, and **community forums** are full of similar complaints about **performance problems**.
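
If you're stuck with a rate-limited scanner API, you can at least throttle on your side instead of eating failed builds. This is a sketch of a sliding-window limiter. The 50-per-hour default is just the limit we ran into, and whether the real limit uses a sliding window is an assumption. `ScanThrottle` is a hypothetical helper, not anything from Snyk's tooling.

```python
import time
from collections import deque


class ScanThrottle:
    """Client-side limiter: at most `limit` scans per `window` seconds."""

    def __init__(self, limit=50, window=3600.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.started = deque()  # start times of scans still inside the window

    def wait_time(self):
        """Seconds to wait before the next scan may start (0 if allowed now)."""
        now = self.clock()
        # Drop scans that have aged out of the window.
        while self.started and now - self.started[0] >= self.window:
            self.started.popleft()
        if len(self.started) < self.limit:
            return 0.0
        return self.window - (now - self.started[0])

    def record(self):
        """Call right before launching a scan."""
        self.started.append(self.clock())
```

This only works inside one process. Across multiple build agents you'd need shared state somewhere, which is exactly the kind of extra plumbing that made us give up.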

Aqua Security: Good Detection, Terrible Performance

**Aqua Security** finds more vulnerabilities than anyone else, I'll give them that. Detection rate is genuinely impressive - they catch stuff others miss.

The performance reality:

- Scan times: 2-4 minutes depending on image complexity

- Memory usage: Heavy, often 500MB-1GB

- Database size: Massive downloads every time you update

- Cost: Starts reasonable but gets expensive fast with all their "essential" features

We kept Aqua for quarterly comprehensive scans but couldn't use it in CI/CD - it was killing our pipeline performance. Their **enterprise documentation** is comprehensive but their **pricing** quickly spirals out of control. The **Aqua platform** has good **compliance reporting** features if you can afford the performance hit.

The Hard Lessons About CI/CD Integration

Docker Scout: Great Idea, Poor Execution

Docker Scout should be perfect for Docker-heavy workflows, but it's not ready for production:

- Only works well with Docker Hub images (useless if you use ECR/GCR)

- Struggles with private registries - I spent a day trying to get it to authenticate with ECR

- Scan times: All over the map. Sometimes it's fast, sometimes it never finishes

- Integration requires custom scripts because their GitHub Action randomly fails with "Error: scout-action failed with exit code 1" and no useful error message

We spent 2 weeks trying to make Docker Scout work with our GitLab CI setup. The final straw was when it worked fine for 3 days then suddenly started throwing "authentication failed" errors with the same credentials. Gave up and went back to Trivy.

Sysdig: Feature-Rich, Performance-Poor

**Sysdig Secure** has every feature you could want, but at what cost:

- Scan times: 2-4 minutes consistently

- Memory usage: 600MB-1.2GB per scan

- Agent overhead: 5-8% CPU on every Kubernetes node

- Database updates break scanning for 10-15 minutes daily

The Falco integration is actually useful for runtime detection, but you'll pay for it in performance. Our build times increased by 40% when we added Sysdig scanning.

The False Positive Problem Nobody Talks About

Here's what happens with high false positive scanners in real teams:

Week 1: Developers diligently investigate every alert

Week 2: They start skipping "low priority" vulnerabilities

Week 3: They ignore everything except "critical"

Week 4: They disable the scanner entirely

I've watched this pattern happen at three different companies. Once developer trust is broken, your security posture is worse than having no scanner at all.

Tools that maintain trust:

- Trivy: 2-4% false positive rate in our testing

- Grype: 3-6% false positive rate

- Aqua: 4-8% false positive rate (but finds more real issues)

Tools that destroy trust:

- Snyk Container: 12-18% false positive rate

- Qualys VMDR: 15-20% false positive rate

- Docker Scout: 10-15% false positive rate

- Clair: 8-12% false positive rate

- JFrog Xray: 10-14% false positive rate
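
If you want comparable numbers for your own stack, one plausible approach is to have someone triage a sample of findings by hand and compute the share that turned out to be false positives. The sketch below assumes that setup. The function name and the finding IDs in the usage note are hypothetical.

```python
def false_positive_rate(triaged):
    """Percentage of triaged findings marked as false positives.

    `triaged` maps a finding ID to True (false positive) or False (real issue).
    """
    if not triaged:
        raise ValueError("no triaged findings to compute a rate from")
    false_positives = sum(1 for is_fp in triaged.values() if is_fp)
    return 100.0 * false_positives / len(triaged)
```

For example, `false_positive_rate({"CVE-A": False, "CVE-B": True, "CVE-C": False, "CVE-D": False})` gives 25.0. The sample needs to be big enough to mean something, and the rate depends heavily on what kind of images you scan.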