Here's the thing nobody tells you: you never plan to have Jenkins, GitHub Actions, and GitLab CI all running in your company. It just fucking happens.

Maybe you acquired a startup that lived on GitHub Actions. Maybe your compliance team mandated GitLab for security scanning after that one pen test found seventeen critical CVEs. Maybe Jenkins has been running your mainframe deployments since 2015 and nobody wants to touch it because it works and Bob, who set it up, retired last year (taking all the tribal knowledge with him).

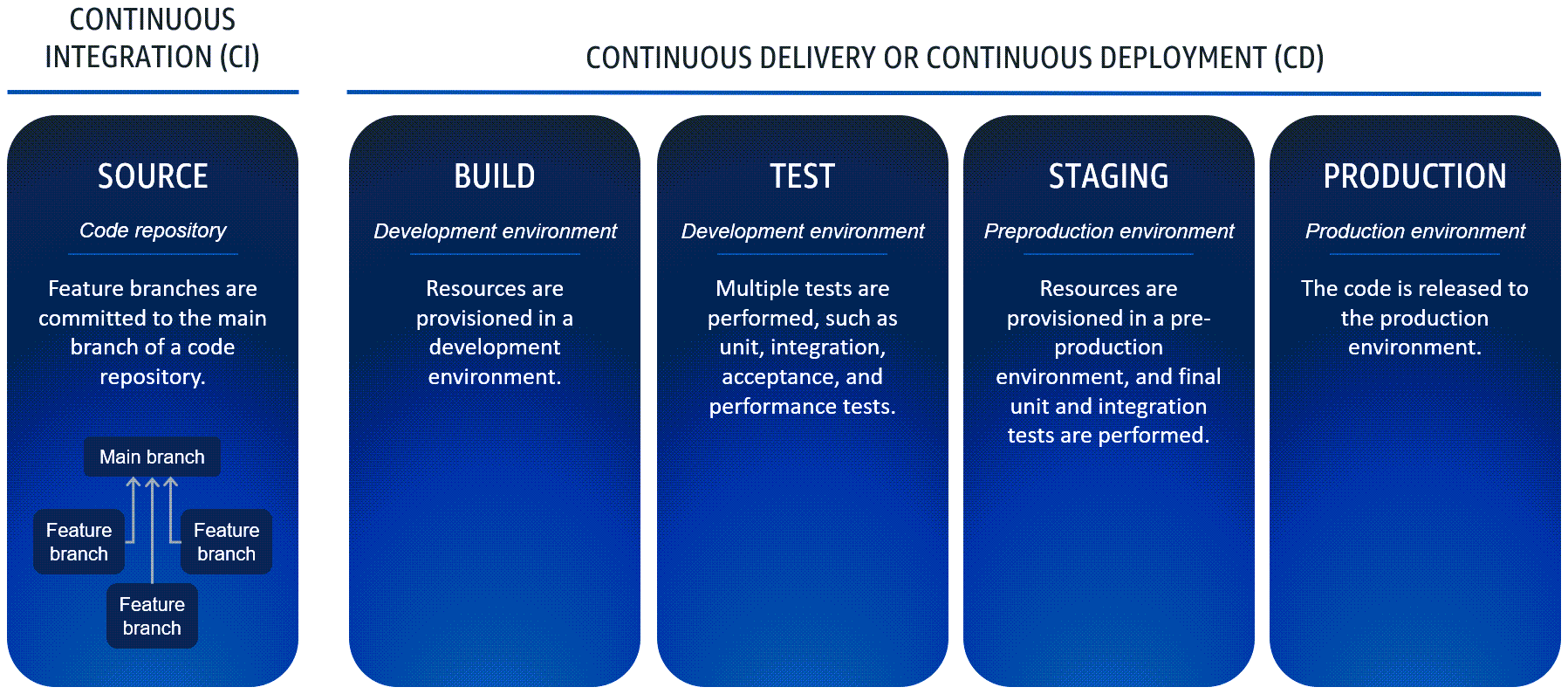

Now you're stuck coordinating deployments across three different platforms, each with its own config syntax from hell (Groovy for Jenkins, YAML for the other two), and every release feels like a game of CI/CD Russian roulette.

The Real Multi-Platform Nightmare

Ever tried to coordinate a deployment that needs Jenkins to build the JAR, GitHub Actions to run the frontend tests, and GitLab to scan for vulnerabilities before anything goes to prod? It's like herding cats while they're on fire.

Here's what actually happens in most companies:

- Jenkins is running legacy builds that nobody understands but everyone's afraid to migrate

- GitHub Actions works great until you need custom runners, then prepare for `Error: Unable to create ephemeral runner registration token` at 2AM

- GitLab CI has decent security scanning, but the runners randomly disappear on weekends

The worst part? Each platform thinks it's the center of the universe. Jenkins doesn't give a shit about your GitHub Actions workflow status. GitHub Actions has no idea GitLab is scanning the same codebase. GitLab's security gates can block a deployment that Jenkins already approved.

What Nobody Tells You About "Orchestration"

All those architecture diagrams with neat arrows and event buses? That's not how this works in reality.

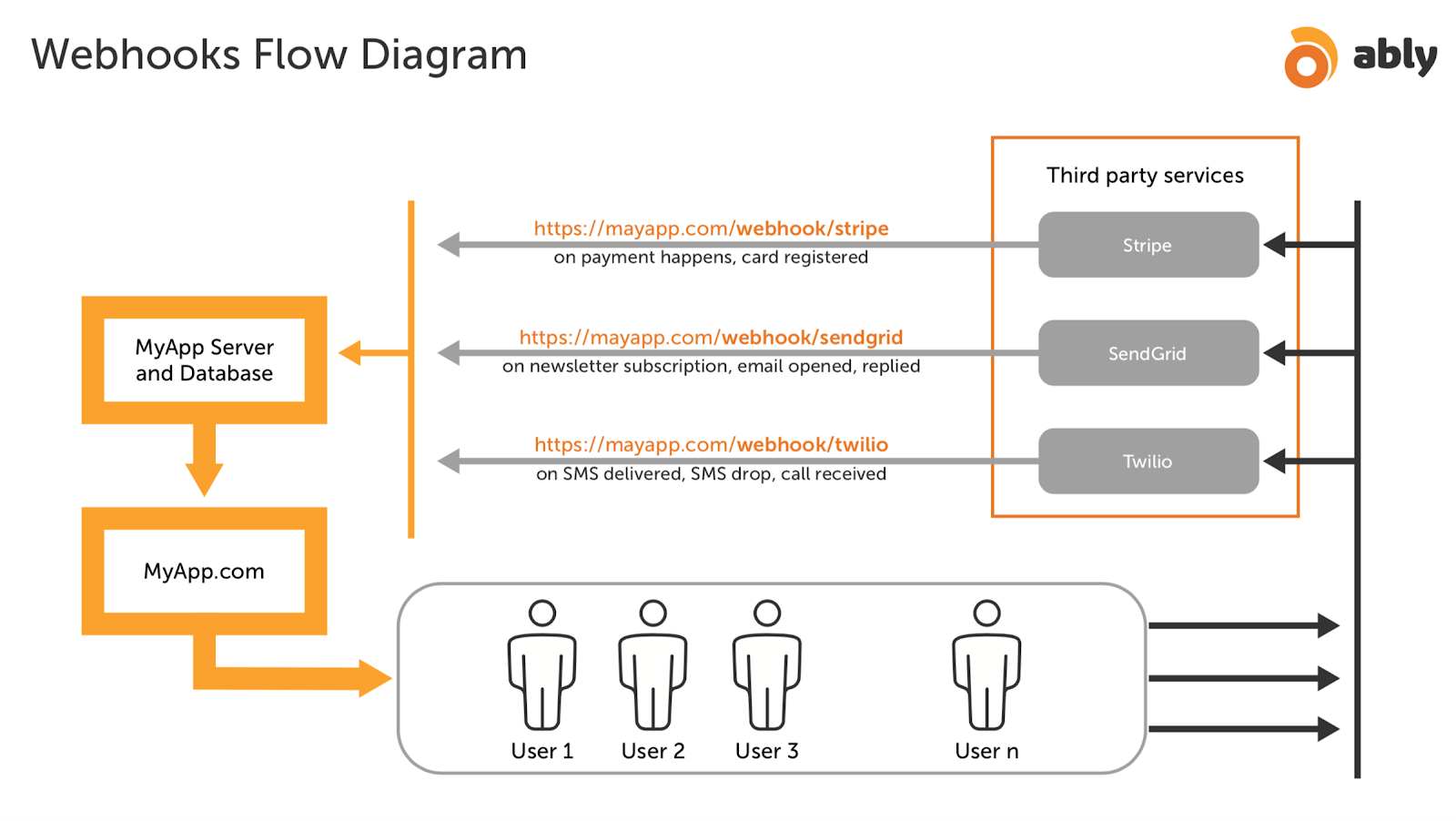

You'll spend the first month setting up webhooks that randomly fail. The second month debugging why EventBridge costs more than the rest of your AWS bill. The third month explaining to management why the "simple integration" is taking six months and why you need to hire two more platform engineers.

Real timeline expectations: This isn't a weekend project. Budget 3-6 months and expect everything to break twice. Maybe three times if you're using custom Jenkins plugins and Oracle databases.

What Actually Works (From Someone Who's Done This)

Option 1: EventBridge + Lambda (If You're on AWS)

EventBridge is dead simple and AWS handles the scaling. Each CI platform fires events when builds complete. Lambda functions coordinate the next steps. Works great until someone deploys a chatty webhook and your Lambda bill explodes.
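
Here's a minimal sketch of what the coordinating Lambda can look like, assuming each platform's webhook has already been forwarded onto an EventBridge bus as a custom event. EventBridge puts your payload under `detail`; the fields inside it (`platform`, `status`, `commit`) and the stage order are hypothetical, so map them to whatever your webhook bridge actually sends.

```python
from typing import Optional

# Hypothetical stage order: Jenkins builds the JAR, GitHub Actions runs the
# frontend tests, GitLab scans, then the deploy step runs.
PIPELINE = ["jenkins", "github-actions", "gitlab", "deploy"]


def next_step(platform: str, status: str) -> Optional[str]:
    """Return the next stage to trigger, or None if the chain should stop."""
    if status != "success":
        return None  # any failure halts the chain; alerting is up to you
    try:
        idx = PIPELINE.index(platform)
    except ValueError:
        return None  # unknown source: ignore it rather than guess
    return PIPELINE[idx + 1] if idx + 1 < len(PIPELINE) else None


def lambda_handler(event, context):
    # EventBridge delivers the custom event payload under "detail".
    detail = event.get("detail", {})
    step = next_step(detail.get("platform", ""), detail.get("status", ""))
    # A real function would trigger `step` here (API call, pipeline trigger);
    # this sketch only returns the decision so it can be tested locally.
    return {"commit": detail.get("commit"), "next": step}
```

Keeping the decision in a pure function (`next_step`) means you can unit-test the routing without touching AWS at all.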

Cost reality: Budget $500-2000/month depending on your build volume. Jenkins webhook spam will surprise you - we hit 250k events in one day from a misconfigured build trigger. Don't try to optimize EventBridge costs on day one - I wasted 2 weeks on this while production burned.
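
The cheapest defense against webhook spam is dropping repeats before they fan out into more Lambda work. A minimal sketch, assuming a duplicate is the same `(platform, build_id, status)` seen again within a time window; the class name and window are hypothetical. It's in-memory only, so each Lambda container keeps its own copy and there's no eviction; in production you'd back it with a shared store (for example a DynamoDB conditional write).

```python
import time
from typing import Dict, Optional, Tuple


class EventDeduper:
    """Drop repeat build events seen within `window_s` seconds (in-memory sketch)."""

    def __init__(self, window_s: float = 300.0):
        self.window_s = window_s
        # Maps (platform, build_id, status) -> time first accepted.
        # No eviction here, so the dict grows with distinct keys.
        self._seen: Dict[Tuple[str, str, str], float] = {}

    def should_process(self, platform: str, build_id: str, status: str,
                       now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        key = (platform, build_id, status)
        last = self._seen.get(key)
        if last is not None and now - last < self.window_s:
            return False  # duplicate inside the window: skip downstream work
        self._seen[key] = now
        return True
```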

Option 2: Simple Database Coordination

Sometimes the dumbest solution is the best. A shared Postgres table tracking deployment state across platforms. Not fancy, but it works when EventBridge is down.

Gotcha: Race conditions are real. Use proper database locks or you'll deploy half-finished builds to production. Ask me how I know.
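
One way to avoid that race, sketched under assumptions: a compare-and-set `UPDATE` that only advances a row if it's still in the state the caller expects, so two platforms can't both claim the same transition. The table name, columns, and state names are hypothetical, and `sqlite3` stands in for Postgres so the sketch runs anywhere. The SQL is plain enough to work on Postgres too, though the placeholders differ (`%s` with psycopg). On Postgres you could also take a row lock with `SELECT ... FOR UPDATE` inside a transaction.

```python
import sqlite3

# Hypothetical states: built -> tested -> scanned -> deployed
SCHEMA = """
CREATE TABLE IF NOT EXISTS deployments (
    commit_sha TEXT PRIMARY KEY,
    state      TEXT NOT NULL
)
"""


def register_build(conn: sqlite3.Connection, sha: str) -> None:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO deployments (commit_sha, state) VALUES (?, 'built')", (sha,)
        )


def advance(conn: sqlite3.Connection, sha: str, expected: str, new: str) -> bool:
    """Move `sha` from `expected` to `new` only if nobody else moved it first."""
    with conn:
        cur = conn.execute(
            "UPDATE deployments SET state = ? WHERE commit_sha = ? AND state = ?",
            (new, sha, expected),
        )
    # rowcount == 0 means another platform already advanced the row (or it
    # doesn't exist): this caller lost the race and must not deploy.
    return cur.rowcount == 1
```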

Option 3: Accept the Chaos

Run them separately. Use monitoring to catch when things break. Deploy to different environments and coordinate manually for critical releases.
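
If you go this route, the monitoring doesn't need to be clever. A minimal sketch of the check that matters most, assuming you already collect each platform's latest status for a commit somewhere (how you fetch them is up to you; the platform keys are hypothetical): flag any platform that failed or never reported.

```python
from typing import Dict, List

EXPECTED = ("jenkins", "github-actions", "gitlab")  # hypothetical platform keys


def find_problems(statuses: Dict[str, str]) -> List[str]:
    """Return human-readable alerts for one commit's CI statuses."""
    problems = []
    for platform in EXPECTED:
        status = statuses.get(platform)
        if status is None:
            problems.append(f"{platform}: no result reported")
        elif status != "success":
            problems.append(f"{platform}: {status}")
    return problems
```

The "no result reported" case is the one that bites: a GitLab runner that vanished doesn't fail, it just goes quiet.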

Unpopular opinion: This might be better than spending six months building orchestration that breaks every time someone updates a Jenkins plugin.

The Three Platforms, Honestly Assessed

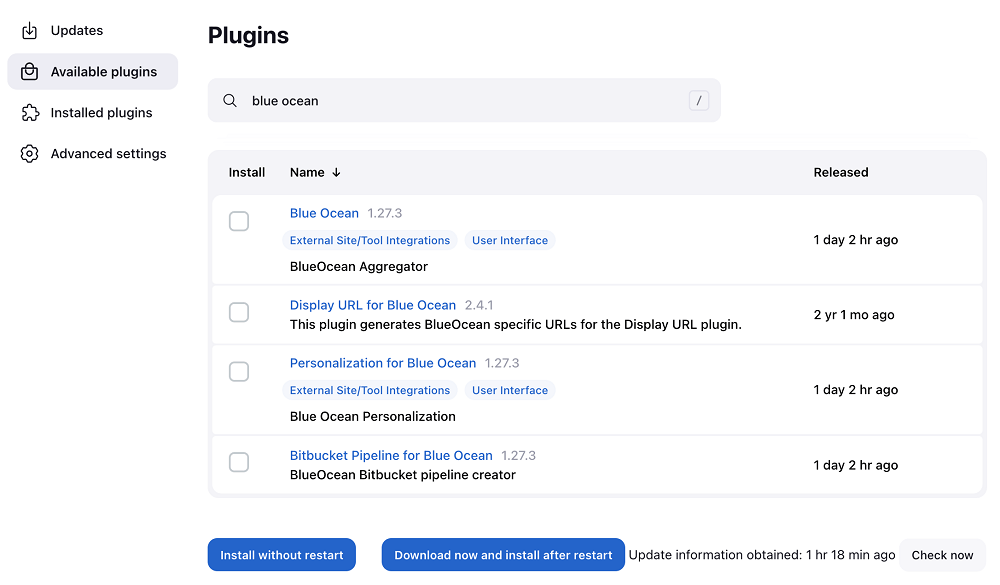

Jenkins: Infinitely customizable, which means infinitely broken. Great for complex enterprise workflows. Terrible for everything else. The UI looks like it's from 2005 because it is.

GitHub Actions: Amazing developer experience until you need anything beyond basic CI. Custom runners are expensive and fragile. Matrix builds randomly fail for no reason.

GitLab CI: Security scanning works great. Everything else is hit or miss. Runners have a habit of disappearing during deployments. The YAML debugging experience makes you want to quit programming.

Deployment Coordination That Won't Make You Cry

- Pick a deployment trigger source - Usually GitLab, since security scanning runs last

- Use deployment slots - Staging, canary, production. Each platform owns specific slots

- Shared artifact storage - S3 or Artifactory. Stop rebuilding the same JAR three times

- Circuit breakers - When Jenkins fails (it will), GitHub Actions can still deploy
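
The slot ownership and shared-artifact rules above boil down to two lookups. A rough sketch, assuming a hypothetical slot-to-platform mapping and an artifact path keyed by commit SHA; the `store` set stands in for an S3 bucket or Artifactory repo listing.

```python
from typing import Dict, Set

# Hypothetical ownership: which platform may deploy to which slot.
SLOT_OWNERS: Dict[str, str] = {
    "staging": "jenkins",
    "canary": "github-actions",
    "production": "gitlab",  # GitLab triggers prod because its scan runs last
}


def can_deploy(platform: str, slot: str) -> bool:
    return SLOT_OWNERS.get(slot) == platform


def artifact_key(service: str, commit_sha: str) -> str:
    # Keyed by commit so every platform ships the same JAR instead of rebuilding it.
    return f"artifacts/{service}/{commit_sha}/{service}.jar"


def needs_build(store: Set[str], service: str, commit_sha: str) -> bool:
    """`store` stands in for an S3 bucket or Artifactory repo listing."""
    return artifact_key(service, commit_sha) not in store
```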

The secret: Don't try to make them work together perfectly. Make them fail gracefully and recover quickly.
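
"Fail gracefully and recover quickly" is basically a circuit breaker. A minimal sketch, assuming Jenkins is the primary deployer and GitHub Actions the fallback (that routing, and the thresholds, are hypothetical): after `max_failures` consecutive failures the circuit opens and deploys go to the fallback; once `cooldown_s` has passed, Jenkins gets tried again, and a success closes the circuit.

```python
import time
from typing import Optional


class CircuitBreaker:
    """Open after `max_failures` consecutive failures; retry after `cooldown_s`."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 600.0):
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        if self.opened_at is None:
            return True  # closed: primary is considered healthy
        # Half-open: once the cooldown has passed, let attempts through again.
        return now - self.opened_at >= self.cooldown_s

    def record(self, ok: bool, now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        if ok:
            self.failures = 0
            self.opened_at = None  # recovered: close the circuit
        else:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = now  # (re)open and restart the cooldown


def pick_deployer(breaker: CircuitBreaker, now: Optional[float] = None) -> str:
    # Hypothetical routing: Jenkins is primary, GitHub Actions is the fallback.
    return "jenkins" if breaker.allow(now) else "github-actions"
```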

This setup took us 4 months, not the 2 weeks management promised. Budget at least $50k in AWS costs while you figure out the autoscaling. Plan for your first deployment to fail spectacularly.

But when it works, you can ship code without sacrificing your security team's requirements or migrating 200 Jenkins jobs that somehow still run your entire business.