The standard OpenAI API costs about $0.01 per 1K input tokens for GPT-4 Turbo (the original GPT-4 listed at $0.03). Enterprise tier? You're looking at minimum annual commitments of $50k, plus 3-6 months of sales calls where they refuse to tell you the actual price until you sign three NDAs.

Here's what that money actually buys you, tested in production environments that process 50M+ requests monthly:

Your Data Won't Train Their Next Model (They Promise)

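Standard API: OpenAI says it hasn't trained on API data by default since March 2023, but your prompts still sit in its logs for up to 30 days for abuse monitoring, and all you have to show an auditor is the standard terms of service. Enterprise: a contractual commitment that your data won't be used for training - they keep logs for 30 days max for abuse monitoring, then delete everything. Your legal team will still have nightmares, but at least there's paperwork.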

Reality check: Two companies I worked with got absolutely fucked when their compliance teams found contractors secretly using the standard API. The audit findings were brutal. Budget 6 months to clean up shadow AI usage before your next SOC 2 audit.

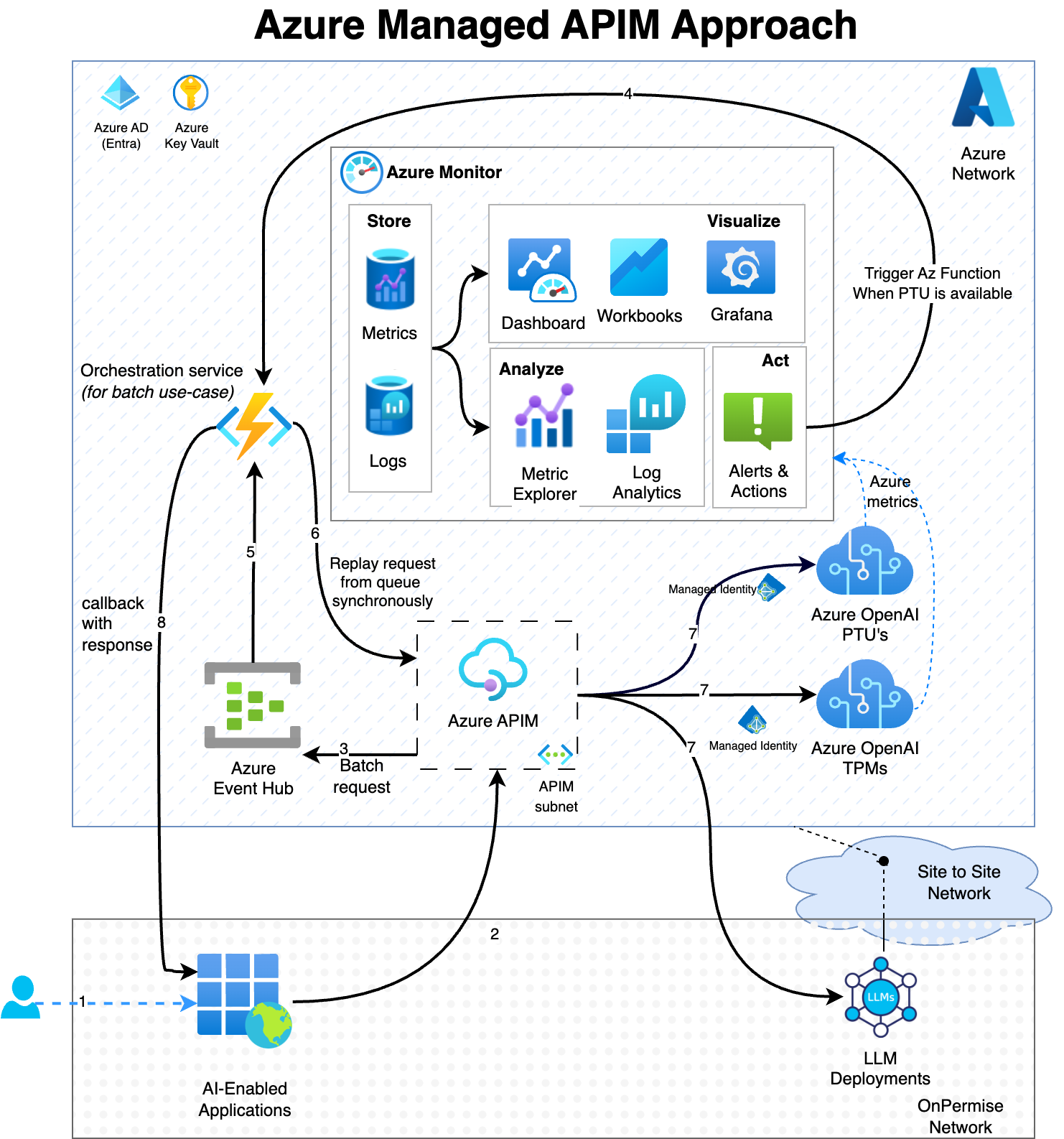

Dedicated Capacity Actually Works

Standard API on Black Friday 2023: 429 errors every 30 seconds and 15-second response times, the same kind of meltdown we saw when ChatGPT went viral and broke the internet. Enterprise Scale Tier: consistent 1.2s latency because you have dedicated GPU allocation. The difference is having your own lane vs fighting traffic.

War story: Our customer service bot went down during a product launch because we hit rate limits on standard tier. Took down the entire support queue for 3 hours. Cost us more than a year of enterprise pricing in refunds and angry customers who couldn't get help during our biggest launch.
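If you're stuck on standard tier for now, the usual way to soften 429s is retrying with exponential backoff and jitter. Here's a minimal sketch of that idea. `RateLimitError` and `call_with_backoff` are hypothetical names, not part of any SDK; swap in whatever exception your client raises on HTTP 429.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for whatever your client raises on HTTP 429."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep):
    """Call fn(), retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # Cap the exponential delay, then pick a random point below it
            # so that many clients don't all retry at the same instant.
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, delay))
```

Backoff only spreads the pain out. It doesn't create capacity, so a queue of angry customers can still pile up behind it during a launch.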

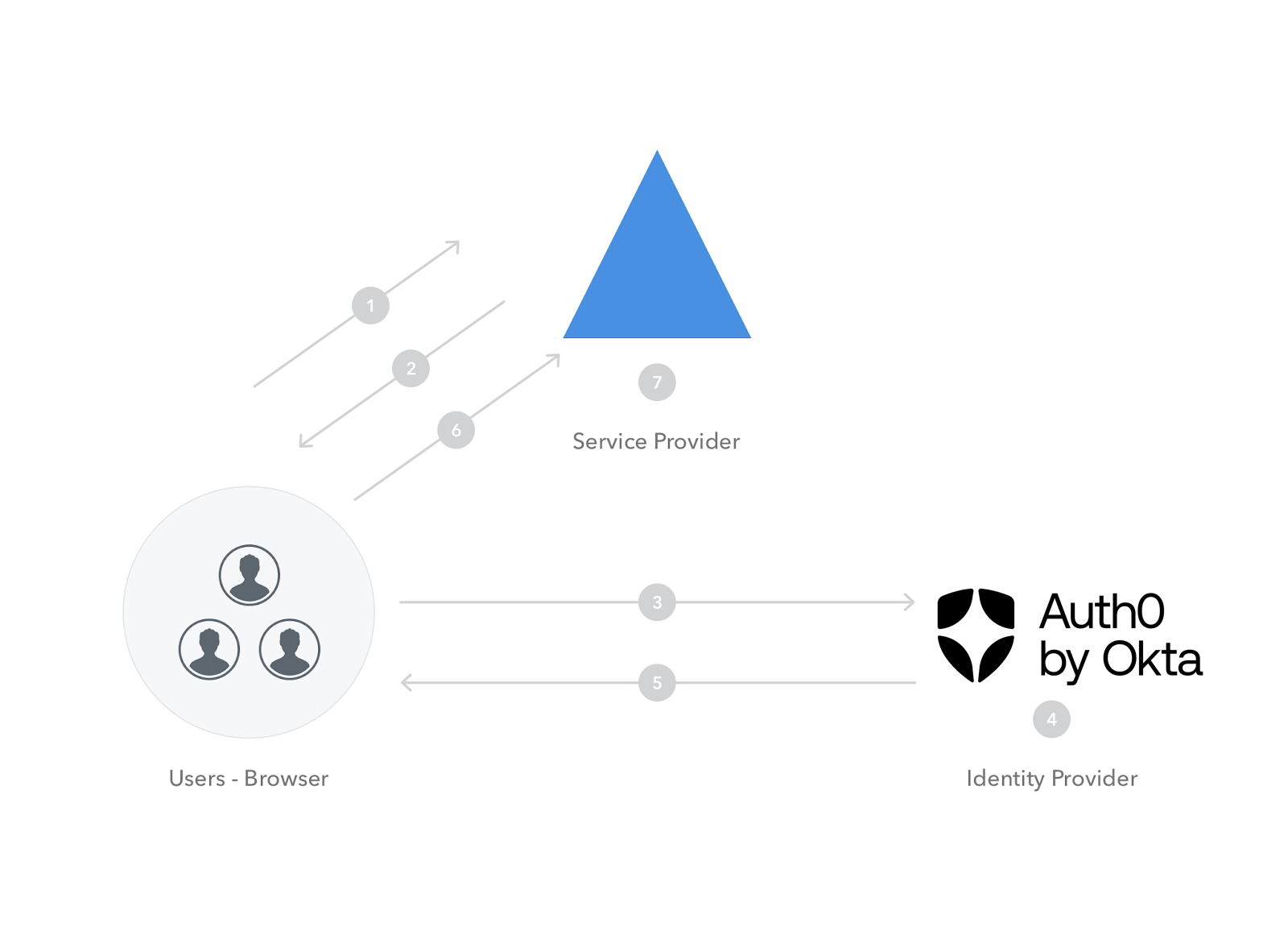

SSO Integration That Doesn't Suck

Enterprise gives you SAML SSO that actually works with your identity provider. Standard API? You're managing API keys like it's 2015. I've seen too many leaked keys in Slack channels and git commits - nothing kills a Friday afternoon like rotating compromised API keys.
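Until SSO is in place, a cheap safety net is scanning text (commits, chat exports, config files) for anything that looks like a key. A rough sketch follows. The regex is an assumption: it treats any `sk-` prefix followed by 20 or more URL-safe characters as a key, and `find_leaked_keys` is a made-up helper name, so tune both to the key formats you actually issue.

```python
import re

# Assumption: keys start with "sk-" followed by 20+ URL-safe characters.
# Adjust the pattern to the key formats you actually issue.
KEY_PATTERN = re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")


def find_leaked_keys(text):
    """Return (line_number, redacted_key) for every key-like string in text."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in KEY_PATTERN.finditer(line):
            key = match.group()
            # Redact the middle so the report itself doesn't leak the key.
            hits.append((line_no, key[:6] + "..." + key[-4:]))
    return hits
```

Run something like this in a pre-commit hook and the Friday afternoon rotation at least happens before the key hits the remote.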

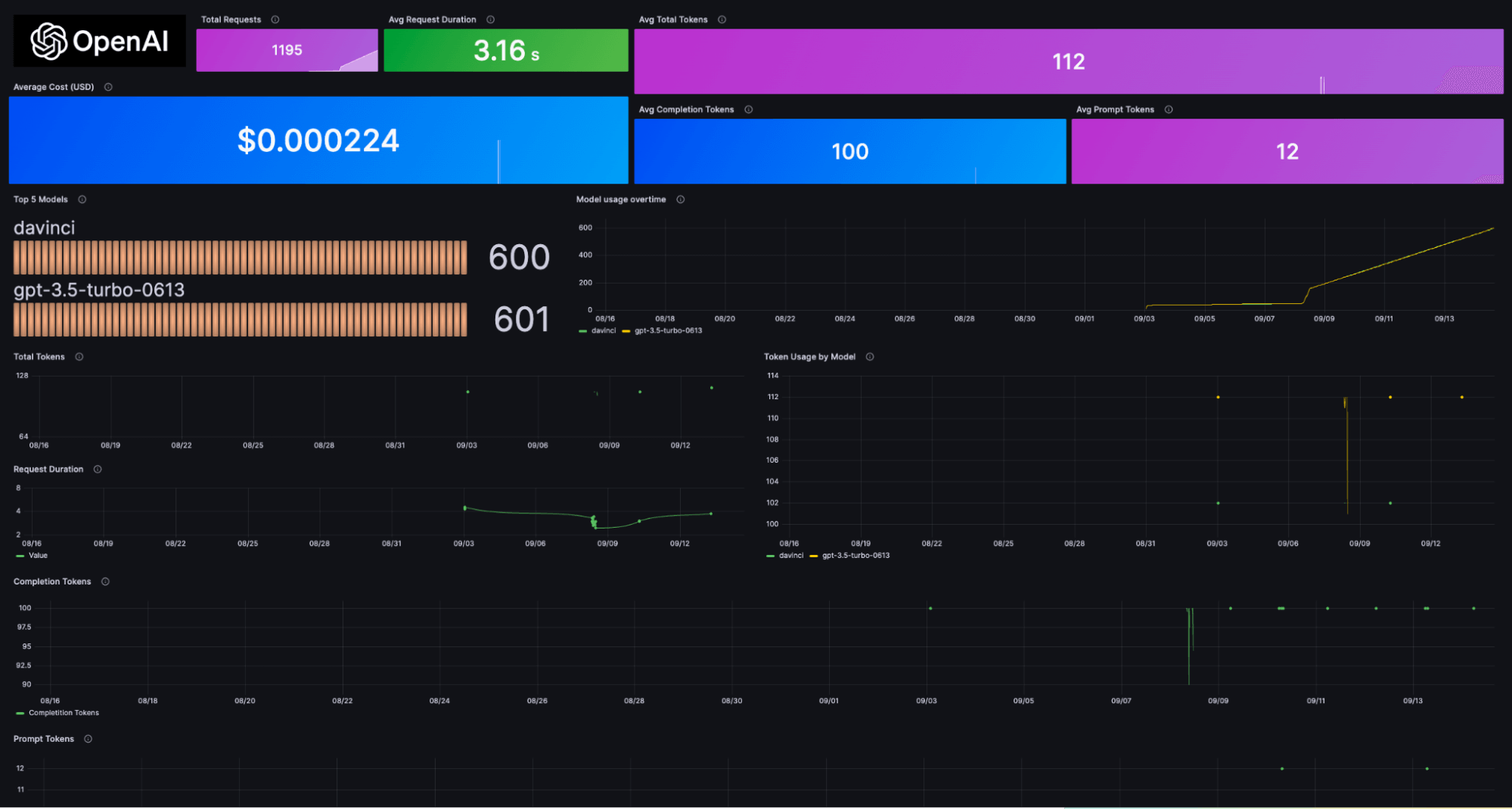

The admin dashboard shows which specific users are burning through tokens, not just aggregate usage. Useful when someone in marketing decides to generate 10,000 product descriptions without telling anyone and you suddenly hit your monthly quota on day 3.
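If you don't have that dashboard, you can approximate per-user attribution from your own request logs. The sketch below assumes you already log a `(user_id, tokens)` pair per request. Both function names and the 50% alert threshold are illustrative choices, not anything OpenAI provides.

```python
from collections import Counter


def tokens_by_user(records):
    """Sum tokens per user from an iterable of (user_id, tokens) records."""
    totals = Counter()
    for user_id, tokens in records:
        totals[user_id] += tokens
    return totals


def heavy_users(totals, monthly_quota, share=0.5):
    """Users whose usage is at least `share` of the monthly quota, biggest first."""
    threshold = monthly_quota * share
    return [(user, used) for user, used in totals.most_common()
            if used >= threshold]
```

For example, with a hypothetical 1M-token monthly quota:

```python
records = [("marketing-bot", 600_000), ("alice", 50_000),
           ("marketing-bot", 300_000), ("bob", 20_000)]
print(heavy_users(tokens_by_user(records), monthly_quota=1_000_000))
# [('marketing-bot', 900000)]
```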

The Support Actually Supports

Standard API support: Submit a ticket, get a response in 3-5 business days from someone reading scripts. Enterprise: Phone numbers that humans answer. Had a critical issue with GPT-4 responses getting stuck in infinite loops - enterprise support had our custom deployment patched within 4 hours.

Pro tip: The enterprise Slack channel with OpenAI engineers is worth the price alone when you're debugging weird model behavior at 3am. Nothing beats being able to ping an actual human instead of screaming into the void of their support portal.

The bottom line: Enterprise pricing exists because the standard API will eventually let you down when it matters most. It costs roughly 10x more but eliminates the randomness that kills production systems. Whether that's worth it depends on how much AI downtime your business can afford during peak usage - and how good you are at explaining random outages to your CEO.
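To put a number on "can afford", a back-of-the-envelope break-even helps: how many hours of avoided downtime per year cover the enterprise premium? The function name and every figure in the example are placeholders for illustration. Plug in your own spend and your own cost of an outage hour.

```python
def breakeven_outage_hours(enterprise_annual, standard_annual, cost_per_outage_hour):
    """Hours of avoided downtime per year at which the enterprise premium pays for itself."""
    if cost_per_outage_hour <= 0:
        raise ValueError("cost_per_outage_hour must be positive")
    premium = enterprise_annual - standard_annual
    # If enterprise is somehow cheaper, it pays for itself immediately.
    return max(premium, 0) / cost_per_outage_hour


# Hypothetical inputs: $5k/year on standard, a $50k enterprise commitment (10x),
# and $15k lost per hour of outage in refunds and churn.
print(breakeven_outage_hours(50_000, 5_000, 15_000))  # 3.0
```

With those made-up inputs, a single 3-hour outage a year is enough to break even.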

Next step: The comparison table below breaks down exactly what your money buys versus standard API - spoiler alert, it's mostly peace of mind and someone to blame when things break.