Our first RAG system took down production twice in the first week. The second time was during a customer demo. I learned some hard lessons about what actually matters when you're not dealing with clean demo data.

The Three Things That Will Ruin Your Day

Vector Database Hell

- I started with Pinecone because everyone said it was "production ready." It worked great until we hit 50k concurrent users during a product launch. Pinecone queries started timing out randomly, their support told us to "implement retries," and our search quality went to shit. Switched to pgvector running on dedicated hardware - yeah it's more work, but at least I can actually debug it when things break. Weaviate and Qdrant have similar scaling issues but better error reporting.

Claude Bills That Make You Cry

- Claude 3.5 Sonnet costs $15 per million output tokens. Sounds reasonable until your chatbot goes haywire and racks up $2,400 in three hours because some user figured out how to make it write novels. Prompt caching can cut the cost of cached input tokens by up to 90%, but a cache miss fails silently and you don't find out until your bill arrives. Anthropic's pricing page helps estimate costs but doesn't account for retries or runaway queries.
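
For scale, $2,400 at $15 per million output tokens is 160 million output tokens. A rolling spend cap would have tripped long before that. The sketch below is one way to do it, not what we actually ran: `SpendGuard`, its one-hour window, and the dollar limit are all hypothetical names and numbers, and the price constant is just the figure quoted above.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

OUTPUT_USD_PER_MTOK = 15.00  # output price quoted above; check current pricing


@dataclass
class SpendGuard:
    """Rolling-window cap on output-token spend (hypothetical helper)."""
    limit_usd: float
    window_s: float = 3600.0
    _events: List[Tuple[float, float]] = field(default_factory=list)

    def record(self, output_tokens: int, now: Optional[float] = None) -> None:
        """Log the cost of one completed response."""
        now = time.monotonic() if now is None else now
        usd = output_tokens / 1_000_000 * OUTPUT_USD_PER_MTOK
        self._events.append((now, usd))

    def spent(self, now: Optional[float] = None) -> float:
        """Total spend inside the window; older events are dropped."""
        now = time.monotonic() if now is None else now
        cutoff = now - self.window_s
        self._events = [(t, usd) for t, usd in self._events if t >= cutoff]
        return sum(usd for _, usd in self._events)

    def allow(self, now: Optional[float] = None) -> bool:
        """False once the window's spend reaches the limit."""
        return self.spent(now) < self.limit_usd
```

Check `allow()` before every model call and `record()` after it. Pair it with a hard per-request `max_tokens` so a single response can't write a novel either.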

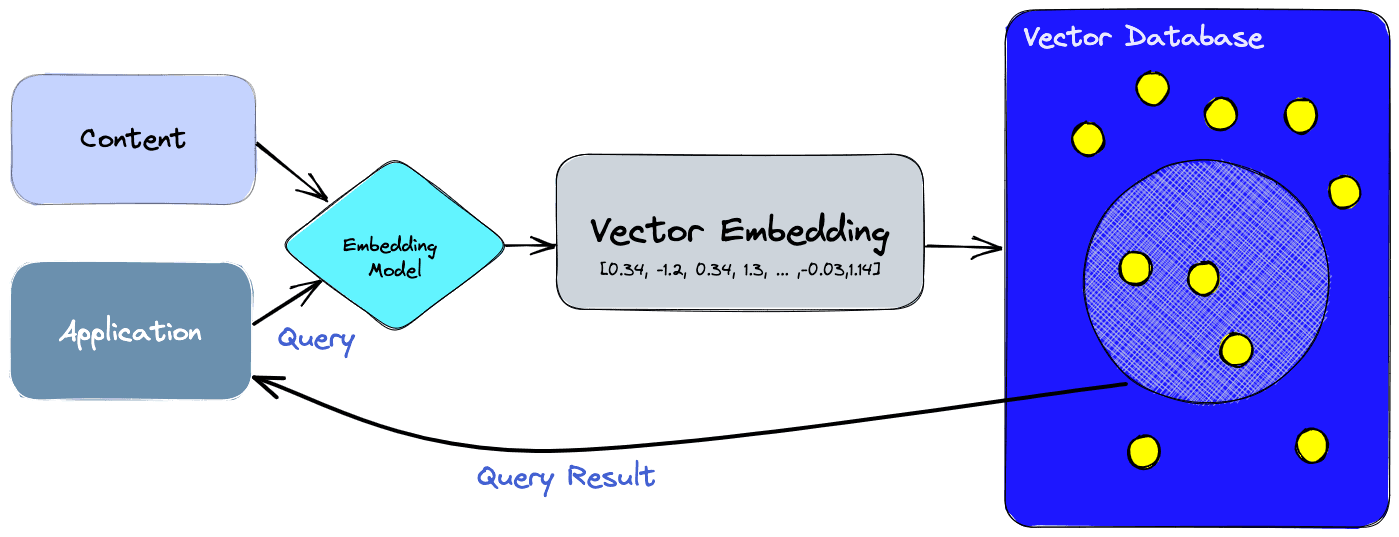

Embeddings That Randomly Suck

- Used OpenAI's text-embedding-3-large for eight months. Everything worked fine until one day search results turned to garbage. Turns out they updated the model and didn't tell anyone. All our existing embeddings were suddenly incompatible. Re-embedding 50 million chunks took three days and cost $8k. Sentence Transformers and Cohere embeddings have similar version compatibility issues.
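
A cheap way to catch this early is a canary check: keep the stored vectors for a handful of fixed sentences, re-embed them on a schedule, and alert when the fresh vectors stop matching. This is a minimal sketch under assumptions: `detect_drift`, the `embed` callable, and the 0.999 threshold are hypothetical, and `embed` stands in for whatever client you actually call.

```python
import math
from typing import Callable, Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 for mismatched sizes or zero vectors."""
    if len(a) != len(b):
        return 0.0
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def detect_drift(
    embed: Callable[[str], Sequence[float]],
    canaries: Dict[str, Sequence[float]],
    threshold: float = 0.999,  # assumed tolerance; tune for your model
) -> List[str]:
    """Return the canary texts whose fresh embedding no longer matches."""
    return [
        text
        for text, stored in canaries.items()
        if cosine(embed(text), stored) < threshold
    ]
```

An empty list means the model still produces the vectors your index was built with. Anything else means new queries are being compared against old-model embeddings, and it's time to pin a version or plan the re-embed.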

The Stuff That Actually Breaks In Production

Chunking Is Where Dreams Go To Die

Your perfect 512-token chunks work great on clean markdown. Then you hit a PDF with tables, graphs, and footnotes. I spent two weeks debugging why our legal document system kept missing contract terms. Turns out fixed chunking was splitting table headers from their data. The AI would confidently answer questions about contract limits while looking at orphaned table cells with no context.

Solution that actually works: Semantic chunking using sentence embeddings to group related content. Takes 3x longer to process but stops breaking on complex documents. LlamaIndex and Unstructured both ship chunking strategies you can use as alternatives. Still fails on poorly formatted PDFs - garbage in, garbage out. Adobe's PDF extraction API works better for complex layouts but costs more.
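
The core idea fits in a few lines: embed each sentence, and start a new chunk whenever the next sentence stops looking like the previous one. The sketch below is illustrative, not our production code. `bow_embed` is a toy bag-of-words stand-in so the example runs without a model, and the threshold and `max_sentences` values are assumptions; in a real system you would pass in a sentence-embedding function and a matching similarity function.

```python
import math
import re
from collections import Counter
from typing import Callable, Dict, List


def bow_embed(sentence: str) -> Dict[str, int]:
    """Toy stand-in for a sentence embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def sparse_cosine(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b.get(word, 0) for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_chunks(
    sentences: List[str],
    embed: Callable = bow_embed,
    similarity: Callable = sparse_cosine,
    threshold: float = 0.15,   # assumed; tune on your own documents
    max_sentences: int = 8,    # assumed upper bound on chunk size
) -> List[str]:
    """Group adjacent sentences until similarity drops or the chunk is full."""
    chunks: List[str] = []
    current: List[str] = []
    prev_vec = None
    for sentence in sentences:
        vec = embed(sentence)
        topic_shift = prev_vec is not None and similarity(prev_vec, vec) < threshold
        if current and (topic_shift or len(current) >= max_sentences):
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
        prev_vec = vec
    if current:
        chunks.append(" ".join(current))
    return chunks
```

Fed two sentences about a liability cap followed by two about invoices, this yields two chunks instead of splitting mid-topic. It does nothing for tables on its own: a table still needs to be extracted as one unit before it reaches the chunker.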

Network Timeouts Will Kill You

Here's why your users hate waiting - each query hits multiple services and they all love to be slow when you need them most:

- Embedding API: Usually fine, sometimes takes 2+ seconds when OpenAI's having issues

- Vector search: Fast when cache hits, slow as hell when it misses and has to scan millions of vectors

- Claude API: Anywhere from 2-15 seconds depending on how much it wants to think

Chain them together and you're fucked - 8-12 seconds per query, sometimes way longer when everything decides to be slow at once. Users get impatient and bail. I learned this when our analytics showed 60% query abandonment during peak hours.

Fix that actually works: Kill anything that takes too long. Embeddings taking >500ms? Use cached results. Vector search timing out? Return approximate matches. Claude being Claude? Return shorter responses. Better to give users something than nothing - learned this during our Product Hunt launch when everything went to hell.

Redis saves your ass for embedding caching, and proper Nginx timeouts prevent the whole system from dying when one service shits the bed.
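
The "kill anything that takes too long" rule maps directly onto `asyncio.wait_for`. Here's a minimal sketch, assuming async clients: `with_deadline` and `slow_embedding` are hypothetical names, and the fallback is whatever degraded answer you have on hand (a cached embedding, approximate matches, a shorter response).

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def with_deadline(
    primary: Callable[[], Awaitable[T]],
    fallback: Callable[[], T],
    timeout_s: float,
) -> T:
    """Run primary(); if it misses the deadline, cancel it and use fallback()."""
    try:
        return await asyncio.wait_for(primary(), timeout=timeout_s)
    except asyncio.TimeoutError:
        return fallback()


async def slow_embedding(delay_s: float) -> List[float]:
    """Stand-in for a real embedding call that is having a bad day."""
    await asyncio.sleep(delay_s)
    return [0.1, 0.2, 0.3]
```

With a 500ms budget, a call like `await with_deadline(lambda: slow_embedding(2.0), lambda: cached_vector, 0.5)` returns the cached vector instead of making the user wait; `cached_vector` here is whatever you pulled from Redis earlier.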

Memory Leaks in Production

LangChain leaks memory like crazy when processing large documents. We were restarting pods every 4 hours because memory usage would hit 8GB and everything would crawl. Turns out LangChain's PDF loaders keep entire documents in memory even after processing. PyPDF2 and pdfplumber have similar issues.

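Switched to streaming document processing and manual garbage collection. Ugly but works. Our pods now run for weeks without issues. Profilers help here: py-spy shows where a live process is spending its time, and a memory profiler such as tracemalloc or memray helps identify specific leaks.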
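
Roughly, "streaming plus manual garbage collection" means never holding more than one batch of pages at a time. The sketch below assumes your loader can yield pages lazily; `process_streaming`, `handle_batch`, and the batch size are hypothetical, not a LangChain API.

```python
import gc
from typing import Callable, Iterable, List


def process_streaming(
    pages: Iterable[str],
    handle_batch: Callable[[List[str]], None],
    batch_size: int = 32,  # assumed; size it to your memory budget
) -> int:
    """Feed pages to handle_batch in small batches; return the page count."""
    batch: List[str] = []
    total = 0
    for page in pages:
        batch.append(page)
        if len(batch) >= batch_size:
            handle_batch(batch)
            total += len(batch)
            batch = []    # drop our reference to the processed pages
            gc.collect()  # the ugly part: force a collection between batches
    if batch:             # flush the final partial batch
        handle_batch(batch)
        total += len(batch)
    return total
```

This only helps if `pages` is a real generator and `handle_batch` doesn't stash the pages somewhere. If either side keeps references, memory climbs exactly like before.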

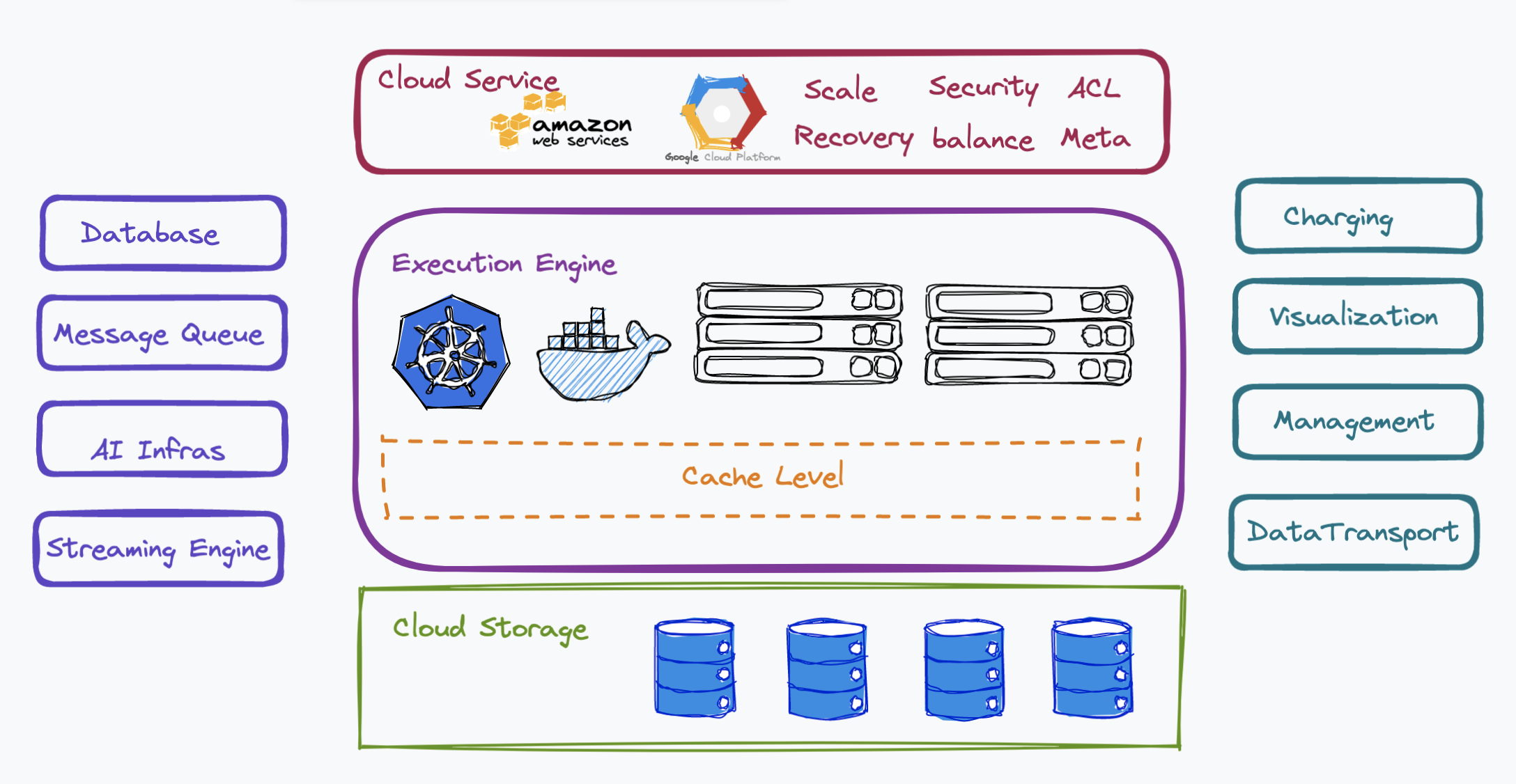

What Production RAG Actually Looks Like

Forget the clean architecture diagrams. Real production RAG is held together with duct tape and circuit breakers.

We run three embedding models in parallel because they all have different failure modes. If one fails, we fall back to the others. Response quality drops by maybe 10% but the system stays up. OpenAI, Cohere, and HuggingFace Transformers all have different rate limits and failure patterns.
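
The fallback itself is just an ordered list of providers tried in turn. A minimal sketch, with hypothetical names throughout: `embed_with_fallback` and the provider callables are placeholders for your real clients. One thing to watch: vectors from different models live in different spaces, so the function returns the provider name and the caller has to search the index built with that same model.

```python
from typing import Callable, List, Sequence, Tuple

Provider = Tuple[str, Callable[[str], List[float]]]


def embed_with_fallback(text: str, providers: Sequence[Provider]) -> Tuple[str, List[float]]:
    """Try each provider in order; return (provider_name, vector) from the first success."""
    errors: List[str] = []
    for name, embed in providers:
        try:
            return name, embed(text)
        except Exception as exc:  # rate limit, timeout, outage...
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all embedding providers failed: " + "; ".join(errors))
```

Log the name that comes back. A sudden shift from the first provider to the third is usually the earliest sign that something upstream is degrading.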

Our vector database has warm standby replicas because Pinecone would randomly go read-only during maintenance windows they didn't announce. Failover takes 30 seconds but beats being down for hours. PostgreSQL with pgvector offers better control over maintenance windows.
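
A circuit breaker is what keeps failover from turning into a retry storm: after a few consecutive failures, stop calling the primary for a while and go straight to the standby. This is a bare-bones sketch rather than a production implementation. The class, its thresholds, and the 30-second reset are assumptions, and `primary` and `standby` are whatever callables wrap your two database clients.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Route calls to a standby after repeated primary failures."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def is_open(self) -> bool:
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at >= self.reset_after_s:
            # Half-open: allow one trial call; a single failure re-opens.
            self.opened_at = None
            self.failures = self.max_failures - 1
            return False
        return True

    def call(self, primary: Callable[[], T], standby: Callable[[], T]) -> T:
        if self.is_open():
            return standby()  # skip the primary entirely while open
        try:
            result = primary()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return standby()
        self.failures = 0     # any success closes the breaker
        return result
```

Usage is one line per query, something like `breaker.call(lambda: search(primary_db, q), lambda: search(replica_db, q))`, where `search` and the two handles are your own.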

Claude prompts are versioned and A/B tested because every prompt change can break edge cases you never thought of. We've rolled back prompts at 2am more times than I care to count. LangSmith helps track prompt performance across versions.

The monitoring dashboard has 47 different metrics because everything can fail in a different way. P95 latency, embedding error rates, vector similarity score distributions, Claude token usage by hour. If one goes weird, something's about to break. Grafana, Datadog, and New Relic can all chart and alert on these.
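
P95 is worth understanding rather than just charting: it's the latency that 95% of requests come in under, so it shows the slow tail that an average hides. Here's a small nearest-rank sketch; the function name and the sample numbers are made up for illustration, and your metrics backend will normally compute this for you.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical per-query latencies in milliseconds.
latencies_ms = [120, 80, 3000, 95]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
```

In that made-up sample the median looks healthy while the P95 lands on the one three-second outlier, which is exactly the query your user abandoned.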

This isn't the elegant system I planned. It's the system that survives contact with real users, real data, and real deadlines.