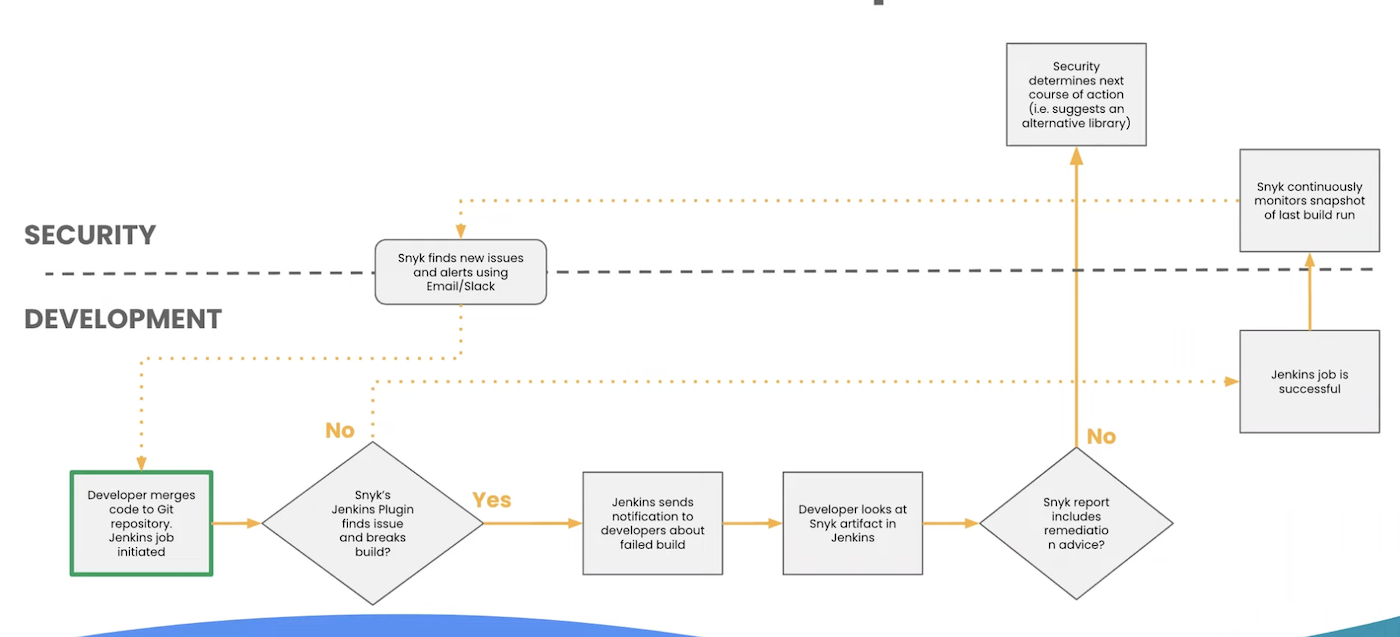

I've debugged Snyk authentication failures more times than I care to remember, and it never gets less painful. When Snyk can't authenticate to your registry, your entire deployment pipeline goes to shit. Here's what actually breaks and why.

The Error Messages That Tell You Nothing

When Snyk auth fails, you'll see these lovely error messages that are about as helpful as a chocolate teapot:

- `Error: authentication credentials not recognized` - This is Docker Hub's way of saying "something is wrong" without being remotely helpful about what
- `Failed to get scan results` with HTTP 401 - Could be expired tokens, wrong creds, or the registry being an ass
- `TLS handshake timeout` - Usually your corporate firewall being difficult, not auth at all
- `Manifest not found` - Either the image doesn't exist or your tokens don't have the right permissions (learned that the hard way)
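If you want a first-pass triage for those four errors, something like the sketch below can save a few minutes of squinting at logs. The table and the `triage` helper are hypothetical names of my own, and the hints are just the rules of thumb from the list above, not anything Snyk publishes.

```python
# Hypothetical triage table: substring to look for -> likely cause.
# The hints restate the rules of thumb above; treat them as starting points.
LIKELY_CAUSES = [
    ("authentication credentials not recognized",
     "registry rejected the credentials (check the Docker Hub user/token)"),
    ("401", "expired token or wrong credentials"),
    ("tls handshake timeout",
     "network path (firewall or proxy), probably not auth"),
    ("manifest not found",
     "image missing or token lacks pull permission"),
]


def triage(error_text: str) -> str:
    """Return the first matching hint for a raw error line, or 'unknown'."""
    lowered = error_text.lower()
    for needle, hint in LIKELY_CAUSES:
        if needle in lowered:
            return hint
    return "unknown"
```

Feed it the raw line from your CI log, for example `triage("Failed to get scan results with HTTP 401")`, and use the hint to decide where to look first.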

Snyk's error catalog says these failures happen for "various reasons", which is corporate speak for "we don't know either, good luck."

Registry-Specific Ways Things Break

Every registry vendor has found creative ways to make authentication painful:

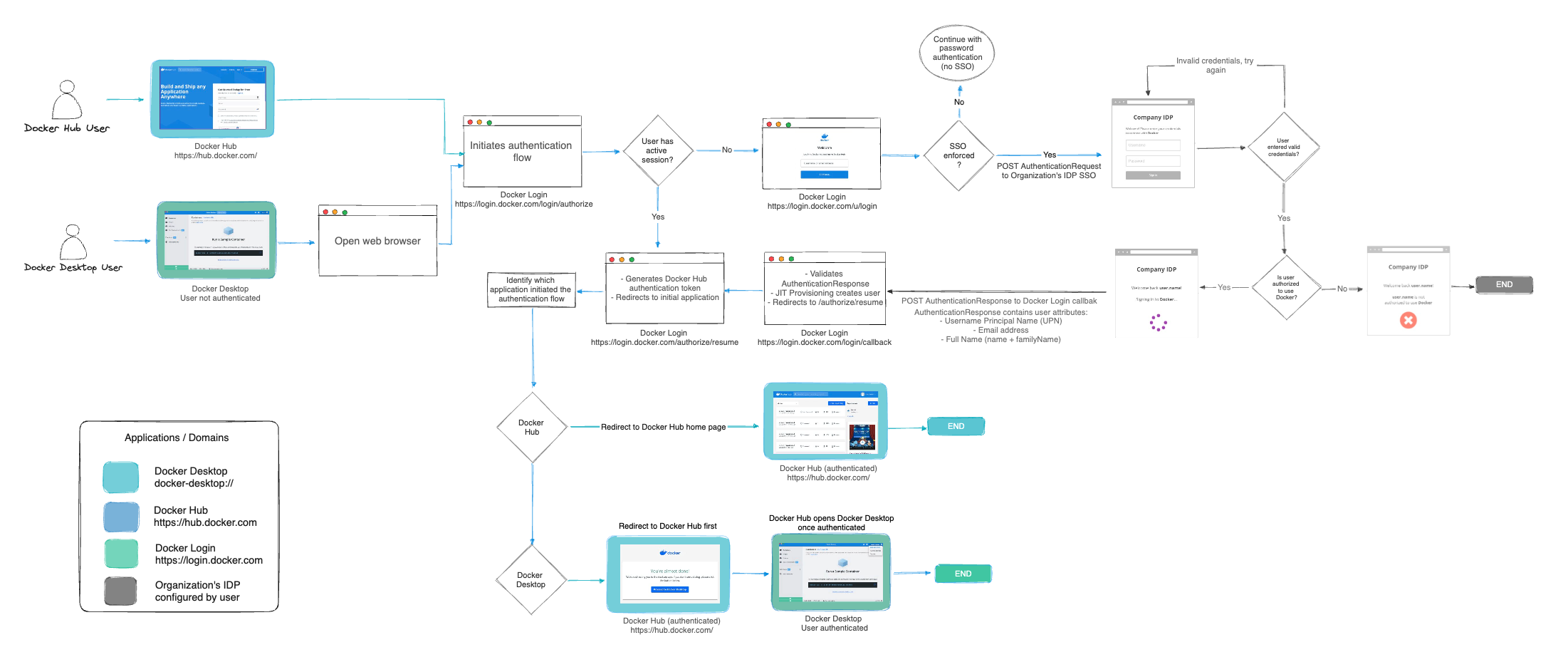

Docker Hub randomly stops working and nobody knows why. One day your pipeline works fine, the next day it's throwing authentication errors. Docker's access token docs say to use access tokens instead of passwords, which works until Docker decides to change something without telling anyone. Check their status page when this happens - their rate limiting also causes auth-looking failures that'll waste hours of your time.

AWS ECR authorization tokens expire after 12 hours because AWS loves making your life miserable. Set up a cron job to refresh them before they lapse, or prepare to get paged at 3am when your scans fail. Speaking of AWS being difficult, the integration guide doesn't mention that, in my experience, ECR in us-east-1 behaves differently from other regions for some AWS-specific reason nobody talks about. You'll hit weird throttling issues that the AWS documentation pretends don't exist.

GitHub Container Registry requires personal access tokens with specific scopes, and if you get the scopes wrong, it fails silently. GitHub's integration docs are actually decent for once, which is genuinely surprising. Their token scope bullshit is documented properly, unlike most of their other features.
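Since wrong scopes fail silently, it helps to check them yourself. The sketch below assumes a classic personal access token: for those, the GitHub REST API reports granted scopes in the `X-OAuth-Scopes` response header. The `read:packages` requirement is my assumption for pull-only scanning, and the helper names are made up for illustration. It does not model scopes that are implied by broader ones.

```python
import urllib.request

# Assumption: pulling images for a scan only needs read access to packages.
REQUIRED_SCOPES = frozenset({"read:packages"})


def missing_scopes(scopes_header: str, required=REQUIRED_SCOPES) -> set:
    """Compare a comma-separated X-OAuth-Scopes value with the required set."""
    granted = {s.strip() for s in scopes_header.split(",") if s.strip()}
    return set(required) - granted


def fetch_scopes_header(token: str) -> str:
    """Ask the GitHub API which scopes a classic token carries."""
    req = urllib.request.Request(
        "https://api.github.com/user",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        # Fine-grained tokens do not return this header; treat that as empty.
        return resp.headers.get("X-OAuth-Scopes", "")
```

Calling `missing_scopes(fetch_scopes_header(token))` before you paste the token into Snyk tells you up front whether the scope is missing, instead of finding out from a silent failure.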

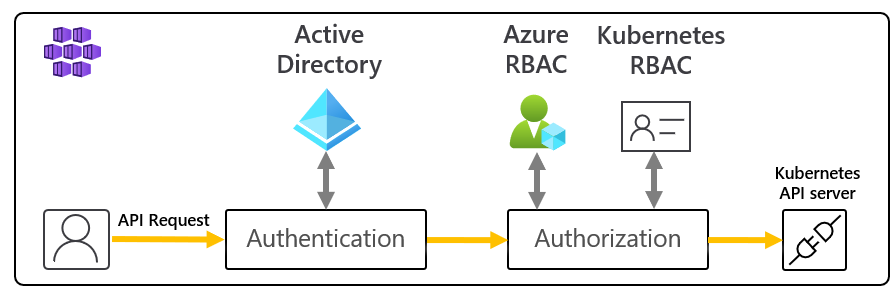

Private registries are where dreams go to die. I've seen grown engineers cry over certificate chains and custom auth headers. Docker's registry API spec explains how authentication should work in theory, while Harbor's docs cover enterprise setups if you enjoy pain. Nexus Repository and JFrog Artifactory docs are equally terrible for different reasons.

What Really Breaks (The Stuff They Don't Tell You)

After fixing this shit dozens of times, here's what actually causes auth failures:

Token expiration is the #1 killer. AWS ECR tokens expire every 12 hours, Docker Hub tokens can expire or get revoked without warning, and nobody tells you until your CI fails. Write a script to check token validity or you'll hate your life.
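Here's roughly what that script could look like for ECR. It's a minimal sketch: `needs_refresh` is a plain helper of my own, the one-hour safety margin is an arbitrary assumption, and `ecr_token_expiry` relies on the third-party `boto3` package and working AWS credentials.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def needs_refresh(expires_at: datetime,
                  now: Optional[datetime] = None,
                  margin: timedelta = timedelta(hours=1)) -> bool:
    """True if the token is expired or will expire within the margin."""
    now = now or datetime.now(timezone.utc)
    return expires_at - now <= margin


def ecr_token_expiry(region: str) -> datetime:
    """Fetch a fresh ECR authorization token and return its expiry time."""
    import boto3  # third-party; imported lazily so the helper above stays stdlib-only

    client = boto3.client("ecr", region_name=region)
    data = client.get_authorization_token()["authorizationData"][0]
    return data["expiresAt"]  # timezone-aware datetime
```

Run the check from cron more often than the margin (say every 30 minutes) and refresh whenever `needs_refresh` returns true, so a single missed run doesn't leave you with a dead token.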

IAM permissions are like Russian roulette. You need exactly the right permissions or nothing works. That `ecr:BatchCheckLayerAvailability` permission sounds optional but it's not - learned that when I spent 4 hours debugging "access denied" errors.
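For reference, this is a sketch of a pull-only policy that includes the permission above. The four actions are the usual ECR image-pull set; whether Snyk's integration needs more (listing or describing repositories, for instance) is something to confirm against its docs. The `pull_policy` helper is hypothetical, and you supply your own repository ARN.

```python
import json

# The usual actions needed to pull an image from ECR.
LAYER_ACTIONS = [
    "ecr:BatchCheckLayerAvailability",
    "ecr:GetDownloadUrlForLayer",
    "ecr:BatchGetImage",
]


def pull_policy(repository_arn: str) -> dict:
    """Build a minimal pull-only IAM policy document for one repository."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # GetAuthorizationToken has no resource-level scoping,
                # so it has to be granted on "*".
                "Effect": "Allow",
                "Action": ["ecr:GetAuthorizationToken"],
                "Resource": "*",
            },
            {
                "Effect": "Allow",
                "Action": LAYER_ACTIONS,
                "Resource": repository_arn,
            },
        ],
    }


def policy_json(repository_arn: str) -> str:
    """Render the policy as JSON ready to paste into IAM."""
    return json.dumps(pull_policy(repository_arn), indent=2)
```

Splitting the statement in two keeps the repository-scoped actions locked to one ARN while still allowing the token call, which is the part people tend to get wrong.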

Network bullshit masquerading as auth problems. Your corporate firewall, proxy configs, and DNS resolution can all cause what looks like authentication failures. I once spent a day debugging "authentication failed" only to find out the registry hostname wasn't resolving correctly. Pro tip I learned the hard way: DNS is always the problem - it's basically the first law of devops troubleshooting.
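Ruling DNS out takes about ten lines, so do it before touching any credentials. This is a sketch using only the standard library. `resolve_host` is a hypothetical helper, and the injectable `resolver` argument exists only so you can test it without a network.

```python
import socket


def resolve_host(host: str, port: int = 443, resolver=socket.getaddrinfo) -> list:
    """Return the sorted unique IPs a hostname resolves to, or [] on failure."""
    try:
        infos = resolver(host, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # Resolution failed: this is a DNS problem, not an auth problem.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})
```

Run it from the same machine or container that runs the scan. An empty list, or an address you don't recognize, means you're chasing a network problem.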

When Everything Goes Wrong

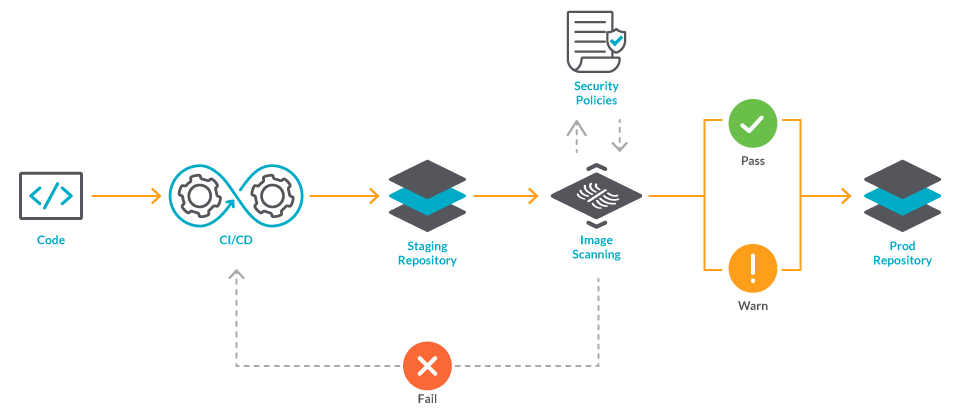

When auth breaks, your entire pipeline dies. CI fails at the security scan step, deployments get blocked, and developers start threatening to disable security checks entirely. I've been there - it's 3am, production deployment is blocked, and Snyk is throwing cryptic authentication errors.

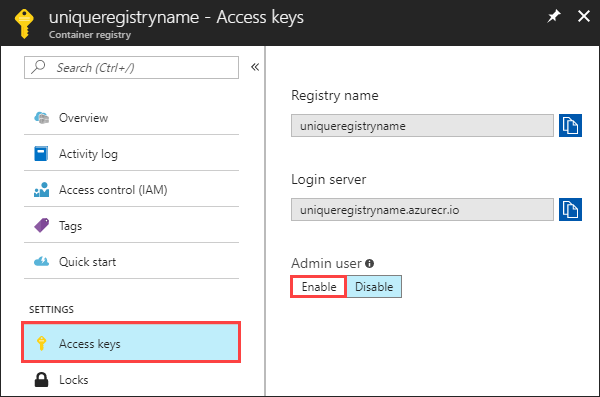

Kubernetes integration adds another layer of complexity because now you need image pull secrets, service accounts, and RBAC configs that all have to be perfect or nothing works. When this inevitably breaks (and it will), you'll be debugging RBAC permissions at 2am wondering why you didn't just become a carpenter.
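A malformed image pull secret is one of the easier pieces to get wrong, so here is a sketch that builds one. The `kubernetes.io/dockerconfigjson` type and the `.dockerconfigjson` key are the standard Kubernetes format. The function names, and the idea of generating the manifest in Python rather than with `kubectl create secret docker-registry`, are just for illustration.

```python
import base64
import json


def docker_config_json(registry: str, username: str, password: str) -> str:
    """Build the Docker config payload a pull secret carries."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    return json.dumps({
        "auths": {
            registry: {"username": username, "password": password, "auth": auth}
        }
    })


def pull_secret_manifest(name: str, namespace: str, registry: str,
                         username: str, password: str) -> dict:
    """Build a Secret manifest of type kubernetes.io/dockerconfigjson."""
    payload = docker_config_json(registry, username, password)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        # Secret data values must be base64-encoded.
        "data": {".dockerconfigjson": base64.b64encode(payload.encode()).decode()},
    }
```

Decoding the `data` field of a secret that is already in your cluster and comparing it against this shape is a quick way to spot a wrong registry hostname or a stale password.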

How to Actually Debug This Shit

First rule of debugging auth failures: actually read the error message. I know it's usually garbage, but occasionally it contains a useful hint buried in the bullshit.

Test your credentials outside of Snyk first. If `docker login` fails with the same creds, the problem isn't Snyk-specific. If it works, then Snyk is being special.
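If you want that check in a script rather than typed by hand, a sketch like this works. It assumes the `docker` CLI is on your PATH. `--username` and `--password-stdin` are real `docker login` flags, and the helper names are mine.

```python
import subprocess


def docker_login_cmd(registry: str, username: str) -> list:
    """Build the docker login command; the password is fed via stdin."""
    return ["docker", "login", "--username", username, "--password-stdin", registry]


def try_login(registry: str, username: str, password: str) -> bool:
    """Return True if docker login succeeds with these credentials."""
    result = subprocess.run(
        docker_login_cmd(registry, username),
        input=password.encode(),  # keeps the secret out of the process list
        capture_output=True,
    )
    return result.returncode == 0
```

Passing the password over stdin instead of `--password` also keeps it out of your shell history and CI logs.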

Check if you can reach the registry at all using `curl -I https://registry-host/v2/`. If this fails to connect, you have network problems, not auth problems. Any HTTP response, even a 401, means the network path is fine.
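The same probe in Python, as a sketch: it sends a GET rather than the HEAD request `curl -I` makes, and it reads the status codes the way the registry v2 API defines them for the `/v2/` endpoint. Function names are hypothetical.

```python
import urllib.error
import urllib.request


def interpret_v2_status(status: int) -> str:
    """Translate the HTTP status of GET /v2/ into a plain-English verdict."""
    if status == 200:
        return "reachable: v2 API answered without asking for auth"
    if status == 401:
        return "reachable: v2 API wants credentials (this is normal)"
    if status == 404:
        return "host answered but does not serve the v2 registry API here"
    return f"unexpected status {status}"


def probe_registry(host: str, timeout: float = 10.0) -> str:
    """Hit https://<host>/v2/ and separate network failures from HTTP answers."""
    url = f"https://{host}/v2/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return interpret_v2_status(resp.status)
    except urllib.error.HTTPError as err:
        # The server responded, so the network path works.
        return interpret_v2_status(err.code)
    except OSError as err:
        # DNS failure, refused connection, TLS error, or timeout.
        return f"network problem: {err}"
```

The point of the split is that a 401 counts as good news here: the registry is reachable and the failure really is about credentials.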

Time your failures. If they happen randomly, check token expiration. If they happen at the same time every day, you probably have a cron job or scheduled task interfering with something.
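To tell "random" from "same time every day" without eyeballing a week of CI history, you can bucket the failure timestamps by hour. This is a rough sketch: it assumes ISO 8601 timestamps without a trailing `Z`, and the 80% threshold is an arbitrary choice of mine.

```python
from collections import Counter
from datetime import datetime


def failures_by_hour(timestamps) -> Counter:
    """Count failures per hour of day from ISO 8601 timestamp strings."""
    return Counter(datetime.fromisoformat(ts).hour for ts in timestamps)


def looks_scheduled(timestamps, threshold: float = 0.8) -> bool:
    """True if most failures land in the same hour of the day."""
    counts = failures_by_hour(timestamps)
    if not counts:
        return False
    _, top_count = counts.most_common(1)[0]
    return top_count / sum(counts.values()) >= threshold
```

If `looks_scheduled` comes back true, go hunting for whatever runs at that hour. If the failures are spread out, token expiry is the better first guess.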

The Docker daemon troubleshooting guide covers the obvious stuff, while their networking troubleshooting docs might actually help when DNS is being a bastard. Their security best practices are worth reading if you want to prevent half these problems from happening in the first place. For Kubernetes setups, check the troubleshooting guide and network debugging docs.

Now that you understand what breaks and why, let's move on to the solutions that actually work.