Protocol Buffers (protobuf) is Google's answer to "JSON is too slow and too big." Google used it internally for years before open-sourcing it in 2008, and it's now a common choice for microservices that actually need to perform.

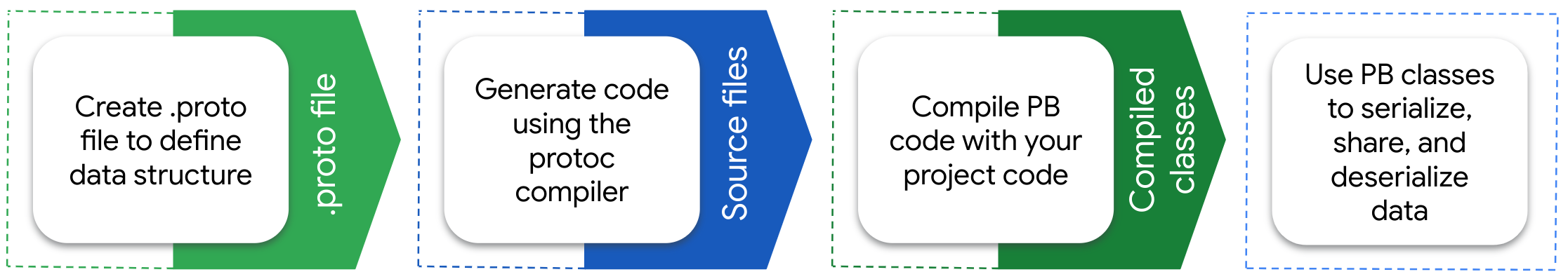

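The basic idea: you write a schema in a .proto file that looks like a simplified struct definition. Run it through the protoc compiler and it spits out classes for your language. Those classes serialize to a compact binary format that's usually much smaller than the equivalent JSON, because field names are replaced by small numeric tags and integers are packed into variable-length varints.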
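To see where the size savings come from, here's a minimal pure-Python sketch of the wire format for a hypothetical User message with two fields: id (field 1, an integer) and name (field 2, a string). In a real project you'd use the classes protoc generates instead of encoding anything by hand; this only shows what ends up on the wire. Each field is a tag, (field_number << 3) | wire_type, followed by either a varint or a length prefix plus raw bytes.

```python
import json

# Wire types from the protobuf encoding spec (only the two this sketch uses).
WIRE_VARINT = 0  # int32, int64, uint32, bool, enum, ...
WIRE_LEN = 2     # string, bytes, embedded messages, packed repeated fields


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte, low bits first)."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """A field's tag is itself a varint: field number in the high bits, wire type in the low 3."""
    return encode_varint((field_number << 3) | wire_type)


def encode_user(user_id: int, name: str) -> bytes:
    """Hand-encode the hypothetical User message: id = field 1 (varint), name = field 2 (length-delimited)."""
    name_bytes = name.encode("utf-8")
    return (
        encode_tag(1, WIRE_VARINT) + encode_varint(user_id)
        + encode_tag(2, WIRE_LEN) + encode_varint(len(name_bytes)) + name_bytes
    )


payload = encode_user(150, "alice")
as_json = json.dumps({"id": 150, "name": "alice"}, separators=(",", ":")).encode("utf-8")

print(payload.hex(" "))            # 08 96 01 12 05 61 6c 69 63 65
print(len(payload), len(as_json))  # 10 25
```

Field names never hit the wire; the schema maps numbers back to names on the other side. That's also why savings vary: a payload dominated by long strings shrinks much less than one full of small integers and repeated keys.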

The Real Benefits (and Why They Matter)

Schema changes don't break everything: You can add fields without breaking existing clients. Add a field to a JSON payload and any service with a strict deserializer can start rejecting it. With protobuf, old clients skip fields they don't know about and new clients use defaults for missing ones. Field numbers are forever, though: reuse field 5 for something new and old clients will decode the new data as the old field, and you'll spend a weekend fixing compatibility issues.
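That compatibility behavior falls straight out of the wire format. A parser that hits an unknown field number can still skip it, because the wire type says how to find the end of the value, and any field that never shows up keeps its default. Here's a minimal sketch of an old client decoding the same hypothetical User message (id = 1, name = 2); it handles only varint and length-delimited fields, whereas the real protobuf runtime handles every wire type for you.

```python
WIRE_VARINT = 0
WIRE_LEN = 2


def decode_varint(buf: bytes, pos: int):
    """Read a base-128 varint starting at pos; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def decode_user(buf: bytes) -> dict:
    """Decode the hypothetical User message {id = 1, name = 2} the way an *old* client would."""
    # proto3-style defaults: fields missing from the payload read as zero values.
    user = {"id": 0, "name": ""}
    pos = 0
    while pos < len(buf):
        key, pos = decode_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x7
        # The wire type alone tells us how many bytes the value occupies.
        if wire_type == WIRE_VARINT:
            value, pos = decode_varint(buf, pos)
        elif wire_type == WIRE_LEN:
            length, pos = decode_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")

        if field_number == 1:
            user["id"] = value
        elif field_number == 2:
            user["name"] = value.decode("utf-8")
        # Any other field number is unknown to this older schema: its bytes were
        # consumed above and the value is simply dropped.
    return user


# A newer sender added field 3 (an email string) that this client has never heard of.
newer_payload = bytes.fromhex("089601" "1205616c696365" "1a0d61406578616d706c652e636f6d")
# A sender that never sets name.
older_payload = bytes.fromhex("089601")

print(decode_user(newer_payload))  # {'id': 150, 'name': 'alice'}
print(decode_user(older_payload))  # {'id': 150, 'name': ''}
```

The decoder trusts field numbers completely. If a number is later reused for a different field, old clients map the new data onto the old field without complaint, which is why field numbers are forever.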

Actually typed data: JSON's "id": "123" vs "id": 123 bullshit doesn't happen with protobuf. If you define a field as int32, it's an int32. No guessing whether that user ID is a string or number this time.

Performance that matters: In my tests with microservice payloads, protobuf was consistently 2-3x faster to serialize and parse than JSON. Message sizes dropped by 30-40%. Your mileage will vary based on data structure, but the difference is real.

The Tradeoffs (Because Nothing's Free)

Debugging is painful: A binary format means you can't just curl an endpoint and read the response. You need the schema and tooling to decode it. I've spent hours debugging protobuf issues that would have been obvious with JSON.
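When all you have is the bytes, you can still recover structure, just not meaning: field numbers, wire types, and values, but no field names. protoc's --decode_raw option does this from the command line. Here's a minimal pure-Python sketch of the same idea; it handles only varint and length-delimited fields and leaves length-delimited values as raw bytes, because without the schema you can't tell a string from an embedded message.

```python
def read_varint(buf: bytes, pos: int):
    """Read a base-128 varint starting at pos; return (value, next_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def dump_raw(buf: bytes) -> list:
    """Schema-less dump: a list of (field_number, wire_type, value) tuples."""
    fields = []
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x7
        if wire_type == 0:    # varint: could be an int, bool, or enum; we can't tell which
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited: string, bytes, or nested message
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        fields.append((field_number, wire_type, value))
    return fields


# The hypothetical User message again: id = 150, name = "alice".
for field_number, wire_type, value in dump_raw(bytes.fromhex("0896011205616c696365")):
    print(field_number, wire_type, value)
# 1 0 150
# 2 2 b'alice'
```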

More complexity: Instead of just sending JSON, now you need schema files, code generation, version management. Great for microservices, overkill for your blog's REST API.

Learning curve: Field numbers, the reserved statement for retired field numbers and names, evolution rules. It takes a few days to get comfortable if you're used to "just send JSON."
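The evolution rules are mechanical enough to check automatically, and tools such as Buf's buf breaking command exist for exactly that. Here's a hypothetical sketch of the core idea: schemas are modeled as plain dicts from field number to (name, type), plus a set of reserved numbers standing in for the reserved statements in a .proto file. A rename on its own is wire-compatible, but a checker can't tell a rename from a reuse, so this sketch flags both.

```python
def check_compat(old_fields: dict, new_fields: dict, reserved: set) -> list:
    """Return a list of problems found when evolving old_fields into new_fields.

    Hypothetical model: both schemas map field number -> (name, type),
    e.g. {1: ("id", "int32")}; `reserved` holds numbers retired for good.
    """
    problems = []
    for number, (name, type_) in sorted(new_fields.items()):
        if number in reserved:
            problems.append(f"field {number} ({name}) uses a reserved number")
        old = old_fields.get(number)
        if old is not None and old != (name, type_):
            # Same number, different meaning: old data will be misread.
            problems.append(f"field {number} changed from {old} to {(name, type_)}")
    for number, (name, _) in sorted(old_fields.items()):
        if number not in new_fields and number not in reserved:
            # Deleting a field is fine, but its number must never be handed out again.
            problems.append(f"field {number} ({name}) was removed without reserving its number")
    return problems


old_schema = {1: ("id", "int32"), 2: ("name", "string"), 5: ("email", "string")}
# Someone deleted email and then reused number 5 for an unrelated field.
new_schema = {1: ("id", "int32"), 2: ("name", "string"), 5: ("age", "int32")}
# The safe version: retire 5 and give the new field a fresh number.
fixed_schema = {1: ("id", "int32"), 2: ("name", "string"), 6: ("age", "int32")}

for problem in check_compat(old_schema, new_schema, reserved=set()):
    print(problem)  # field 5 changed from ('email', 'string') to ('age', 'int32')
print(check_compat(old_schema, fixed_schema, reserved={5}))  # []
```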

When Companies Actually Use This

Google uses it everywhere internally because they built it. gRPC uses protobuf by default, which is why it caught on in the microservices world. Netflix and other companies use it for service-to-service communication where bandwidth and latency matter.

Teams usually switch from JSON when they hit a performance wall: too much bandwidth or too much CPU spent parsing. If you're building a web API that returns user profiles, stick with JSON. If you're building microservices that exchange thousands of messages per second, protobuf starts making sense.