Docker Desktop requiring a paid subscription for companies with more than 250 employees (or more than $10 million in annual revenue) was the final straw. We needed something that worked the same but didn't cost $21/month per developer. Podman turned out to be exactly that, and it fixed security issues I didn't even know we had.

No More Daemon Bullshit

Docker's daemon architecture always bugged me. You have this privileged service running as root that manages everything, and if it crashes, all your containers die with it (unless you've turned on `live-restore`). I've had the Docker daemon lock up and take down local development environments more times than I care to count.

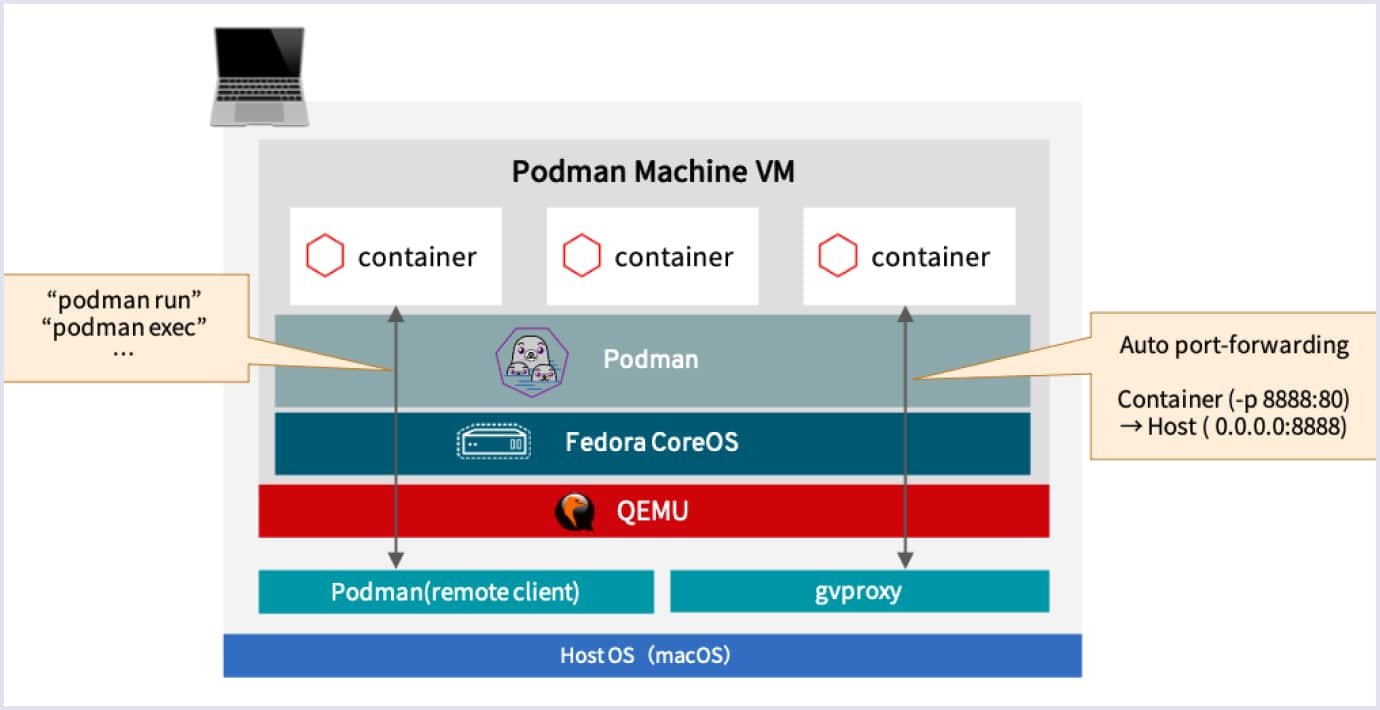

Podman uses a fork/exec model instead. No daemon, no root privileges unless you explicitly ask for them: each container runs under a small per-container monitor process (conmon) started from your own user session. When I run `podman run nginx`, nginx runs on the host as my user, not as root. If one container segfaults, it doesn't affect anything else.
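To see that user mapping on your own machine, here is a minimal sketch. It assumes a rootless setup and a container you've already started (for example `podman run -d --name web docker.io/library/nginx`); the helper name `show_user_mapping` is made up for illustration, while `pid`, `user`, `huser` and `args` are real `podman top` format descriptors.

```shell
# Hypothetical helper: for a running container, list each process with the user
# it runs as inside the container (user) next to the host user it actually maps
# to (huser). All four column names are standard `podman top` descriptors.
show_user_mapping() {
  local container="$1"
  podman top "$container" pid user huser args
}
```

On a typical rootless install, `show_user_mapping web` should list the nginx master process as `root` in the `user` column but as your own account in the `huser` column: root inside the container is just you on the host.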

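The security implications are massive. No more `/var/run/docker.sock`, which effectively gives any process or container that can reach it root access to your entire system. CVE-2019-5736 scared the shit out of everyone running Docker in production: a malicious container could overwrite the host's runc binary and run code as root on the host. The bug was in runc itself, which Podman can use too, but rootless Podman makes this class of attack much harder, because an escaped process only has your user's privileges and can't overwrite a root-owned runc binary.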
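A quick way to tell whether an account on a Docker host is root-equivalent is to check whether it can write to the daemon socket. This is a sketch: the function name is hypothetical, and the default path assumes the standard Linux socket location.

```shell
# Hypothetical check: can the current user write to the Docker daemon socket?
# Write access is effectively root, since e.g. `docker run -v /:/host ...`
# hands a container the host's whole filesystem.
docker_socket_is_reachable() {
  local sock="${1:-/var/run/docker.sock}"
  # -S: path exists and is a Unix socket; -w: current user can write to it
  [ -S "$sock" ] && [ -w "$sock" ]
}
```

Usage: `docker_socket_is_reachable && echo "this account is effectively root"`. Podman only exposes an API socket if you turn one on (for example with its `podman.socket` systemd unit), and a rootless one runs as your user.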

The Migration Was Actually Easy

I was dreading switching our entire team's workflow, but it took about 30 minutes. Most Docker commands work identically:

| Docker | Podman |
|---|---|
| docker run | podman run |
| docker build | podman build |
| docker ps | podman ps |

I just added `alias docker=podman` to everyone's shell and 90% of our scripts kept working. The remaining 10% were edge cases around networking and volume permissions that we fixed as we found them.
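One caveat with the alias: bash doesn't expand aliases in non-interactive shells, so a script run as `bash deploy.sh` still looks for a real `docker` binary. A small executable shim on `PATH` covers both cases. This is a minimal sketch; `install_docker_shim` and the default `~/.local/bin` location are assumptions, and Fedora and RHEL package the same idea as `podman-docker`.

```shell
# Hypothetical helper: install a tiny "docker" executable that forwards every
# argument to podman. Unlike `alias docker=podman`, this also works inside
# scripts, because it is a real file found via PATH rather than a shell alias.
install_docker_shim() {
  local bindir="${1:-$HOME/.local/bin}"
  mkdir -p "$bindir"
  # Writes a two-line script: a shebang, then exec podman with the original args
  printf '#!/bin/sh\nexec podman "$@"\n' > "$bindir/docker"
  chmod +x "$bindir/docker"
}
```

Run `install_docker_shim` once per machine and make sure `~/.local/bin` comes before any real Docker install on `PATH`.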

The only real gotcha was that rootless containers can't bind to ports below 1024 without configuration. So if you have nginx listening on port 80, you need to either run `sudo sysctl net.ipv4.ip_unprivileged_port_start=80` (and put the setting in `/etc/sysctl.d/` if it should survive a reboot) or map to a higher port, such as `-p 8080:80`.
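A sketch of both options. It assumes a Linux host, where the threshold lives at `/proc/sys/net/ipv4/ip_unprivileged_port_start`; the `publish_spec` helper, its +8000 offset and the sysctl.d file name are illustrative choices, not Podman conventions.

```shell
# Lowest port an unprivileged (rootless) process may bind on this Linux host.
# The kernel exposes it as net.ipv4.ip_unprivileged_port_start (default 1024).
unprivileged_port_start() {
  cat /proc/sys/net/ipv4/ip_unprivileged_port_start 2>/dev/null || echo 1024
}

# Hypothetical helper: pick a -p mapping for a container port. Publish it as-is
# when rootless binding is allowed, otherwise shift it up by 8000 (80 -> 8080).
publish_spec() {
  local port="$1" start="${2:-$(unprivileged_port_start)}"
  if [ "$port" -ge "$start" ]; then
    echo "$port:$port"
  else
    echo "$((port + 8000)):$port"
  fi
}

# Option 1 (needs root once): lower the threshold, and persist it across reboots
#   sudo sysctl net.ipv4.ip_unprivileged_port_start=80
#   echo 'net.ipv4.ip_unprivileged_port_start=80' | sudo tee /etc/sysctl.d/99-unprivileged-ports.conf
# Option 2: keep the default and publish on a high host port instead
#   podman run -d -p "$(publish_spec 80)" docker.io/library/nginx
```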

Kubernetes Integration That Actually Works

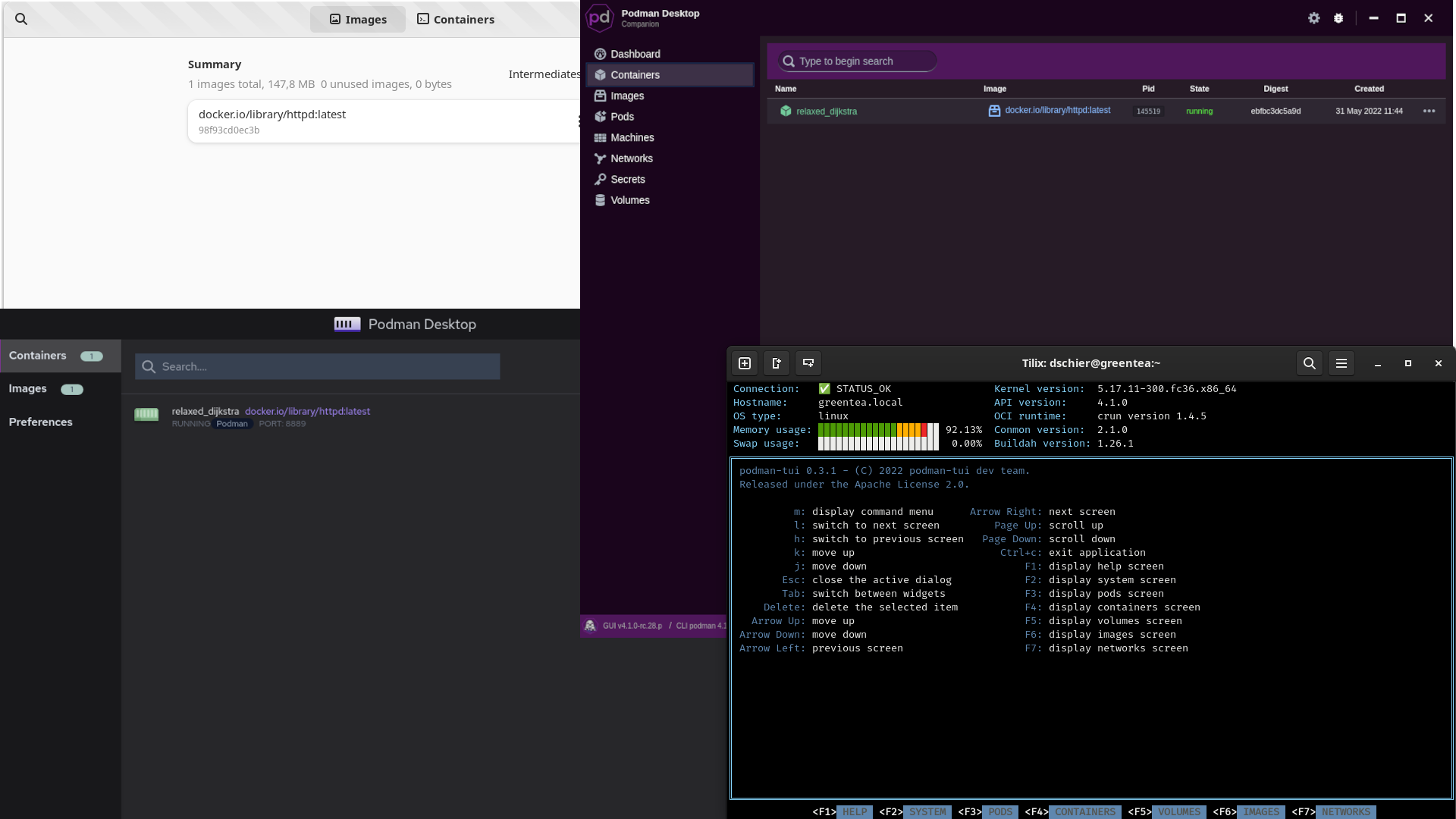

Docker Compose is fine for simple stuff, but we deploy to Kubernetes, and the impedance mismatch between local development and production was always annoying. Podman supports pods natively, so you can group containers locally the same way Kubernetes groups them in production: containers in a pod share a network namespace and reach each other over localhost.

Better yet, `podman generate kube` (also available as `podman kube generate` in newer releases) creates valid Kubernetes YAML from your local setup. No more manually translating Docker Compose files or dealing with kompose's quirks. What runs locally can deploy to Kubernetes with minimal changes.

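```shell
# Create a pod named webapp; host port 8080 maps to port 80 inside the pod
podman pod create --name webapp -p 8080:80

# Run nginx and redis in the pod. -d detaches so the next command isn't blocked,
# and fully qualified image names avoid Podman's short-name registry prompt.
# Containers in a pod share a network namespace, so nginx reaches redis on localhost:6379.
podman run -d --pod webapp --name web docker.io/library/nginx
podman run -d --pod webapp --name cache docker.io/library/redis

# Generate Kubernetes YAML (a Pod spec by default) from the running pod
podman generate kube webapp > webapp-deployment.yaml
```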
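The generated file also runs locally, which is a cheap way to check it before it reaches a cluster. A minimal sketch, assuming the pod and file names from the example above; the helper name is hypothetical, while `podman play kube` is the real counterpart of `podman generate kube` (newer releases also spell it `podman kube play`).

```shell
# Hypothetical helper: throw away the hand-built pod and recreate it purely from
# the generated YAML, proving the file alone describes the whole setup.
recreate_from_yaml() {
  local pod="$1" yaml="$2"
  podman pod rm -f "$pod"   # remove the pod and all containers in it
  podman play kube "$yaml"  # rebuild pod + containers from the YAML
}
```

Usage: `recreate_from_yaml webapp webapp-deployment.yaml`. If the recreated pod behaves the same, `kubectl apply -f webapp-deployment.yaml` against your cluster is the next step.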

Real World Performance

I'm not going to quote bullshit benchmarks about "22% faster startup" because performance depends entirely on your workload. What I can say is that removing the daemon overhead makes container startup feel snappier, especially in CI where you're spinning up containers constantly.

The memory usage is noticeably lower too. On our machines, Docker's daemon sat at about 100MB just doing nothing. Podman has no idle process at all; it only uses memory while containers are actually running. For development laptops, this matters.
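To check the daemon's footprint on your own hardware rather than trusting my number, a sketch like this sums resident memory by process name. It assumes a Linux host with procps `ps`; the `rss_mb` name is made up. Docker Desktop runs its daemon inside a VM, so on macOS or Windows it will report 0.

```shell
# Hypothetical helper: total resident memory, in whole MB, of every process
# whose command name matches $1 (prints 0 if none are running).
rss_mb() {
  # rss= prints each process's RSS in KiB with no header line
  ps -C "$1" -o rss= | awk '{ kb += $1 } END { printf "%d\n", kb / 1024 }'
}
```

Compare `rss_mb dockerd` (add `rss_mb containerd` for the rest of Docker's always-on stack) with `rss_mb conmon`, Podman's small per-container monitor, which is 0 when nothing is running.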

The real win is in CI/CD. We run containers in GitLab CI and GitHub Actions without needing Docker-in-Docker or special privileges. Once a runner has Podman installed and subuid/subgid ranges set up for its user, rootless containers just work: no daemon socket mounting, no security exceptions.
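A CI job then reduces to plain commands run by the job's own user. A sketch, assuming the runner has Podman installed with subuid/subgid ranges for that user; `ci_build_and_test`, the image tag and `./run-tests.sh` are placeholders for your own project.

```shell
# Hypothetical CI step: build and test with rootless Podman. There is no
# docker.sock to mount and no docker:dind service; the job's user runs it all.
ci_build_and_test() {
  local image="$1"
  # Build from the Dockerfile/Containerfile in the current directory, then run
  # the (placeholder) test script in a throwaway container; fail fast on error.
  podman build -t "$image" . &&
    podman run --rm "$image" ./run-tests.sh
}
```

In GitLab CI that might be `ci_build_and_test "localhost/myapp:${CI_COMMIT_SHORT_SHA}"`; on GitHub Actions, `${GITHUB_SHA}` plays the same role.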

This is why Red Hat made Podman the default container engine in RHEL 8 and later: it's more secure and doesn't require maintaining a privileged daemon. If you're running enterprise Linux, Podman is probably already installed, or one `dnf install podman` away.

But the real question is how this all works under the hood, and whether the architectural differences actually matter in practice.