DNS Resolution Failures - Why Everything Breaks

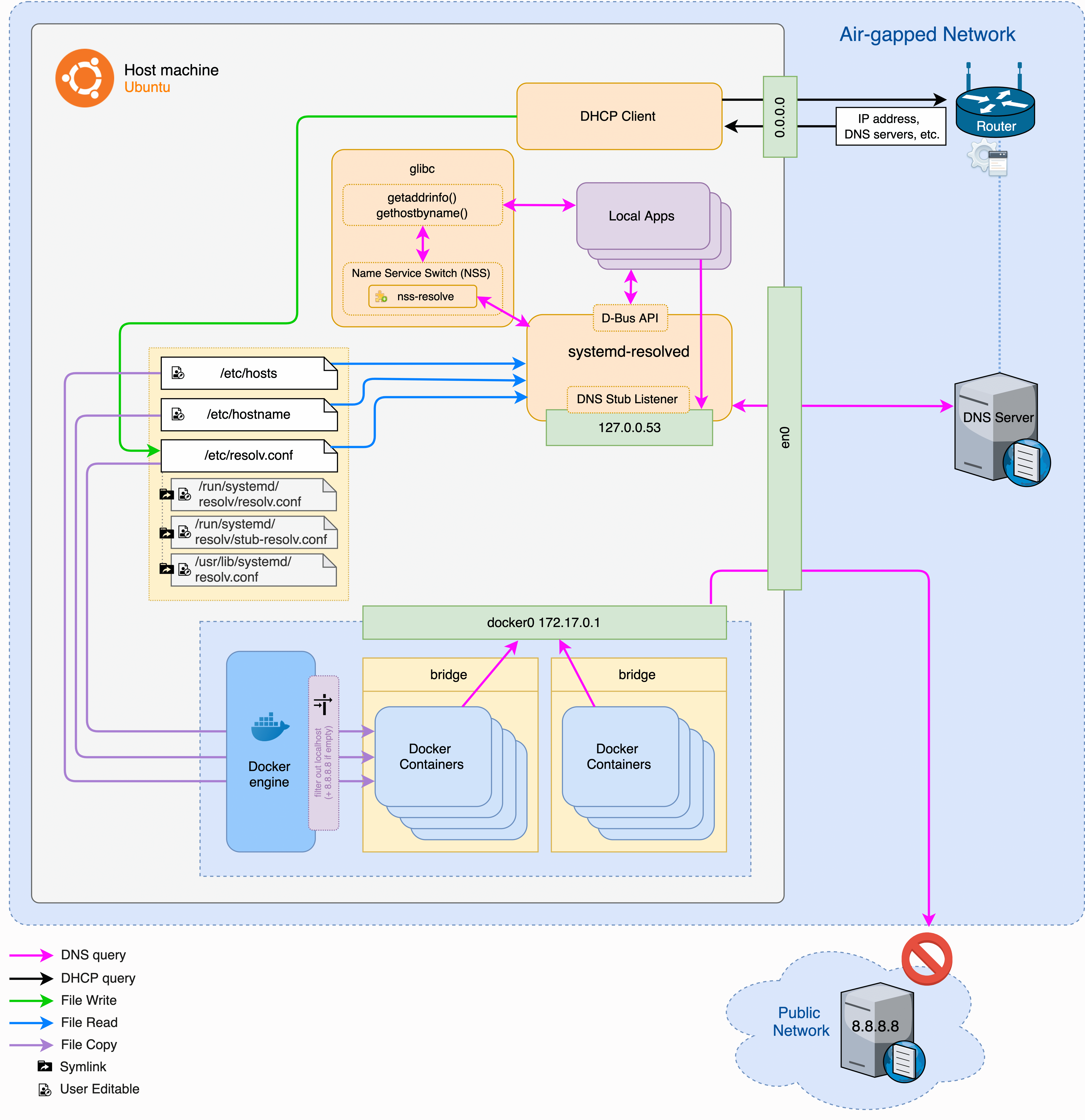

Most Docker networking bullshit starts with DNS. Your containers can ping 8.8.8.8 just fine but somehow "google.com doesn't exist." You get those useless "temporary failure in name resolution" errors even though your host machine works perfectly.

This has broken production for me more times than I want to count. Usually happens when Docker's DNS server gets into a fight with systemd-resolved. Ubuntu's recent versions made this worse - they switched networking stuff around and now Docker's DNS routing breaks in new and creative ways.

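Containers on user-defined networks (including the ones Docker Compose creates) are supposed to use Docker's embedded DNS server at 127.0.0.11. But systemd-resolved on the host sometimes leaks through: the container inherits the host's resolv.conf, which points to the stub resolver at 127.0.0.53 instead. Inside the container that address is the container's own loopback, where nothing is listening, so nothing resolves.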

Had this exact thing happen with a Node API container. Could reach the database by IP but couldn't hit external APIs like Stripe. First I spent two hours thinking it was a firewall issue, checked iptables, security groups, the works. Then I thought maybe it was the Stripe API keys, wasted another hour testing different endpoints. Finally realized I should check what DNS the container was actually using - the container's /etc/resolv.conf was pointing to some random systemd bullshit instead of working DNS. Fixed it by adding explicit DNS servers to the compose file, but only after losing half a day to stupid assumptions.

The quick diagnosis:

```bash
# Check what DNS servers your container is using
docker exec container_name cat /etc/resolv.conf

# On a user-defined network this should show 127.0.0.11
# (containers on the default bridge get the host's upstream servers instead)
# If it shows 127.0.0.53, you've got systemd-resolved interfering
```
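If you're checking this across a pile of containers, a small helper saves retyping it. This is a minimal sketch that assumes bash and an image with cat in it (almost all have one). The function names classify_nameservers and check_container_dns are made up for this example. It only looks at nameserver lines, not search domains or options.

```bash
# Hypothetical helpers: classify the resolver a container was given.

# Reads resolv.conf text on stdin and prints one verdict:
#   systemd-stub     -> 127.0.0.53 present (host stub leaked in, will not resolve)
#   docker-embedded  -> 127.0.0.11 (user-defined network, expected)
#   upstream         -> other servers (e.g. default bridge copying host upstreams)
#   none             -> no nameserver lines at all
classify_nameservers() {
  local servers
  servers=$(awk '$1 == "nameserver" { print $2 }')
  if [[ -z "$servers" ]]; then
    echo "none"
  elif grep -qx '127\.0\.0\.53' <<<"$servers"; then
    echo "systemd-stub"
  elif grep -qx '127\.0\.0\.11' <<<"$servers"; then
    echo "docker-embedded"
  else
    echo "upstream"
  fi
}

# Runs the same check against a live container (name or ID as $1).
check_container_dns() {
  local container="$1"
  docker exec "$container" cat /etc/resolv.conf | classify_nameservers
}
```

Usage: check_container_dns my_api_container. A systemd-stub result means the host's stub resolver leaked into the container.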

The emergency fix:

```bash
# Override DNS for immediate relief
docker run --dns=8.8.8.8 --dns=1.1.1.1 your_image
```

For docker-compose, add to your service:

```yaml
services:
  your_service:
    dns:
      - 8.8.8.8
      - 1.1.1.1
```
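Before you pin DNS servers everywhere, confirm that explicit servers actually fix resolution on your host. That separates a resolver problem from a routing or firewall problem. This is a rough sketch with a few assumptions:

- The debian:stable-slim image can be pulled. It's used because its getent exits non-zero when a name doesn't resolve.
- 1.1.1.1 is reachable from your network.
- dns_override_helps is a hypothetical name.

```bash
# Hypothetical helper: does an explicit --dns fix name resolution?
# Prints a verdict; returns 0 only when the override is what fixes it.
dns_override_helps() {
  local name="${1:-google.com}"
  local default_ok=no override_ok=no

  # Throwaway container using whatever resolver Docker assigns by default
  if docker run --rm debian:stable-slim getent hosts "$name" >/dev/null 2>&1; then
    default_ok=yes
  fi
  # Same lookup with an explicit upstream resolver
  if docker run --rm --dns=1.1.1.1 debian:stable-slim getent hosts "$name" >/dev/null 2>&1; then
    override_ok=yes
  fi

  if [[ $default_ok == yes ]]; then
    echo "default DNS works: the problem is elsewhere"
    return 1
  elif [[ $override_ok == yes ]]; then
    echo "only explicit DNS works: pin DNS servers"
    return 0
  else
    echo "both fail: check routing and firewalls before blaming DNS"
    return 1
  fi
}
```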

Docker's DNS docs are useless for the systemd-resolved bullshit - they mention it in one paragraph buried in 'advanced configuration' like anyone reads that far. The official Docker DNS troubleshooting guide covers jack shit about Ubuntu's networking changes. systemd-resolved conflicts are well documented on GitHub but the Docker team doesn't prioritize fixing them. Ubuntu forums are full of workarounds. This is still an active problem in 2025 - just check Reddit for the latest horror stories about containers randomly losing internet access.

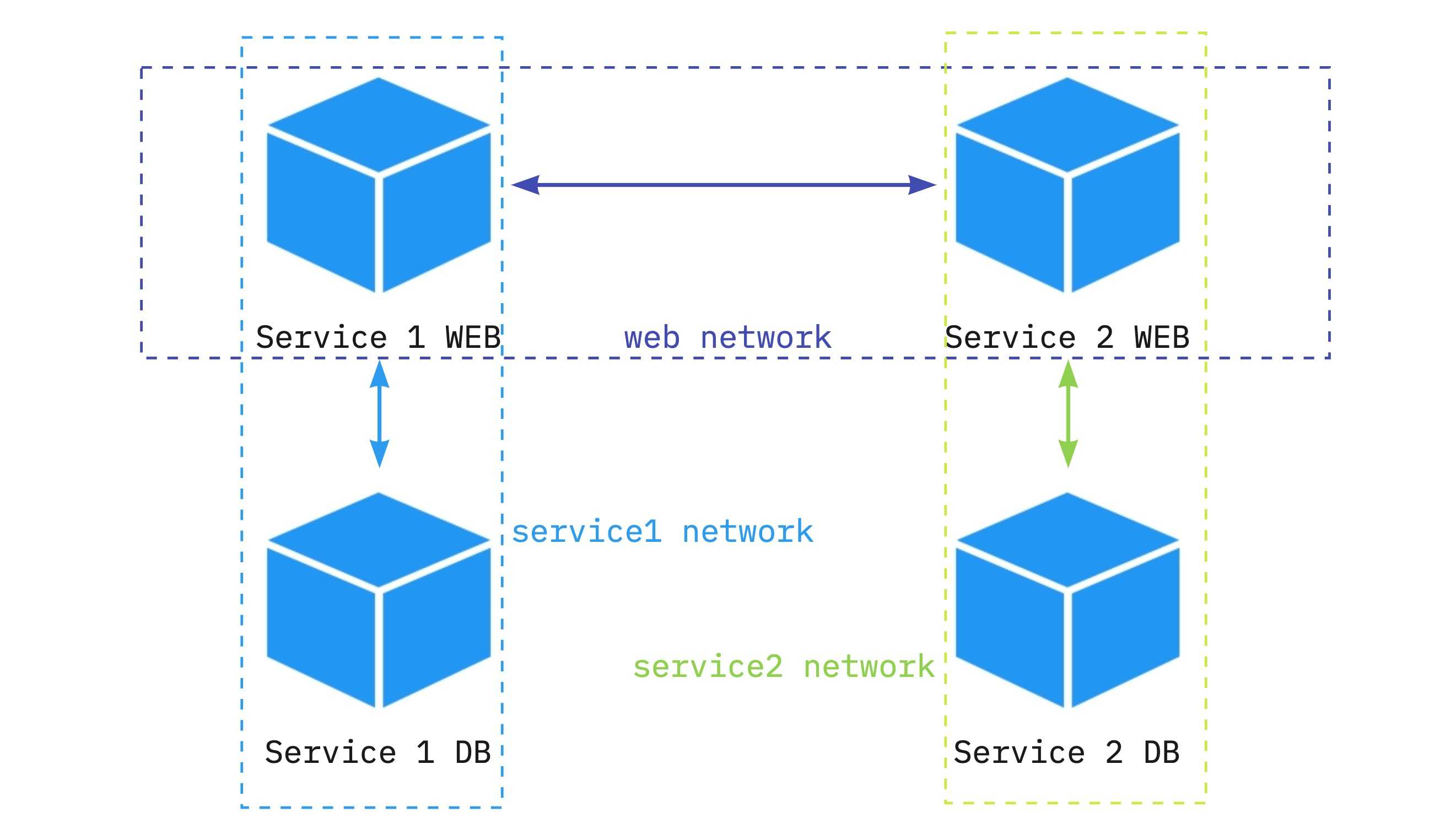

Container-to-Container Communication Issues - Why Your Containers Act Like Strangers

Problem symptoms: Your containers are supposed to be on the same network but they act like they've never met. Container A tries to reach Container B using its name and gets "connection refused" or "could not resolve host".

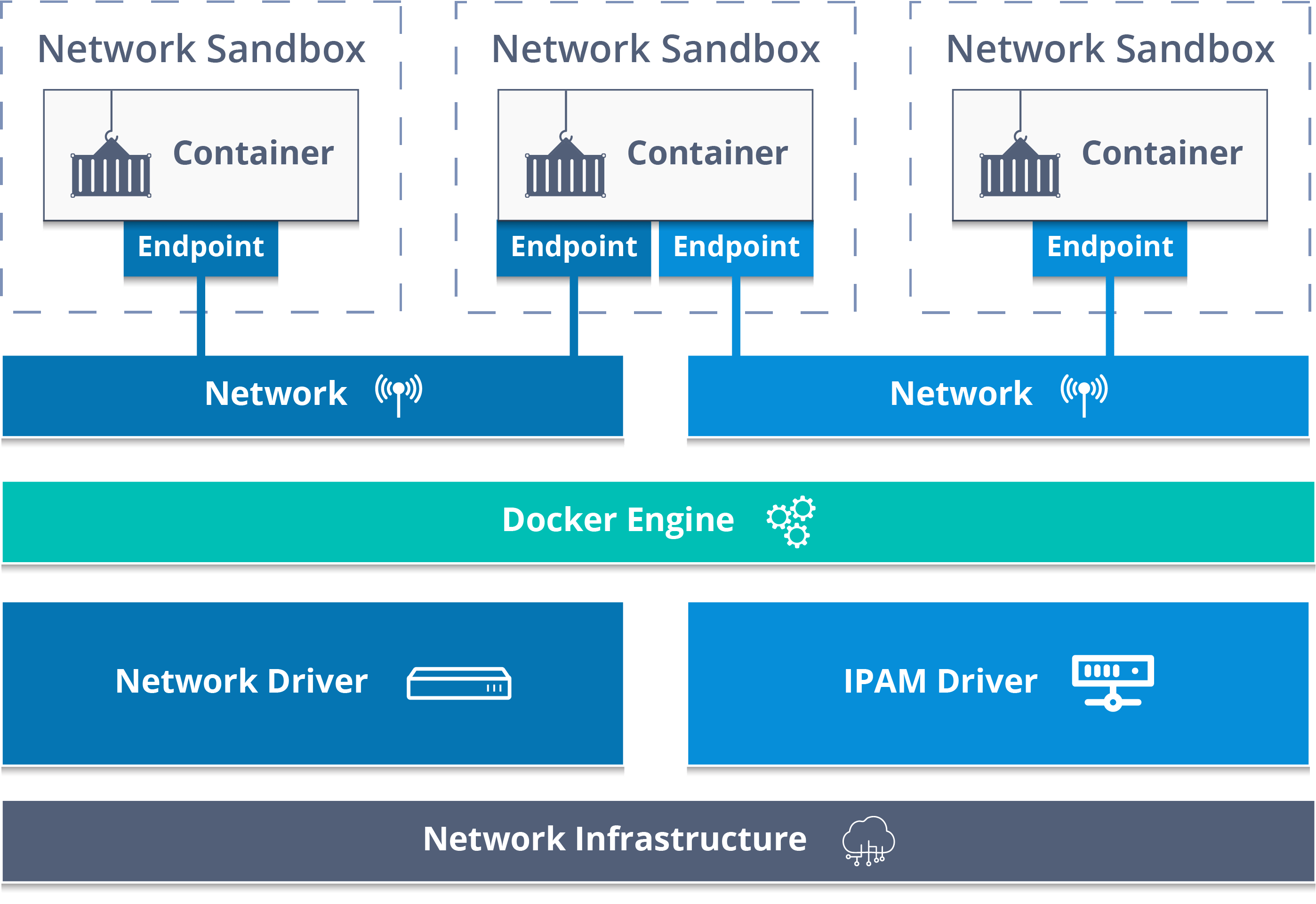

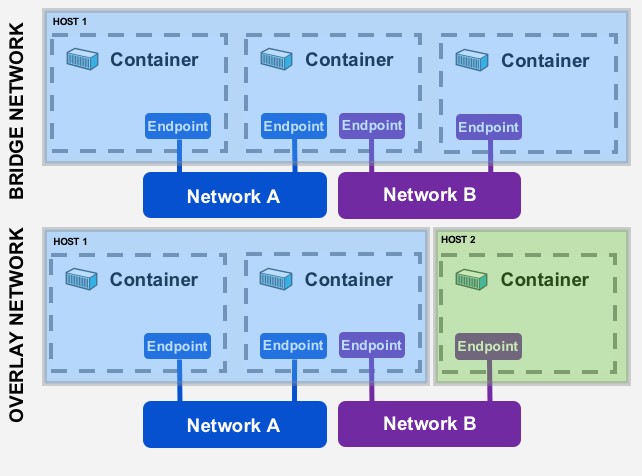

Why this bullshit happens: Docker's default bridge network only supports IP-based communication between containers (apart from the deprecated --link flag). You can't just use container names like http://api:8080 - that only works on user-defined networks, where Docker's embedded DNS resolves container names. The Docker bridge network documentation hides this shit in "advanced configuration" where nobody reads it until their containers are on fire. Stack Overflow has hundreds of questions about this. Docker Compose automatically creates a user-defined network for each project, which is why names work there but not with plain docker run. The Container Network Model explains the architecture but doesn't help when you're debugging at 3AM.
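A quick way to tell whether you're in the default-bridge trap is to list the networks a container is attached to. This minimal sketch uses docker inspect with a Go template. attached_networks and warn_if_default_bridge are hypothetical names.

```bash
# Hypothetical helpers: detect containers that only sit on the default bridge,
# where name-based discovery between containers does not work.

# Prints one network name per line for the given container.
attached_networks() {
  docker inspect -f '{{range $name, $cfg := .NetworkSettings.Networks}}{{println $name}}{{end}}' "$1"
}

# Returns 1 (and says why) if "bridge" is the container's only network.
warn_if_default_bridge() {
  local nets
  nets=$(attached_networks "$1" | sed '/^$/d')
  if [[ "$nets" == "bridge" ]]; then
    echo "$1 is only on the default bridge: use a user-defined network for name resolution"
    return 1
  fi
  echo "$1 networks: $(tr '\n' ' ' <<<"$nets")"
}
```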

Real example from production:

```bash
# I tried this first like an idiot
docker run --name db postgres
docker run --name app --link db myapp  # deprecated and I knew it but tried anyway
# Obviously didn't work, spent an hour figuring out why

# Check what network they're actually on (should have done this first)
docker network ls
docker network inspect bridge
```

The actual solution:

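```bash
# Create a custom bridge network
docker network create --driver bridge myapp-network

# Connect both containers to it (-d keeps the first command from blocking the shell;
# the official postgres image won't start without POSTGRES_PASSWORD, "example" is a placeholder)
docker run -d --name db --network myapp-network -e POSTGRES_PASSWORD=example postgres
docker run -d --name app --network myapp-network myapp

# Now container "db" is resolvable from "app"
```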
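To make this repeatable in a deploy script, create the network only if it's missing. To prove the fix instead of assuming it, resolve one container's name from inside the other. This sketch assumes the app image ships getent, which is true of Debian- and Ubuntu-based images but not guaranteed for Alpine or distroless. ensure_network and can_resolve are hypothetical names.

```bash
# Hypothetical helpers for the user-defined network setup.

# Creates the bridge network only if it does not exist yet (safe to rerun).
ensure_network() {
  local net="$1"
  docker network inspect "$net" >/dev/null 2>&1 \
    || docker network create --driver bridge "$net" >/dev/null
}

# Checks that container $1 can resolve container $2 by name via Docker's DNS.
can_resolve() {
  local from="$1" target="$2"
  if docker exec "$from" getent hosts "$target" >/dev/null 2>&1; then
    echo "$from -> $target: resolves"
  else
    echo "$from -> $target: does NOT resolve"
    return 1
  fi
}
```

Usage: run ensure_network myapp-network, start both containers on it, then run can_resolve app db.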

This shit is documented in the Docker networking guide, but of course it's in "advanced configuration" where you only find it after wasting half your day. Docker Labs networking tutorials have hands-on examples. Kubernetes networking concepts work similarly if you're headed that direction.

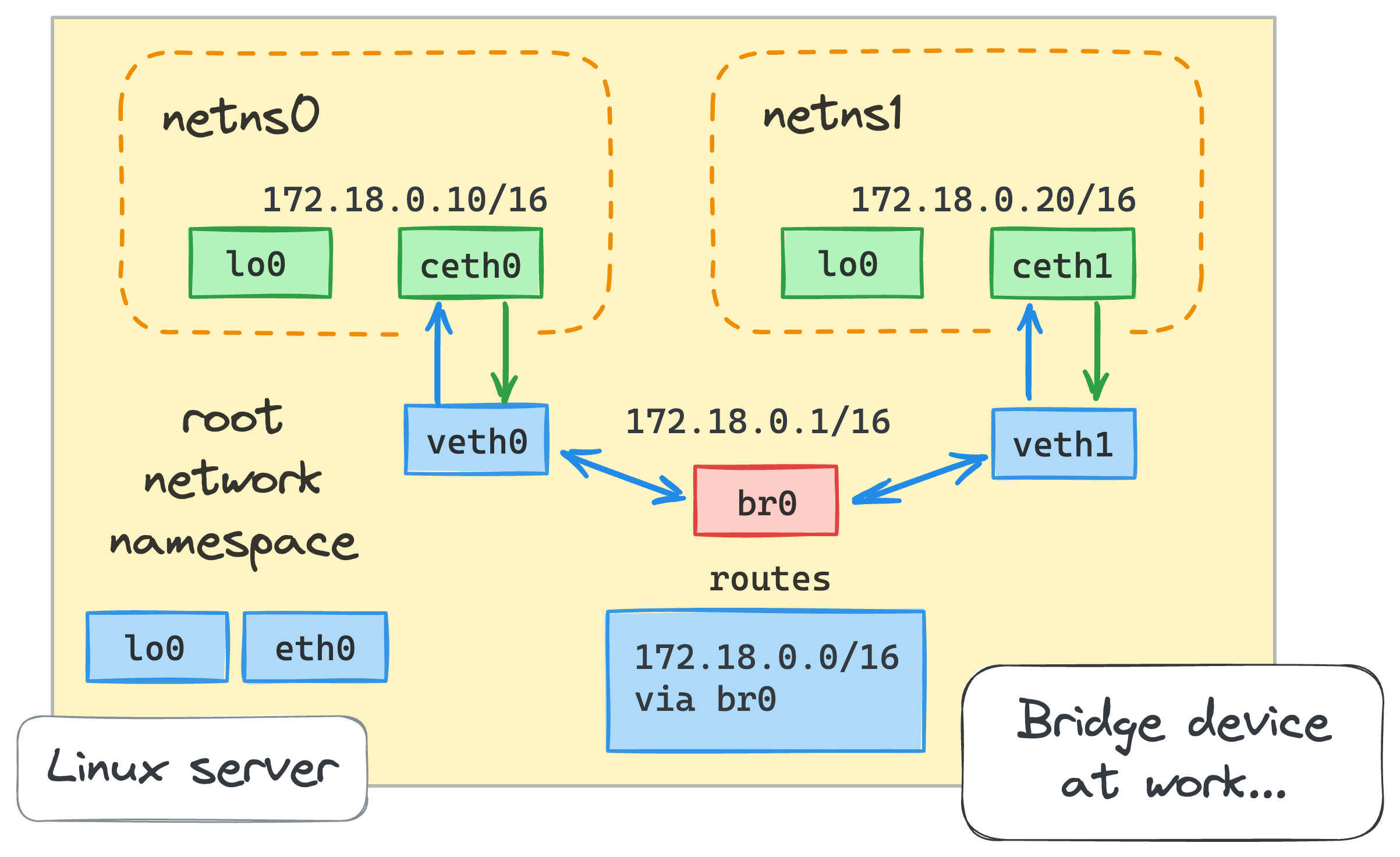

Port Mapping Issues - When Docker Says It Worked But It Fucking Didn't

Problem symptoms: You set up port mapping with -p 8080:8080 and Docker says it worked, but when you try to hit it from outside - connection refused. This is Docker networking's favorite way to waste your afternoon.

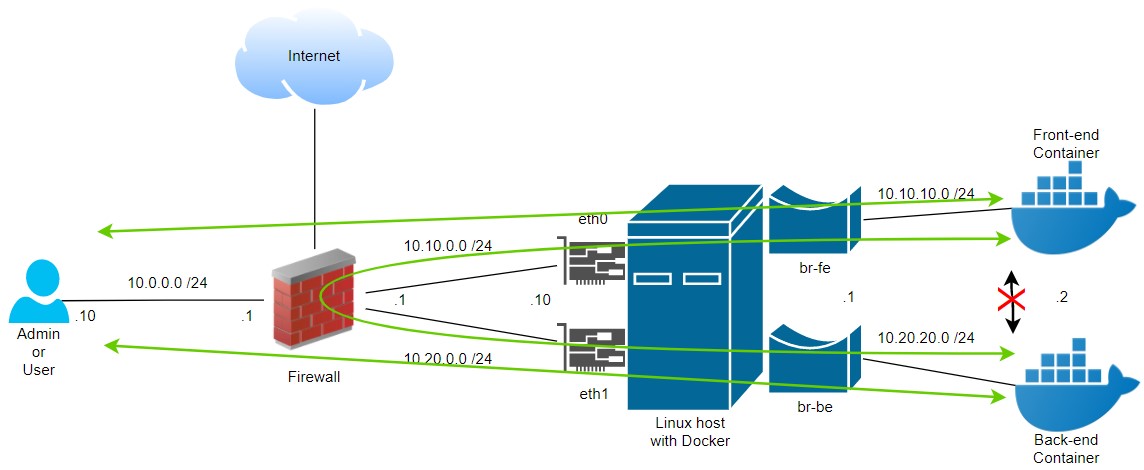

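The real culprit: Your application is binding to 127.0.0.1 instead of 0.0.0.0. When an app binds to localhost inside a container, it's only accessible from within that container's network namespace. Docker forwards published-port traffic to the container's own network interface, not its loopback, so Docker can map the port all it wants and there's still nothing listening where the traffic arrives. Flask's development server is a classic offender: app.run() binds to 127.0.0.1 unless you pass host="0.0.0.0".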

Had this exact problem with a Flask API. Worked fine in dev, connection refused in production. Started by restarting the container like five times thinking that would magically fix it. Then spent hours checking AWS security groups, thinking the firewall was blocking it. Tried different ports, thinking there was a conflict. Even wondered if our load balancer was broken. Finally ran netstat inside the container and saw Flask was binding to 127.0.0.1 instead of 0.0.0.0. One line change in the Flask app and it worked. The failed connection retries from our health checks ran up our AWS bill while I was debugging, because of course they did.

Check what your app is actually listening on:

```bash
# netstat needs net-tools in the image; ss -tulpn (iproute2) shows the same listeners
docker exec container_name netstat -tulpn
# Look for 0.0.0.0:8080, not 127.0.0.1:8080
```
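If you're checking several ports, or scripting this into a health check, you can classify the listen address automatically. This sketch reads netstat -tln or ss -tln output on stdin and only looks at listeners for one port. bind_scope is a hypothetical name.

```bash
# Hypothetical helper: given netstat/ss listener output on stdin and a port,
# report whether traffic from outside the container can reach that port.
bind_scope() {
  local port="$1"
  local addrs
  # Collect the address part of any field ending in ":<port>" (the local address column).
  addrs=$(awk -v p=":${port}" '{
    for (i = 1; i <= NF; i++) {
      n = length($i) - length(p)
      if (n > 0 && substr($i, n + 1) == p) print substr($i, 1, n)
    }
  }')
  if [[ -z "$addrs" ]]; then
    echo "not-listening"
  elif grep -qxE '0\.0\.0\.0|\*|\[::\]|::' <<<"$addrs"; then
    echo "all-interfaces"    # reachable through a published port
  elif grep -qxE '127\..*|\[::1\]|::1' <<<"$addrs"; then
    echo "loopback-only"     # Docker's port mapping cannot reach this
  else
    echo "specific-address"
  fi
}
```

Usage: docker exec container_name netstat -tln | bind_scope 8080.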

Network security considerations: Cloud platform firewalls (AWS security groups, GCP firewall rules) and local iptables rules can prevent external access to mapped ports.

Debug port mapping issues:

```bash
# Check if Docker actually mapped the port
docker port container_name

# Test from the Docker host first
curl localhost:8080

# Then test from the container's perspective (needs curl inside the image)
docker exec container_name curl localhost:8080

# Check what's blocking external access
sudo iptables -L -n | grep 8080
```
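Each of those checks rules out one layer, so it helps to run them in a fixed order and stop at the first failure. This sketch runs the same checks but tests inside the container before the host. That way "works inside, fails from the host" points straight at the bind address. It assumes the following:

- curl exists both on the host and inside the container image.
- The service speaks HTTP.
- probe_port is a hypothetical name.

```bash
# Hypothetical helper: walk the port-mapping checks in order and report
# the first layer that fails. $1 = container, $2 = container port.
probe_port() {
  local container="$1" port="$2" host_port

  # 1. Is the port published at all? docker port prints e.g. "0.0.0.0:8080".
  host_port=$(docker port "$container" "$port" 2>/dev/null | head -n 1)
  host_port="${host_port##*:}"
  if [[ -z "$host_port" ]]; then
    echo "FAIL publish: $port is not published (check your -p flag)"
    return 1
  fi

  # 2. Does the app answer inside its own container?
  if ! docker exec "$container" curl -s -o /dev/null --max-time 5 "http://localhost:$port/"; then
    echo "FAIL app: nothing answering on $port inside the container"
    return 1
  fi

  # 3. Does the published port work from the Docker host?
  if ! curl -s -o /dev/null --max-time 5 "http://localhost:$host_port/"; then
    echo "FAIL mapping: app runs but host port $host_port fails (check the bind address)"
    return 1
  fi

  echo "OK: $container:$port reachable from the host on $host_port; check firewalls next"
}
```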

Platform differences: Docker Desktop on Windows and macOS uses virtualization layers that can affect port mapping behavior, particularly with WSL2 integration. Docker Desktop for Windows docs explain the virtualization stack. WSL2 integration guide covers the networking quirks. Host networking can provide better performance but sacrifices isolation - only use it when you've actually measured bridge networking as a bottleneck. Docker performance benchmarks show the overhead differences.

Ubuntu 24.04 Networking Changes

Ubuntu 24.04 introduced networking changes that affect Docker container internet connectivity. Common symptoms include:

- Containers can't reach external websites

- DNS resolution fails intermittently

- docker run --rm busybox ping -c 3 google.com fails (use busybox here: the stock ubuntu image doesn't include ping)

The diagnosis:

```bash
# Check if systemd-resolved is interfering
systemctl status systemd-resolved

# Check Docker daemon configuration
cat /etc/docker/daemon.json

# Look for DNS conflicts
docker run --rm busybox nslookup google.com
```
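On the host side, the telltale sign is /etc/resolv.conf pointing at systemd-resolved's stub listener. This small sketch checks both conditions. host_uses_resolved_stub is a hypothetical name, and the file path is a parameter so you can point it at a copy. Docker normally tries to work around the stub on its own, so treat a positive result as a lead rather than proof.

```bash
# Hypothetical helper: is this host resolving through systemd-resolved's stub?
# $1 = resolv.conf path (defaults to /etc/resolv.conf).
host_uses_resolved_stub() {
  local conf="${1:-/etc/resolv.conf}"

  if ! systemctl is-active --quiet systemd-resolved; then
    echo "systemd-resolved not active"
    return 1
  fi
  # On Ubuntu this file is normally a symlink to stub-resolv.conf (127.0.0.53).
  if grep -qE '^nameserver[[:space:]]+127\.0\.0\.53([[:space:]]|$)' "$conf"; then
    echo "stub in use: $conf -> $(readlink -f "$conf")"
    return 0
  fi
  echo "systemd-resolved active, but $conf lists real upstream servers"
  return 1
}
```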

The fix that actually works:

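Edit /etc/docker/daemon.json. If the file already exists, merge these keys into it, because dockerd refuses to start on invalid JSON:

```json
{
  "dns": ["8.8.8.8", "1.1.1.1"],
  "fixed-cidr": "172.17.0.0/16"
}
```

Restart the Docker daemon:

```bash
sudo systemctl restart docker
```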
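The biggest risk with that fix is clobbering an existing daemon.json (log drivers, registry mirrors and so on) or leaving broken JSON behind. This cautious sketch only writes the file when it doesn't exist yet or is empty, and refuses otherwise so you merge by hand. write_daemon_dns is a hypothetical name, and the DNS servers are whatever you pass in.

```bash
# Hypothetical helper: write a daemon.json containing only the "dns" key,
# but never overwrite a file that already has content.
# Usage: write_daemon_dns /etc/docker/daemon.json 8.8.8.8 1.1.1.1
write_daemon_dns() {
  local file="$1"; shift
  if (( $# == 0 )); then
    echo "usage: write_daemon_dns FILE DNS_SERVER..." >&2
    return 2
  fi
  if [[ -s "$file" ]]; then
    echo "$file already has content; merge the \"dns\" key by hand" >&2
    return 1
  fi

  # Build "a", "b", ... from the remaining arguments.
  local list="" server
  for server in "$@"; do
    list+="${list:+, }\"$server\""
  done
  printf '{\n  "dns": [%s]\n}\n' "$list" > "$file"
}
```

Because /etc/docker is root-owned, run this from a root shell (for example sudo -i), then restart Docker.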

This issue broke tons of deployments when Ubuntu 24.04 came out. Containers could reach local stuff fine but external HTTPS requests would randomly fail. I initially thought it was our CDN or some AWS outage, checked the status pages like an idiot. Then I thought maybe our container images were corrupted, rebuilt them from scratch. Tried different Ubuntu versions, different Docker versions, nothing worked. Spent a whole weekend diving into kernel logs and iptables rules before finally finding some random GitHub comment mentioning systemd-resolved conflicts. Once I knew what to look for, the fix was simple, but getting there was pure hell. The Docker docs mention it but don't explain how much it breaks everything. Ubuntu release notes buried the networking changes. Docker Forums have detailed troubleshooting threads. GitHub issues track the ongoing problems.

WSL2 and Docker Desktop Networking - Welcome to Hard Mode

Docker Desktop with WSL2 on Windows is a special kind of hell. Port forwarding just stops working randomly. Containers can't reach Windows host services. Everything breaks after Windows updates. Microsoft and Docker somehow created the most frustrating dev experience possible.

Port forwarding dies after Windows updates or when your machine sleeps. Containers can't reach SQL Server or other Windows services because WSL2 is in its own weird network space. You get random "connection refused" errors that magically fix themselves when you restart Docker Desktop.

Windows-specific debugging:

```bash
# Check WSL2 networking (run these from PowerShell or cmd on the Windows side)
wsl --list --verbose
wsl --status

# Test container to Windows host connectivity
# From inside a container, the Windows host is reachable as host.docker.internal
docker run --rm busybox ping -c 3 host.docker.internal
```

The Windows workarounds:

```bash
# For reaching the host from a container: Docker Desktop already defines
# host.docker.internal; this flag maps it explicitly (host-gateway needs Docker 20.10+)
docker run --add-host=host.docker.internal:host-gateway your_image

# For persistent port mapping issues:
# restart the whole Docker Desktop, not just the containers
```

Docker Desktop networking on Windows requires additional configuration for complex networking scenarios.

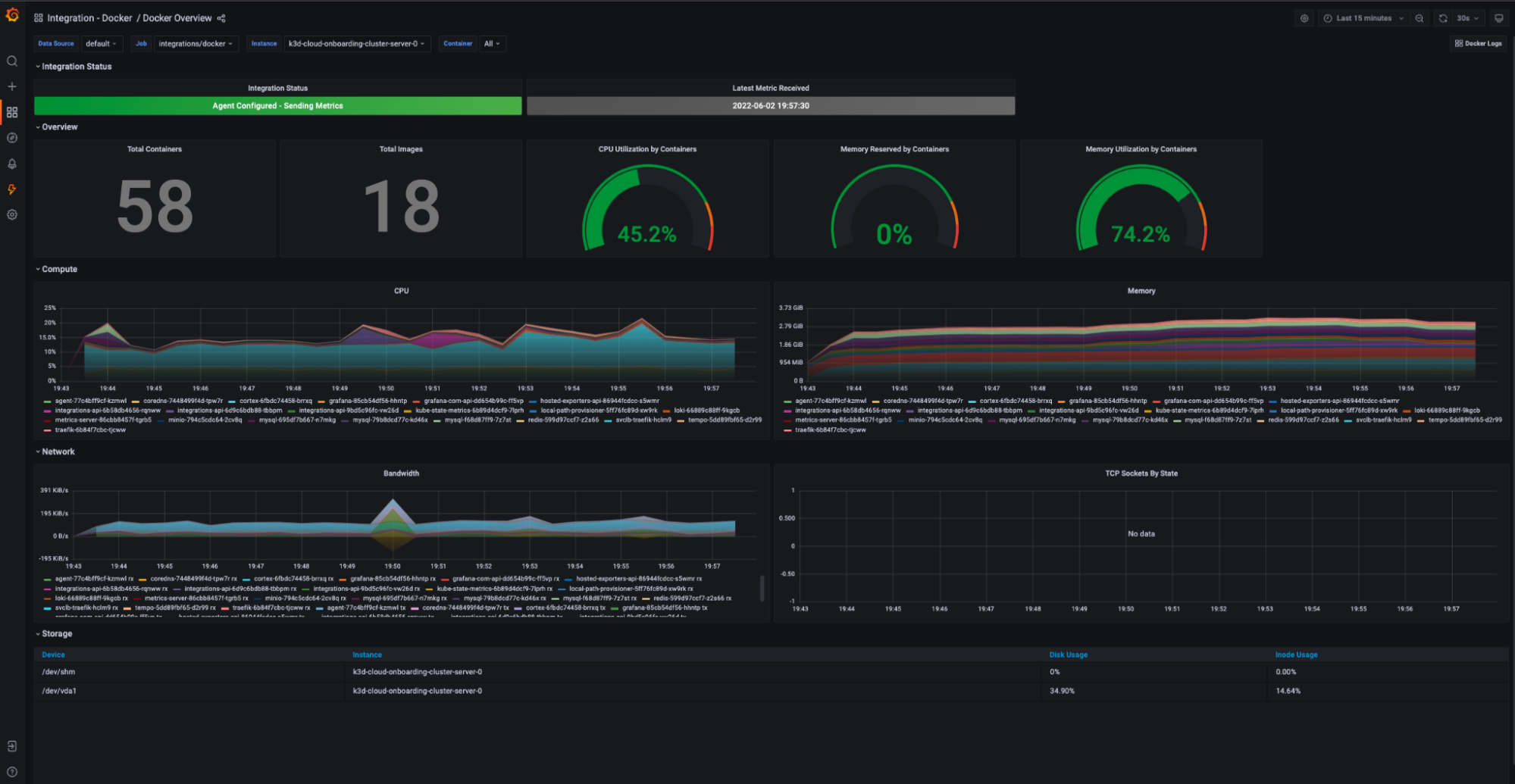

Production Monitoring: Catching Network Issues Before They Kill You

The metrics that matter:

- DNS query response times (should be < 50ms)

- Container-to-container connection success rates

- External API call success rates from containers
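For the two success-rate metrics, even a crude sampler beats having nothing. This sketch runs any check command N times and prints the percentage that succeeded. success_rate is a hypothetical name, and the check you feed it is up to you; a getent lookup via docker exec is one example.

```bash
# Hypothetical helper: run a check command N times, print the % that succeeded.
# Usage: success_rate 20 docker exec app getent hosts db
success_rate() {
  local runs="$1"; shift
  if (( runs <= 0 )); then
    echo "runs must be > 0" >&2
    return 2
  fi
  local ok=0 i
  for (( i = 0; i < runs; i++ )); do
    if "$@" >/dev/null 2>&1; then
      ok=$(( ok + 1 ))
    fi
  done
  echo $(( ok * 100 / runs ))   # integer percentage
}
```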

Essential monitoring commands:

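```bash
# Listening sockets (ss needs iproute2 in the image)
docker exec container_name ss -tulpn

# Network interface statistics (needs only cat)
docker exec container_name cat /proc/net/dev

# DNS resolution timing: read the "Query time" line (dig needs dnsutils or bind-tools)
docker exec container_name dig google.com
```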
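To turn dig output into something an alert can use, pull out the Query time line and compare it to the 50ms target from the metrics list. This sketch assumes dig's usual output format (";; Query time: 12 msec"). dns_latency_ok is a hypothetical name.

```bash
# Hypothetical helper: read dig output on stdin, check query time against a limit.
# $1 = threshold in ms (default 50). Prints the measured time.
# Returns 0 if within the limit, 1 if too slow, 2 if no timing line was found.
dns_latency_ok() {
  local limit="${1:-50}" ms
  ms=$(awk '/Query time:/ { print $4; exit }')
  if [[ -z "$ms" ]]; then
    echo "no Query time line (lookup failed?)"
    return 2
  fi
  echo "${ms}ms"
  (( ms <= limit ))
}
```

Usage: docker exec container_name dig google.com | dns_latency_ok 50.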

Most teams don't monitor container networking until it breaks in production at 3AM. Set up alerts for DNS failures and connection timeouts - they're leading indicators that your containers are about to shit the bed completely.

The reality of Docker networking: it works great in development and staging, then mysteriously breaks in production with error messages that tell you nothing useful. When containers can't talk to each other, you need a systematic debugging approach - not random configuration changes and hope.

The next section walks through the exact debugging process that finds the root cause fast when your networking is fucked and management wants answers.