Pod Security Policies (PSPs) were a clusterfuck. If you've ever spent 3 hours debugging why your pod wouldn't start because of some obscure RBAC binding you forgot about, you know what I'm talking about. Pod Security Admission (PSA) fixes that mess by ditching the policy-to-pod mapping nightmare that made PSPs impossible to debug.

PSA has been enabled by default since Kubernetes 1.23 (beta then, stable since 1.25), but it starts in "privileged" mode globally - meaning it doesn't enforce anything until you configure namespace labels. That's the smart default, because otherwise every cluster upgrade would break half your workloads.
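
For reference, that out-of-the-box behavior is roughly what you'd get by handing the API server the config below through `--admission-control-config-file`. Treat it as a sketch, not something copied from a real cluster: the `v1` config API version assumes Kubernetes 1.25 or newer (1.23 and 1.24 used `v1beta1`), and the empty exemption lists are placeholders.

```yaml
# Sketch of a cluster-wide PodSecurity config that mirrors the defaults.
# Assumes Kubernetes 1.25+ for the v1 config API version.
apiVersion: apiserver.config.k8s.io/v1
kind: AdmissionConfiguration
plugins:
- name: PodSecurity
  configuration:
    apiVersion: pod-security.admission.config.k8s.io/v1
    kind: PodSecurityConfiguration
    # Applied to any namespace that carries no pod-security labels.
    defaults:
      enforce: "privileged"
      enforce-version: "latest"
      audit: "privileged"
      audit-version: "latest"
      warn: "privileged"
      warn-version: "latest"
    # Placeholders: nothing is exempted in this sketch.
    exemptions:
      usernames: []
      runtimeClasses: []
      namespaces: []
```

Tightening `defaults` here changes the level for every unlabeled namespace at once, so most people leave it alone and work namespace by namespace.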

Why Pod Security Policies Had to Die

PSPs were deprecated in Kubernetes 1.21 and completely removed in 1.25. Good riddance. Here's why they sucked:

- RBAC hell - A PSP only applied to your pod if the pod's ServiceAccount (or whoever created the pod) had been granted `use` on it, so you ended up wiring ClusterRoles, RoleBindings, and ServiceAccounts together just to control which policy applied. When it broke, good luck debugging that maze. I spent an entire Friday tracing through RBAC bindings trying to figure out why our monitoring pods stopped working after someone "cleaned up" some old ServiceAccounts.

- Policy selection mystery - Multiple PSPs could match your pod, and Kubernetes picked one using rules almost nobody could keep in their head (policies that admitted the pod without mutating it won first, then alphabetical order by policy name). I've seen production outages caused by the "wrong" PSP getting selected after an innocent namespace cleanup.

- Cryptic errors - Error messages like `unable to validate against any pod security policy` told you absolutely nothing about what was actually wrong. You'd spend hours trying to figure out if it was RBAC, the policy itself, or some other random thing.

- No testing - You couldn't test policies without potentially breaking production. Every PSP change was a "hope and pray" moment, usually followed by "oh shit, that broke the logging daemonset."

The day PSPs stopped working in 1.25, staging went down for 3 hours because someone missed migrating the monitoring namespace. That's when I learned PSA the hard way.

How PSA Actually Works

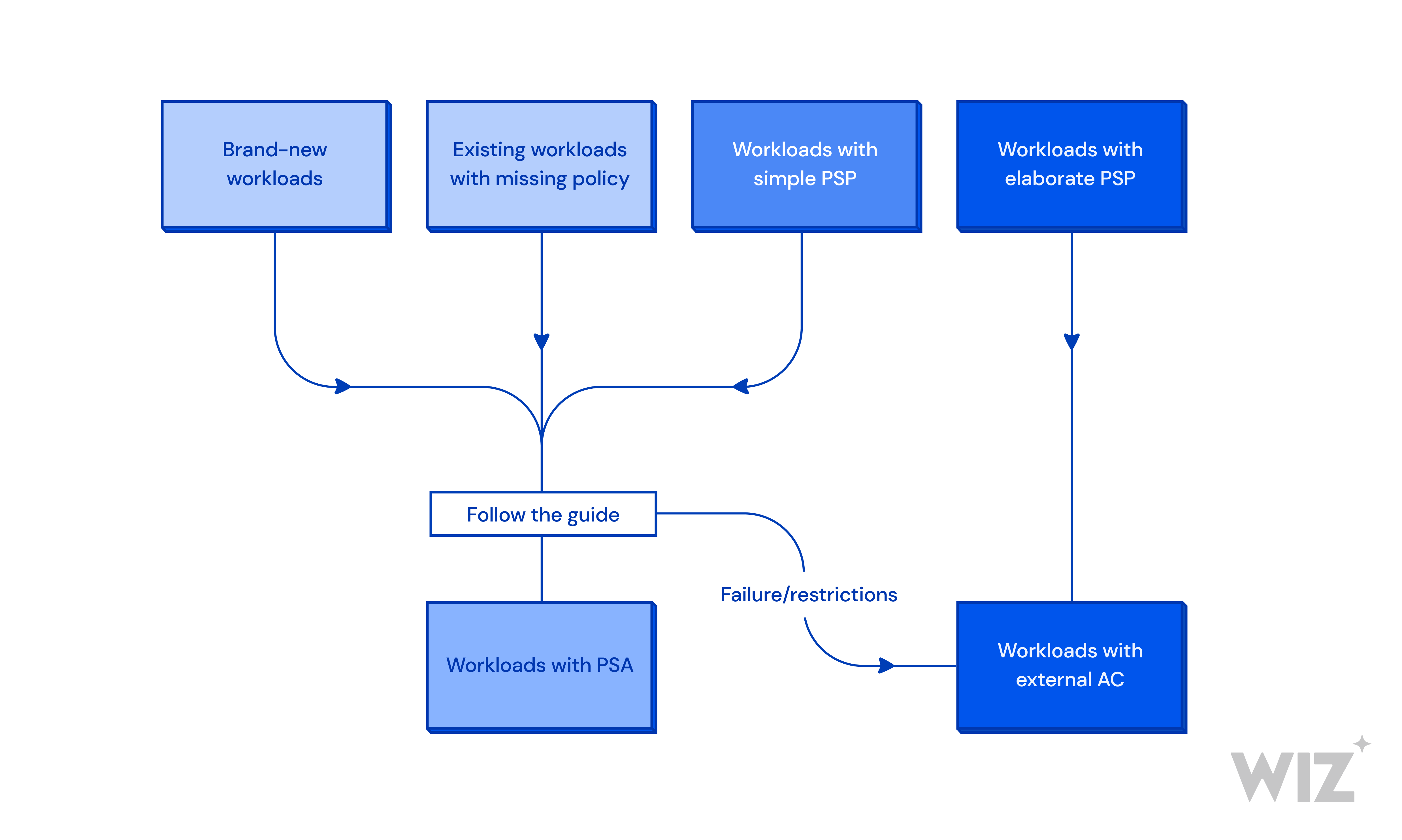

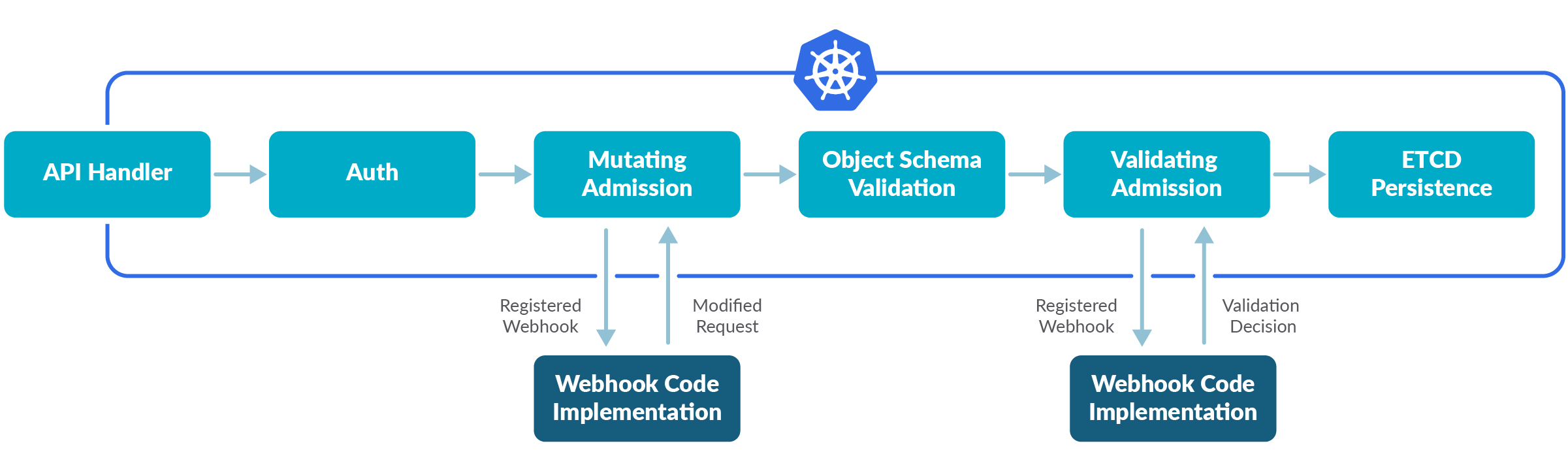

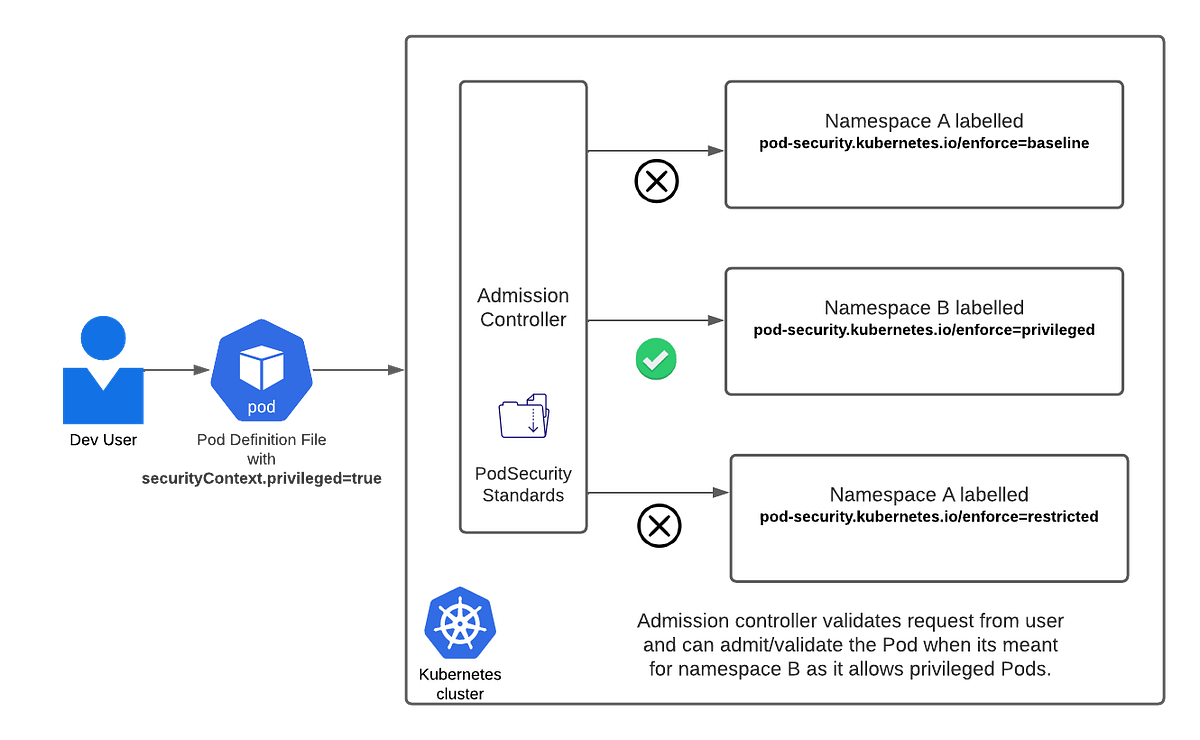

PSA is a validating admission controller built into the Kubernetes API server. Unlike PSPs that required RBAC gymnastics, PSA uses simple namespace labels to define what's allowed. That's it. No ClusterRoles, no bindings, just labels.
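
Here's a minimal sketch of what "just labels" means. The namespace name `team-a` is made up for illustration; the `pod-security.kubernetes.io/` label keys are the ones PSA reads.

```yaml
# Hypothetical namespace; only the labels matter to PSA.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    # Reject pods that violate the Baseline profile.
    pod-security.kubernetes.io/enforce: baseline
    # Follow whatever the cluster's current version of the profile is.
    pod-security.kubernetes.io/enforce-version: latest
```

Everything created in `team-a` after this is applied gets checked against Baseline. There is nothing else to bind or grant.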

When you try to create a pod, PSA checks it against the namespace's security profile before the pod even gets to the scheduler. If your pod violates the policy, you get an error immediately instead of watching it fail mysteriously later.

The key difference: PSA doesn't validate existing pods. Your old shit keeps running even if it violates the new policy. This saved my ass during our migration - we could enable PSA gradually without taking down production workloads.

The Three Security Profiles

PSA gives you three security levels. Pick your poison:

Privileged - Anything goes. Your containers can be root, mount the host filesystem, access the host network - basically do whatever the fuck they want. Use this for system pods (kube-proxy, CNI drivers) and when you absolutely need privileged access. Most legacy apps end up here because nobody wants to fix their shit.

Baseline - Blocks the obviously dangerous stuff like privileged containers and host networking, but still allows root users. This is where most production workloads live because developers haven't figured out how to run their apps as non-root yet. It's the "good enough" security level.

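Restricted - The paranoid security level. Forces non-root users, blocks privilege escalation, drops all Linux capabilities (only NET_BIND_SERVICE can be added back), requires a seccomp profile, and limits which volume types you can mount. A read-only root filesystem is not part of the profile, though it's a sensible extra. Your app probably won't work with this unless it was designed with security in mind from day one. Great for new cloud-native apps, nightmare for legacy stuff.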
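
To make the gap between the levels concrete, here's a sketch of a pod that should pass Restricted as the Pod Security Standards describe it. The pod name, namespace, image, and UID are all placeholders, and your app may need more (writable scratch volumes, for example) before it actually runs like this.

```yaml
# Sketch of a pod intended to satisfy the Restricted profile.
# Name, namespace, image, and UID are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: restricted-demo
  namespace: team-a
spec:
  securityContext:
    runAsNonRoot: true          # refuse to start as UID 0
    runAsUser: 10001            # any non-zero UID
    seccompProfile:
      type: RuntimeDefault      # Restricted wants RuntimeDefault or Localhost
  containers:
  - name: app
    image: registry.example.com/app:1.0
    securityContext:
      allowPrivilegeEscalation: false
      capabilities:
        drop: ["ALL"]           # only NET_BIND_SERVICE may be added back
      # Extra hardening beyond what the Restricted checks look at.
      readOnlyRootFilesystem: true
```

Strip out the `securityContext` blocks and the same pod still passes Baseline, which is exactly why so many workloads stop there.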

Why PSA Actually Works

Here's what makes PSA better than the PSP shitshow:

- Namespace labels are explicit - You can run `kubectl get namespace -o yaml` and immediately see what security policy applies. No more guessing which of your 47 PSPs is actually being used.

- Built into the API server - No more webhook timeouts taking down your deployments. PSA runs inside kube-apiserver, so it's as reliable as the rest of Kubernetes.

- Three enforcement modes - `enforce` blocks stuff, `audit` records violations in the audit log, `warn` shows warnings to users. You can enable audit mode first to see what would break before you enforce anything.

- Better error messages - Instead of cryptic PSP errors, you get messages like `violates PodSecurity "restricted:latest": securityContext.runAsNonRoot != true`. Still not great, but way better than before.
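
The modes are independent labels, so you can enforce one level while auditing and warning at a stricter one. Here's a sketch of that staged rollout, again using the made-up namespace `team-a`. The pinned version `v1.25` is just an example value.

```yaml
# Sketch: enforce Baseline now, preview what Restricted would break.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    # Hard block: pods violating Baseline are rejected.
    pod-security.kubernetes.io/enforce: baseline
    # Pin the enforced rules so a cluster upgrade can't tighten them silently.
    pod-security.kubernetes.io/enforce-version: v1.25
    # Soft checks: Restricted violations are logged and shown, not blocked.
    pod-security.kubernetes.io/audit: restricted
    pod-security.kubernetes.io/warn: restricted
```

Once the audit log and the warnings go quiet, bumping `enforce` to `restricted` is a one-label change.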

The downside: PSA is namespace-level only. You can't have dev and prod workloads in the same namespace with different security requirements anymore. But honestly, if you were doing that with PSPs, you were probably doing it wrong anyway.

If you want to dig deeper into this stuff:

- Pod Security Standards specification - Detailed breakdown of what each security level blocks

- Kubernetes security best practices - Overall security guidance for Kubernetes clusters

- Admission controller architecture - How PSA fits into the Kubernetes request flow

- Security context best practices - How to configure pods to work with PSA

- Namespace organization patterns - Structuring your cluster for PSA

- Kubernetes RBAC guide - Understanding permissions and security contexts

- Container security fundamentals - Why these restrictions matter for security