K8s security out of the box is a fucking joke. I'm talking `--anonymous-auth=true` enabled by default on kubelet until v1.10, etcd running without TLS encryption, and an API server insecure port on 8080 that skips authentication entirely. The defaults scream "we care more about your developer experience than not getting breached."

kube-bench crawls through your cluster's guts and checks about 100 different ways your security is fucked. Learned this the hard way after spending 6 hours manually checking configs when a PCI audit flagged our payment processing cluster as "fundamentally insecure." The auditor literally said our k8s v1.8 cluster was "designed for attackers" because we had the default kubelet config from the getting started guide.

What Actually Gets Checked

Here's what kube-bench catches that will bite you in production:

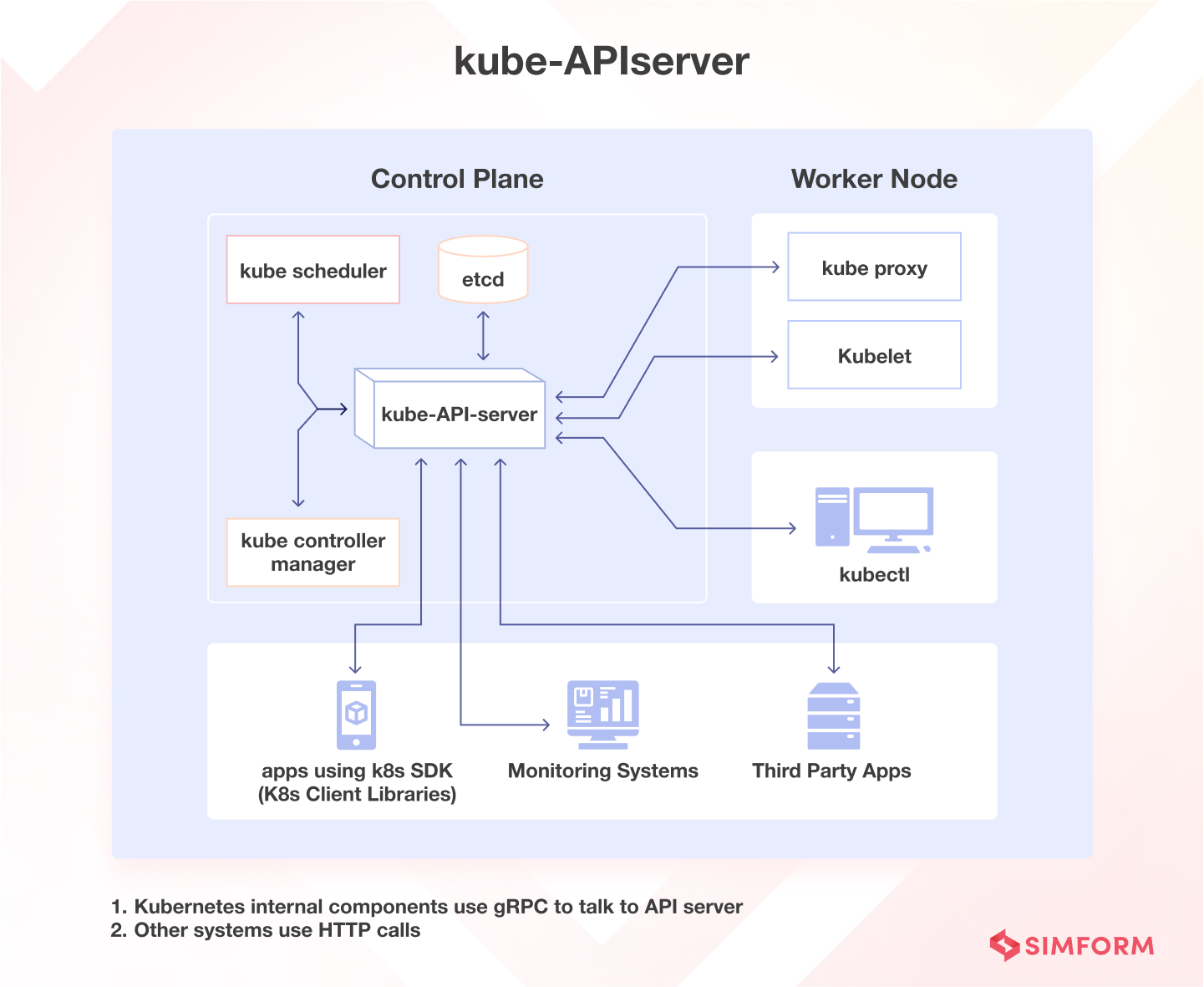

API Server Shit That'll Get You Owned:

- `--anonymous-auth=true` still running (the default since K8s v1.6 whenever the authorization mode isn't `AlwaysAllow`)

- `--authorization-mode=AlwaysAllow` because someone was too lazy to set up RBAC

- Missing `--enable-admission-plugins=PodSecurityPolicy` (deprecated in v1.21 and removed in v1.25, but still better than nothing on clusters old enough to have it)

- `--insecure-bind-address=0.0.0.0` - might as well post your cluster IP on 4chan
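If you want to eyeball the same flags yourself, here's a minimal Python sketch that parses a kube-apiserver command line and reports the four problems from the list above. To be clear, this is my own illustration, not how kube-bench implements its checks, and the function names (`parse_flags`, `audit_apiserver`) are made up for the example.

```python
import shlex


def parse_flags(cmdline: str) -> dict:
    """Turn '--flag=value' tokens into a dict; bare flags map to 'true'."""
    flags = {}
    for token in shlex.split(cmdline):
        if token.startswith("--"):
            name, _, value = token.partition("=")
            flags[name] = value or "true"
    return flags


def audit_apiserver(cmdline: str) -> list:
    """Return one finding per risky API server setting named in the list above."""
    flags = parse_flags(cmdline)
    findings = []

    if flags.get("--anonymous-auth") == "true":
        findings.append("--anonymous-auth=true")

    # --authorization-mode takes a comma-separated list, e.g. "Node,RBAC".
    modes = flags.get("--authorization-mode", "").split(",")
    if "AlwaysAllow" in modes:
        findings.append("--authorization-mode includes AlwaysAllow")

    if flags.get("--insecure-bind-address") == "0.0.0.0":
        findings.append("--insecure-bind-address=0.0.0.0")

    plugins = flags.get("--enable-admission-plugins", "").split(",")
    if "PodSecurityPolicy" not in plugins:
        findings.append("PodSecurityPolicy admission plugin not enabled")

    return findings
```

Feed it whatever `ps` shows for the kube-apiserver process on a control plane node. An empty list only means none of these four showed up, not that you're safe.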

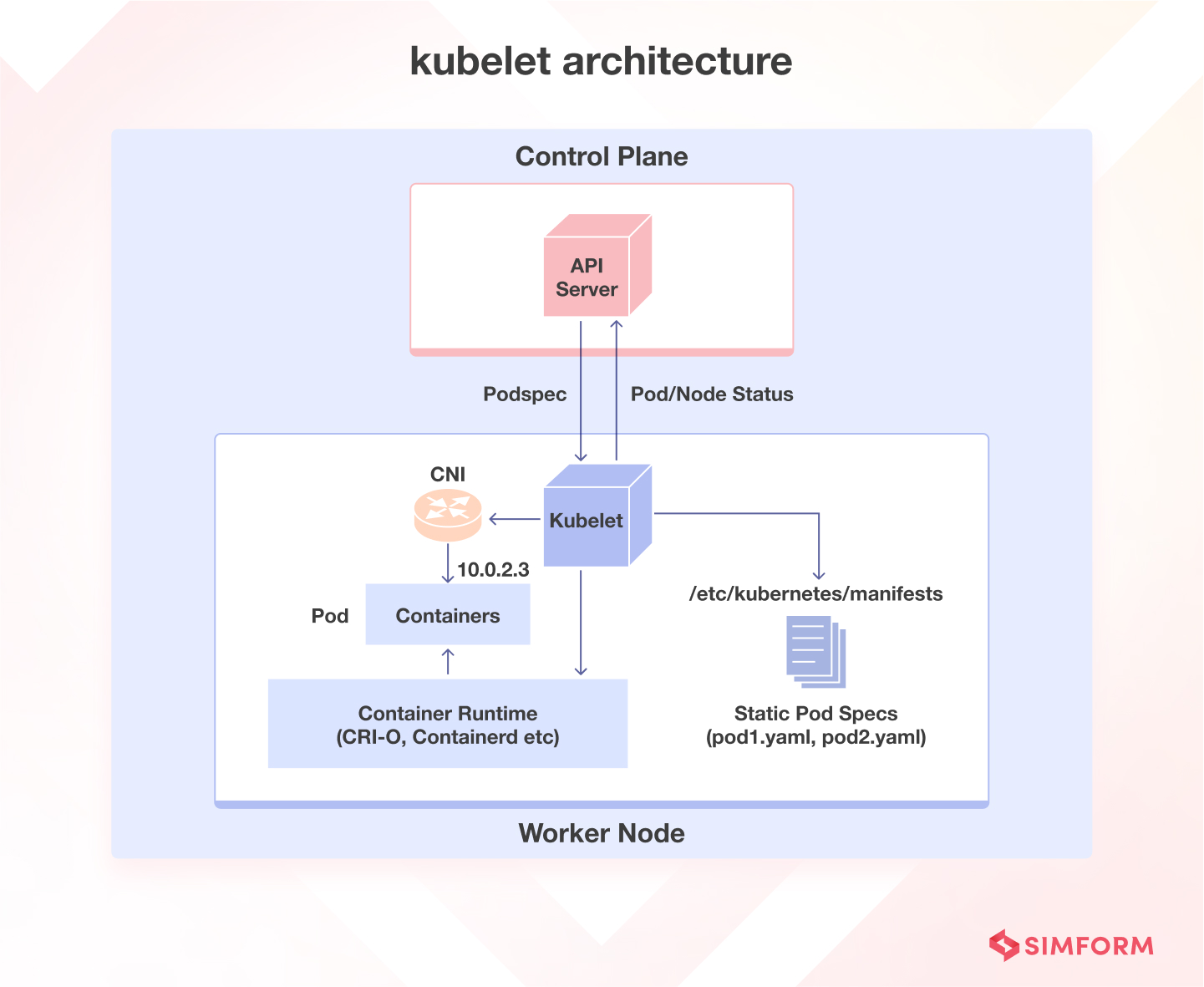

kubelet Fuckups That Keep Me Up at Night:

- Port `10255` wide open for anyone to scrape (disabled by default in v1.10, finally)

- `--allow-privileged=true` because someone needed to run a privileged pod "just once"

- `--anonymous-auth=true` on kubelet (why would you even do this?)

- Config file at `/var/lib/kubelet/config.yaml` with permissions `644` instead of `600` - because why not let everyone read your cluster secrets?
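The 644-versus-600 complaint boils down to a bitmask comparison: does the file grant any permission bit the allowed mode doesn't? Here's a rough sketch of that idea. The helper names are mine, and I'm assuming "at least as strict as the target mode" is the rule you care about.

```python
import os
import stat


def mode_of(path: str) -> int:
    """Return only the permission bits (e.g. 0o644) of a file or directory."""
    return stat.S_IMODE(os.stat(path).st_mode)


def is_too_permissive(mode: int, max_mode: int) -> bool:
    """True if `mode` grants any permission bit that `max_mode` does not."""
    return (mode & ~max_mode) != 0
```

So `is_too_permissive(0o644, 0o600)` is true because group and other can read, while `0o400` against `0o600` passes since it's stricter. On a node you'd call `is_too_permissive(mode_of("/var/lib/kubelet/config.yaml"), 0o600)`.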

etcd - The Crown Jewel Everyone Forgets to Secure:

- `--client-cert-auth=false` because someone thought TLS was "too complicated"

- Data directory at `/var/lib/etcd` with `755` permissions - might as well make it a public FTP server

- Peer-to-peer traffic unencrypted because "it's internal network traffic, what could go wrong?"
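For the etcd side, the two things worth confirming in the process arguments are client cert auth and a cert/key pair for peer traffic. A hedged sketch follows: `etcd_findings` is a hypothetical helper, and it only looks at `--client-cert-auth`, `--peer-cert-file` and `--peer-key-file`, which is nowhere near everything the benchmark covers for etcd.

```python
def etcd_findings(args: list) -> list:
    """Flag missing client cert auth and missing peer TLS material in etcd args."""
    flags = {}
    for arg in args:
        if arg.startswith("--"):
            name, _, value = arg.partition("=")
            # A bare boolean flag such as --client-cert-auth means "true".
            flags[name] = value or "true"

    findings = []
    if flags.get("--client-cert-auth") != "true":
        findings.append("client cert auth is not enabled")
    if not (flags.get("--peer-cert-file") and flags.get("--peer-key-file")):
        findings.append("no peer cert/key configured, peer traffic is likely plaintext")
    return findings
```

The certificate paths you pass in are whatever your cluster uses; the sketch doesn't check that the files exist or that the certs are valid.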

Node-Level Ways to Get Pwned:

- Docker socket at `/var/run/docker.sock` mounted with `666` permissions because "containers need access"

- `vm.overcommit_memory=1` allowing memory bombs that can crash your nodes

- No AppArmor profiles because "they break stuff" and no seccomp because "too much work"
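If you just want to see what a node is currently set to for a kernel parameter like `vm.overcommit_memory`, you can read it straight out of `/proc/sys`. This is a Linux-only sketch with made-up helper names, and it only reports the value. It makes no claim about how kube-bench checks it or what the right value is for your setup.

```python
from pathlib import Path


def sysctl_path(name: str) -> Path:
    """Map a dotted sysctl name to its file under /proc/sys."""
    return Path("/proc/sys") / name.replace(".", "/")


def read_sysctl(name: str):
    """Return the current value as a string, or None if it can't be read."""
    try:
        return sysctl_path(name).read_text().strip()
    except OSError:
        return None
```

Call `read_sysctl("vm.overcommit_memory")` on the node itself. Inside a container you may get the host's value or nothing at all, depending on how `/proc` is mounted.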

This motherfucker runs about 100 checks total. Takes maybe 30 seconds unless you're on a potato cluster. About 40% of checks fail on EKS because Amazon won't let you see master node configs (learned this after 3 hours of debugging "permission denied" errors).
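To put an actual number on how many checks fail on your own cluster, you can have kube-bench emit JSON with its `--json` flag and tally the results. The exact JSON layout differs between kube-bench versions, so this sketch assumes only that each check result is an object with a string `status` field. It walks the whole document and counts those rather than hard-coding a schema.

```python
import json
from collections import Counter


def tally_statuses(node, counts=None) -> Counter:
    """Recursively count every string-valued "status" field in parsed JSON."""
    if counts is None:
        counts = Counter()
    if isinstance(node, dict):
        status = node.get("status")
        if isinstance(status, str):
            counts[status] += 1
        for value in node.values():
            tally_statuses(value, counts)
    elif isinstance(node, list):
        for item in node:
            tally_statuses(item, counts)
    return counts


def failure_ratio(counts: Counter) -> float:
    """Fraction of counted checks whose status is FAIL (0.0 if nothing counted)."""
    total = sum(counts.values())
    return counts["FAIL"] / total if total else 0.0
```

Save the JSON output to a file, `json.load` it, and pass the result to `tally_statuses`. If your version's output has other `status` fields that aren't check results, the counts will be off, so sanity-check against the summary kube-bench prints.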

The CIS Kubernetes Benchmark is basically a 300-page PDF of paranoid security requirements that auditors cum over.