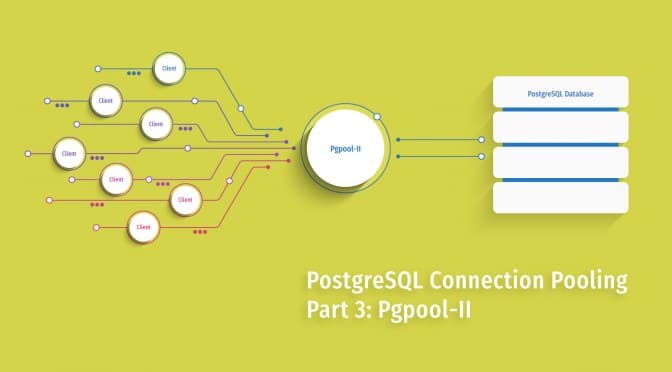

Pgpool-II is a proxy that sits between your application and PostgreSQL databases, handling connection pooling, read load balancing, and failover. Think of it as a bouncer at a club - it manages who gets in, where they go, and kicks people out when they overstay their welcome.

The Reality of Process-Based Architecture

Pgpool-II uses a process-based model like PostgreSQL itself, spawning up to 32 child processes by default (num_init_children). Each process uses about 2MB of RAM baseline, so you're looking at 64MB just for the proxy before it does anything useful. Each child serves one client at a time, so once every child is busy, new clients sit in the listen queue and eventually time out or get refused - learned this the hard way during a traffic spike that took down our staging environment for 2 hours.

The connection pooling architecture differs significantly from PgBouncer, which runs as a single event-driven process with shared pools. Each Pgpool process maintains its own pool of backend connections, so an idle connection cached in one child can't be reused by another, and you end up holding more backend connections than a shared pool would need.

The parent process forks children to handle connections, and here's where it gets fun: if a child process crashes (which happens more often than you'd like with SSL issues), the parent kills all other children and respawns them. So one bad connection can temporarily murder your entire connection pool.

Connection Pooling That Actually Pools

Unlike application-level pooling where your framework pretends to be smart, Pgpool-II maintains persistent connections to your PostgreSQL servers on the proxy side. This saves you from paying the connection handshake, roughly 50ms, on every new connection. In practice, we saw connection establishment time drop from 45ms to under 1ms for pooled connections.

But here's the catch: connections are per-process, not shared. If you have 32 Pgpool processes (num_init_children = 32) and 4 backend servers, you could theoretically open 128 connections to your database cluster even with max_pool = 1. Set max_pool = 4 and you're looking at 512 potential connections, or 128 per backend, which already blows past PostgreSQL's default max_connections of 100. Do the math before your DBA murders you.
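Here's that math as a quick sanity check you can run before touching pgpool.conf. It's a minimal sketch: num_init_children and max_pool are the real Pgpool-II parameter names, but the PgpoolSizing class and its methods are made up for illustration, and the default of 3 reserved superuser connections assumes a stock PostgreSQL setup.

```python
from dataclasses import dataclass


@dataclass
class PgpoolSizing:
    """Hypothetical helper for worst-case backend connection counts."""
    num_init_children: int  # Pgpool child processes
    max_pool: int           # cached backend connections per child, per backend
    backends: int           # PostgreSQL servers behind Pgpool

    def per_backend(self) -> int:
        # Every child can hold max_pool connections to each backend.
        return self.num_init_children * self.max_pool

    def cluster_total(self) -> int:
        return self.per_backend() * self.backends

    def fits(self, max_connections: int, superuser_reserved: int = 3) -> bool:
        # Assumption: each backend must keep its reserved superuser slots free.
        return self.per_backend() <= max_connections - superuser_reserved


sizing = PgpoolSizing(num_init_children=32, max_pool=4, backends=4)
print(sizing.per_backend())               # 128
print(sizing.cluster_total())             # 512
print(sizing.fits(max_connections=100))   # False
```

If fits() comes back False, either raise max_connections on every backend or shrink num_init_children or max_pool until it doesn't.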

Query Routing (When It Works)

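Pgpool-II can automatically detect SELECT statements and route them to read replicas while sending writes to the primary. This sounds great until you realize that, by default, the load-balancing node is picked once per session, not per statement (statement_level_load_balance, added in 4.1, changes this, but it's off by default).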

The load balancer parses each SQL statement to decide where it can go, but the conditions a query has to meet before it qualifies are more complex than you'd expect. Write-ups on enterprise load balancing techniques point out that pgpool's query-aware routing has limitations compared to simpler approaches.

If your web app holds connections open (like every single web framework does), your "load balancing" becomes "randomly pick a server and hammer it for the next 10 minutes." We've seen scenarios where one replica gets 80% of the read traffic while others sit idle because of long-lived connections. Load balancing comparisons often highlight this session affinity issue.
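To see why long-lived connections wreck the distribution, here's a toy simulation. It's a sketch under loud assumptions: replicas are picked uniformly at random (real Pgpool-II uses per-backend weights), the session sizes are invented, and busiest_share is a hypothetical helper, not anything Pgpool ships.

```python
import random
from collections import Counter


def busiest_share(session_query_counts, replicas, per_statement, seed=0):
    """Return the fraction of all queries that land on the busiest replica."""
    rng = random.Random(seed)
    hits = Counter()
    for query_count in session_query_counts:
        # Session-level balancing: the replica is chosen once, at connect time.
        pinned = rng.randrange(replicas)
        for _ in range(query_count):
            # Statement-level balancing re-rolls the choice for every query.
            target = rng.randrange(replicas) if per_statement else pinned
            hits[target] += 1
    return max(hits.values()) / sum(hits.values())


# One chatty long-lived connection plus a few quiet ones (made-up numbers).
sessions = [5000, 200, 150, 100, 50]

print(busiest_share(sessions, replicas=3, per_statement=False))
print(busiest_share(sessions, replicas=3, per_statement=True))
```

With session-level routing, whichever replica catches the 5000-query session handles at least 90% of the traffic no matter how the dice fall. With per-statement routing the busiest replica stays close to a third.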

Failover: The Part That Actually Matters

When your primary database shits the bed, Pgpool-II can automatically fail over to a replica. The good news: it usually works. The bad news: every client connection gets dropped during failover, so your application better handle ECONNRESET gracefully.
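What "handle it gracefully" can look like, as a minimal sketch: reconnect and retry with exponential backoff. The run_with_reconnect helper and its parameters are hypothetical, and it catches Python's built-in connection errors only. With a real driver you'd catch that driver's own error class instead (psycopg2.OperationalError, for example), and you should only retry work that is safe to run twice.

```python
import time

# Built-in errors that a dropped socket typically surfaces as.
RETRYABLE = (ConnectionResetError, ConnectionAbortedError, BrokenPipeError)


def run_with_reconnect(connect, work, attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call work(connect()) and retry on dropped connections.

    connect: callable returning a fresh connection
    work:    callable taking that connection; must be safe to re-run
    """
    last_error = None
    for attempt in range(attempts):
        try:
            conn = connect()  # always reconnect; the old socket is dead
            return work(conn)
        except RETRYABLE as exc:
            last_error = exc
            if attempt < attempts - 1:
                # Back off: 0.5s, 1s, 2s, ... so failover has time to finish.
                sleep(base_delay * 2 ** attempt)
    raise last_error
```

Size attempts and base_delay so the total wait comfortably covers your failover window; the defaults here add up to 7.5 seconds of waiting, which is on the short side.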

Pgpool-II uses SELECT pg_is_in_recovery() to identify the primary and relies on health checks to notice when a node has died. Health checks are off by default (health_check_period = 0), so you have to turn them on; with typical intervals, you're looking at 10-30 seconds of downtime while Pgpool figures out what happened. Set health checks too aggressive and you'll get false positives from network hiccups. Broader write-ups on PostgreSQL high availability strategies give context for when automatic failover makes sense.
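You can ballpark the detection window from the health check settings. This is a rough model, not Pgpool's actual scheduling logic: it assumes the node dies right after a successful check, every probe hangs for the full timeout, and it ignores however long your failover script takes. The four arguments mirror the health_check_period, health_check_timeout, health_check_max_retry, and health_check_retry_delay settings; the function itself is made up for illustration.

```python
def worst_case_detection_seconds(period, timeout, max_retry, retry_delay):
    """Rough upper bound on the time before a dead node is declared down."""
    wait_for_next_check = period                 # failure just missed a check
    failed_attempts = (max_retry + 1) * timeout  # first probe plus retries
    pauses = max_retry * retry_delay             # sleeps between retries
    return wait_for_next_check + failed_attempts + pauses


# Example values only, not recommendations.
print(worst_case_detection_seconds(period=10, timeout=5, max_retry=2, retry_delay=1))  # 27
```

Dropping max_retry to 0 cuts this example to 15 seconds, but then a single slow probe is enough to trigger a failover.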

The watchdog feature coordinates multiple Pgpool instances so they agree on failover decisions, but production experiences show that it's hard to configure properly without opening the door to split-brain scenarios.

SSL Performance Hell

SSL overhead with OpenSSL 3.0.2 will destroy your CPU. We saw 300% CPU utilization on a 4-core box (three of the four cores pegged) just handling SSL handshakes. Pgpool-II 4.6.3 finally addresses some of this, but you'll still want to monitor CPU usage carefully if you're terminating SSL at Pgpool.

The SSL performance issues are well-documented in the GitHub repository, where users report 75% CPU utilization with SSL enabled. This is tied to broader OpenSSL 3.0 performance issues that affect many applications.

Pro tip: terminate SSL at a load balancer in front of Pgpool if you can. Your CPU will thank you.