I've been optimizing browser automation since Selenium WebDriver was the only game in town. OpenAI's remote browser architecture creates performance challenges that most developers don't see coming until they're debugging production failures at 3am.

Why Remote Browsing Murders Performance

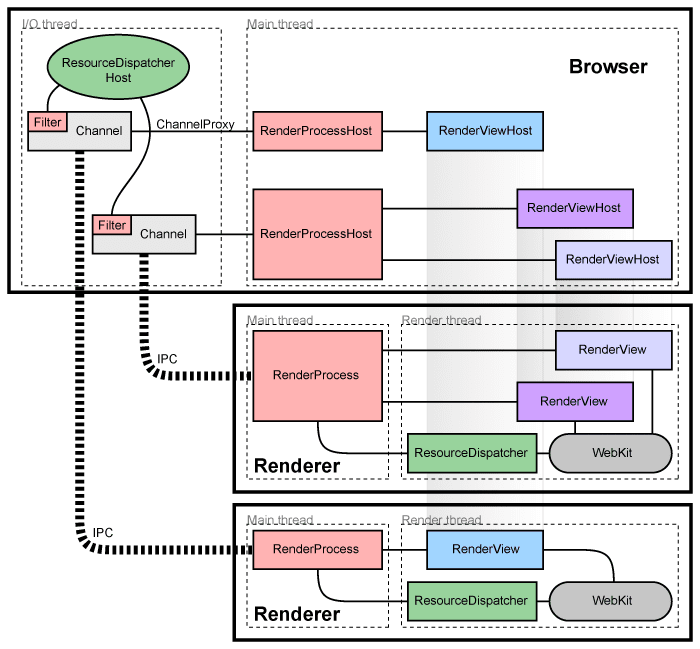

Unlike Playwright or Puppeteer, which run on your own infrastructure, OpenAI's browser executes every action on OpenAI's remote servers. Each click follows this path:

- Take screenshot of current page state

- Upload screenshot to OpenAI's vision model

- AI analyzes image and decides what to click

- Execute action on remote browser

- Wait for page response

- Take new screenshot

- Repeat for every single interaction
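The path above amounts to an observe-decide-act loop. The sketch below illustrates that loop and records how long each phase takes. It is not OpenAI's implementation. `takeScreenshot`, `decideAction`, `executeAction`, and `waitForPage` are hypothetical callbacks standing in for whatever your integration actually exposes.

```javascript
// Hypothetical observe-decide-act loop mirroring the steps above.
// Every callback is a placeholder; none of these names are OpenAI APIs.
async function runAgentLoop({ takeScreenshot, decideAction, executeAction, waitForPage }, maxSteps = 50) {
  const timings = [];
  for (let step = 0; step < maxSteps; step++) {
    const t0 = Date.now();
    const screenshot = await takeScreenshot();     // capture current page state
    const t1 = Date.now();
    const action = await decideAction(screenshot); // upload + model decision
    const t2 = Date.now();
    if (action === null) break;                    // model reports the task is done
    await executeAction(action);                   // act on the remote browser
    await waitForPage();                           // wait for the page to respond
    const t3 = Date.now();
    timings.push({ step, screenshotMs: t1 - t0, decideMs: t2 - t1, actMs: t3 - t2 });
  }
  return timings; // one entry per executed action
}
```

Each interaction pays for every phase in sequence. Nothing in the loop can be skipped or overlapped, which is why the per-action cost adds up so quickly.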

That's a minimum of 2-3 seconds per action, often much longer. I timed a simple 5-step checkout flow: 47 seconds total, or roughly 9 seconds per step. A human does the same thing in under a minute, so the automation offers little or no speed advantage over manual work.

The latency compounds with workflow complexity. Multi-step processes that should take 2 minutes stretch to 15+ minutes because every micro-interaction requires a full AI decision cycle.
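You can see how this compounds with back-of-the-envelope arithmetic: total time is roughly the number of decision cycles multiplied by the seconds each one takes. The sketch below makes two assumptions. First, every action costs one full cycle. Second, a retry adds one whole extra cycle. The inputs are yours to fill in; they are not measured values.

```javascript
// Rough wall-clock estimate for an agent-driven workflow.
// Assumes every action (and every retry) costs one full screenshot/decide/act cycle.
function estimateWorkflowMinutes(actions, secondsPerAction, retryRate = 0) {
  // retryRate: expected fraction of actions that need one extra cycle.
  const cycles = actions * (1 + retryRate);
  return (cycles * secondsPerAction) / 60;
}

// The 47-second, 5-step checkout above works out to about 9.4 s per step.
console.log(estimateWorkflowMinutes(5, 9.4).toFixed(2)); // "0.78"
// A 100-action process at 9 s per action is already 15 minutes.
console.log(estimateWorkflowMinutes(100, 9)); // 15
```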

Peak Hours Will Fuck Your SLA

API performance monitoring becomes critical during peak traffic periods, when OpenAI's infrastructure experiences higher load.

OpenAI's browser infrastructure gets hammered during US business hours (9am-5pm PST). Response times spike from 2-3 seconds to 8-10 seconds per action during peak usage.
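To catch these spikes in your own pipeline rather than waiting for a status page, keep a rolling window of per-action latencies. Then alert when the median drifts out of the normal 2-3 second band. The sketch below does this with a hypothetical `LatencyMonitor` class. The 5-second threshold and 50-sample window are arbitrary assumptions that you should tune.

```javascript
// Rolling per-action latency tracker that flags sustained slowdowns.
class LatencyMonitor {
  constructor(windowSize = 50, thresholdMs = 5000) {
    this.windowSize = windowSize;   // how many recent actions to keep
    this.thresholdMs = thresholdMs; // median above this counts as degraded
    this.samples = [];
  }

  record(durationMs) {
    this.samples.push(durationMs);
    if (this.samples.length > this.windowSize) this.samples.shift(); // drop oldest sample
  }

  median() {
    if (this.samples.length === 0) return 0;
    const sorted = [...this.samples].sort((a, b) => a - b);
    const mid = Math.floor(sorted.length / 2);
    return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
  }

  isDegraded() {
    return this.median() > this.thresholdMs;
  }
}
```

Using the median rather than the mean keeps one slow outlier from triggering an alert. A sustained shift to 8-10 seconds still flips the flag quickly.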

Run your automation during off-peak hours if possible. I moved a client's daily data entry automation to 3am PST, and its failure rate dropped from 40% during business hours to 12% at night. Same workflow, same logic, just different server load.
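If you can't move a job to a fixed off-peak slot, a cheap alternative is a guard that checks the current Pacific-time hour before starting. This sketch uses the built-in `Intl.DateTimeFormat` with the `America/Los_Angeles` zone, so it follows daylight saving time automatically. The 9-17 window mirrors the peak hours above and is an assumption to adjust.

```javascript
// Hour (0-23) in US Pacific time for a given instant; handles PST/PDT.
function pacificHour(date = new Date()) {
  const parts = new Intl.DateTimeFormat('en-US', {
    timeZone: 'America/Los_Angeles',
    hour: 'numeric',
    hourCycle: 'h23',
  }).formatToParts(date);
  return Number(parts.find((p) => p.type === 'hour').value) % 24;
}

// True during the assumed peak window (9am-5pm Pacific).
function isPeakHour(date = new Date(), startHour = 9, endHour = 17) {
  const hour = pacificHour(date);
  return hour >= startHour && hour < endHour;
}
```

A scheduler can call `isPeakHour()` before kicking off a batch and defer the batch if the answer is `true`.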

OpenAI's status page shows regular performance degradation during peak times, though they don't break out browser automation metrics separately. Tools like Datadog APM and New Relic can help you track these patterns in your own infrastructure. Grafana Cloud offers excellent OpenAI API monitoring dashboards that show response-time trends.

Memory Leaks in Long-Running Sessions

Browser sessions that run longer than 30 minutes start showing performance degradation. The remote browser accumulates JavaScript memory leaks, DOM bloat, and cache buildup that you can't clear programmatically.

Solution: Kill and restart browser sessions every 20-25 minutes for long workflows. Yes, it's hacky. Yes, it works. I built a session rotation system that cycles browser instances before they hit memory limits:

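```javascript
// Session rotation to prevent memory leaks.
// createSession/closeSession are hypothetical hooks: plug in whatever
// starts and stops a remote browser session in your setup.
class BrowserSessionManager {
  constructor(createSession, closeSession, maxSessionTime = 20 * 60 * 1000) { // 20 minutes
    this.createSession = createSession;
    this.closeSession = closeSession;
    this.maxSessionTime = maxSessionTime;
    this.currentSession = null;
    this.sessionStartTime = null;
  }

  async getSession() {
    if (this.shouldRotateSession()) {
      await this.rotateSession();
    }
    return this.currentSession;
  }

  shouldRotateSession() {
    return !this.currentSession ||
      (Date.now() - this.sessionStartTime) > this.maxSessionTime;
  }

  // Close the old session (if any) and start a fresh one.
  async rotateSession() {
    if (this.currentSession) {
      await this.closeSession(this.currentSession);
    }
    this.currentSession = await this.createSession();
    this.sessionStartTime = Date.now();
  }
}
```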

Token Usage Optimization

Monitor your OpenAI token usage through their platform dashboard to track costs and identify expensive operations.

Every screenshot costs tokens for image analysis, plus additional tokens for action planning and error recovery. A single form field can burn 200-500 tokens depending on page complexity. The OpenAI Tokenizer helps estimate costs, while token-counting libraries such as tiktoken enable programmatic cost tracking.
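To find which operations eat the budget, tally tokens per operation type in your own code. The sketch below assumes you can read input and output token counts from each response. OpenAI API responses carry a `usage` object, but check the exact field names for the endpoint you call. `TokenLedger` and its methods are hypothetical.

```javascript
// Hypothetical per-operation token ledger for spotting expensive steps.
class TokenLedger {
  constructor() {
    this.totals = new Map(); // operation name -> { calls, inputTokens, outputTokens }
  }

  record(operation, inputTokens, outputTokens) {
    const entry = this.totals.get(operation) ?? { calls: 0, inputTokens: 0, outputTokens: 0 };
    entry.calls += 1;
    entry.inputTokens += inputTokens;
    entry.outputTokens += outputTokens;
    this.totals.set(operation, entry);
  }

  // Operations sorted by total tokens, most expensive first.
  topOperations(limit = 5) {
    return [...this.totals.entries()]
      .map(([operation, e]) => ({ operation, ...e, total: e.inputTokens + e.outputTokens }))
      .sort((a, b) => b.total - a.total)
      .slice(0, limit);
  }
}

// Illustrative numbers only, not measurements.
const ledger = new TokenLedger();
ledger.record('fill_field', 350, 40);
ledger.record('fill_field', 420, 35);
ledger.record('retry_after_error', 900, 60);
console.log(ledger.topOperations(1)[0].operation); // "retry_after_error"
```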

Reduce screenshot frequency: Configure longer delays between actions to let pages fully load. Taking screenshots of partially loaded pages forces the AI to guess about missing elements, leading to failures that require expensive retry cycles.
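One way to apply this advice is to poll a cheap readiness check before paying for a screenshot. The sketch below is generic. `isReady` is a hypothetical callback; for example, it could compare two consecutive page-state readings. The interval and timeout values are placeholders.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Poll isReady() until it returns true or the timeout expires.
// Resolves to true if the page became ready, false on timeout.
async function waitUntilReady(isReady, { intervalMs = 500, timeoutMs = 10000 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    if (await isReady()) return true;
    await sleep(intervalMs);
  }
  return false;
}
```

Returning `false` instead of throwing lets the caller choose: screenshot anyway, retry, or bail out.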

Batch similar actions: If filling multiple form fields, structure the workflow to complete all fields on one page before moving to the next. Each page transition requires a new screenshot analysis cycle.
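If your workflow is described as a list of steps, you can reorder it so every step for a page runs together. The sketch below assumes each step carries a hypothetical `page` field. It keeps pages in first-seen order and keeps each page's own steps in their original order.

```javascript
// Group workflow steps by page so each page is visited once.
// Relies on Map preserving insertion order.
function batchByPage(steps) {
  const byPage = new Map();
  for (const step of steps) {
    if (!byPage.has(step.page)) byPage.set(step.page, []);
    byPage.get(step.page).push(step);
  }
  return [...byPage.entries()].map(([page, pageSteps]) => ({ page, steps: pageSteps }));
}
```

This is only safe when reordering doesn't change meaning. If a later page depends on something an earlier page submits, keep that ordering explicit.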

Use text-based interaction when possible: Simple clicking and form filling are more token-efficient than complex visual analysis. Save screenshot-based decisions for when you actually need to understand visual layouts. OpenAI's API documentation provides guidance on optimizing vision model usage, while cost optimization guides offer specific strategies for reducing token consumption.
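A routing rule can make this choice explicit for each step. In the sketch below, the step fields `needsLayout`, `selector`, and `label` are hypothetical. The mode names are just labels for whichever two code paths your integration offers.

```javascript
// Decide per step whether a cheap text-based action is enough or a
// screenshot-driven decision is needed. Field names are hypothetical.
function chooseMode(step) {
  if (step.needsLayout) return 'visual';          // e.g. "click the largest banner"
  if (step.selector || step.label) return 'text'; // target known by selector or label
  return 'visual';                                // no stable target: fall back to vision
}
```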

Failure Recovery That Actually Works

Error tracking and monitoring tools become essential for identifying patterns in automation failures.

The default error handling is garbage - generic "task failed" messages with no actionable information. Build your own retry logic with specific failure pattern recognition using patterns from Resilience4j and circuit breaker implementations. Tools like Sentry help categorize and track recurring failure patterns across your automation workflows.

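```javascript
// Promise-based delay helper (the original snippet assumed one existed).
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry logic for common failure patterns
async function retryWithFallback(action, maxRetries = 3) {
  let lastError;
  for (let attempt = 0; attempt < maxRetries; attempt++) {
    try {
      return await action();
    } catch (error) {
      lastError = error;
      const message = String(error?.message ?? error);
      const isLastAttempt = attempt === maxRetries - 1;
      if (message.includes('element not found')) {
        // Wait for page to load completely (no point waiting after the last try)
        if (!isLastAttempt) await sleep(3000);
        continue;
      } else if (message.includes('network timeout')) {
        // Exponential backoff for network issues: 1s, 2s, 4s, ...
        if (!isLastAttempt) await sleep(Math.pow(2, attempt) * 1000);
        continue;
      } else {
        // Non-retryable error
        throw error;
      }
    }
  }
  // Keep the last underlying error attached for debugging.
  throw new Error(`Failed after ${maxRetries} attempts`, { cause: lastError });
}
```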
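Retries handle one-off failures. The circuit breaker pattern mentioned above goes further: it stops you from hammering a workflow that fails consistently. The sketch below is a minimal hand-rolled version of the pattern, not Resilience4j's API. After `failureThreshold` consecutive failures, the breaker opens and rejects calls until `resetTimeoutMs` has passed. It then allows trial calls in a "half-open" state. The clock is injectable so the behavior can be tested.

```javascript
// Minimal circuit breaker: closed -> open after repeated failures,
// half-open after a cool-down, closed again on a successful trial call.
class CircuitBreaker {
  constructor({ failureThreshold = 5, resetTimeoutMs = 60000, now = Date.now } = {}) {
    this.failureThreshold = failureThreshold;
    this.resetTimeoutMs = resetTimeoutMs;
    this.now = now;       // injectable clock
    this.failures = 0;
    this.openedAt = null; // null means the circuit is closed
  }

  get state() {
    if (this.openedAt === null) return 'closed';
    return this.now() - this.openedAt >= this.resetTimeoutMs ? 'half-open' : 'open';
  }

  async call(action) {
    if (this.state === 'open') {
      throw new Error('Circuit open: skipping call');
    }
    try {
      const result = await action();
      this.failures = 0;  // success closes the circuit
      this.openedAt = null;
      return result;
    } catch (error) {
      this.failures += 1;
      // A failed trial call, or too many failures in a row, (re)opens the circuit.
      if (this.state === 'half-open' || this.failures >= this.failureThreshold) {
        this.openedAt = this.now();
      }
      throw error;
    }
  }
}
```

Wrap `retryWithFallback` calls in `breaker.call(...)`. A flaky page then gets retried, but a site that is down stops burning tokens until the cool-down expires. This sketch does not limit concurrent trial calls in the half-open state.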

The 3AM Debugging Rules

Browser debugging tools and methodologies remain relevant even when working with remote browser automation.

When browser automation breaks in production (and it will), you need debugging strategies that work without access to remote browser dev tools. Learn from Chrome DevTools best practices, Firefox debugging guides, and WebDriver debugging techniques that apply to remote browser scenarios:

Save page state before failures: Take screenshots immediately before and after failed actions. The AI can't tell you what went wrong, but you can see the visual state that confused it.
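A small wrapper can make the before/after capture automatic. In this sketch, `captureScreenshot` and `saveArtifact` are hypothetical hooks for whatever your setup provides. The wrapper grabs a screenshot before the action. If the action throws, it grabs a second one, stores both, and rethrows so your retry logic still sees the failure.

```javascript
// Wrap an action so a failure leaves before/after screenshots behind.
// captureScreenshot and saveArtifact are hypothetical hooks.
async function withSnapshots(name, action, { captureScreenshot, saveArtifact }) {
  const before = await captureScreenshot();
  try {
    return await action();
  } catch (error) {
    const after = await captureScreenshot();
    const stamp = new Date().toISOString();
    await saveArtifact(`${stamp}-${name}-before`, before);
    await saveArtifact(`${stamp}-${name}-after`, after);
    throw error; // keep the original failure visible to callers
  }
}
```

The "before" capture costs an extra screenshot on every call. If that is too expensive, wrap only the steps that fail most often.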

Log everything obsessively: Action timestamps, token usage, error messages, page URLs. OpenAI doesn't provide detailed execution logs, so build your own using structured logging libraries and APM tools. OpenTelemetry provides excellent distributed tracing for complex automation workflows.
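The sketch below emits one structured record per action as a single JSON line, which most log pipelines can parse. The field names are assumptions chosen to cover the items listed above. The `write` parameter defaults to `console.log` but can point at any sink.

```javascript
// Emit one JSON line per automation action.
function logAction(
  { action, url, durationMs, inputTokens = 0, outputTokens = 0, error = null },
  write = console.log,
) {
  const record = {
    timestamp: new Date().toISOString(),
    action,
    url,
    durationMs,
    inputTokens,
    outputTokens,
    error: error ? String(error.message ?? error) : null,
  };
  write(JSON.stringify(record));
  return record;
}
```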

Test failure scenarios manually: Don't just test happy paths. Manually trigger the conditions that break automation - slow page loads, dynamic content, network timeouts. Use chaos engineering principles and failure injection tools to simulate real-world failure conditions.
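You don't need a dedicated tool to start injecting failures. Wrapping an action so it sometimes slows down or throws is enough to exercise your retry and fallback paths. In the sketch below, the injected error messages reuse the strings the retry logic above matches on. The random source is injectable, so tests can be made reproducible. The rates and delay are placeholder assumptions.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Wrap an async action with injected latency and failures for testing.
function injectFaults(action, { failureRate = 0.2, extraDelayMs = 3000, delayRate = 0.3, random = Math.random } = {}) {
  return async (...args) => {
    if (random() < delayRate) await sleep(extraDelayMs); // simulate a slow page load
    if (random() < failureRate) {
      // Pick one of the two failure types the retry logic recognizes.
      throw new Error(random() < 0.5 ? 'element not found' : 'network timeout');
    }
    return action(...args);
  };
}
```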

Build manual fallbacks: When automation fails, have a way for humans to complete the workflow. Your 3am debugging session shouldn't block business operations. Study graceful degradation patterns and progressive enhancement strategies used in web development.
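One shape for such a fallback is to try the automation first and, if it still fails, hand the job to a human queue instead of dropping it. In the sketch below, `enqueueForHuman` is a hypothetical hook, such as a ticket, a chat message, or a row in a work queue. The returned status strings are illustrative.

```javascript
// Try automation first; on failure, route the job to a person.
async function runWithManualFallback(job, automate, enqueueForHuman) {
  try {
    const result = await automate(job);
    return { status: 'automated', result };
  } catch (error) {
    await enqueueForHuman({ job, reason: String(error?.message ?? error) });
    return { status: 'queued_for_human' };
  }
}
```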

I learned these rules the hard way debugging a payment processing automation that started failing randomly on Fridays. Turned out the payment provider's site loaded differently under high traffic, breaking our visual element recognition. Manual fallback saved us from losing weekend sales while we fixed the automation. Load testing tools like k6 and performance monitoring help identify these traffic-dependent failures before they hit production.