Most developers think OpenAI's browser is just Chrome with ChatGPT baked in. That's like saying the iPhone was just a phone with internet. It misses the point entirely.

The Operator Agent Integration Layer

The Operator agent isn't a chatbot sidebar - it's a programmatic interface to user intent. Instead of building forms and menus, you can build intent-driven interfaces where users describe what they want to accomplish.

Here's what this actually means: Your web app can register "capabilities" with the browser's agent system. When a user says "book me a table for Thursday night," the agent can route that intent directly to your restaurant app's booking system.

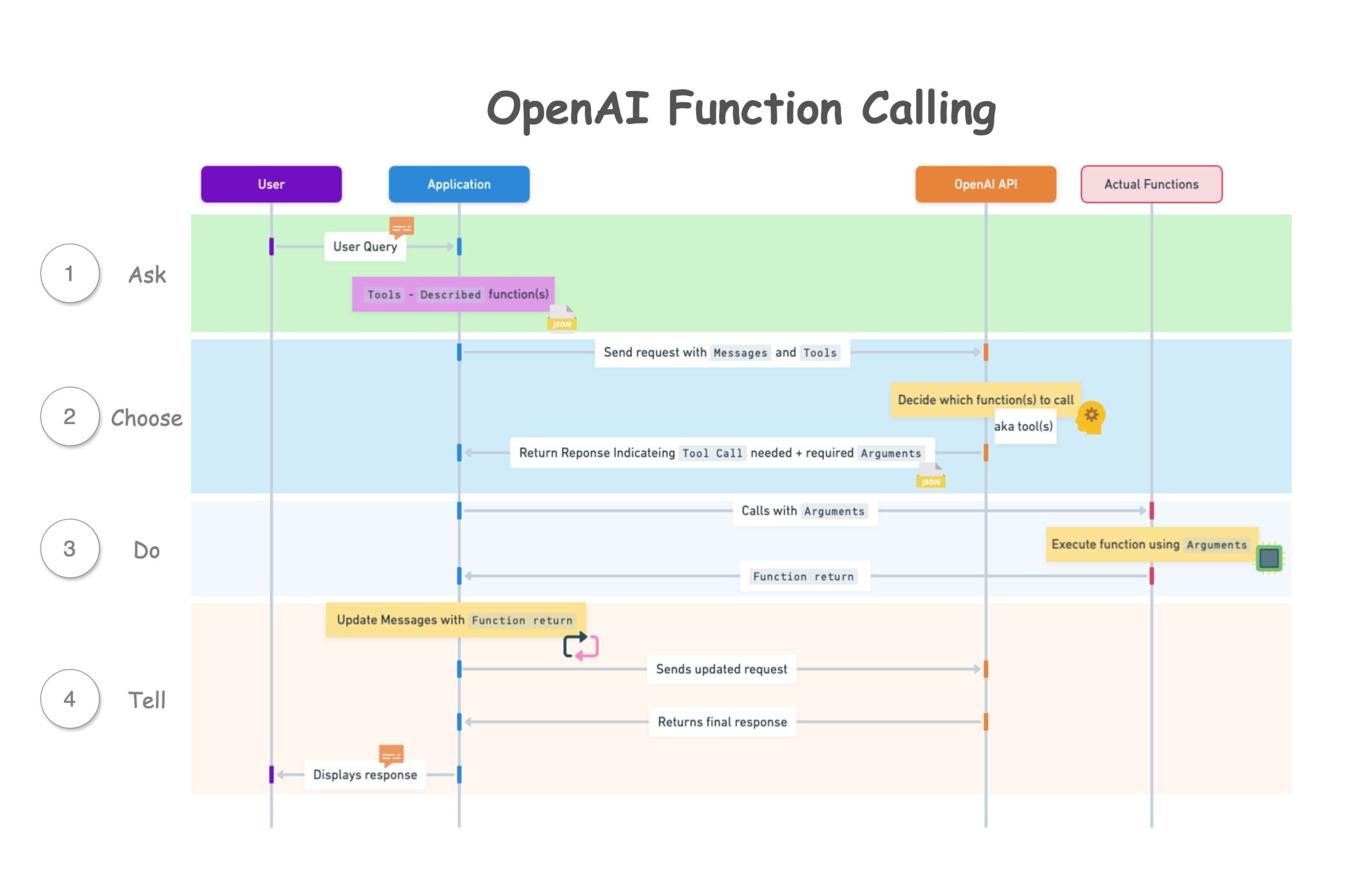

Traditional browser extensions work through the Chrome Extension APIs - you inject scripts, listen for DOM events, and manipulate page content. OpenAI's browser adds an AI intent layer on top of this model.

Web API Integration Patterns

If the browser exposes agent-facing JavaScript APIs along the lines sketched below, your web applications could:

- Register intent handlers - Tell the agent what your app can do

- Receive structured intents - Get user requests as structured data instead of raw text

- Provide capability metadata - Help the agent understand when and how to use your app

- Handle agent callbacks - Respond to the agent's requests for information or actions

    // Hypothetical OpenAI Browser API (based on current patterns)
    if (window.openai && window.openai.agent) {
      window.openai.agent.registerCapability({
        name: 'restaurant_booking',
        description: 'Book restaurant reservations',
        parameters: {
          date: 'string',
          time: 'string',
          party_size: 'number',
          preferences: 'string'
        },
        handler: async (params) => {
          // Your booking logic here
          return await bookTable(params);
        }
      });
    }

This is fundamentally different from traditional web APIs. Instead of waiting for users to navigate to your booking form, fill out fields, and submit, the agent can invoke your capability directly based on natural language intent.
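
Because the agent, not a form, supplies the arguments, a handler should probably validate them before touching your booking system. Here is a minimal sketch, assuming the hypothetical `parameters` shape from the example above (a map of field name to `'string'` or `'number'`). `validateIntentParams` and `bookingSchema` are illustrative names, not part of any published API.

```javascript
// Hypothetical schema, mirroring the `parameters` object in the example above.
const bookingSchema = {
  date: 'string',
  time: 'string',
  party_size: 'number',
  preferences: 'string',
};

// Check agent-supplied params against a { field: typeName } schema.
// Returns a list of problems; an empty list means the params are usable.
function validateIntentParams(schema, params, optional = []) {
  const errors = [];
  for (const [field, expectedType] of Object.entries(schema)) {
    const value = params[field];
    if (value === undefined || value === null) {
      if (!optional.includes(field)) errors.push(`missing field: ${field}`);
      continue;
    }
    // typeof NaN is 'number', so reject NaN explicitly.
    if (typeof value !== expectedType || Number.isNaN(value)) {
      errors.push(`${field} should be a ${expectedType}`);
    }
  }
  // Flag fields the schema does not know about instead of passing them on.
  for (const field of Object.keys(params)) {
    if (!(field in schema)) errors.push(`unexpected field: ${field}`);
  }
  return errors;
}
```

Inside the handler you could call `validateIntentParams(bookingSchema, params, ['preferences'])` and return the error list to the agent instead of calling `bookTable` when it is non-empty.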

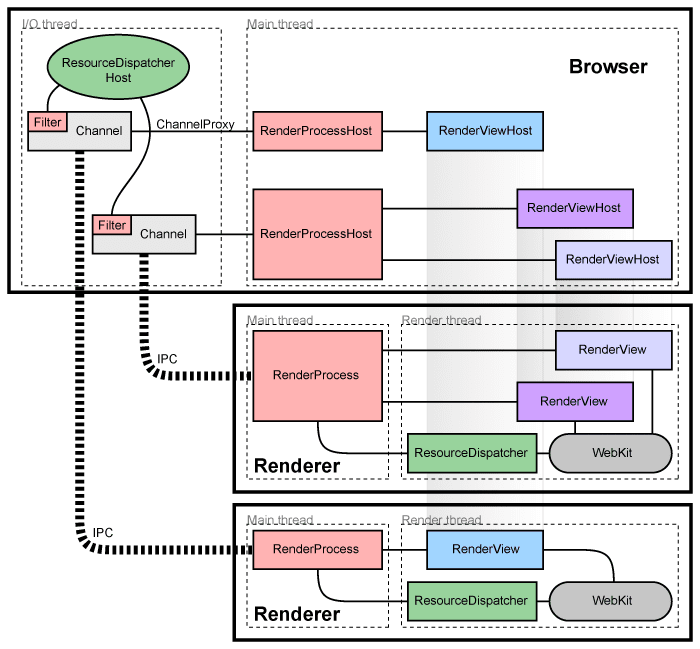

The Remote Browser Architecture Challenge

Unlike Playwright or Puppeteer, which run browsers on your own infrastructure, OpenAI's agent runs browsers on OpenAI's infrastructure. This creates unique integration challenges:

Local vs Remote State: Your application logic runs locally, but the browsing happens remotely. State synchronization becomes critical - if your app updates data, the remote browser session needs to know about it.

I've spent years building browser automation with Playwright and Selenium. The remote architecture pattern has serious implications:

- Latency: Every interaction goes through the network

- State management: Local application state vs remote browser state

- Error handling: Network failures compound browser automation failures

- Debugging: You can't inspect the remote browser directly
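
When every interaction crosses the network, transient failures are the norm, so it usually pays to wrap remote calls in a retry with exponential backoff. The sketch below is generic JavaScript and assumes nothing about OpenAI's API. `withRetry` is an illustrative helper, and `operation` stands in for whatever call reaches the remote session.

```javascript
// Retry an async operation with exponential backoff.
// `operation` is any function returning a promise; it receives the attempt index.
async function withRetry(operation, { retries = 3, baseDelayMs = 200 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation(attempt);
    } catch (error) {
      lastError = error;
      if (attempt === retries) break;
      // With the defaults, wait 200ms, 400ms, 800ms before the next attempt.
      const delayMs = baseDelayMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  // All attempts failed: surface the most recent error to the caller.
  throw lastError;
}
```

Only retry operations that are safe to repeat. Retrying a booking that actually succeeded remotely but whose response got lost will book the table twice.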

Authentication and Session Management Nightmare

Here's where this gets nasty. Traditional web apps manage user sessions through cookies and local storage. OpenAI's browser runs remotely, so session management becomes a distributed systems problem.

Your authentication flow now looks like:

- User authenticates with your app locally

- Agent needs to authenticate with your app in the remote browser

- Both sessions need to stay synchronized

- If either session expires, the whole flow breaks

I've debugged similar issues with BrowserStack remote browsers. Session synchronization is hell - you end up with users logged in locally but logged out remotely, or vice versa.
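
One way to keep the failure modes manageable is to make the "which side is logged in?" decision explicit instead of discovering it mid-request. This is a minimal sketch under my own assumptions: each session is either `null` or an object with an `expiresAt` timestamp, and the action names are made up for illustration.

```javascript
// Decide what to do given the local and remote session states.
// Each session is either null (not logged in) or { expiresAt: <ms since epoch> }.
// The session shape and the action names are assumptions for illustration.
function reconcileSessions(local, remote, now = Date.now()) {
  const isLive = (session) => session !== null && session.expiresAt > now;
  const localLive = isLive(local);
  const remoteLive = isLive(remote);

  if (localLive && remoteLive) return 'proceed';
  // User is logged in locally but the agent's browser is not.
  if (localLive && !remoteLive) return 'reauthenticate_remote';
  // Agent still has access after the user's own session ended.
  if (!localLive && remoteLive) return 'revoke_remote';
  return 'prompt_login';
}
```

Treating "logged out locally, logged in remotely" as a revoke rather than a refresh is a design choice: it keeps the agent from acting on behalf of a user who has already signed out.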

Extension Development Model

OpenAI's browser uses Chromium as its base, so traditional Chrome extensions should work. But the AI integration layer adds new possibilities and complexities.

Extensions can potentially:

- Register capabilities with the agent system

- Provide context to improve agent understanding

- Handle agent requests for extension-specific actions

- Modify how the agent interacts with specific websites

But here's the catch: Extensions running in a remote browser have limited access to local resources. Expect no access to your local filesystem, limited ability to communicate with local services, and potential issues with extension storage APIs. Chrome's Manifest V3 makes this even worse - extension service workers are shut down when idle (after roughly 30 seconds of inactivity), so an extension can't keep long-lived state or connections in memory while it waits on the agent.
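
If service-worker memory can vanish between events, anything the extension needs to remember about an in-flight agent request has to live in storage. Here is a sketch of that pattern. The `storage` argument is any object with promise-based `get`/`set` in the style of `chrome.storage.session`. The key name, the request shape, and the idea that an extension receives agent requests at all are assumptions.

```javascript
// Keep pending agent requests in storage rather than in service-worker memory,
// so they survive the worker being shut down between events.
const PENDING_KEY = 'pendingAgentRequests'; // hypothetical key name

async function savePendingRequest(storage, request) {
  const stored = await storage.get(PENDING_KEY);
  const pending = stored[PENDING_KEY] ?? {};
  pending[request.id] = request;
  await storage.set({ [PENDING_KEY]: pending });
}

// Remove and return a pending request, or null if it is unknown.
async function takePendingRequest(storage, id) {
  const stored = await storage.get(PENDING_KEY);
  const pending = stored[PENDING_KEY] ?? {};
  const request = pending[id] ?? null;
  delete pending[id];
  await storage.set({ [PENDING_KEY]: pending });
  return request;
}

// In-memory stand-in with the same get/set shape, for use outside an extension.
function createMemoryStorage() {
  const data = {};
  const clone = (value) => JSON.parse(JSON.stringify(value));
  return {
    async get(key) {
      return key in data ? { [key]: clone(data[key]) } : {};
    },
    async set(items) {
      Object.assign(data, clone(items));
    },
  };
}
```

In a real extension you would pass `chrome.storage.session` instead of the stand-in. Note that the read-modify-write here is not atomic, so two events handled at the same moment could overwrite each other's changes.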

Real-World Implementation Challenges

I've built production web scraping systems with Puppeteer and automated testing with Playwright. Remote browser automation introduces problems you don't see in traditional web development:

Resource Management: Remote browsers consume resources on OpenAI's infrastructure. Expect usage limits, timeouts, and potential costs for heavy usage.
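
If the remote side can time out on you, it helps to set your own, shorter deadline so your app fails predictably. A minimal sketch using `Promise.race`, where `withTimeout` is an illustrative helper name:

```javascript
// Reject if `promise` does not settle within `ms` milliseconds.
// This only stops waiting; it does not cancel the underlying work.
function withTimeout(promise, ms, label = 'operation') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(
      () => reject(new Error(`${label} timed out after ${ms}ms`)),
      ms
    );
  });
  // Whichever settles first wins; always clear the timer afterwards.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}
```

For example, wrapping the handler body as `withTimeout(bookTable(params), 10000, 'bookTable')` would give up after ten seconds. The 10000 is an arbitrary number you would tune to whatever limits the platform actually enforces.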

Debugging Hell: When your intent handler fails, you need to debug across multiple layers: your local code, the agent's intent interpretation, the remote browser execution, and the target website's response.
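
Since you can't attach a debugger to most of those layers, the next best thing is making every error say where it came from. This sketch tags errors with a layer name and a shared request id. `LayerError`, `inLayer`, and the layer labels are my own names for illustration.

```javascript
// Tag errors with the layer they came from and a shared request id,
// so a failure can be traced across layers from the logs alone.
class LayerError extends Error {
  constructor(layer, requestId, cause) {
    super(`[${requestId}] ${layer}: ${cause.message}`);
    this.name = 'LayerError';
    this.layer = layer;
    this.requestId = requestId;
    this.cause = cause;
  }
}

// Run `fn` and attribute any failure to `layer`.
async function inLayer(layer, requestId, fn) {
  try {
    return await fn();
  } catch (error) {
    // Keep the innermost tag: it marks where the failure started.
    if (error instanceof LayerError) throw error;
    throw new LayerError(layer, requestId, error);
  }
}
```

You would wrap each stage in its own `inLayer` call, for example `inLayer('local_handler', id, ...)` around a call that itself uses `inLayer('target_site', id, ...)`, and log `error.layer` when something fails.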

Bot Detection: Websites are getting aggressive about blocking automation. OpenAI's browser might get blocked by Cloudflare, reCAPTCHA, and other anti-bot systems. In my experience, Shopify sites are particularly brutal - I've been blocked after as few as 3 automated actions. Found this out the hard way during a client demo.

Version Compatibility: The browser's API surface will evolve. Your integration code needs to handle different browser versions and API changes gracefully.
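
In practice, "gracefully" means feature detection plus a fallback to your normal UI. The sketch below probes for the hypothetical `openai.agent.registerCapability` function used earlier in this article. The host object is passed in as a parameter so the same code can be exercised outside a browser. None of this reflects a published API.

```javascript
// Probe for the hypothetical agent API and report whether registration worked,
// so the caller can fall back to the regular form-based UI.
function registerIfSupported(host, capability) {
  const agent = host?.openai?.agent;
  if (typeof agent?.registerCapability !== 'function') {
    return { registered: false, reason: 'agent API unavailable' };
  }
  try {
    agent.registerCapability(capability);
    return { registered: true };
  } catch (error) {
    // A different API version may reject this capability shape.
    return { registered: false, reason: error.message };
  }
}
```

In a page you would call `registerIfSupported(window, { name: 'restaurant_booking', ... })` and simply keep showing the booking form when `registered` comes back `false`.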

The developer experience is fundamentally different from traditional web development. You're not just building for users anymore - you're building for users AND an AI agent that needs to understand and execute user intent programmatically.