I've spent years hardening browser security for big companies. This OpenAI browser architecture is batshit crazy.

The Architecture Is Insane

Normal browsers work like this: You type, click, browse - everything happens on your machine. Your bank password? Stays local until you hit submit. Your private docs? Local until you upload them.

OpenAI's ChatGPT agent throws this model out the window. Every keystroke, mouse movement, and form field gets sent to their servers first. Their AI agent then puppets a remote browser on their infrastructure.

Here's the nightmare: your bank password goes to OpenAI first, then to your bank. Same for every other form you fill out.

This breaks the browser isolation model that security teams spent years implementing.

Traditional browsers isolate processes to protect your machine from malicious websites. OpenAI's model does the opposite - it exposes your data to remote servers.
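
To make the two data paths concrete, here is a toy model of who gets to see a secret in each case. It is a sketch of the flow as described above, not OpenAI's actual implementation: `Party`, `submit_locally`, `submit_via_remote_agent`, and the sample password are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Party:
    """Any machine that handles the data (hypothetical model, not a real API)."""
    name: str
    seen: list = field(default_factory=list)

    def receive(self, data: str) -> str:
        # Record everything this party was able to observe.
        self.seen.append(data)
        return data


def submit_locally(secret: str, site: Party) -> None:
    """Conventional browser: the secret leaves the device only when sent to the site."""
    site.receive(secret)


def submit_via_remote_agent(secret: str, vendor: Party, site: Party) -> None:
    """Remote-browser model as described above: input reaches the vendor first."""
    relayed = vendor.receive(secret)
    site.receive(relayed)


# Local model: only the bank ever sees the password.
bank = Party("bank")
submit_locally("hunter2", bank)

# Remote model: the vendor sees it before the bank does.
vendor, bank2 = Party("agent-vendor"), Party("bank")
submit_via_remote_agent("hunter2", vendor, bank2)

print(bank.seen)    # ['hunter2']
print(vendor.seen)  # ['hunter2']
```

In the local model the list of parties that saw the password has one entry. In the remote model it has two, and one of them is a third party you don't control.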

What Data They're Slurping Up

When I say "everything," I mean everything:

- Your passwords as you type them (yes, including banking)

- Every search query (including that weird medical stuff)

- Email content as you compose it

- Screenshots of everything you look at

- Time spent reading specific content

- How you navigate between pages

This isn't like Chrome's telemetry, where they collect aggregate usage stats. OpenAI can literally watch you type your divorce lawyer's email in real time.

I tested similar remote browser architectures when I was evaluating a security product (Menlo or Netskope, I can't remember which). The attack surface is enormous - but at least those tools kept malicious content away from our endpoints. OpenAI does the exact opposite by funneling everything through their infrastructure first.

Security researchers have already figured out how to trick AI agents into leaking your data. Just last week, some security firm found a reverse shell vulnerability in ChatGPT agent that enables arbitrary command execution - exactly the kind of shit I predicted would happen.

Your IT Team Will Lose Their Shit

If you're in enterprise security, this browser violates basically every principle we've built security around for the past 20 years.

I spent way too long implementing browser isolation precisely to keep sensitive data OUT of remote browsers. OpenAI's model puts sensitive data INTO remote browsers owned by a third party.

Here's what's going to break:

- DLP systems - Data Loss Prevention can't see what's happening in OpenAI's browsers

- Network monitoring - Your SIEM has no visibility into user actions

- Compliance audits - SOX/HIPAA auditors will shit themselves when they see this architecture

- Incident response - Good luck forensically investigating what happened when everything occurred on OpenAI's servers
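
Here is a rough sketch of the visibility problem behind that list. It assumes a perimeter monitor that can only observe traffic originating inside the corporate network. The `Flow` type, the zone labels, and the hostnames are hypothetical, and real DLP/SIEM products are far more involved than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_zone: str  # "corp" or "vendor" (hypothetical labels)
    dst: str
    payload: str


def perimeter_view(flows):
    """Return only the flows a corporate egress monitor could observe."""
    return [f for f in flows if f.src_zone == "corp"]


# Local browser: the upload itself crosses your perimeter.
local_session = [
    Flow("corp", "files.example.com", "submit form: customer record"),
]

# Remote browser: you see a session to the vendor; the upload happens elsewhere.
remote_session = [
    Flow("corp", "agent-vendor.example", "task: submit the customer record"),
    Flow("vendor", "files.example.com", "submit form: customer record"),
]

visible_local = perimeter_view(local_session)
visible_remote = perimeter_view(remote_session)

print([f.dst for f in visible_local])   # ['files.example.com']
print([f.dst for f in visible_remote])  # ['agent-vendor.example']
```

Under these assumptions, the only destination your tooling ever logs in the remote case is the vendor. Where the data actually went is recorded on someone else's infrastructure.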

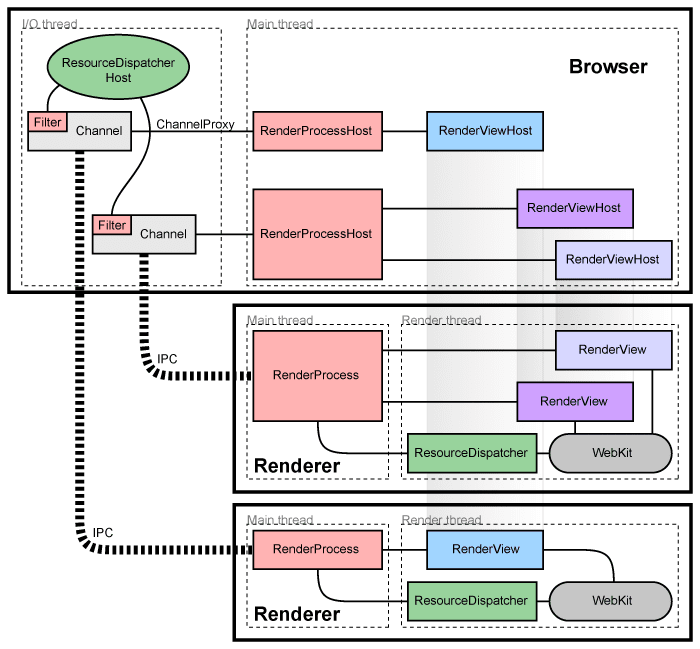

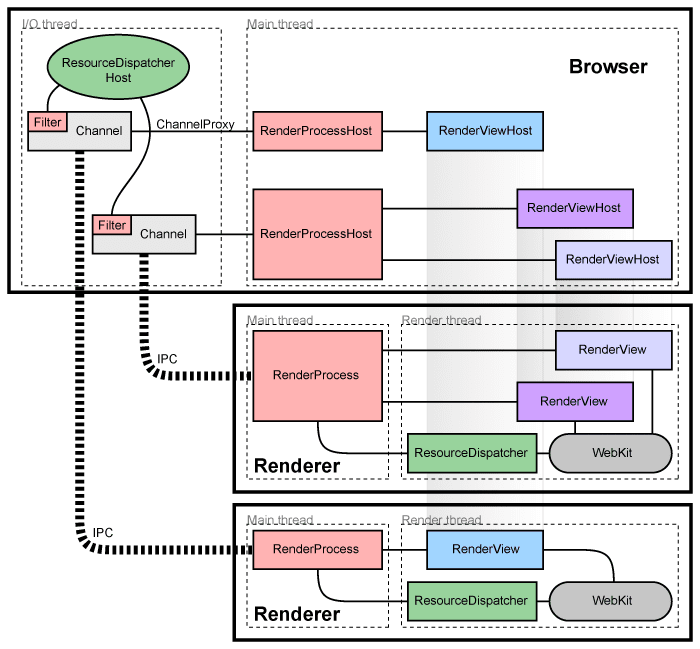

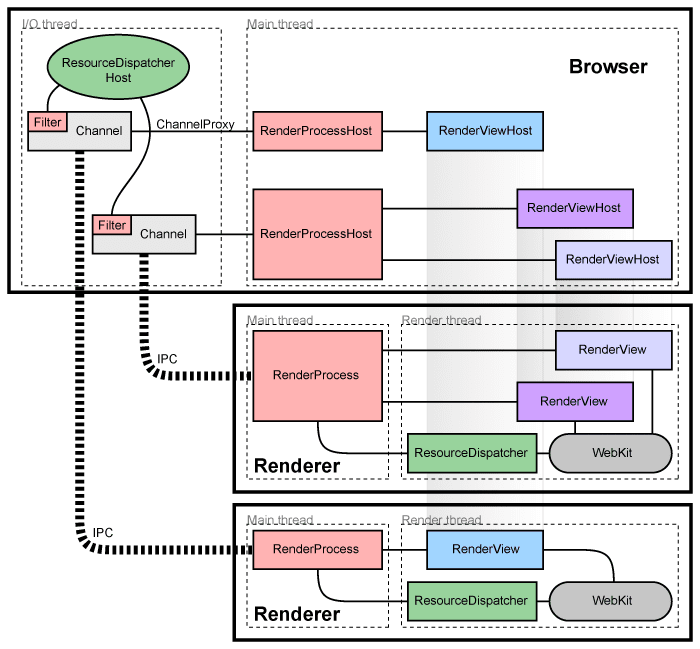

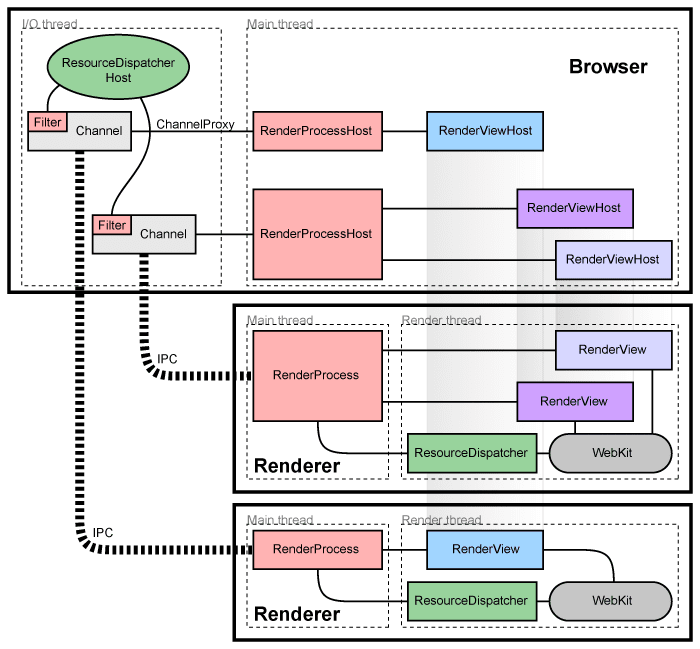

This is what 20 years of browser security hardening looks like - OpenAI just threw it out the window. The Chromium multi-process architecture isolates each tab and extension to prevent cross-contamination when one gets compromised.
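
If the multi-process idea is unfamiliar, this minimal sketch shows the underlying principle: one "tab" dying in its own OS process doesn't take the others down. It is not Chromium code, and it leaves out sandboxing entirely. `run_tab` is a hypothetical helper that just launches a separate Python process.

```python
import subprocess
import sys


def run_tab(code: str) -> int:
    """Run one 'tab' in its own OS process and return its exit code."""
    return subprocess.run([sys.executable, "-c", code]).returncode


# Tab 1 simulates a crash or compromise by exiting abruptly.
crashed = run_tab("import os; os._exit(1)")

# Tab 2 runs in a separate process and is unaffected.
healthy = run_tab("print('tab 2 still rendering')")

print(crashed, healthy)
```

The first process exits with a non-zero code, the second finishes normally, and the parent survives both. That containment boundary sits on your machine, under your control.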

The kicker? You'll need OpenAI's permission to investigate your own security incidents. That's not how enterprise security works.

Enterprise security depends on controlling your own infrastructure. OpenAI's browser breaks that fundamental principle.

They're Building the Ultimate Training Dataset

OpenAI needs data to train their models. But this browser is surveillance capitalism on steroids.

Google Chrome knows you visited Amazon. OpenAI's browser knows you looked at divorce lawyers, spent 47 minutes reading about depression symptoms, and abandoned your cart three times before buying anxiety medication.

That level of behavioral insight is worth billions. And unlike Google, which at least pretends to anonymize data, OpenAI needs its AI to understand context to work properly. Good luck anonymizing that.

I've seen what happens when companies get subpoenaed. Courts are already ordering OpenAI to preserve all user data. Your browsing history could end up as evidence in lawsuits you're not even involved in.

The technical risks are just the beginning - wait until you see how this fucks your compliance program.