So you want to know why NGINX conquered the web? Igor Sysoev started writing it in 2002 and released it publicly in 2004 because he was tired of Apache falling over under heavy load. The C10K problem was killing everyone's servers - trying to handle 10,000+ concurrent connections with a process or thread per connection was like trying to run a restaurant by hiring a new chef for every order.

As of August 2025, NGINX runs 21.2% of all websites because it actually works under load. The latest stable release is nginx-1.28.0 (April 2025), and the current mainline is nginx-1.29.1 (August 2025). Mainline has Early Hints (HTTP 103) support - though the nginx.org docs are their usual cryptic mess when it comes to explaining whether Early Hints actually help your use case.

Why NGINX Actually Works

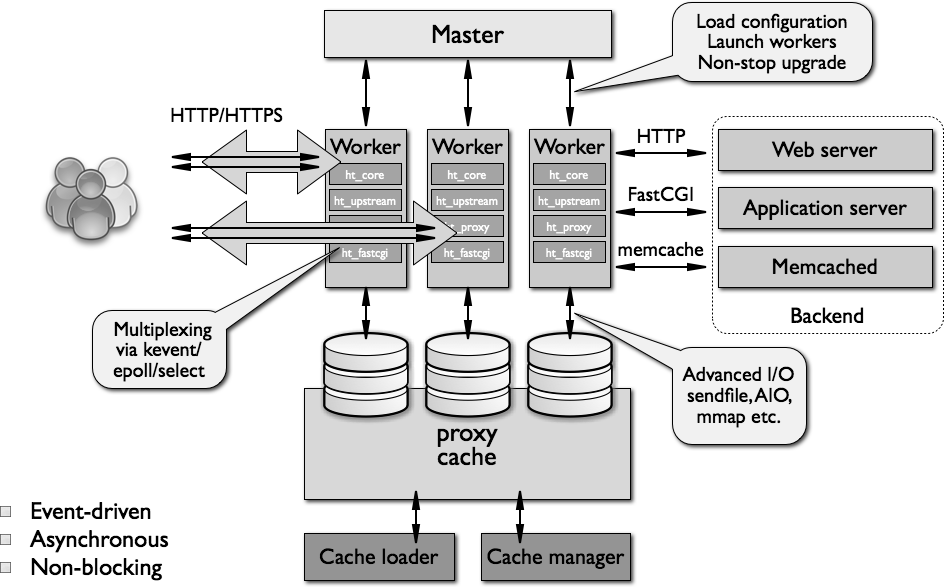

NGINX uses an event-driven model instead of spawning threads for every connection. One worker process can handle thousands of connections simultaneously using epoll (Linux) or kqueue (FreeBSD). Your CPU doesn't melt and your RAM doesn't get eaten alive.
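If you want to see the idea in code, here's a rough sketch of a single-threaded echo server built on Python's `selectors` module, which picks epoll on Linux and kqueue on the BSDs. This is not how NGINX is implemented (NGINX is C and does far more); the names `make_listener`, `handle_ready`, and `serve_forever` are made up for illustration, and the sketch assumes small messages that fit in the socket buffer.

```python
import selectors
import socket


def make_listener(host="127.0.0.1", port=0):
    """Create a non-blocking listening socket (port 0 = let the OS pick one)."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, port))
    listener.listen(128)
    listener.setblocking(False)
    return listener


def handle_ready(sel, timeout=1.0):
    """Run one turn of the event loop; return how many events were handled."""
    events = sel.select(timeout)  # the kernel tells us which sockets are ready
    for key, _mask in events:
        if key.data == "listener":
            # New connection: accept it and watch it for incoming data.
            conn, _addr = key.fileobj.accept()
            conn.setblocking(False)
            sel.register(conn, selectors.EVENT_READ, data="client")
        else:
            conn = key.fileobj
            chunk = conn.recv(4096)
            if chunk:
                # Sketch only: assumes the write fits in the socket buffer.
                conn.send(chunk)
            else:
                # Empty read means the peer closed the connection.
                sel.unregister(conn)
                conn.close()
    return len(events)


def serve_forever(listener):
    """One thread, one loop, any number of connections."""
    sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS
    sel.register(listener, selectors.EVENT_READ, data="listener")
    while True:
        handle_ready(sel)
```

Calling `serve_forever(make_listener(port=8080))` would run the loop (the port is arbitrary). Nothing in there ever blocks on a single client; idle connections just sit in the selector costing a file descriptor and a little bookkeeping.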

Here's what NGINX actually does well:

Static Files: Serves files stupid fast. 500,000 requests/second isn't marketing bullshit if you tune it right, though your results will vary based on your shitty hardware and network.

Reverse Proxy: Sits in front of your application servers and doesn't die. Netflix switched early because they needed something that wouldn't crash when half the internet wanted to stream shows simultaneously.

Load Balancing: Actually distributes traffic across backend servers. Round-robin works fine for most people. Least connections is better if your backends have different performance. IP hash is for session stickiness when you haven't figured out proper stateless design yet.
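The three strategies are easier to compare side by side. Here's a toy Python sketch of each selection rule. The class names and the `pick`/`release` methods are hypothetical, and the SHA-256 hashing is a stand-in, not the hash function NGINX actually uses for IP hash.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out backends in a fixed rotation."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(list(backends))

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Send each request to the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def pick(self, client_ip=None):
        # Ties go to whichever backend was listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call this when the request finishes.
        self.active[backend] -= 1


class IPHash:
    """Map a client IP to the same backend every time (sticky sessions)."""

    def __init__(self, backends):
        self.backends = list(backends)

    def pick(self, client_ip):
        # Assumption: any stable hash works for the illustration.
        digest = hashlib.sha256(client_ip.encode("ascii")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.backends)
        return self.backends[index]


if __name__ == "__main__":
    backends = ["app1", "app2", "app3"]  # hypothetical backend names
    rr = RoundRobin(backends)
    print([rr.pick() for _ in range(4)])
    sticky = IPHash(backends)
    print(sticky.pick("203.0.113.7") == sticky.pick("203.0.113.7"))
```

Notice that IP hash breaks stickiness for a chunk of your clients the moment the backend list changes, which is one more reason to fix the state problem instead.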

How NGINX Doesn't Suck

NGINX runs one master process that manages worker processes (usually one per CPU core). Each worker handles thousands of connections without creating new threads. Apache's classic prefork and worker MPMs tie up a process or thread for every connection, which is why it dies under load.

The magic is in the event loop. Instead of blocking on I/O, each worker asks the kernel (via epoll or kqueue) which sockets are ready and only touches those, so idle connections cost almost nothing. 10,000 inactive keep-alive connections use about 2.5MB of RAM - try that with Apache threads.
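To put the 2.5MB figure in perspective, here's the back-of-the-envelope math in Python. The per-thread stack size is a made-up round number for comparison (real values depend on your OS and Apache config, and stack space is mostly reserved rather than resident), so treat the ratio as an illustration, not a measurement.

```python
# Figures from the text: 10,000 idle connections in about 2.5 MB.
IDLE_CONNECTIONS = 10_000
NGINX_TOTAL_MIB = 2.5  # assumption: treating "MB" as MiB

# Hypothetical stack reservation per thread, purely for comparison.
ASSUMED_THREAD_STACK_MIB = 1.0


def bytes_per_connection(total_mib, connections):
    """Average memory per connection, in bytes."""
    return total_mib * 1024 * 1024 / connections


def thread_model_total_mib(connections, stack_mib):
    """Stack space reserved if every connection gets its own thread."""
    return connections * stack_mib


if __name__ == "__main__":
    per_conn = bytes_per_connection(NGINX_TOTAL_MIB, IDLE_CONNECTIONS)
    threads = thread_model_total_mib(IDLE_CONNECTIONS, ASSUMED_THREAD_STACK_MIB)
    print(f"event loop: ~{per_conn:.0f} bytes per idle connection")
    print(f"thread per connection: {threads:.0f} MiB of stack reserved")
    print(f"ratio: {threads / NGINX_TOTAL_MIB:.0f}x")
```

That works out to roughly 262 bytes per idle connection. Under the assumed 1 MiB stack, the thread-per-connection model reserves about 4,000 times more.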

Performance numbers are bullshit without context. Published benchmarks claim 400,000-500,000 requests/second for static content, but that's on a lab setup with perfect conditions. On my production boxes, I get about 200k req/sec before things start falling apart. 40,000-50,000 new connections/second is typical until you hit file descriptor limits because someone forgot to increase ulimit -n.
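If you're not sure what your limit is, a quick check looks something like this in Python (Unix only, via the standard `resource` module). The `reserved` headroom of 64 descriptors and the helper names are assumptions for illustration. The two-descriptors-per-connection case reflects a proxied request holding both a client socket and an upstream socket.

```python
import resource


def fd_limits():
    """Return the (soft, hard) open-file limits for the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def max_client_connections(soft_limit, fds_per_connection=1, reserved=64):
    """Rough ceiling on concurrent clients under a given descriptor limit.

    reserved is a hypothetical allowance for logs, listeners, and so on.
    Use fds_per_connection=2 when proxying (client socket + upstream socket).
    """
    return max(0, (soft_limit - reserved) // fds_per_connection)


if __name__ == "__main__":
    soft, hard = fd_limits()
    print(f"soft limit: {soft}, hard limit: {hard}")
    if soft != resource.RLIM_INFINITY:
        print("static serving ceiling:", max_client_connections(soft))
        print("reverse proxy ceiling:", max_client_connections(soft, 2))
```

With the common default of 1024, that's 960 connections for static serving and 480 when proxying under these assumptions, which is nowhere near the numbers above.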

Why Everyone Switched

NGINX grabbed 21.2% of all websites by 2025 because Apache was getting its ass kicked by high-traffic sites. W3Techs shows 33.6% market share for high-traffic sites specifically - the ones that actually matter.

Netflix was desperate for something that wouldn't fall over when everyone wanted to binge The Office. As the story goes, they adopted it so early that NGINX Inc. was founded in 2011 largely to provide commercial support. Now it runs critical infrastructure everywhere - banking, e-commerce, social media, basically anywhere downtime costs serious money.

F5 bought NGINX Inc. for $670 million in 2019 because load balancing is serious business. NGINX Plus, the commercial version with enterprise features, predates the deal (it launched in 2013), but the free version still handles more than most people will ever need.