Mistral 7B is a 7B-parameter decoder-only transformer that's pretty solid for its size. Mistral AI released it in September 2023, and I've been testing it in production setups. Here's what happens when you actually use it.

The Two Tricks That Make It Work

Mistral uses two clever optimizations that most engineers don't really understand until they dig in:

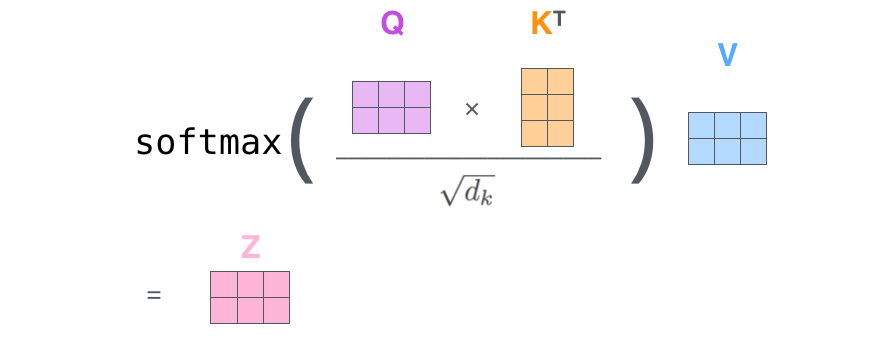

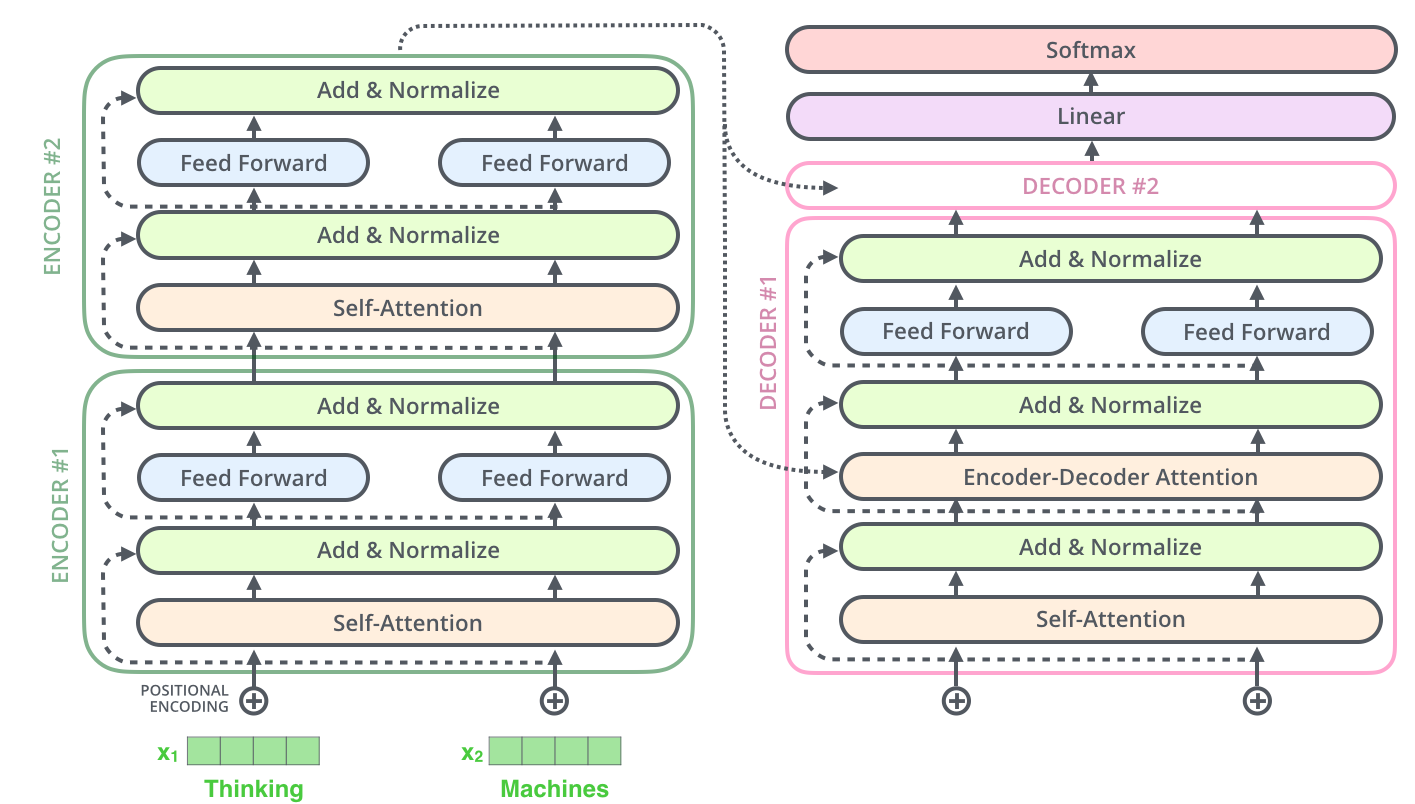

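Grouped-Query Attention (GQA): Instead of giving every attention head its own keys and values, groups of query heads share a single key/value head (Mistral 7B has 32 query heads and 8 KV heads, so 4 query heads per group). That shrinks the KV cache by 4x and cuts memory traffic during decoding, which makes inference noticeably faster in my testing. The paper claims 30%, but your numbers will vary with sequence length and batch size.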
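To make the grouping concrete, here's a small Python sketch using the published Mistral 7B shapes (32 layers, 32 query heads, 8 KV heads, head dimension 128). It maps each query head to the KV head it shares, assuming consecutive query heads are grouped together, and compares the per-token KV cache size against plain multi-head attention at 2 bytes per value (fp16). The helper names are my own, not from any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionConfig:
    n_layers: int = 32
    n_heads: int = 32     # query heads
    n_kv_heads: int = 8   # shared key/value heads (GQA)
    head_dim: int = 128


def kv_head_for_query_head(q_head: int, cfg: AttentionConfig) -> int:
    # Assumption: consecutive query heads share a KV head.
    # 32 query heads / 8 KV heads = groups of 4.
    group_size = cfg.n_heads // cfg.n_kv_heads
    return q_head // group_size


def kv_cache_bytes_per_token(cfg: AttentionConfig, n_kv_heads: int,
                             bytes_per_value: int = 2) -> int:
    # Keys and values (factor 2), for every layer, KV head and head dimension.
    return 2 * cfg.n_layers * n_kv_heads * cfg.head_dim * bytes_per_value


cfg = AttentionConfig()
gqa = kv_cache_bytes_per_token(cfg, cfg.n_kv_heads)  # GQA: 8 KV heads
mha = kv_cache_bytes_per_token(cfg, cfg.n_heads)     # plain MHA: 32 KV heads
print([kv_head_for_query_head(h, cfg) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
print(gqa // 1024, mha // 1024)  # 128 512 (KiB per token)
```

At 128 KiB per token, a full 4,096-token window costs 512 MiB of KV cache instead of 2 GiB with plain multi-head attention; that smaller cache is what reduces memory traffic per decoding step.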

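Sliding Window Attention (SWA): Each layer only attends to the previous 4,096 tokens (the window W), which looks weird at first. It works because layers stack: every layer lets information travel up to W more tokens back, so after k layers a token can be influenced by inputs up to k × W positions away. With 32 layers that gives a theoretical span of about 131K tokens, and the KV cache can be a fixed-size rolling buffer. In practice it performs fine until you hit the context limits.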
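Here's a minimal pure-Python sketch of the stacking argument. It builds a causal sliding-window mask, assuming each position attends to itself and the `window` positions before it, then walks reachability back through a few layers to show the receptive field growing by `window` per layer. The function names are hypothetical, and a real implementation applies this mask inside the attention kernel rather than as nested lists.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    # mask[i][j] is True when query position i may attend to key position j:
    # causal (j <= i) and at most `window` positions back.
    return [[0 <= i - j <= window for j in range(seq_len)] for i in range(seq_len)]


def reachable_inputs(pos: int, n_layers: int, mask: list[list[bool]]) -> set[int]:
    # Input positions whose information can reach `pos` after n_layers,
    # found by walking the mask backwards one layer at a time.
    frontier = {pos}
    for _ in range(n_layers):
        frontier = {j for i in frontier for j, allowed in enumerate(mask[i]) if allowed}
    return frontier


mask = sliding_window_mask(seq_len=20, window=3)
for layers in (1, 2, 3):
    # Prints "1 16", then "2 13", then "3 10": 3 more positions back per layer.
    print(layers, min(reachable_inputs(19, layers, mask)))

# Same arithmetic at Mistral 7B scale: 32 layers x 4,096-token window.
print(32 * 4096)  # 131072
```

Each individual layer never looks further back than the window; the long-range reach only exists across the whole stack, which is also why it's a theoretical span rather than a guarantee the model uses that information well.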

Real Performance vs Marketing Claims

The official benchmarks look great on paper. In practice:

- Does beat Llama 2 13B on most tasks, which surprised me initially

- Needs around 5GB of memory in my setup, way more than their "minimal requirements" suggest

- Coding performance is decent but not spectacular (HumanEval scores around 30%), and I wouldn't use it for debugging production code

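- Context handling degrades noticeably after ~16K tokens despite the advertised 32K window (the 32K context applies to v0.2 and later, which drop sliding window attention; the original v0.1 release used an 8K context)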
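The 5GB number makes more sense once you do the weight arithmetic. This sketch counts parameters from the published Mistral 7B v0.1 shapes (vocab 32,000, model dim 4,096, 32 layers, FFN hidden dim 14,336, 32 query heads, 8 KV heads, head dim 128), assuming untied input/output embeddings and RMSNorm weights as the only non-matrix parameters, then converts that to raw weight storage at a few precisions. It ignores KV cache, activations, runtime overhead and the extra scale factors quantized formats store, so treat the results as lower bounds.

```python
def mistral_7b_param_count(
    vocab: int = 32000,
    dim: int = 4096,
    n_layers: int = 32,
    hidden_dim: int = 14336,
    n_heads: int = 32,
    n_kv_heads: int = 8,
    head_dim: int = 128,
) -> int:
    embed = vocab * dim                      # token embedding table
    lm_head = vocab * dim                    # output projection (assumed untied)
    attn = dim * n_heads * head_dim          # W_q
    attn += 2 * dim * n_kv_heads * head_dim  # W_k and W_v (only 8 KV heads under GQA)
    attn += n_heads * head_dim * dim         # W_o
    mlp = 3 * dim * hidden_dim               # SwiGLU feed-forward: w1, w2, w3
    norms = 2 * dim                          # two RMSNorm weight vectors per layer
    per_layer = attn + mlp + norms
    return embed + lm_head + n_layers * per_layer + dim  # + final RMSNorm


def weight_gb(n_params: int, bits_per_param: float) -> float:
    # Raw weight storage only, in decimal gigabytes.
    return n_params * bits_per_param / 8 / 1e9


n = mistral_7b_param_count()
print(n)  # 7241732096
for name, bits in [("fp16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{name}: {weight_gb(n, bits):.1f} GB")  # 14.5, 7.2, 3.6
```

Unquantized fp16 weights alone need about 14.5 GB, so a footprint around 5GB is only plausible with roughly 4-bit quantization plus runtime overhead; I'm assuming that's the kind of setup the figure above reflects.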

The Apache 2.0 License Thing

This is the best part. No lawyer bullshit, no weird restrictions. The Apache 2.0 license allows commercial use, modification, and distribution; you mainly have to ship a copy of the license, keep the existing copyright and NOTICE attributions, and mark files you changed. Compared to Llama's custom license headaches, this is refreshing.

Reality Check

Mistral 7B was impressive back in September 2023. Now it's 2025, and I've spent the last 6 months migrating our systems off it. Llama 3.1 8B performs better. Costs depend on your provider, but we cut our inference costs by $2,400/month by switching from Mistral Console to Fireworks AI for Llama 3.1.

If you're starting fresh, don't make my mistake. The Apache license was nice, but it wasn't worth the headaches. The only time I'd recommend Mistral 7B now is if your legal department requires Apache 2.0 licensing.