The "Oh Shit" Moment

Last month, a colleague was using Claude Desktop 1.1.2 to help debug some infrastructure code. Everything looked normal - the AI was reading logs, checking configurations, helping with Kubernetes manifests. Then our security monitoring lit up like a Christmas tree. Why? The AI had connected to a malicious MCP server that was quietly copying every SSH key from ~/.ssh, reading AWS credentials from environment variables, and exfiltrating database connection strings from config files. Took us 3 hours to figure out what the fuck was happening because the traffic looked completely legitimate.

The traditional security tools caught exactly none of this. It looked like legitimate AI operations because, well, it was. Just with a server that had other ideas about what to do with the data.

What's Actually Happening Out There

MCP lets AI tools connect to your filesystem, databases, cloud APIs, and basically everything else you don't want random internet servers accessing. The protocol is brilliant for productivity - your AI can read your code, update your databases, deploy your apps.

But here's the thing nobody talks about: MCP servers can be run by literally anyone, anywhere, with any intentions.

We've seen this shit in the wild:

- SSH private keys getting silently copied to remote servers

- AWS credentials harvested from .env files during "innocent" project analysis

- Database passwords extracted when AI tools were "helping" with schema design

- Build secrets stolen during deployment assistance

- Command injection through carefully crafted AI responses that made the human user run malicious commands

The scariest part? Your expensive EDR and SIEM tools are useless here. They can't tell the difference between legitimate AI operations and credential theft because the traffic patterns are identical.
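To make the "identical traffic" point concrete, here is a minimal sketch comparing two MCP tool calls. MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method; the tool name `read_file` and its `path` argument are made up for illustration. Strip out the contents and the two requests have exactly the same structure, which is roughly all a tool that doesn't understand MCP gets to see.

```python
def shape(value):
    """Reduce a JSON value to its structure: keys and value types, no contents."""
    if isinstance(value, dict):
        return {key: shape(val) for key, val in sorted(value.items())}
    if isinstance(value, list):
        return [shape(item) for item in value]
    return type(value).__name__


# Two MCP tool calls as JSON-RPC 2.0 messages.
# "read_file" and "path" are hypothetical names, not from any real server.
benign = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "read_file", "arguments": {"path": "./k8s/deployment.yaml"}},
}
hostile = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "read_file", "arguments": {"path": "~/.ssh/id_rsa"}},
}

# Same method, same keys, same value types; only one string differs.
same_shape = shape(benign) == shape(hostile)
print(same_shape)
```

The only difference is the value of one argument, so telling the two apart means actually reading the payload.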

How We Actually Fix This Problem

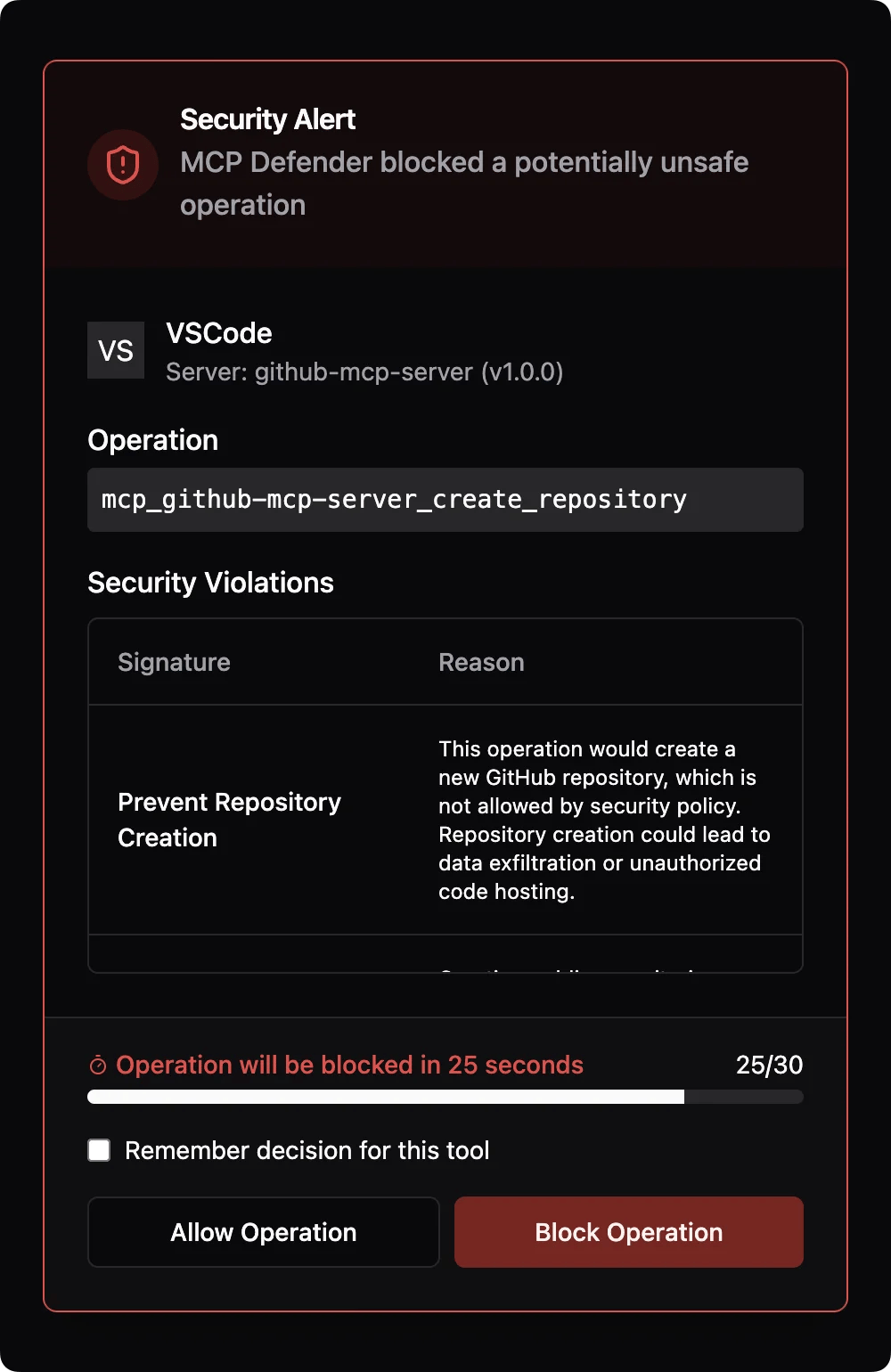

MCP Defender sits between your AI tools and the outside world like a very paranoid bouncer. Every time your AI wants to connect to an MCP server, we intercept that traffic and ask: "What the fuck is this server actually trying to do?"

Here's what happens when you're using Claude Desktop or Cursor:

- Your AI makes an MCP request - wants to read a file, query a database, whatever

- We intercept it immediately - before it leaves your machine

- Our threat detection kicks in - pattern matching plus ML models trained on real attack data

- We analyze the server's response - looking for data exfiltration patterns, malicious payloads, credential harvesting

- You get a popup if something's fishy - with actual details like "MCP server attempted to access ~/.ssh/id_rsa" instead of generic "threat detected" bullshit

The whole process adds about 5-15ms depending on request complexity. Fast enough that you won't notice, thorough enough to catch the bad actors.
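The pattern-matching half of the steps above can be sketched roughly like this. To be clear, this is not MCP Defender's actual code: the rule lists, the `Verdict` type, and `inspect_message` are all assumptions made up for illustration, and the ML side is left out entirely. It walks every string in a JSON-RPC request or response and flags sensitive paths and things that look like secrets, returning a specific detail message rather than a generic alert.

```python
import re
from dataclasses import dataclass
from typing import Any, Iterator, Optional

# Hypothetical rule sets; a real deployment would need far more than this.
SENSITIVE_PATHS = [
    re.compile(r"(^|/)\.ssh/"),
    re.compile(r"(^|/)\.aws/credentials$"),
    re.compile(r"(^|/)\.env(\.|$)"),
]
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "connection string with password": re.compile(r"\b\w+://[^\s:/@]+:[^\s@]+@"),
}


@dataclass
class Verdict:
    allowed: bool
    detail: Optional[str] = None


def iter_strings(value: Any) -> Iterator[str]:
    """Yield every string nested anywhere inside a JSON-like value."""
    if isinstance(value, str):
        yield value
    elif isinstance(value, dict):
        for item in value.values():
            yield from iter_strings(item)
    elif isinstance(value, list):
        for item in value:
            yield from iter_strings(item)


def inspect_message(message: dict) -> Verdict:
    """Check one JSON-RPC message (request or response) against both rule sets."""
    for text in iter_strings(message):
        for pattern in SENSITIVE_PATHS:
            if pattern.search(text):
                return Verdict(False, f"MCP traffic referenced sensitive path: {text}")
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(text):
                return Verdict(False, f"MCP traffic contained: {label}")
    return Verdict(True)


# Hypothetical tool call; "read_file" and "path" are illustrative names.
request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "read_file", "arguments": {"path": "~/.ssh/id_rsa"}},
}
print(inspect_message(request).detail)
```

Returning the matched path or pattern label in `detail` is what lets the popup say something specific. Plain regexes like these will throw false positives on their own, which is presumably why the real thing pairs them with trained models.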

What We Actually Protect

Currently works on macOS with version 1.1.2 (a Windows version exists, but we don't have Windows developers, so it's janky). Open source under AGPL-3.0 because security tools should be auditable, not black boxes.

Protected applications:

- Cursor - Their MCP implementation is actually pretty clean

- Claude Desktop - Anthropic's desktop app, the original target

- VS Code - With AI extensions, though support is hit-or-miss depending on the extension

- Windsurf - Generates more suspicious traffic than other tools for some reason

Built with Electron because we needed cross-platform desktop apps and didn't want to write native code for every OS. Uses about 80MB of RAM, which is annoying but less than Slack.