The Issue: Your kernel dies, but JupyterLab just sits there with a spinning indicator. No error message, no explanation, just eternal loading. This is Issue #4748, which has been plaguing users since 2019.

Why It Happens: Usually memory exhaustion (the OOM killer on Linux) or a crash inside the kernel process itself. JupyterLab 4.x improved error reporting, but it still fails to show a meaningful message when the operating system kills the kernel process outright. The Linux OOM killer documentation explains how memory exhaustion triggers process termination.

Quick Diagnosis Commands

## Check if process was killed by the OOM killer (Linux; the kernel log path varies by distro)

dmesg | grep -i "killed process"

grep "Out of memory" /var/log/kern.log

## Check JupyterLab server logs

jupyter lab --debug

## Monitor memory usage while running

htop -p "$(pgrep -d, -f jupyter-lab)" # htop wants comma-separated PIDs
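If you would rather scan a saved log than eyeball `dmesg` output, the same check can be sketched in Python. This is a minimal sketch, assuming the kernel log uses the common "Killed process PID (name)" wording; the helper name `find_oom_kills` and the sample log lines are made up for illustration, and real lines vary by kernel version.

```python
import re

# Matches the OOM killer's log line, e.g.
# "Out of memory: Killed process 4242 (python3) total-vm:..."
OOM_LINE = re.compile(r"Killed process (\d+) \(([^)]+)\)", re.IGNORECASE)


def find_oom_kills(log_text):
    """Return a list of (pid, process_name) for every OOM kill found in log_text."""
    return [(int(pid), name) for pid, name in OOM_LINE.findall(log_text)]


# Hypothetical sample shaped like typical kernel output (not a real log).
sample = (
    "[1234.5] Out of memory: Killed process 4242 (python3) total-vm:20971520kB\n"
    "[1240.1] usb 1-1: new high-speed USB device\n"
)
print(find_oom_kills(sample))  # [(4242, 'python3')]
```

Feed it the text of `dmesg` or your kernel log; if your kernel's PID shows up in the result, memory is the culprit.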

Immediate Fix: Open a terminal in JupyterLab and run:

jupyter kernelspec list

jupyter kernel --kernel=python3 --debug

This will show you the actual error messages that the UI hides from you. For more debugging techniques, check the Jupyter Server documentation and kernel management guide.

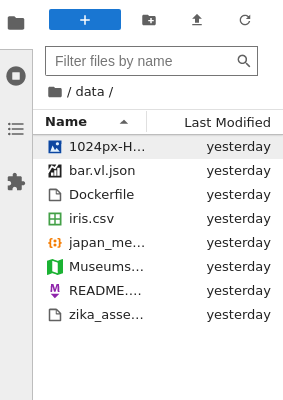

Memory Explosion: The 2GB CSV That Killed My Laptop

Real story: I loaded what I thought was a "small" 2GB CSV file. Pandas read it fine, but then I tried to display it in a cell. JupyterLab rendered every single row in the notebook interface, consuming 18GB of RAM and crashing my 16GB machine.

The Problem: JupyterLab's output rendering doesn't respect memory limits. Display a large DataFrame and watch your system die. This is a known issue with notebook output handling that affects all web-based notebook interfaces. The pandas memory optimization guide provides strategies for handling large datasets.

Solutions That Work:

## WRONG - will kill your browser

import pandas as pd

df = pd.read_csv('huge_file.csv')

df # Don't do this with large data

## RIGHT - limit display output

pd.set_option('display.max_rows', 20)

pd.set_option('display.max_columns', 10)

df.head() # Always use head() for large datasets
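Before loading anything into pandas, it can help to peek at the file first. The sketch below uses only the standard library (not pandas) to report the file size and the first few rows; `preview_csv` is a hypothetical helper name, and it assumes a plain comma-separated file with a header row.

```python
import csv
import os
from itertools import islice


def preview_csv(path, n_rows=5):
    """Return (size_in_bytes, header, first n_rows) without reading the whole file."""
    size = os.path.getsize(path)
    with open(path, newline="") as f:
        reader = csv.reader(f)
        header = next(reader, [])          # empty list if the file has no rows
        rows = list(islice(reader, n_rows))  # stops after n_rows, never scans the rest
    return size, header, rows


# Usage (the path is the same placeholder used above):
# size, header, rows = preview_csv("huge_file.csv")
# print(f"{size / 1e9:.1f} GB on disk, columns: {header}")
```

If the size on disk is already a large fraction of your RAM, plan on loading a subset of columns or reading in chunks rather than pulling in the whole thing.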

Emergency Memory Recovery:

## Clear all variables except essentials

%reset_selective -f "^(?!(df|important_var)$).*"

## Force garbage collection

import gc

gc.collect()

## List the variables that remain (name, type, and a short summary)

%whos
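`%whos` tells you what exists, not what is eating your RAM. A rough ranking by size can be sketched with `sys.getsizeof`. Treat this as an approximation: `largest_variables` is a hypothetical helper, and `sys.getsizeof` is shallow for plain containers such as lists and dicts, so nested data is undercounted.

```python
import sys


def largest_variables(namespace, top=5):
    """Rank names in a namespace by sys.getsizeof, largest first."""
    sizes = [
        (name, sys.getsizeof(value))
        for name, value in list(namespace.items())  # copy: safe if namespace changes
        if not name.startswith("_")                 # skip private/IPython internals
    ]
    return sorted(sizes, key=lambda item: item[1], reverse=True)[:top]


# In a notebook cell you would pass globals(); a small dict stands in here.
demo = {"small": [0], "big": list(range(10_000)), "_hidden": "x" * 10**6}
for name, size in largest_variables(demo):
    print(f"{name:>10}  {size:,} bytes")
```

In a notebook, `largest_variables(globals())` gives you a shortlist of names to `del` before calling `gc.collect()`.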

Extension Hell: When Your Beloved Extensions Break Everything

JupyterLab 4.4.7 broke about half the extensions I rely on. The extension compatibility tracker shows the carnage. Check the migration guide for breaking changes and the extension development docs for troubleshooting.

Debug Extension Issues:

## List all extensions and their status

jupyter labextension list

## Start with third-party extensions disabled (core mode)

jupyter lab --core-mode --no-browser

## If core mode works, disable suspects one at a time to find the culprit

jupyter labextension disable extension-name

## Re-enable the ones that turn out to be innocent

jupyter labextension enable extension-name
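With a long extension list, checking one at a time gets old fast. A binary search cuts the number of restarts roughly to log2(n). The sketch below is not a JupyterLab feature, just the bisection logic: `find_culprit` and `is_broken` are hypothetical names, and `is_broken` stands in for you enabling only that subset, reloading, and reporting whether the problem appears. It assumes a single extension is at fault.

```python
def find_culprit(extensions, is_broken):
    """Binary-search for one bad extension.

    is_broken(subset) must return True when JupyterLab misbehaves with
    only `subset` enabled. Assumes at most one extension is at fault.
    """
    candidates = list(extensions)
    while len(candidates) > 1:
        half = candidates[: len(candidates) // 2]
        # Keep whichever half still reproduces the problem.
        candidates = half if is_broken(half) else candidates[len(half):]
    # Confirm the last survivor really is broken before blaming it.
    if candidates and is_broken(candidates):
        return candidates[0]
    return None


# Hypothetical extension names; the lambda simulates "lsp" being the bad one.
names = ["jupyterlab-git", "variable-inspector", "lsp", "theme"]
print(find_culprit(names, lambda subset: "lsp" in subset))  # lsp
```

If two extensions only break in combination, bisection will not find them; fall back to the one-at-a-time approach in that case.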

Common Extension Failures:

- jupyterlab-git 0.41.x: Breaks with JupyterLab 4.4+ due to API changes

- Variable Inspector: Causes memory leaks with large objects

- LSP extensions: Conflict with each other, causing infinite loading

Nuclear Option: When everything's fucked, start fresh:

## Back up your settings

cp -r ~/.jupyter ~/.jupyter-backup

## Remove all extensions and config

jupyter lab clean --all

rm -rf ~/.jupyter/lab

jupyter lab build

The Webpack Build From Hell

"Building JupyterLab assets" - words that strike fear into every developer's heart. This process randomly fails, especially on systems with limited memory or slow disks.

Common Build Failures:

JavaScript heap out of memory:

## Increase Node.js memory limit

export NODE_OPTIONS="--max-old-space-size=8192"

jupyter lab build

Permission denied on extensions:

## Fix ownership issues (Linux/Mac)

sudo chown -R $USER ~/.jupyter

jupyter lab build --dev-build=False

Build just hangs forever:

## Kill all Node processes and try again

pkill -f "node.*jupyter"

jupyter lab clean --all

jupyter lab build --minimize=False # Faster, less optimized build

Port Conflicts and Proxy Hell

Running multiple JupyterLab instances? Congratulations, you're about to discover port conflict hell.

Check what's using your ports:

## Find what's on port 8888

lsof -i :8888

netstat -tulpn | grep 8888

## Stop the server cleanly first, then kill anything left over

jupyter server stop 8888

pkill -f jupyter-lab
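If you launch JupyterLab from scripts, you can check for a conflict before starting instead of cleaning up afterwards. Here is a minimal sketch using only the standard library; `port_in_use` and `first_free_port` are hypothetical helper names, and the check only looks at the loopback interface.

```python
import socket


def port_in_use(port, host="127.0.0.1"):
    """Return True if something is already listening on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(0.5)
        # connect_ex returns 0 on success instead of raising an exception.
        return s.connect_ex((host, port)) == 0


def first_free_port(start=8888, attempts=20, host="127.0.0.1"):
    """Return the first port in [start, start + attempts) with no listener, else None."""
    for port in range(start, start + attempts):
        if not port_in_use(port, host):
            return port
    return None


print(first_free_port())
```

Pass the result to `jupyter lab --port=<port>` so each instance gets a known port rather than whatever happens to be free.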

Reverse Proxy Issues: If you're running behind nginx or Apache, a proxy that doesn't forward WebSocket upgrade requests (often combined with SSL termination problems) will make your life miserable. The browser console will show "WebSocket connection failed" errors.

Fix nginx proxy config:

location /jupyter/ {

proxy_pass http://localhost:8888/jupyter/;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_read_timeout 86400;

}
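The nginx block above only works if the Jupyter server agrees about the `/jupyter/` prefix. A matching server config might look like the sketch below, assuming a config file at `~/.jupyter/jupyter_server_config.py` and the same port and path as the nginx example; adjust both sides together.

```python
# ~/.jupyter/jupyter_server_config.py
c = get_config()  # injected by Jupyter when it loads this file

c.ServerApp.base_url = "/jupyter/"   # must match the nginx location and proxy_pass path
c.ServerApp.ip = "127.0.0.1"         # only nginx should reach the server directly
c.ServerApp.port = 8888              # same port as proxy_pass
c.ServerApp.open_browser = False
c.ServerApp.trust_xheaders = True    # honor X-Real-IP / X-Forwarded-For from the proxy
```

A mismatch between `base_url` and the proxied path is a common reason the page loads but every kernel connection fails.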

Database Connection Disasters

Using SQL magic or database extensions? Prepare for connection timeout hell.

Common SQL Magic Failures:

## This will randomly fail

%sql SELECT * FROM huge_table

## Return results as DataFrames, hide the connection string, and cap query time (statement_timeout is PostgreSQL syntax)

%config SqlMagic.autopandas = True

%config SqlMagic.displaycon = False

%sql SET statement_timeout = '300s'

Connection Pool Exhaustion:

## Close connections properly or get "too many connections" errors

%sql COMMIT

%sql --close connection_name
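Outside of SQL magic, the same two habits (close every connection, never fetch a huge table in one go) can be sketched with the standard library. This example swaps in `sqlite3` so it runs anywhere; `stream_rows` is a hypothetical helper, and the demo table is created on the fly in a temporary file.

```python
import os
import sqlite3
import tempfile
from contextlib import closing


def stream_rows(db_path, query, batch_size=1000):
    """Yield rows batch by batch; the connection is closed when iteration ends."""
    with closing(sqlite3.connect(db_path)) as conn:
        with closing(conn.cursor()) as cur:
            cur.execute(query)
            while True:
                batch = cur.fetchmany(batch_size)  # at most batch_size rows in memory
                if not batch:
                    break
                yield from batch


# Build a throwaway database so the example is self-contained.
path = os.path.join(tempfile.mkdtemp(), "demo.db")
with closing(sqlite3.connect(path)) as conn:
    conn.execute("CREATE TABLE huge_table (n INTEGER)")
    conn.executemany("INSERT INTO huge_table VALUES (?)", [(i,) for i in range(2500)])
    conn.commit()

total = sum(1 for _ in stream_rows(path, "SELECT n FROM huge_table"))
print(total)  # 2500
```

With a real database driver the pattern is the same: wrap the connection in a context manager so it is released even when a cell raises, and iterate in batches instead of calling `fetchall()`.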

Time lost to this debugging: approximately 47 hours of my life I'll never get back. But now you don't have to lose yours. For comprehensive troubleshooting resources, bookmark the JupyterLab troubleshooting FAQ, Jupyter community forum, and Stack Overflow JupyterLab tag.