EC2 launched in August 2006 and changed everything. Before EC2, if you needed a server, you bought a physical box, waited weeks for delivery, and paid whether you used it or not. EC2 said "fuck that" - virtual servers in minutes, pay by the hour.

I've been using EC2 since 2008 and it's wild how something so simple - "rent a computer in the cloud" - can be so maddeningly complex. There are 500+ instance types now (I stopped counting at 200), at least 4 different pricing models, and enough networking options to make a Cisco engineer quit and become a barista.

How EC2 Actually Works (The Stuff That Matters)

An EC2 instance is just a virtual machine. AWS takes physical servers in their data centers, chops them up with hypervisors, and rents you slices. Your instance has CPU cores, RAM, storage, and network bandwidth - just like a physical server, but you can resize it without touching hardware.
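"Resize without touching hardware" still has a catch: for an EBS-backed instance you stop it, change the type, and start it again. Here's a minimal sketch of that flow, assuming `ec2` is a boto3 EC2 client (`boto3.client("ec2")`). The function name `resize_instance` is my own, and I pass the client in so you can swap in a fake for testing.

```python
def resize_instance(ec2, instance_id: str, new_type: str) -> None:
    """Stop an EBS-backed instance, change its type, and start it again.

    Assumes `ec2` is a boto3 EC2 client (or anything with the same methods).
    """
    # The instance type can only be changed while the instance is stopped.
    ec2.stop_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[instance_id])

    # InstanceType is passed as an attribute-value dict, not a bare string.
    ec2.modify_instance_attribute(
        InstanceId=instance_id,
        InstanceType={"Value": new_type},
    )

    ec2.start_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
```

Something like `resize_instance(boto3.client("ec2"), "i-0123456789abcdef0", "t3.large")` would do it (that instance ID is made up). Heads up: an auto-assigned public IP changes across a stop/start unless you use an Elastic IP.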

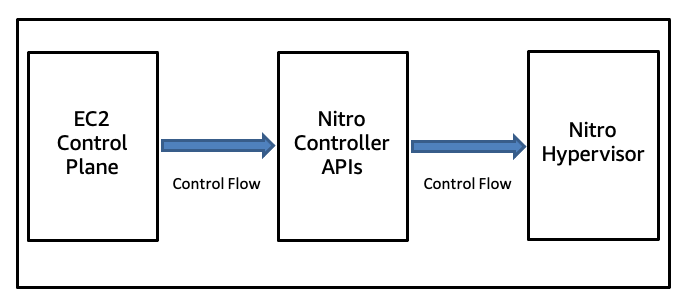

The magic happens in the AWS Nitro System - custom hardware that offloads virtualization, networking, and storage so your instances don't suck. You get performance close to bare metal without the headaches of managing physical hardware. The latest instances use sixth-generation Nitro cards that push up to twice the network and storage bandwidth of the previous generation, which actually matters if you move a lot of data.

The Good, Bad, and Ugly of EC2 Features

Too Many Instance Types: With 500+ instance types, picking the right one is overwhelming as hell. My advice? Start with t3.medium for web apps, c5.large for CPU-heavy work, or r5.large for memory-hungry databases (t is burstable, c is compute-optimized, r is memory-optimized). You can always resize later.
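The names look like noise until you see the pattern: family letter(s), generation number, optional attribute letters (like `i` for Intel or `g` for Graviton), then a size after the dot. A rough sketch of a parser for that common pattern follows. The regex, the `FAMILY_HINTS` table (only the four families mentioned above), and `STARTER_TYPES` are my own simplifications, and oddball names like the hyphenated high-memory types just come back as `None`.

```python
import re
from typing import NamedTuple, Optional

# Common pattern: <family><generation><attributes>.<size>, e.g. "m8i.large".
_PATTERN = re.compile(
    r"^(?P<family>[a-z]+)(?P<generation>\d+)(?P<attributes>[a-z-]*)\.(?P<size>[a-z0-9]+)$"
)

# Hypothetical lookup table covering only the families discussed above.
FAMILY_HINTS = {
    "t": "burstable general purpose",
    "m": "general purpose",
    "c": "compute optimized",
    "r": "memory optimized",
}

# Starting points from the advice above, not a sizing recommendation.
STARTER_TYPES = {"web": "t3.medium", "cpu": "c5.large", "memory": "r5.large"}


class InstanceType(NamedTuple):
    family: str
    generation: int
    attributes: str
    size: str


def parse_instance_type(name: str) -> Optional[InstanceType]:
    """Split a type name into its parts; None if it doesn't fit the pattern."""
    m = _PATTERN.match(name)
    if m is None:
        return None
    return InstanceType(m["family"], int(m["generation"]), m["attributes"], m["size"])


def describe(name: str) -> str:
    parsed = parse_instance_type(name)
    if parsed is None:
        return f"{name}: unrecognized"
    hint = FAMILY_HINTS.get(parsed.family, "other family")
    return f"{name}: {hint}, generation {parsed.generation}, size {parsed.size}"
```

So `describe("c5.large")` gives `"c5.large: compute optimized, generation 5, size large"`, and `parse_instance_type("m8i.large")` splits into family `m`, generation 8, attribute `i`, size `large`.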

AMIs Are Your Friend (When They Work): Amazon Machine Images are templates for your instances. Between AWS, the Marketplace, and community publishers there are thousands to choose from, but half are outdated and the good ones are buried in search results. Create your own AMI once you get an instance configured - it'll save you hours of setup time.
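Baking your own AMI is one API call (`CreateImage`). Below is a small sketch that builds the arguments for boto3's `create_image`. The helper name `ami_request` and the timestamped naming scheme are my own conventions, not anything AWS requires beyond the name being unique in your account and region.

```python
from datetime import datetime, timezone
from typing import Optional


def ami_request(instance_id: str, app: str,
                when: Optional[datetime] = None,
                no_reboot: bool = False) -> dict:
    """Build keyword arguments for the EC2 CreateImage call (hypothetical helper)."""
    when = when or datetime.now(timezone.utc)
    return {
        "InstanceId": instance_id,
        # AMI names must be unique per account and region, so stamp them.
        "Name": f"{app}-{when.strftime('%Y%m%d-%H%M%S')}",
        "Description": f"Pre-configured image for {app}",
        # By default EC2 reboots the instance so the snapshot is consistent;
        # NoReboot=True skips the reboot at the risk of a messy filesystem.
        "NoReboot": no_reboot,
    }
```

You'd pass it straight through: `boto3.client("ec2").create_image(**ami_request("i-0123456789abcdef0", "webapp"))` (made-up instance ID). I'd leave `NoReboot` off unless downtime really isn't an option.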

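EBS Storage Doesn't Suck: Elastic Block Store volumes are like external hard drives for your instances. They persist when you stop an instance and can outlive the instance itself (unlike instance store volumes, which survive a reboot but vanish into the ether when the instance stops or terminates). Use the gp3 volume type - it's up to 20% cheaper per GB than gp2 and gives every volume a 3,000 IOPS baseline, while gp2 only scales at 3 IOPS per GiB. Pro tip: enable encryption by default (it's a per-region account setting) or you'll forget and your security team will hunt you down.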
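Here's the gp2 vs gp3 baseline math as code, plus a block device mapping for an encrypted gp3 root volume. It ignores gp2's burst credits and uses the documented gp2 rule of 3 IOPS per GiB with a 100 IOPS floor and a 16,000 IOPS ceiling. The device name `/dev/xvda` is an assumption; the right one depends on your AMI.

```python
GP3_BASELINE_IOPS = 3_000  # included with every gp3 volume, whatever its size


def gp2_baseline_iops(size_gib: int) -> int:
    """gp2 baseline: 3 IOPS per GiB, floored at 100 and capped at 16,000."""
    return max(100, min(16_000, 3 * size_gib))


def root_volume_mapping(size_gib: int, device_name: str = "/dev/xvda") -> list:
    """BlockDeviceMappings entry for RunInstances: encrypted gp3 root volume.

    device_name is an assumption; check your AMI's root device name.
    """
    return [{
        "DeviceName": device_name,
        "Ebs": {
            "VolumeSize": size_gib,
            "VolumeType": "gp3",
            "Encrypted": True,
            "DeleteOnTermination": True,
        },
    }]
```

A 100 GiB gp2 volume gets a 300 IOPS baseline versus gp3's 3,000, and gp2 doesn't catch up until 1,000 GiB. For the account-wide setting, boto3's EC2 client has `enable_ebs_encryption_by_default()`, which you'd run once per region.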

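Security Groups vs. NACLs: Security groups are stateful firewalls - if traffic is allowed in one direction, the response comes back automatically. NACLs are stateless, pain-in-the-ass subnet-level filters: every packet is judged on its own, so you have to write explicit rules for return traffic, usually the ephemeral port range 1024-65535. Use security groups unless you enjoy masochistic networking.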
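The stateful/stateless difference is easier to see in a toy model than in the console. This is a simplified simulation, not how AWS implements either feature: real security groups also have outbound rules (allow-all by default), and real NACLs have numbered allow/deny rules evaluated in order. The sketch leaves outbound rules off the stateful filter to isolate the reply-traffic behavior.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass(frozen=True)
class Packet:
    src: str
    src_port: int
    dst: str
    dst_port: int

    def reply(self) -> "Packet":
        # The response swaps source and destination.
        return Packet(self.dst, self.dst_port, self.src, self.src_port)


@dataclass
class StatefulFilter:
    """Security-group-like toy: remembers accepted inbound flows."""
    inbound_ports: Set[int]
    flows: Set[Packet] = field(default_factory=set)

    def allow_inbound(self, pkt: Packet) -> bool:
        if pkt.dst_port in self.inbound_ports:
            self.flows.add(pkt)  # track the connection
            return True
        return False

    def allow_outbound(self, pkt: Packet) -> bool:
        # No outbound rules in this toy: only replies to tracked flows pass.
        return pkt.reply() in self.flows


@dataclass
class StatelessFilter:
    """NACL-like toy: judges every packet on its own, with no memory."""
    inbound_ports: Set[int]
    outbound_ports: Set[int]

    def allow_inbound(self, pkt: Packet) -> bool:
        return pkt.dst_port in self.inbound_ports

    def allow_outbound(self, pkt: Packet) -> bool:
        return pkt.dst_port in self.outbound_ports
```

Take an HTTPS request `Packet("203.0.113.5", 51515, "10.0.1.10", 443)`. The stateful filter lets its reply out because it remembers the flow. The stateless one with only port 443 open drops the reply, which is headed to client port 51515, until you add the whole 1024-65535 range to `outbound_ports`.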

Auto Scaling: Auto Scaling adds instances when a metric like average CPU is high and terminates them when it's low, based on the scaling policy you set. Works great until it freaks out and launches 50 instances because CloudWatch metrics were delayed by 30 seconds.
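Two settings help with the 50-instance freakout: a hard `MaxSize` on the group, which is the real cap, and a warmup period so instances that are still booting count toward capacity instead of triggering another round of scale-out. This is a sketch of the request arguments for boto3's `put_scaling_policy` and `update_auto_scaling_group` on the `autoscaling` client. The helper names, the 50% CPU target, and the 300-second warmup are placeholder assumptions, not tuned values.

```python
def target_tracking_policy(group: str, target_cpu: float = 50.0,
                           warmup_seconds: int = 300) -> dict:
    """Kwargs for PutScalingPolicy: keep average group CPU near target_cpu."""
    if not 0 < target_cpu < 100:
        raise ValueError("target_cpu must be between 0 and 100")
    return {
        "AutoScalingGroupName": group,
        "PolicyName": f"{group}-cpu-{int(target_cpu)}",
        "PolicyType": "TargetTrackingScaling",
        # Seconds until a new instance contributes to the metric; instances
        # still warming up count toward capacity, damping repeat scale-outs.
        "EstimatedInstanceWarmup": warmup_seconds,
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            "TargetValue": float(target_cpu),
        },
    }


def group_limits(group: str, min_size: int, max_size: int) -> dict:
    """Kwargs for UpdateAutoScalingGroup: the hard ceiling on a runaway scale-out."""
    if not 0 <= min_size <= max_size:
        raise ValueError("need 0 <= min_size <= max_size")
    return {"AutoScalingGroupName": group, "MinSize": min_size, "MaxSize": max_size}
```

With boto3 that would be `client.put_scaling_policy(**target_tracking_policy("web-asg"))` and `client.update_auto_scaling_group(**group_limits("web-asg", 2, 10))`, where `client = boto3.client("autoscaling")` and `web-asg` is a made-up group name.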

Performance Reality Check

The new M8i instances with Intel Xeon 6 chips felt noticeably faster than M7i for most stuff I threw at them. In my informal testing with a basic WordPress site, NGINX was clearly snappier, though my setup was too basic to put a number on it. PostgreSQL queries felt faster too, though I didn't run proper benchmarks because who has time for that shit.

Performance can be inconsistent - the "noisy neighbor" problem is real. Sometimes your instance runs like butter, sometimes it's slower because someone else's workload is hammering the underlying host. This is why dedicated hosts exist, but they're expensive as hell.
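If you do want a number instead of a vibe, the minimum is repeated runs and a look at the spread, not just the average. A noisy neighbor shows up as a high p95 relative to the median and a high coefficient of variation. Here's a bare-bones timing harness; `fn` is a stand-in for whatever request or query you care about, and the run counts are arbitrary defaults.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], runs: int = 50, warmup: int = 5) -> Dict[str, float]:
    """Time repeated calls to fn; report median and p95 in ms, plus spread (CV)."""
    if runs < 2:
        raise ValueError("need at least 2 runs for percentiles")
    for _ in range(warmup):
        fn()  # warm caches and connection pools before measuring
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(samples, n=20)[-1]
    mean = statistics.mean(samples)
    cv = statistics.stdev(samples) / mean if mean > 0 else 0.0
    return {"median_ms": statistics.median(samples), "p95_ms": p95, "cv": cv}
```

Run the same harness on both instance types at a few different times of day. If the CV jumps around between runs on the same instance, that's your noisy neighbor, not the CPU generation.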

Scale-wise, you can get instances with hundreds of vCPUs and up to 24TB of RAM if you hate money. Most apps work fine on much smaller instances - don't fall into the "bigger is better" trap until you actually need it.

Why Big Companies Use EC2 (And Why You Should Too)

Companies like Netflix and Airbnb run massive chunks of their infrastructure on EC2. Netflix serves 200+ million subscribers and Airbnb handles millions of bookings. If EC2 can handle that scale, your little app will be fine.

Compliance auditors cream their pants over AWS - it has just about every certification and attestation known to mankind: PCI DSS Level 1, SOC 1/2/3, ISO 27001, HIPAA eligibility, the works. This matters if you handle credit cards, health data, or work with paranoid enterprises.

AWS runs EC2 everywhere - 38 regions and over 100 availability zones last I checked. This means you can put your app close to your users and spread it across availability zones to survive data center outages. Pro tip: Don't pick a region just because it's cheap - factor in data transfer costs and latency to your users.
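Here's the "cheap region" trap as arithmetic. Every price and latency below is made up for illustration, and `region-a`/`region-b` are placeholders, not real AWS regions. Plug in actual numbers from the pricing pages and your own latency measurements. The 730 hours per month is the usual approximation for monthly pricing.

```python
from dataclasses import dataclass
from typing import List, Optional

HOURS_PER_MONTH = 730  # common approximation for monthly on-demand pricing


@dataclass(frozen=True)
class RegionQuote:
    name: str                  # placeholder name, not a real region
    instance_hourly_usd: float  # hypothetical on-demand rate
    egress_per_gb_usd: float    # hypothetical data-transfer-out rate
    p50_latency_ms: float       # your measured latency to users (assumed input)


def monthly_cost(q: RegionQuote, instances: int, egress_gb: float) -> float:
    compute = q.instance_hourly_usd * HOURS_PER_MONTH * instances
    transfer = q.egress_per_gb_usd * egress_gb
    return round(compute + transfer, 2)


def pick_region(quotes: List[RegionQuote], instances: int, egress_gb: float,
                max_latency_ms: float) -> Optional[RegionQuote]:
    """Cheapest region by total cost among those fast enough for your users."""
    eligible = [q for q in quotes if q.p50_latency_ms <= max_latency_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda q: monthly_cost(q, instances, egress_gb))
```

With `region-a` at $0.040/hour and $0.09/GB, and `region-b` at $0.036/hour and $0.12/GB, four instances pushing 5,000 GB out a month cost $566.80 in region-a versus $705.12 in region-b. The "cheaper" instances lose once transfer is counted, and that's before latency enters the picture.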

The key to EC2 success isn't just understanding how it works - it's picking the right instance type for your workload. With over 500 options ranging from tiny burstable instances to monster machines with hundreds of cores, that choice can make or break your application's performance and your budget.