Computer Use sounds amazing on paper - point it at any interface and it figures out what to click. In reality, it's like watching your grandfather use a smartphone. Every single action requires a screenshot, 5 seconds of thinking, another screenshot to see what happened, then 3 more tries when it clicked the wrong button.

I tested this on our internal CRM system (Salesforce Classic, because legacy hell), and a simple "create contact, add notes, set follow-up" workflow that takes me 45 seconds consistently took Computer Use 12-18 minutes. That's not a typo.

How This Actually Works (Spoiler: Slowly)

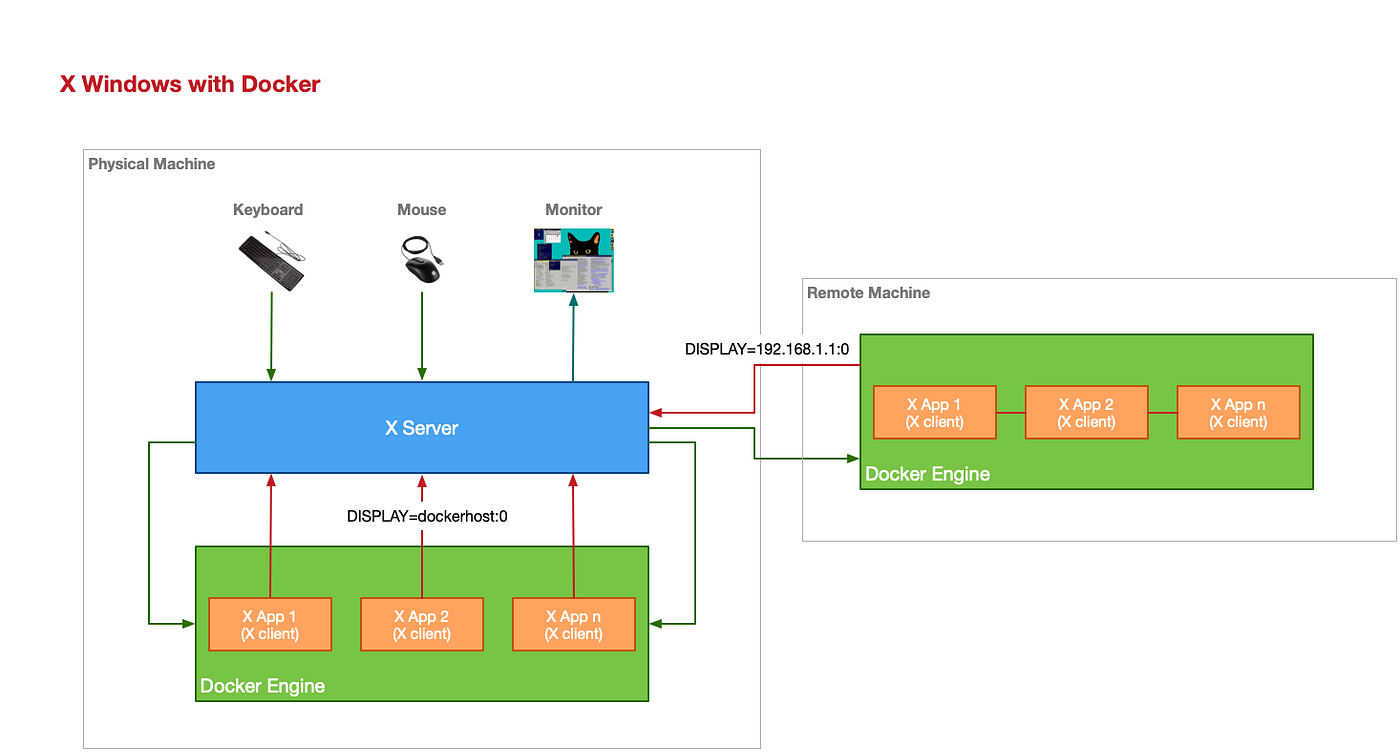

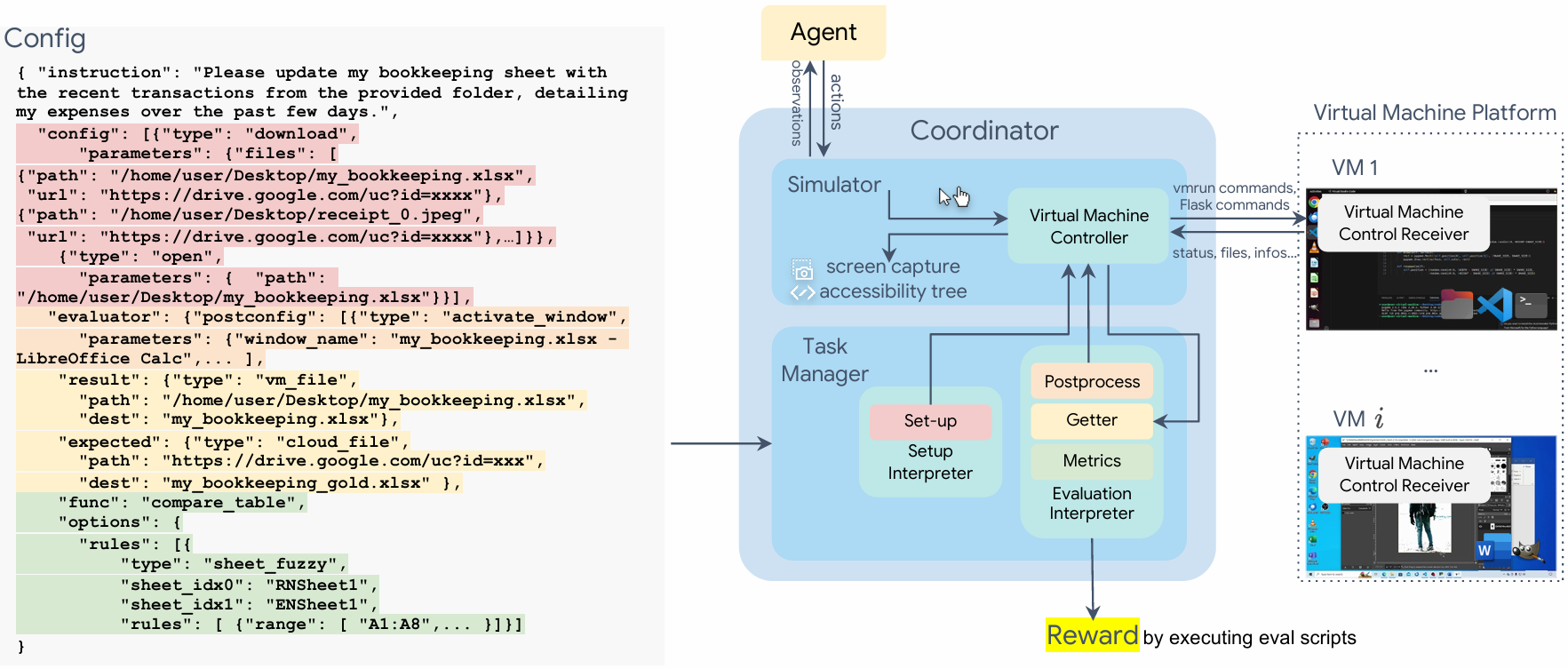

The OSWorld environment architecture - the virtual-machine benchmark setup that agents like Computer Use get evaluated in, not what Computer Use runs on in production

Look, the whole process is fucked. Computer Use works by taking screenshots, staring at them for what feels like forever, then clicking somewhere. Usually the wrong somewhere. The cycle is: screenshot → 3-5 seconds of "thinking" → click → another screenshot to verify it fucked up → repeat. On complex workflows, you'll watch it take 50+ screenshots for tasks that should need 5 clicks.
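To make that cycle concrete, here's a minimal sketch of the screenshot → think → act loop in Python. It is not Anthropic's implementation: `take_screenshot`, `ask_model`, and `perform` are hypothetical stand-ins for your screen capture, the model call, and your mouse/keyboard driver, and `Action` is a made-up action type. The point is structural: every step pays for a fresh screenshot plus a full model round trip, even when the action is a single click.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    kind: str      # "click", "type", or "done" (hypothetical action vocabulary)
    x: int = 0
    y: int = 0
    text: str = ""


def run_agent(
    take_screenshot: Callable[[], bytes],
    ask_model: Callable[[bytes], Action],
    perform: Callable[[Action], None],
    max_steps: int = 50,
) -> int:
    """Screenshot -> think -> act loop; returns how many screenshots were taken."""
    screenshots = 0
    for _ in range(max_steps):
        shot = take_screenshot()   # every step starts by looking at the screen again
        screenshots += 1
        action = ask_model(shot)   # the slow part: a full model round trip per step
        if action.kind == "done":
            break
        perform(action)            # click or type, then loop back to check the result
    return screenshots


# Usage with fake stand-ins: two clicks, then the model says it's done.
plan = iter([Action("click", 120, 340), Action("click", 400, 90), Action("done")])
performed: list[Action] = []
print(run_agent(lambda: b"fake-png", lambda shot: next(plan), performed.append))  # 3
```

Two clicks cost three screenshots and three model calls, because the loop needs one more look at the screen just to decide it's finished.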

Each screenshot costs about 1,200 tokens with Claude 3.5 Sonnet (the only model that actually works for this), which is roughly $0.0036 per screenshot at current pricing. Doesn't sound like much until you realize a simple "fill out this form" task burns through 30 screenshots and costs $0.11 just in image processing.
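The arithmetic is easy to check. This sketch assumes $3 per million input tokens (Claude 3.5 Sonnet's list price; verify current pricing) and uses Anthropic's documented rule of thumb that an image which isn't resized costs roughly width × height / 750 tokens. By that rule a 1280x800 screenshot is about 1,365 tokens, a bit above the ~1,200 figure above, so treat all of these as ballpark numbers.

```python
PRICE_PER_MTOK_INPUT = 3.00  # USD per million input tokens (assumed; check current pricing)


def image_tokens(width: int, height: int) -> int:
    """Rough token estimate for an image that isn't resized: width * height / 750."""
    return round(width * height / 750)


def image_cost(tokens: int, screenshots: int = 1) -> float:
    """Input cost in USD for `screenshots` images of `tokens` tokens each."""
    return tokens * screenshots * PRICE_PER_MTOK_INPUT / 1_000_000


print(image_tokens(1280, 800))          # 1365
print(round(image_cost(1200), 4))       # 0.0036 per screenshot
print(round(image_cost(1200, 30), 3))   # 0.108 for a 30-screenshot form fill
```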

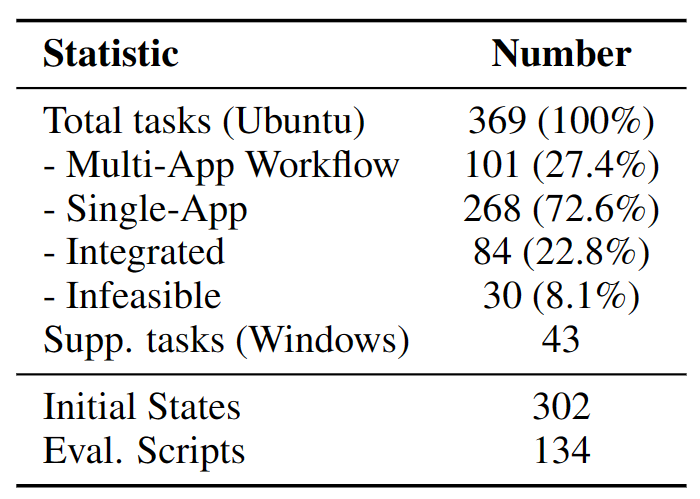

The OSWorld benchmark research proves what anyone who's used this knows: Computer Use takes 1.4-2.7× more steps than a human would. But here's what the research doesn't capture - the real failure modes that'll make you want to throw your laptop.

Real performance benchmarks from the original OSWorld paper showing how badly computer-use agents struggle with even basic tasks - humans achieve a 72.36% success rate while the best model hit only 12.24%

What Actually Breaks (And How Often)

Forget the polished demos. Here's what happens in the real world:

Popup Hell: Any modal dialog, cookie banner, or "Subscribe to our newsletter!" popup instantly confuses Claude. I watched it click empty space for 2 minutes straight when a Chrome update notification appeared mid-task. Success rate with popups: basically zero.

Dynamic Content Disaster: Tried automating our project management tool (ClickUp) where content loads as you scroll. Computer Use would start clicking buttons that hadn't loaded yet, or click outdated positions after new content shifted everything down. Epic failures on anything that isn't completely static.
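One mitigation you can bolt onto your own harness (I'm not claiming Computer Use does this for you) is to wait until the screen stops changing before handing a screenshot to the model. The sketch below treats the screen as settled once a few consecutive captures hash identically. `wait_until_stable` and the `take_screenshot` callable are hypothetical, and exact-hash comparison is a simplifying assumption that a blinking cursor or spinner will defeat.

```python
import hashlib
import time
from typing import Callable


def wait_until_stable(
    take_screenshot: Callable[[], bytes],
    required_matches: int = 3,
    interval_s: float = 0.5,
    timeout_s: float = 10.0,
) -> bytes:
    """Return a screenshot once `required_matches` consecutive captures are identical."""
    deadline = time.monotonic() + timeout_s
    shot = take_screenshot()
    last_digest = hashlib.sha256(shot).digest()
    streak = 1  # consecutive identical captures seen so far, including this one
    while streak < required_matches:
        if time.monotonic() > deadline:
            raise TimeoutError("screen never settled; content is still loading or animating")
        time.sleep(interval_s)
        shot = take_screenshot()
        digest = hashlib.sha256(shot).digest()
        streak = streak + 1 if digest == last_digest else 1
        last_digest = digest
    return shot


# Usage with fake frames: the page shifts twice, then holds still.
frames = iter([b"loading", b"half-loaded", b"loaded", b"loaded", b"loaded"])
print(wait_until_stable(lambda: next(frames), interval_s=0))  # b'loaded'
```

In practice you'd compare a downscaled or cropped region, or tolerate a small pixel difference, rather than demand byte-identical frames. It also costs extra wall-clock time, which this workflow can least afford.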

Resolution Roulette: Testing on different monitor setups revealed the nastiest gotcha - coordinate calculations break on high-DPI displays. Had to set everything to 1280x800 like it's 2005, and even then it clicks 10-20 pixels off target about 30% of the time. The official docs barely mention this critical limitation.
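A likely contributor to those off-target clicks is a coordinate-space mismatch: the model picks coordinates in the image it was shown, and if that image was downscaled from the real screen (or the OS reports logical rather than physical pixels on a high-DPI display), the coordinates have to be mapped back before clicking. A minimal sketch, where `scale_to_screen` is a hypothetical helper and 2560x1600 is just an example high-DPI resolution:

```python
def scale_to_screen(
    x: int,
    y: int,
    model_size: tuple[int, int] = (1280, 800),
    screen_size: tuple[int, int] = (2560, 1600),
) -> tuple[int, int]:
    """Map a point from the screenshot the model saw to real screen pixels."""
    model_w, model_h = model_size
    screen_w, screen_h = screen_size
    # Scale each axis independently; a different aspect ratio will stretch the mapping.
    return round(x * screen_w / model_w), round(y * screen_h / model_h)


print(scale_to_screen(640, 400))                            # (1280, 800)
print(scale_to_screen(640, 400, screen_size=(1920, 1080)))  # (960, 540)
```

Forgetting the mapping entirely puts every click at half the intended coordinates on a 2x display. Running the screen at the model's resolution, as with the 1280x800 workaround, makes the mapping the identity.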

Browser Chaos: Different browsers = different failure modes. Works "okay" in Chrome, breaks spectacularly in Firefox (font rendering differences mess up element detection), and Safari? Don't even try. Browser compatibility is officially "experimental" which is corporate speak for "broken".

The real success rates from my testing:

- Simple stuff (opening files): ~75%

- Web forms without JavaScript: ~60%

- Anything with dynamic content: ~15%

- Multi-step workflows: ~8% (not a typo)
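Those numbers hang together if you assume errors compound. As a deliberately simplified illustration (each step succeeds independently with the same probability, which real workflows don't strictly satisfy), a 75% per-step success rate falls to about 7.5% over nine steps:

```python
def workflow_success(per_step: float, steps: int) -> float:
    """Chance an n-step workflow succeeds if each step independently succeeds with per_step."""
    return per_step ** steps


for steps in (1, 3, 5, 9):
    print(f"{steps} steps: {workflow_success(0.75, steps):.1%}")
```

That prints 75.0%, 42.2%, 23.7%, and 7.5%: even a decent per-action hit rate gets crushed once a task needs a handful of actions in a row.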

Why Everything Takes 20x Longer Than It Should

Simple math: a task a human completes in 30 seconds takes Computer Use 15-20 minutes on average, which is a 30-40x slowdown. The worst case I documented was a 2-minute manual process that cost $12 in API calls and took 47 minutes to complete (and still got the date format wrong).

The Screenshot Tax: Every screenshot burns ~1,200 tokens. Complex workflows can hit 80+ screenshots. At $0.003 per 1K tokens, that's $0.29 just for Claude to "see" what it's doing. Then add reasoning costs, retry costs when it fails...
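The $0.29 figure is actually the optimistic case. The Messages API is stateless, so an agent loop that keeps its full history resends every earlier screenshot with each new request: turn k carries k screenshots. This sketch assumes the same ~1,200 tokens per screenshot and $0.003 per 1K input tokens, and ignores text tokens, output tokens, prompt caching, and any history pruning your harness might do.

```python
TOKENS_PER_SCREENSHOT = 1_200   # the rough per-screenshot figure used above
PRICE_PER_1K_INPUT = 0.003      # USD per 1K input tokens, as above


def screenshot_tokens(steps: int, resend_history: bool) -> int:
    """Input tokens spent on screenshots alone over `steps` turns."""
    if not resend_history:
        return steps * TOKENS_PER_SCREENSHOT
    # Turn k resends all k screenshots so far: 1 + 2 + ... + steps of them.
    return steps * (steps + 1) // 2 * TOKENS_PER_SCREENSHOT


for resend in (False, True):
    tokens = screenshot_tokens(80, resend)
    cost = tokens / 1_000 * PRICE_PER_1K_INPUT
    print(f"resend_history={resend}: {tokens:,} tokens, ${cost:.2f}")
```

For 80 steps that's $0.29 without history versus about $11.66 with it. That lands in the same order of magnitude as the $12 worst case above, though this is an illustration, not a reconstruction of that run.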

Retry Hell: When Computer Use fucks up (which is often), it doesn't give up gracefully. I've seen it attempt the same failed click 8 times in a row before moving on. Each retry costs full token amounts.
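If you run your own agent loop, a cheap guard is to stop when the model proposes the identical action several times in a row instead of paying for an eighth retry. `RepeatGuard` is a hypothetical helper, and matching on exact coordinates is a simplification: a model that retries a few pixels off will slip past it.

```python
from collections import deque
from typing import Hashable


class RepeatGuard:
    """Signals an abort once the same action appears max_repeats times in a row."""

    def __init__(self, max_repeats: int = 3) -> None:
        self.max_repeats = max_repeats
        self.recent: deque = deque(maxlen=max_repeats)  # only the last N actions are kept

    def should_abort(self, action: Hashable) -> bool:
        self.recent.append(action)
        return len(self.recent) == self.max_repeats and len(set(self.recent)) == 1


guard = RepeatGuard(max_repeats=3)
for attempt in range(1, 9):
    if guard.should_abort(("click", 412, 300)):
        print(f"giving up after {attempt} identical attempts")  # triggers on attempt 3
        break
```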

Network Latency Reality: Every action requires a round trip to Anthropic's API servers. If you're not on the west coast, add 200-300ms per action. 50 actions = 10-15 seconds just in network overhead. API rate limits make this worse during peak hours.

Model Version Reality Check

In my testing, the model that actually worked was Claude 3.5 Sonnet. The older Claude 3 Opus and Haiku can't use Computer Use at all. Newer models, such as Claude 3.7 Sonnet and the Claude 4 family (Sonnet 4, Opus 4), also support the computer use tool, so check the model comparison table for the current list before picking one.

Even the October 2024 release of 3.5 Sonnet, the version Computer Use launched with, is still shit at handling:

- High-resolution displays (requires 1280x800 workaround)

- Dynamic content that moves after page load

- Any popup or modal that appears unexpectedly

- Scrolling to find elements not currently visible