Look, I've been through three enterprise AI deployments in the past two years. Claude Enterprise isn't special - it's the same pattern as every other enterprise software package. Marketing promises the moon, sales engineering shows you cherry-picked demos, and then you discover the reality during implementation.

The 500K Context Window - Actually Useful But...

The 500K context window is legitimately good. It handles entire codebases, giant specification documents, and those 100-page compliance reports your legal team loves. I tested it with our 50K-line Python monolith and it actually understood the architecture patterns. Anthropic's technical documentation confirms this works with files up to 150,000 words.
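Before you dump a whole repo into one request, do a back-of-envelope check on whether it even fits. Here's a minimal Python sketch. It assumes the common rule of thumb of roughly 4 characters per token, which is an approximation and not Claude's actual tokenizer. It also reserves an arbitrary 8K tokens for the response. `estimate_tokens`, `fits_in_context`, and `collect_sources` are hypothetical helpers, not part of any Anthropic SDK.

```python
import os

# Assumption: ~4 characters per token. Real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 500_000  # the advertised Enterprise window, in tokens


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts, limit=CONTEXT_LIMIT, reserve_for_output=8_000):
    """Return (fits, estimated_tokens) for a batch of files or documents."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve_for_output <= limit, total


def collect_sources(root, exts=(".py",)):
    """Read every file under root whose name ends with one of exts."""
    texts = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(exts):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    texts.append(f.read())
    return texts


if __name__ == "__main__":
    # Hypothetical codebase: 50,000 lines at an assumed 40 characters each
    fake_codebase = ["x" * 40] * 50_000
    ok, tokens = fits_in_context(fake_codebase)
    print(ok, tokens)  # prints: False 500000
```

Under those assumptions, a 50K-line codebase lands right at about 500K tokens. That means real line lengths, comments, and blank lines decide whether it fits. When the exact number matters, use the provider's own token counting.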

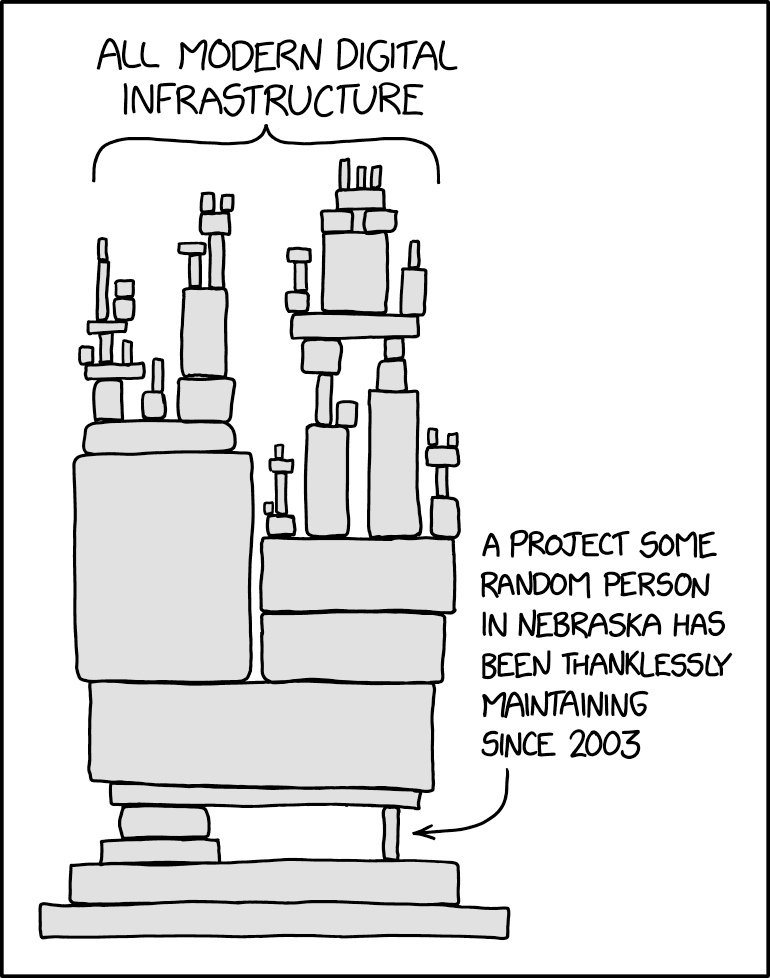

But here's what they don't tell you: really large requests fail after about 45 seconds with error code 429 ("Rate limit exceeded"). Feed it a full codebase plus documentation and you'll hit bullshit rate limits that aren't documented anywhere. I spent my entire Saturday debugging why our 180K-line Python monolith kept returning "Request timeout after 60000ms" errors. The context window exists, but the infrastructure behind it will fuck you over during peak usage.
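If you're going to live with undocumented rate limits, at least retry sanely. This is a minimal sketch of capped exponential backoff with full jitter. It assumes you wrap whatever your client raises on a 429 or a timeout in the hypothetical `RetryableError`. Map that to your SDK's actual exception classes. `call_with_backoff` is likewise a hypothetical helper.

```python
import random
import time


class RetryableError(Exception):
    """Hypothetical stand-in for the rate-limit (429) or timeout errors your client raises."""


def call_with_backoff(fn, max_attempts=5, base_delay=2.0, max_delay=60.0, sleep=time.sleep):
    """Call fn(), retrying on RetryableError with capped exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delay ceiling doubles each attempt (2s, 4s, 8s, ...) up to max_delay;
            # the actual wait is a random point below it so clients don't retry in lockstep.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_request():
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise RetryableError("429: Rate limit exceeded")
        return "ok"

    # Skip real sleeping for the demo
    print(call_with_backoff(flaky_request, sleep=lambda s: None))  # prints: ok
```

Backoff only helps with rate limiting and transient load. A request that's simply too big to finish inside the 60-second timeout will keep failing no matter how politely you retry it. Split that one into smaller chunks instead.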

Performance benchmarks show 30-60 second response times for complex queries, not the snappy responses you get from regular Claude. During their November 15, 2024 outage, Enterprise customers went down for 3.5 hours with everyone else - so much for "enterprise-grade reliability."

The analytics dashboard is one of the few enterprise features that actually delivers useful data. It shows usage metrics and spending patterns. Unlike most enterprise software dashboards, which are full of useless vanity metrics, it tells you which teams are burning through your AI budget and what they're spending it on.

Also, that "dedicated infrastructure" they promise? It's still shared. You just get higher priority in the queue, and that's why the November outage took Enterprise customers down along with everyone else.

GitHub Integration - Beta Forever

The GitHub integration is in beta, and it feels like it'll stay in beta forever. It works great on simple repos with standard structures. But if you have:

- Complex monorepo setups with multiple package.json files

- Custom build systems that aren't standard webpack/vite

- Repository permissions that aren't straightforward owner/contributor

- More than 10GB of repository data

...it'll shit the bed spectacularly. Our DevOps team spent three weeks debugging why Claude kept throwing "Error: Resource not accessible by integration" on our microservices setup. Turns out it chokes hard on Git submodules and symlinked directories and fails with "404: Repository structure not supported".
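If you want to know whether a repo is going to blow up before your DevOps team burns three weeks on it, run a cheap pre-flight scan for the traits listed above. This is a minimal Python sketch, assuming a local checkout. `preflight` is a hypothetical helper. It only measures working-tree size, not Git history, so treat the 10GB check as a lower bound.

```python
import os

TEN_GB = 10 * 1024 ** 3  # the repository-size threshold from the list above


def preflight(repo_root):
    """Flag the repository traits listed above (submodules, symlinks, monorepos, size)."""
    findings = []
    package_jsons = 0
    total_bytes = 0
    if os.path.exists(os.path.join(repo_root, ".gitmodules")):
        findings.append("git submodules (.gitmodules present)")
    # os.walk does not follow symlinked directories by default, so nothing is counted twice
    for dirpath, dirnames, filenames in os.walk(repo_root):
        if ".git" in dirnames:
            dirnames.remove(".git")  # prune Git's own metadata from the walk
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                findings.append(f"symlink: {os.path.relpath(path, repo_root)}")
        for name in filenames:
            path = os.path.join(dirpath, name)
            if name == "package.json":
                package_jsons += 1
            if not os.path.islink(path):
                total_bytes += os.path.getsize(path)
    if package_jsons > 1:
        findings.append(f"monorepo: {package_jsons} package.json files")
    if total_bytes > TEN_GB:
        findings.append(f"size: {total_bytes / 1024 ** 3:.1f} GB of working-tree data")
    return findings
```

An empty list doesn't guarantee the integration will behave. A non-empty one is a good reason to test on a small repo before pointing the integration at the whole org.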

The GitHub API limitations hit you when scanning large organizations - we maxed out at 5,000 API calls per hour trying to index our 200+ repositories.
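The practical workaround is to read GitHub's rate-limit headers and pace yourself instead of hammering the API. Here's a minimal sketch using the documented `x-ratelimit-remaining` and `x-ratelimit-reset` response headers. The helper names are hypothetical. The 50-calls-per-repo figure in the example is an assumption for illustration, not a measurement from our setup.

```python
import time


def seconds_until_next_call(headers, now=None, min_remaining=1):
    """Return how long to pause before the next GitHub REST call (0.0 if budget remains).

    x-ratelimit-remaining: calls left in the current window.
    x-ratelimit-reset: when the window resets, in UTC epoch seconds.
    """
    now = time.time() if now is None else now
    # HTTP header names are case-insensitive; normalize before lookup
    h = {k.lower(): v for k, v in headers.items()}
    remaining = int(h.get("x-ratelimit-remaining", min_remaining))
    reset_at = int(h.get("x-ratelimit-reset", now))
    if remaining >= min_remaining:
        return 0.0
    return max(0.0, float(reset_at - now))


def hours_to_index(repo_count, calls_per_repo, hourly_limit=5_000):
    """Back-of-envelope: hours of API budget needed to scan repo_count repositories."""
    return repo_count * calls_per_repo / hourly_limit


if __name__ == "__main__":
    exhausted = {"X-RateLimit-Remaining": "0", "X-RateLimit-Reset": "1700000600"}
    print(seconds_until_next_call(exhausted, now=1700000000))  # prints: 600.0
    print(hours_to_index(200, 50))  # prints: 2.0
```

At an assumed 50 calls per repository, 200 repos need about two hours' worth of a 5,000-per-hour budget. A single indexing run can't finish inside one rate-limit window, so plan for it to pause and resume.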

The permissions model is also fucked. Repository access is all-or-nothing: you can't give Claude access to just the documentation directory or exclude sensitive config files, so it sees everything or nothing. GitHub's fine-grained permissions exist, but Claude Enterprise doesn't use them yet. Even those only scope access per repository, not per directory. Your security team will hate this, especially if you have sensitive configuration files scattered throughout your repos.
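Until the integration supports path-level scoping, the only real control is filtering files yourself before anything reaches the model. Here's a minimal sketch of that allowlist/denylist, assuming repo-relative POSIX paths. The patterns and `allowed` are hypothetical and not a Claude Enterprise setting.

```python
from fnmatch import fnmatch

# Hypothetical policy: only expose docs/, and never anything that looks like a secret.
# Note: fnmatch's "*" also matches "/", so "*.pem" catches .pem files at any depth.
INCLUDE_PREFIXES = ["docs/"]
EXCLUDE_PATTERNS = ["*.env", "*.pem", "*.key", "*/secrets/*"]


def allowed(path, include_prefixes=INCLUDE_PREFIXES, exclude_patterns=EXCLUDE_PATTERNS):
    """True if path is under an included prefix (when any are set) and matches no exclusion."""
    if include_prefixes and not any(path.startswith(p) for p in include_prefixes):
        return False
    return not any(fnmatch(path, pat) for pat in exclude_patterns)


if __name__ == "__main__":
    for p in ["docs/guide.md", "docs/deploy/.env", "src/app.py"]:
        print(p, allowed(p))
```

Denylists leak the moment someone names a secrets file creatively. If your security team has a say, start from the narrow include list and treat the exclusions as a backstop.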

The Real Performance Story

Those "2-10x productivity improvements" from Altana? That's marketing math. Here's what actually happens:

Month 1: Everyone's excited, productivity spikes because it's new and shiny

Month 3: Reality sets in, people notice the limitations and start working around them

Month 6: Usage drops to 30-40% of initial levels as people figure out what it's actually good for

The real productivity gain comes from code reviews and documentation generation. Claude Enterprise is genuinely better at understanding large codebases than GPT-4, and it catches architectural issues that human reviewers miss. Check out GitHub's research on AI coding assistants for realistic expectations - most tools show 20-30% improvement in specific tasks, not magical 10x gains.

But don't expect miracles. Stanford's recent study on enterprise AI adoption shows similar patterns across all AI tools - initial enthusiasm followed by reality checks. The McKinsey Global Institute report suggests 13% productivity improvements are more realistic for knowledge work.

Anthropic's security documentation is actually solid, unlike most enterprise vendors'. Their user permission model (a separate thing from the GitHub repository access problem above) and their audit logging work as advertised. The SOC 2 Type II compliance and encryption standards meet enterprise requirements. Credit where credit is due: they didn't completely fuck up the security implementation.