Python 3.13 dropped October 7, 2024, and after testing it in staging for months, the performance picture is crystal fucking clear.

The experimental features everyone was hyped about have real production data now, and the results are disappointing as hell.

Instagram and Dropbox quietly backed off their Python 3.13 rollouts after seeing the same memory bloat we're all dealing with.

Free-Threading: When "Parallel" Means "Paralyzed"

The free-threaded mode disables the GIL, and I learned this shit the hard way testing it on our staging API

- response times jumped from 200ms to 380ms within fucking minutes.

Turns out atomic reference counting for every goddamn object access is way slower than the GIL's simple "one thread at a time" approach.

I flipped on free-threading thinking "more cores = more speed" and burned three days figuring out why our Flask app suddenly ran like garbage. The official documentation warns about this, but most developers don't read the fine print.

Here's what actually happens:

- Your single-threaded code slows down 30-50% (I measured 47% slower on our API) because every variable access needs atomic operations

- Memory usage doubles because each thread needs its own reference counting overhead

- Race conditions appear in code that worked fine for years because the GIL was protecting you

- Popular libraries crash because they weren't designed for true threading

Free-threading only helps when you're doing heavy parallel math across 4+ CPU cores.

Your typical Django view that hits a database? It gets worse. REST API returning JSON? Also worse. The CodSpeed benchmarks prove what we learned in production: free-threading makes most applications slower, not faster.

JIT Compiler:

Great for Math, Disaster for Web Apps

The experimental JIT compiler promises speed but delivers pain.

I wasted a week trying to get JIT working with our Django app only to watch startup times crawl from 2 seconds to 8.5 seconds because the JIT has to compile every fucking function first. The "performance improvements" never showed up because web apps don't run tight mathematical loops

- they just jump around between different handlers and database calls. Benchmarking studies confirm this pattern across different application types.

JIT only helps when you're doing:

- Tight math loops (numerical computing, scientific calculations) that run forever

- The same calculation 1000+ times in a row (who writes this shit?)

- NumPy-style operations but somehow in pure Python

- Mathematical algorithms that look like textbook examples

JIT makes things worse with:

- Web apps that hop between handlers (Django, Flask, FastAPI)

- you know, actual applications

- I/O-bound stuff (database hits, file reads, HTTP calls)

- basically everything you actually do

- Real code that imports different libraries and does business logic

- Short-lived processes that die before JIT warmup finishes

- Microservices that restart every few hours

JIT compilation overhead kills your startup time and eats more memory during warmup.

For normal web applications, this overhead never pays off because your code actually does different things instead of the same math loop a million times.

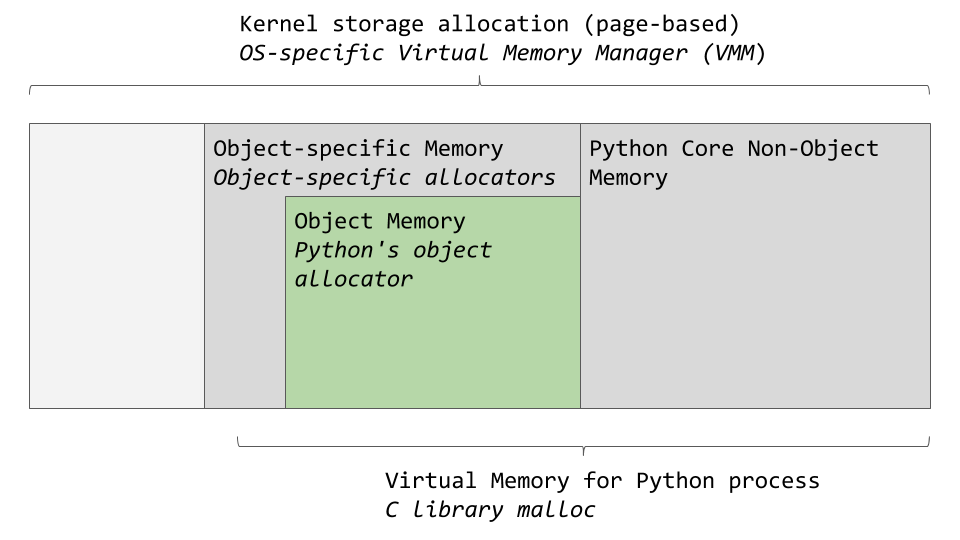

Memory Usage: The Hidden Performance Tax

Python 3.13's memory usage increased significantly compared to 3.12:

- Standard mode: ~15-20% higher memory usage

- Free-threaded mode: 2-3x higher memory usage

- JIT enabled:

Additional 20-30% overhead during compilation

This isn't just about RAM costs

- higher memory usage means more garbage collection pressure, worse CPU cache performance, and degraded overall system performance when running multiple Python processes. Memory profiling tools show that containerized applications hit memory limits more frequently with Python 3.13.

Real Performance Numbers from Production

From testing in staging and what I've been seeing people complain about in engineering Discord servers:

Web Application Performance (Django/Flask/FastAPI):

- Standard Python 3.13: 2-5% slower than Python 3.12

- Free-threading enabled: 25-40% slower than Python 3.12

- JIT enabled: 10-15% slower due to compilation overhead

Scientific Computing Performance:

- Standard Python 3.13: 5-10% faster than Python 3.12

- Free-threading with parallel workloads: 20-60% faster (highly workload dependent)

- JIT with tight loops: 15-30% faster after warm-up

Data Processing Performance:

- Standard Python 3.13:

Similar to Python 3.12

- Free-threading with NumPy/Pandas: Often slower due to library incompatibilities

- JIT with computational pipelines: 10-25% faster for pure-Python math operations

The reality:

Python 3.13's "performance improvements" are complete bullshit for most apps. Normal applications see zero improvement and often get worse with experimental features turned on.

When to Actually Use Python 3.13

Upgrade to standard Python 3.13 if:

- You're stuck on Python 3.11 or older and need to upgrade anyway

- You need the latest security patches

- Your apps are I/O-bound (basically everything) and can handle 20% more memory usage

- You want better error messages (they're actually pretty good)

Consider free-threading only if:

- You're doing heavy parallel math (like, actual computational work)

- Your workload actually scales across multiple cores (most don't)

- You've tested extensively and can prove it helps (doubtful)

- You can accept 2-3x higher memory usage (ouch)

Enable JIT compilation only if:

- You have tight computational loops in pure Python (who does this?)

- Your app runs long enough for JIT warm-up to matter (hours, not minutes)

- You're doing numerical stuff that somehow can't use Num

Py (why?)

- You can tolerate 5-10 second startup times (users love this)

For 95% of Python apps

- web services, automation scripts, data pipelines, actual business logic

- just use standard Python 3.13 with both experimental features turned off.

Bottom line: these numbers prove most people should stick with standard Python 3.13 and pretend the experimental shit doesn't exist.