Why I Went Broke in 3 Days

Default Qodo settings will destroy your credit balance faster than you can say 'node_modules'. I burned through my entire monthly allowance in 3 days because it was analyzing every damn file in node_modules/. Turns out the default config doesn't exclude anything, so it happily chewed through credits on webpack bundles and vendor dependencies.

Based on actual Qodo documentation, here's what models actually exist as of September 2025:

- Claude 4 Sonnet: 1 credit (my daily driver, catches real bugs)

- GPT-5: 1 credit (solid for most stuff, handles complex code well)

- GPT-4o-mini: 1 credit (blazing fast, misses anything complex)

- Claude 4.1 Opus: 5 credits (expensive but thorough - only for critical code)

- Gemini 1.5 Pro: 1 credit (handles huge files, inconsistent quality)

The reality: If you're not on Enterprise, you're stuck with the basic models. I spent two weeks thinking I had access to premium models before realizing half the docs refer to Enterprise-only features. Check the official pricing page to see what's actually included in each tier.

Model Switching (When It Actually Works)

The one thing Qodo gets right is mid-conversation model switching. You can change models without losing context, which saves you from starting over when the first model gives you garbage.

My actual workflow:

- Start with GPT-4o-mini (1 credit) - quick sanity check

- If it finds real issues: Switch to GPT-4o (still 1 credit)

- For complex logic bugs: Try Claude 3.5 Sonnet (1 credit)

- For critical production code: Claude Opus (5 credits - use sparingly)

Here's what actually happened: GPT-4o-mini flagged some async/await usage that looked weird - something about unhandled promise rejections, but couldn't tell me where the fuck they were coming from. Switched to Claude 4 Sonnet in the same chat, and it identified that I was missing proper error handling in a Promise chain. Cost me 2 credits total instead of starting two separate conversations.

The JavaScript event loop handling differences between models is real - some understand microtasks better than others. Claude models tend to catch Node.js-specific patterns while GPT models are better with browser APIs.

Large Repo Configuration That Won't Bankrupt You

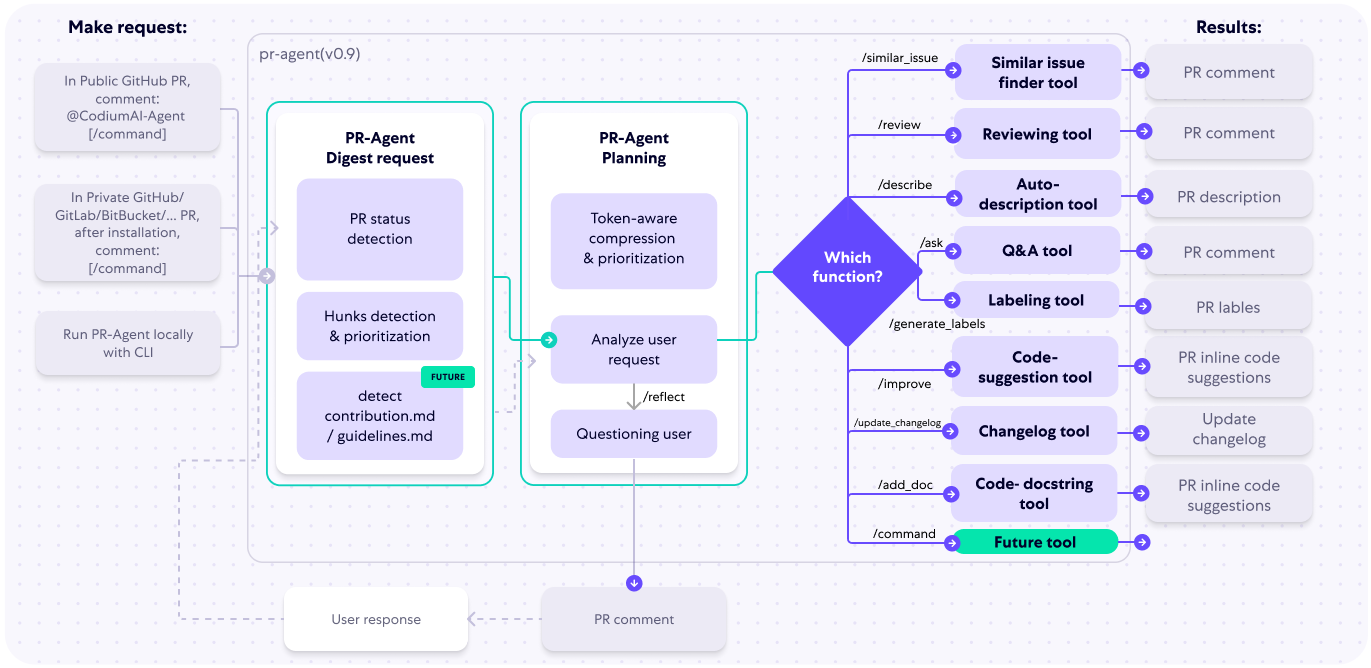

The `.pr_agent.toml` configuration file is where you save your ass. Here's what I use after burning too many credits on bullshit:

[pr_reviewer]

exclude = [\"node_modules/\", \"dist/\", \"build/\", \"coverage/\", \".git/\"]

review_only_diff = true

[config]

model = \"claude-3-5-sonnet\"

max_description_tokens = 500

extra_instructions = \"\"\"

Skip obvious formatting changes.

Focus on logic errors and security issues.

Don't analyze test snapshots or generated files.

\"\"\"

What I learned the hard way:

excludepatterns actually work - use them religiouslyreview_only_diff = trueis mandatory for large reposmax_description_tokensprevents the AI from writing novels

What doesn't work: The fancy file prioritization stuff mentioned in some docs. Half that stuff isn't implemented or requires Enterprise features. Check what's actually configurable before wasting time.

Model Performance Reality Check

After months of testing on real PRs:

Claude 4 Sonnet (1 credit): Best bang for your buck. Catches logic errors, doesn't hallucinate function names. My daily driver. Excellent at type inference and static analysis.

GPT-5 (1 credit): Good at explaining what code does, handles most tasks well. Solid choice for regular code reviews. Strong with refactoring patterns and design patterns.

GPT-4o-mini (1 credit): Use it for sanity checks only. Misses anything that requires actual thinking. Fine for syntax checking and basic linting.

Claude 4.1 Opus (5 credits): Nuclear option. Thorough as hell but expensive. Save for critical security reviews or complex refactors. Best for architectural decisions.

Gemini 1.5 Pro (1 credit): Handles big files well but gives inconsistent advice. Sometimes suggests fixing things that aren't broken. Good with large codebases but weak on edge cases.

Credit Monitoring (It Sucks)

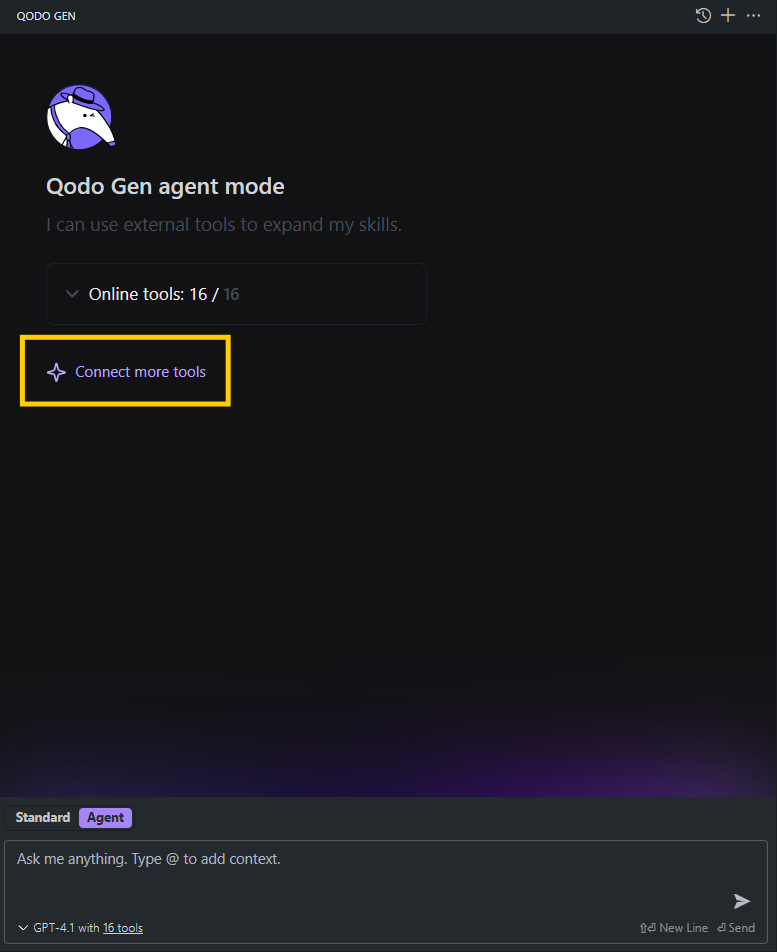

There's no real-time credit monitoring API. The app dashboard shows usage after you've already burned credits, which is pretty useless. You can check remaining credits by clicking the speedometer icon in Qodo Gen chat, but that's about it.

Your options:

- Check remaining credits manually in Qodo Gen before big PRs

- Developer plan: 75 credits/month (about 7-15 meaningful PR reviews)

- Teams tier: 2500 credits/month (can handle bigger teams if configured right)

- Set up alerts in your calendar to check usage weekly

Pro tip: If you hit your limit, you're stuck waiting for the monthly reset. Credits reset 30 days from your first message, not at the start of the calendar month - learned this the hard way when I expected a reset on October 1st but had to wait until the 17th. No way to buy more credits yet (they're "working on it").

Hit this exact scenario during a security audit last month - burned all credits on day 28 and had to manually review a critical auth bug for 3 days while waiting for the reset. Almost shipped vulnerable code because their billing cycle fucked us.

For security-focused code reviews, the cost vs thoroughness tradeoff becomes critical. One vulnerability missed because you ran out of credits could cost way more than upgrading to a higher tier.

Because the defaults are designed to maximize their revenue.

Because the defaults are designed to maximize their revenue.