Your CFO thinks AI is like buying Office 365. They're about to learn the hard way that it's more like building a rocket while it's flying. The "platform cost" in those vendor demos? Cute. That's before the data nightmare, before your models shit the bed in production, and before you realize you need a team of unicorns who cost more than your entire engineering budget.

The Shit Nobody Tells You About AI Costs

Traditional software is predictable - buy licenses, deploy, done. AI projects are chaos with a credit card attached:

Your data storage bill will make you weep: Started with a few GB of training data? Cute. Six months later you're storing 50TB of model artifacts, experiment logs, and "we might need this someday" datasets. AWS charges start small, but data grows like cancer. I watched one company's storage bill go from $200/month to $8,000/month because nobody cleaned up failed experiments. S3 pricing looks cheap until you're paying $0.09 per GB in transfer fees just to move data around. Data lifecycle management stops being optional once your ML datasets keep growing and need versioning and lineage tracking.
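If you want to see that curve before the invoice shows it to you, here's a rough sketch. The $0.09/GB transfer rate is the figure quoted above; the per-GB storage rate and the `transfer_fraction` knob are assumptions made up for illustration, so plug in your provider's actual price sheet.

```python
# Rough monthly storage-bill estimator.
# TRANSFER_USD_PER_GB is the figure quoted in the text; the storage rate is an
# ASSUMED placeholder and will differ by provider, region, and storage class.

ASSUMED_STORAGE_USD_PER_GB_MONTH = 0.023  # assumption, not from the article
TRANSFER_USD_PER_GB = 0.09                # figure quoted in the article


def monthly_storage_bill(stored_gb: float, transferred_gb: float) -> float:
    """Return storage plus transfer cost in USD for one month."""
    storage = stored_gb * ASSUMED_STORAGE_USD_PER_GB_MONTH
    transfer = transferred_gb * TRANSFER_USD_PER_GB
    return storage + transfer


def project_bill(start_gb: float, monthly_growth: float, months: int,
                 transfer_fraction: float = 0.1) -> list[float]:
    """Project the bill when stored data compounds and nothing is deleted.

    transfer_fraction is a hypothetical knob: the share of stored data
    that gets moved out each month.
    """
    bills = []
    stored = start_gb
    for _ in range(months):
        bills.append(monthly_storage_bill(stored, stored * transfer_fraction))
        stored *= 1 + monthly_growth
    return bills


# 50 TB stored (decimal TB), 10% of it transferred out in the month.
print(round(monthly_storage_bill(50_000, 5_000), 2))
```

The useful part is `project_bill`: feed it your real growth rate and watch what "nobody cleaned up failed experiments" does over twelve months.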

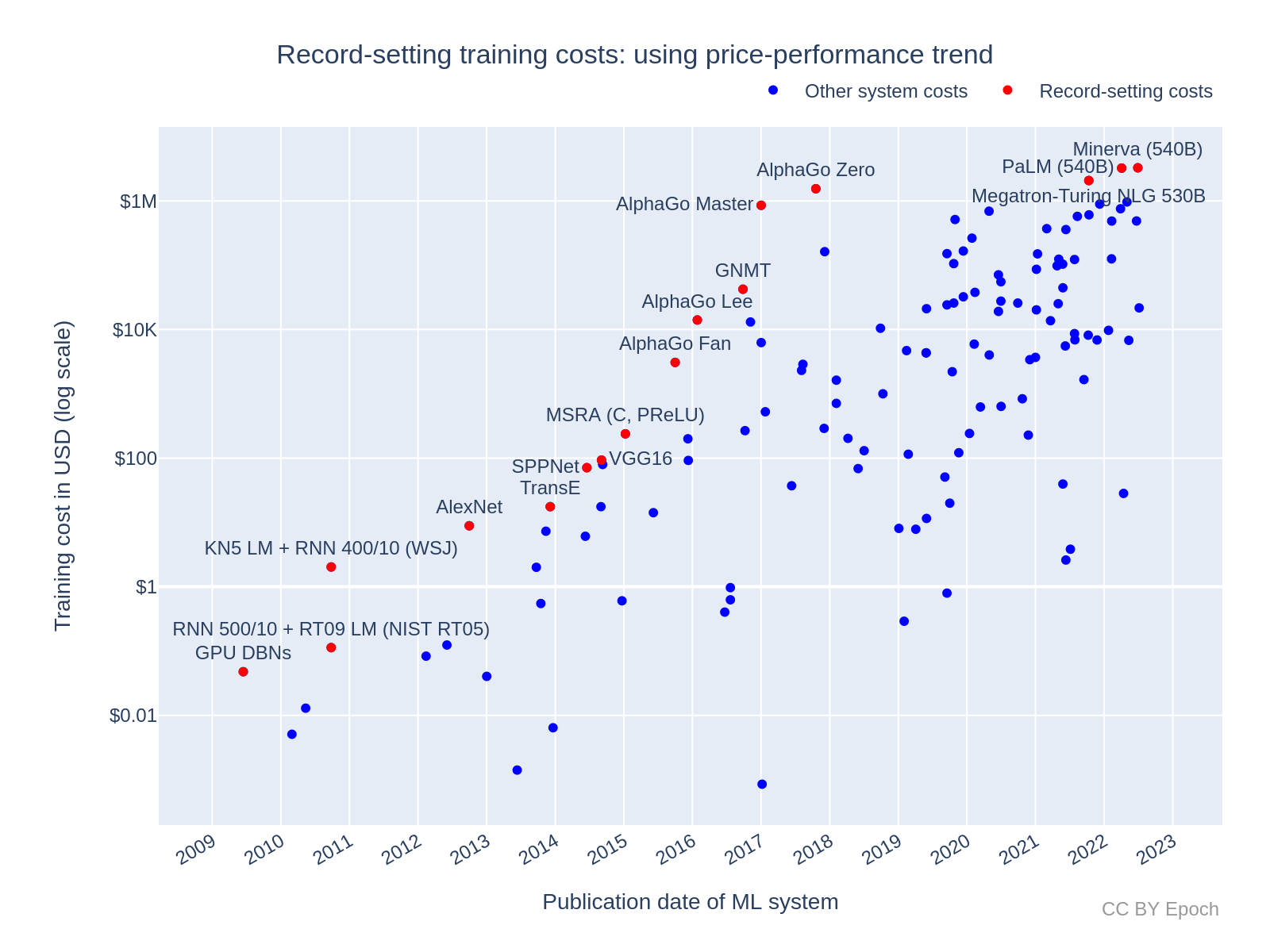

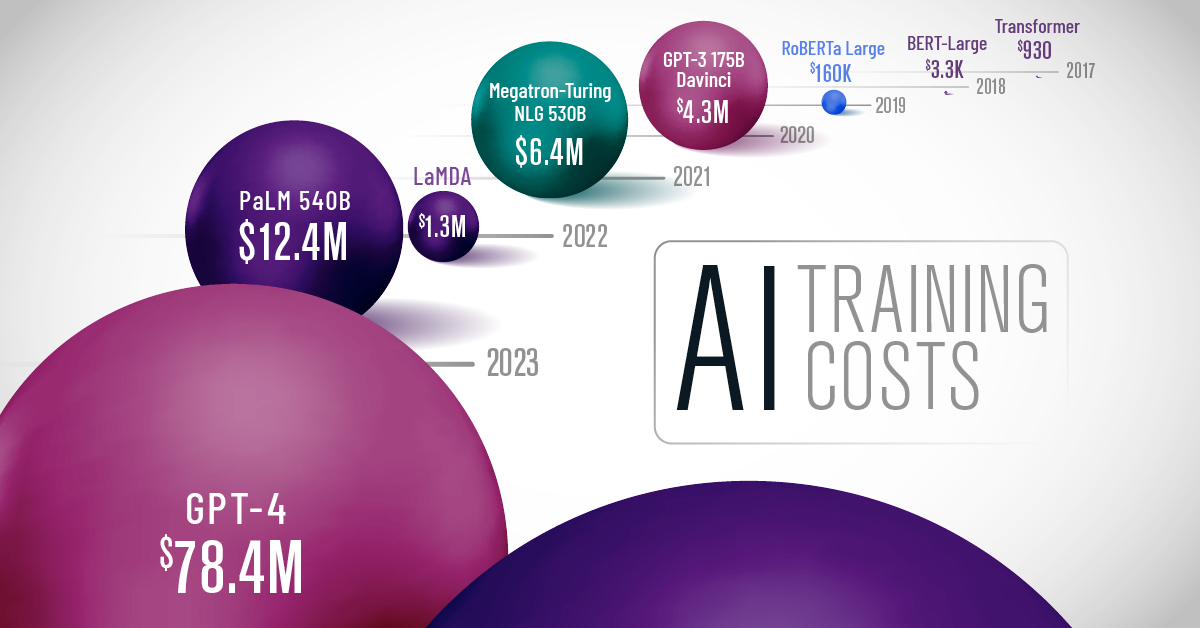

GPU compute costs are basically theft: That $500 proof-of-concept training run? Wait till you need real datasets. We burned $30K in a weekend because someone left a hyperparameter search running on V100s. Google's Vertex AI bills by the hour, and those hours add up fast when your model won't converge and you're throwing bigger GPUs at it. GPU pricing varies massively across cloud providers, and spot instances are cheaper only if your distributed training job can survive being preempted.
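A weekend like that is easy to reproduce on paper. Here's a minimal sketch of the arithmetic, plus a guard that tells you how many trials a budget actually buys. The $3.00 per GPU-hour rate is a hypothetical round number, not a quoted price, and the trial counts are invented for the example.

```python
# Back-of-envelope GPU spend for a hyperparameter search, plus a budget guard.
# The hourly rate is an ASSUMPTION for illustration; real prices vary by
# provider, region, and instance type.

ASSUMED_USD_PER_GPU_HOUR = 3.00  # hypothetical on-demand rate


def search_cost(trials: int, gpus_per_trial: int, hours_per_trial: float,
                usd_per_gpu_hour: float = ASSUMED_USD_PER_GPU_HOUR) -> float:
    """Total cost in USD if every trial runs to completion."""
    return trials * gpus_per_trial * hours_per_trial * usd_per_gpu_hour


def max_trials_within(budget_usd: float, gpus_per_trial: int,
                      hours_per_trial: float,
                      usd_per_gpu_hour: float = ASSUMED_USD_PER_GPU_HOUR) -> int:
    """Largest number of full trials that fits inside the budget."""
    cost_per_trial = gpus_per_trial * hours_per_trial * usd_per_gpu_hour
    return int(budget_usd // cost_per_trial)


# 200 trials, 8 GPUs each, 6 hours each, at the assumed rate.
print(search_cost(200, 8, 6))          # 28800.0
print(max_trials_within(30_000, 8, 6)) # 208
```

Under those made-up inputs, 200 unattended trials land within spitting distance of the $30K weekend. Run `max_trials_within` before you launch the search, not after.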

ML engineers cost more than your house payment: Good ML engineers start at $200K and go up from there. Data scientists who actually know what they're doing? $250K+. MLOps engineers who can make this shit work in production? $350K if you can find them. Stanford's AI Index shows AI talent demand continues outpacing supply. I've seen companies spend 6 months trying to fill one senior ML role while their AI project sits dead in the water.

What Actually Costs Money (Spoiler: Everything)

Here's where your money really goes, based on watching too many AI projects implode:

The Platform Tax (25-30% of your pain)

Your cloud bill will grow like weeds. GPU instances, storage that never shrinks, and networking costs because everything needs to talk to everything else. Databricks charges per Databricks Unit (DBU), which sounds reasonable until you realize training one decent model burns through $2,000 worth. SageMaker bills by the hour, and those hours disappear fast when you're debugging why your notebook crashed. NVIDIA's AI infrastructure material points to infrastructure as the fastest-growing AI expense category.

The Data Shitshow (30-40% of your budget)

Data prep is where dreams go to die. You'll spend months cleaning garbage data, paying people to label images, and building pipelines that break every time someone sneezes. Then you'll pay $20K/month for some annotation service to label your training data because your interns quit. Integration with your existing systems? Add another $100K because nothing talks to anything else without custom middleware.

The Talent Black Hole (35-45% of total cost)

This is where the real money disappears. ML engineers who actually know what they're doing are rarer than unicorns and cost about the same. You'll pay $300K+ for a senior one who might know how to debug why your model accuracy dropped in production. You can find cheaper data scientists, but half of them can't deploy a model to save their lives.

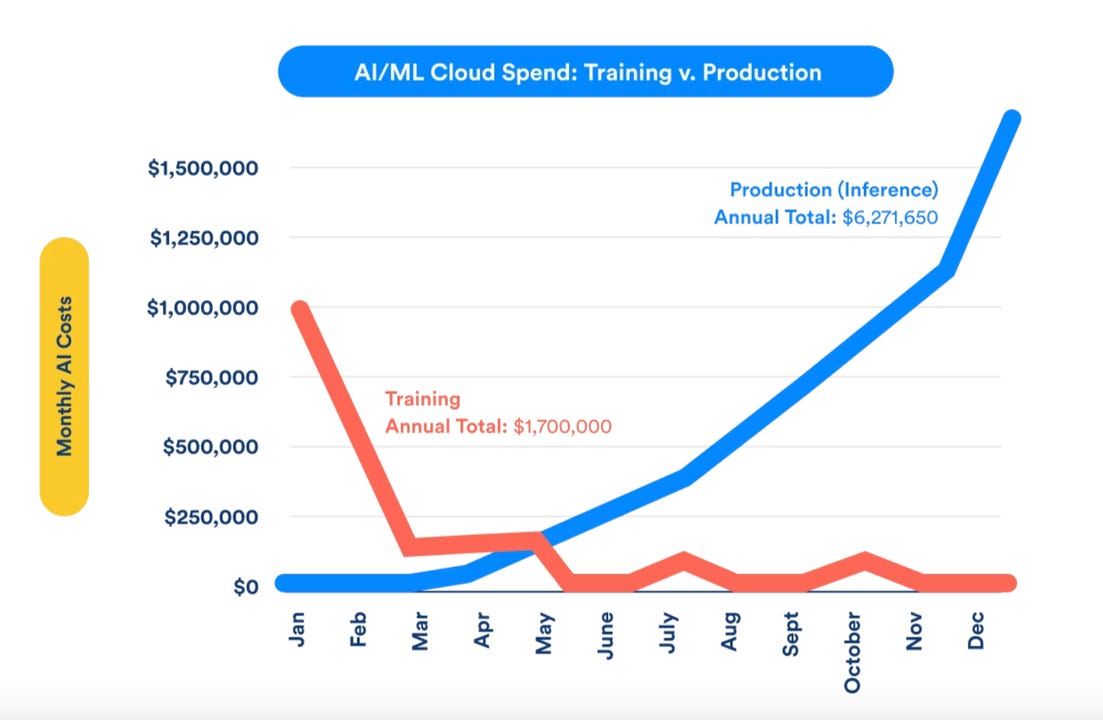

The Operational Nightmare (15-25% ongoing)

Your model worked great in the demo. Now it's crashing in production and nobody knows why. Model monitoring costs more than the model itself. Retraining because data drift broke everything. A/B testing infrastructure because you need to figure out which version sucks less. Plus 24/7 monitoring because AI systems fail in creative ways at 3am.
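For a feel of what "monitoring for data drift" means in practice, here's a minimal sketch of one common heuristic, the population stability index (PSI), in plain Python. This is a swapped-in illustration, not the monitoring setup of any project described here; the bin count and the clamping behavior are my own assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population stability index between a baseline sample and a live sample.

    Bin edges are equal-width over the baseline's range; live values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]   # training-time feature values
shifted = [x + 0.5 for x in baseline]      # same feature after drift

print(psi(baseline, baseline))  # 0.0, identical distributions
print(psi(baseline, shifted) > 0.25)
```

A PSI above roughly 0.25 is a commonly quoted rule of thumb for "go look at this", but treat the threshold as a starting point and tune it per feature. The 3am part is wiring this to every input of every model and deciding who gets paged.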

A typical $600K AI budget breaks down like this: $150K for platforms (the fun part), $200K for people (the expensive part), $180K trying to make data work (the pain part), and $70K keeping everything running (the 3am problem). Most companies budget for the first line item and get blindsided by everything else.
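Tallying those four line items makes the blind spot obvious. A quick sketch, using only the dollar figures quoted in the paragraph above (the dictionary keys are my own labels):

```python
# Line items as quoted in the paragraph above (USD).
line_items = {
    "platforms": 150_000,
    "people": 200_000,
    "data": 180_000,
    "operations": 70_000,
}


def budget_shares(items: dict[str, int]) -> dict[str, float]:
    """Return each line item's share of the total, as a percentage."""
    total = sum(items.values())
    return {name: round(100 * amount / total, 1) for name, amount in items.items()}


total = sum(line_items.values())
beyond_platform = total - line_items["platforms"]

print(total)
print(budget_shares(line_items))
# Everything you did not budget for, as a multiple of the platform line.
print(beyond_platform / line_items["platforms"])
```

The last number is the one to show your CFO: the spend outside the platform line is three times the platform line itself.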

Why "Best of Breed" is Code for "Integration Hell"

There are 90+ MLOps tools because every startup thinks they solved one piece of the puzzle. Research shows the MLOps landscape is fragmented across 16+ categories. You have two choices, and both suck:

Buy everything from one vendor like Google Vertex AI or AWS SageMaker. You'll pay $5K-20K monthly and get vendor lock-in that makes your architecture team cry. But at least shit works together most of the time.

Build a Frankenstein stack out of "best-of-breed" tools: MLflow for tracking (free, but you'll spend weeks getting it deployed), Kubeflow for pipelines (good luck debugging those YAML files), Weights & Biases for pretty charts ($50/user/month), plus a dozen other tools that were designed in isolation.

Here's what happens with the DIY approach: you'll spend 6 months getting tools to talk to each other, then another 6 months fixing what breaks when you update one component. I watched one team save $80K on licenses and spend $400K in engineering time trying to make everything work. Their ML project launched 18 months late.
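The build-versus-buy math from that story fits in a few lines. A minimal sketch using the figures quoted above; it ignores the cost of launching 18 months late, which is an assumption in DIY's favor.

```python
def net_savings(license_savings: float, extra_engineering: float) -> float:
    """Positive means DIY came out ahead; negative means it cost more."""
    return license_savings - extra_engineering


def vendor_annual_cost(monthly_low: float, monthly_high: float) -> tuple[float, float]:
    """Turn a monthly vendor price range into an annual range."""
    return monthly_low * 12, monthly_high * 12


# The team above: $80K saved on licenses, $400K spent on integration work.
print(net_savings(80_000, 400_000))        # -320000

# Single-vendor pricing quoted above: $5K-20K per month.
print(vendor_annual_cost(5_000, 20_000))   # (60000, 240000)
```

That integration bill is more than a full year at the top of the single-vendor price range, before anyone counts the delay.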

The Three Stages of AI Budget Pain

AI projects follow a predictable cost curve that nobody warns you about:

Stage 1: Everything Works (Months 1-6, $50K-150K)

Your proof-of-concept runs on sample data and everyone's excited. Costs are contained because you're not doing anything real yet. This stage tricks you into thinking AI is affordable.

Stage 2: Reality Hits (Months 6-18, $200K-800K)

Now you need real data, production infrastructure, security, monitoring, and integration with existing systems. Costs explode 3-5x because everything that worked on your laptop crashes when it meets production. Half your budget goes to fixing shit that should "just work."

Stage 3: Scale or Die (Months 18+, $800K-3M+)

You've got multiple models, global deployment, compliance requirements, and 24/7 operations. Total spend keeps growing but at least costs become predictable. If you survive this stage, you might actually get economies of scale.
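Adding the three stages up shows where the total lands. This sketch assumes each range is what you spend during that stage rather than a running total, which the stage headings don't spell out, and it treats the open-ended "$3M+" as exactly $3M.

```python
# Stage ranges as quoted above, in USD. Stage 3 is open-ended ("$3M+"), so the
# high figure here understates the real upper bound.
STAGES = [
    ("Stage 1", 50_000, 150_000),
    ("Stage 2", 200_000, 800_000),
    ("Stage 3", 800_000, 3_000_000),
]


def cumulative(stages: list[tuple[str, int, int]]) -> list[tuple[str, int, int]]:
    """Running low and high totals after each stage."""
    low_total = high_total = 0
    out = []
    for name, low, high in stages:
        low_total += low
        high_total += high
        out.append((name, low_total, high_total))
    return out


for name, low, high in cumulative(STAGES):
    print(f"{name}: ${low:,} to ${high:,} spent so far")
```

Even the cheapest path through all three stages crosses $1M under that assumption.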

The fuck-up most companies make: they budget for Stage 1 and get blindsided by Stage 2. Stanford AI Index research shows most companies underestimate infrastructure costs by 3-5x. Netflix runs hundreds of models on Databricks efficiently, but they spent years building that foundation. Your startup doesn't have that luxury.

Here's the real talk: if you can't afford $1M+ for a real AI capability, stick with APIs and vendor services. Don't build what you can't afford to maintain.