Here's what they don't tell you about OpenAI's Realtime API: It's a goddamn money pit unless you're a Fortune 500 company. I burned through I think 8 grand? Maybe closer to 10? Could've been 11k. I stopped looking at the bills after the third week because my eye started twitching every time I saw the AWS charges.

When OpenAI Actually Makes Sense (Spoiler: Rarely)

You're prototyping and have unlimited budget: If you're at the "let's just make it work" stage with VC money burning holes in your pocket, fine. The single WebSocket approach saves maybe 2 weeks of dev time. But that convenience costs you $14.40/hour forever.

Complex function calling mid-conversation: This is literally the only technical feature where OpenAI genuinely excels. Their function calling integration is smooth, and replicating that flow with multiple providers is a pain in the ass. If your voice app needs to execute functions during conversations (not after), OpenAI might be worth the premium.
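When you split the stack, you own the tool-dispatch loop that OpenAI's single session otherwise runs for you. Here's a minimal sketch, assuming your LLM provider hands back tool calls as `{ id, name, arguments }` objects. Every provider's real shape is different, so `tools`, `handleToolCalls`, and the `role: "tool"` message format are all hypothetical:

```javascript
// Hypothetical tool registry: tool name -> local async function.
const tools = {
  // Example tool; a real one would hit your order system, CRM, etc.
  getOrderStatus: async ({ orderId }) => ({ orderId, status: "shipped" }),
};

// Run every tool call the LLM asked for and build the messages to feed back
// into the next LLM turn. The { id, name, arguments } input shape is an
// assumption; map your provider's actual response format onto it.
async function handleToolCalls(toolCalls) {
  const results = [];
  for (const call of toolCalls) {
    let output;
    if (!Object.prototype.hasOwnProperty.call(tools, call.name)) {
      // Unknown tool: report it back instead of killing the conversation.
      output = { error: `unknown tool: ${call.name}` };
    } else {
      try {
        // Arguments often arrive as a JSON string.
        const args =
          typeof call.arguments === "string" ? JSON.parse(call.arguments) : call.arguments;
        output = await tools[call.name](args);
      } catch (err) {
        output = { error: String((err && err.message) || err) };
      }
    }
    results.push({ role: "tool", toolCallId: call.id, content: JSON.stringify(output) });
  }
  return results;
}
```

The painful part isn't this loop, it's keeping the STT stream open and holding TTS until the follow-up LLM response arrives.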

You have zero engineering capacity for maintenance: OpenAI handles the entire pipeline, so there's less shit to break at 2am. But here's the kicker - when it does break (and it will), you're completely at their mercy. I've watched 4-hour outages where you literally can't do anything except refresh their status page and watch your customers leave nasty reviews. There was this one incident in... March? April? Fuck, might've been February. Anyway, their whole thing went down for like 6 hours and we just sat there watching our conversion rate tank.

When You're Getting Robbed (Most Likely Your Situation)

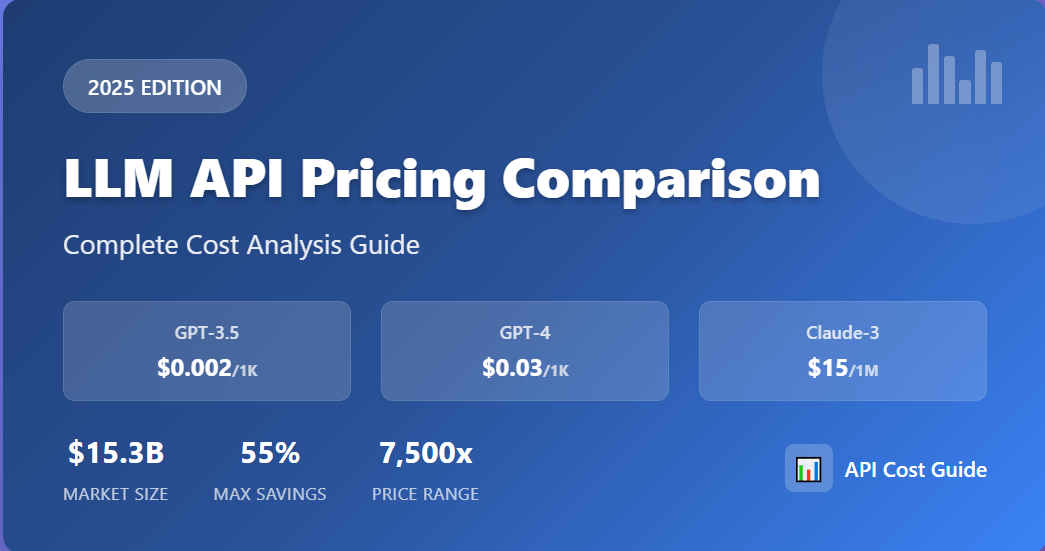

Processing more than 50 hours monthly: At $14.40/hour (based on OpenAI's $0.24/min output pricing), that's $720+ per month just for voice processing. AssemblyAI + ElevenLabs + Claude costs under $200 for the same volume. The math isn't even close.
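The hourly figure is just the per-minute rate times 60. A quick sketch for rerunning that math with your own volume; the $0.24/min rate is the one quoted above (check current pricing before trusting it), and `monthlyCost` is a made-up helper name:

```javascript
// Convert a per-minute rate into a monthly bill, rounded to cents.
function monthlyCost(hoursPerMonth, ratePerMinute) {
  const perHour = ratePerMinute * 60; // $0.24/min -> $14.40/hour
  return Math.round(hoursPerMonth * perHour * 100) / 100;
}

// The rate quoted above; not pulled from any live pricing source.
const OPENAI_RATE_PER_MIN = 0.24;

console.log(monthlyCost(50, OPENAI_RATE_PER_MIN)); // 720
console.log(monthlyCost(100, OPENAI_RATE_PER_MIN)); // 1440
```

Flip it around and "under $200 for 50 hours" means the alternative stack has to come in under $4/hour.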

You need custom voices: OpenAI gives you like 6 voice options. That's it. Meanwhile, ElevenLabs lets you clone any voice you want, and Cartesia has ultra-fast synthesis that beats OpenAI's latency.

Multiple languages: OpenAI's multilingual support is mediocre at best. Deepgram handles 50+ languages better, and Speechmatics actually works with regional dialects that OpenAI butchers.

Enterprise compliance: Good luck getting HIPAA compliance or data residency controls from OpenAI without paying enterprise prices. AssemblyAI offers HIPAA compliance, and Azure Speech gives you data residency without the premium.

The Migration Reality: It's Going to Suck

Alright, enough bitching. Here's the technical nightmare you're signing up for:

You'll spend 6-10 weeks debugging WebSocket connections: Managing multiple WebSocket connections across STT, LLM, and TTS providers is nightmare fuel. Connection pooling and real-time audio streaming require expertise most teams don't have. Error handling and failover logic will consume your life. I spent an entire weekend just getting the fucking connections to stay up. And that's just for AssemblyAI - don't get me started on Deepgram's WebSocket implementation that randomly decides to hate Node.js 18.2.0 for reasons nobody can explain.
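Most of that lost weekend is reconnect logic. A minimal sketch of capped exponential backoff with jitter, written against the standard `WebSocket` interface (`onopen`/`onmessage`/`onclose`), which browsers and recent Node.js releases expose as a global and the `ws` package mirrors. The function names and delay constants are assumptions to tune, not anything a provider documents:

```javascript
// Delay before reconnect attempt n (0-based): base * 2^n, capped, with
// "full jitter" so a fleet of clients doesn't reconnect in lockstep.
function backoffDelay(attempt, { baseMs = 500, maxMs = 30000, random = Math.random } = {}) {
  const ceiling = Math.min(maxMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

// Keep one provider socket alive: reconnect on close until stop() is called.
// createSocket defaults to the global WebSocket; inject another factory
// (e.g. one built on the `ws` package) if yours isn't global.
function connectWithRetry(url, { onMessage, createSocket = (u) => new WebSocket(u), ...opts } = {}) {
  let attempt = 0;
  let stopped = false;
  let socket = null;
  let timer = null;

  const open = () => {
    socket = createSocket(url);
    socket.onopen = () => { attempt = 0; }; // healthy again: reset the backoff
    socket.onmessage = (event) => onMessage && onMessage(event.data);
    socket.onclose = () => {
      if (stopped) return;
      timer = setTimeout(open, backoffDelay(attempt++, opts));
    };
    // An error is normally followed by a close event, so retrying lives in onclose.
    socket.onerror = () => {};
  };

  open();
  return {
    send: (data) => socket.send(data),
    stop: () => { stopped = true; clearTimeout(timer); socket.close(); },
  };
}
```

You'd run one of these per provider (STT, TTS, and the LLM if it streams over WebSockets), which is exactly why the connection count balloons.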

Your voice quality will drop initially: Alternative combinations need tuning. Expect 2-4 weeks of constant adjustments to match your current user experience. Context preservation across providers is particularly brutal - you'll lose conversation flow and spend weekends fixing it.
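Context preservation gets less brutal if you stop relying on any provider's session state and keep the transcript yourself. Here's a sketch of a rolling history buffer, assuming a plain `{ role, content }` message format and a crude 4-characters-per-token estimate (swap in your LLM's real tokenizer). `ConversationBuffer` is a hypothetical name:

```javascript
// Rough token estimate; replace with your LLM's tokenizer for real budgets.
const estimateTokens = (text) => Math.ceil(text.length / 4);

class ConversationBuffer {
  constructor(systemPrompt, maxTokens = 4000) {
    this.system = { role: "system", content: systemPrompt };
    this.maxTokens = maxTokens;
    this.turns = [];
  }

  // Record a final STT transcript or a completed LLM reply.
  add(role, content) {
    this.turns.push({ role, content });
    this.trim();
  }

  // Drop the oldest turns until the history fits the budget.
  // The system prompt is never dropped and the newest turn always stays.
  trim() {
    let total =
      estimateTokens(this.system.content) +
      this.turns.reduce((sum, t) => sum + estimateTokens(t.content), 0);
    while (total > this.maxTokens && this.turns.length > 1) {
      total -= estimateTokens(this.turns.shift().content);
    }
  }

  // Messages to send on every LLM call, whichever provider serves it.
  messages() {
    return [this.system, ...this.turns];
  }
}
```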

Operational complexity will triple: Instead of one vendor relationship, you now have 3-4. Different billing cycles, different support channels, different outage schedules. When ElevenLabs goes down and AssemblyAI is fine, good luck explaining to your users why voice isn't working.
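The usual mitigation is a fallback chain: try your primary vendor with a timeout, and fall through to the next one when it errors or hangs. A minimal sketch; the provider objects and their `synthesize(text)` method are hypothetical wrappers you'd write around each vendor's SDK, not real SDK calls:

```javascript
// Reject if the promise doesn't settle within ms milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms}ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Try each provider in order and return the first success.
// providers: [{ name, synthesize(text) => Promise<audio> }] (hypothetical wrappers)
async function synthesizeWithFailover(providers, text, timeoutMs = 3000) {
  const errors = [];
  for (const provider of providers) {
    try {
      const audio = await withTimeout(provider.synthesize(text), timeoutMs);
      return { provider: provider.name, audio };
    } catch (err) {
      // Record and move on; this is also where a circuit breaker would hook in.
      errors.push(`${provider.name}: ${err.message}`);
    }
  }
  throw new Error(`all providers failed (${errors.join("; ")})`);
}
```

The array order is your preference list, so you can put an ElevenLabs wrapper first and a Cartesia one second without touching the call sites. Note the timed-out request keeps running in the background; this only stops you from waiting on it.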

But here's the thing - after the initial pain, most teams save 2-8 grand monthly while getting better features.

Decision Framework: Do the Fucking Math

Stop overthinking this. Here's the brutal calculation:

```javascript
// RIP your AWS bill
const monthlyHours = 100; // Being optimistic here, might be 150
const openaiCost = monthlyHours * 14.40; // Bankruptcy simulator
// TODO: add retry costs, connection overhead - fuck it, next sprint

// Alternative stack that "works"
const alternativeCost = monthlyHours * 4; // Still expensive but whatever
const monthlySavings = openaiCost - alternativeCost; // Sweet relief... hopefully

// Reality check - this will hurt your soul
const migrationHours = 150; // Learned this the hard way, probably 200+ if Docker decides to be a dick
const migrationCost = migrationHours * 100; // $100/hour: weekend goodbye fund + therapy costs
const paybackMonths = migrationCost / monthlySavings; // Still over a year to break even, kill me

console.log(`Saving $${monthlySavings.toFixed(0)}/month, payback in ${paybackMonths.toFixed(1)} months`);
// Saving $1040/month, payback in 14.4 months
```

If you're processing 50+ hours monthly and can survive a payback period of a year or more (about 14 months at 100 hours a month with the numbers above), migration makes sense. If you're doing 10 hours monthly, stick with OpenAI and focus on growing your user base instead.
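To see where the line actually falls, here's the same calculation as a function swept across a few volumes. It reuses the assumptions from the snippet above ($14.40/hour vs. $4/hour, 150 migration hours at $100/hour); `paybackMonthsFor` is just a helper name:

```javascript
// Months until monthly savings cover the one-time migration cost.
function paybackMonthsFor(hoursPerMonth, {
  openaiPerHour = 14.4,
  altPerHour = 4,
  migrationHours = 150,
  hourlyRate = 100,
} = {}) {
  const monthlySavings = hoursPerMonth * (openaiPerHour - altPerHour);
  if (monthlySavings <= 0) return Infinity; // the migration never pays for itself
  return (migrationHours * hourlyRate) / monthlySavings;
}

for (const hours of [10, 50, 100, 150]) {
  console.log(`${hours} h/month: ${paybackMonthsFor(hours).toFixed(1)} months`);
}
// 10 h/month: 144.2 months
// 50 h/month: 28.8 months
// 100 h/month: 14.4 months
// 150 h/month: 9.6 months
```

With these inputs, payback only drops under a year at roughly 120 hours a month (15000 / (12 × 10.4) ≈ 120). At 50 hours it's closer to two and a half years, so plug in your real numbers before committing.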

Technical Compatibility: The Hard Questions

Before you start this migration nightmare, honestly answer these:

- Can your codebase handle multiple concurrent WebSocket connections without shitting itself?

- Do you have audio format conversion logic, or are you hardcoded to OpenAI's specific format?

- How dependent are you on OpenAI's exact conversation context handling?

- Does your team have experience with microservices, or are you used to monolithic APIs?

If any of these answers made you wince, budget extra time for the migration. A lot of extra time. Also, Docker's networking will probably make you want to throw your laptop out the window at some point.
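On the audio-format question: OpenAI's Realtime API works with 16-bit PCM (24 kHz for its `pcm16` format), browser capture usually hands you Float32 samples, and the sample rate your next provider expects may differ (16 kHz is common). A sketch of the two conversions you'll probably need. The linear-interpolation resampler is deliberately naive (no anti-aliasing filter), so treat it as a placeholder for a proper resampling library:

```javascript
// Float32 samples in [-1, 1] (e.g. from Web Audio) -> 16-bit signed PCM.
function floatTo16BitPCM(float32) {
  const out = new Int16Array(float32.length);
  for (let i = 0; i < float32.length; i++) {
    const s = Math.max(-1, Math.min(1, float32[i])); // clamp to avoid wraparound
    out[i] = s < 0 ? s * 0x8000 : s * 0x7fff;
  }
  return out;
}

// Naive linear-interpolation resampler. No low-pass filter, so downsampling
// can alias; fine for a prototype, not for production audio.
function resampleLinear(samples, fromRate, toRate) {
  if (fromRate === toRate) return Int16Array.from(samples);
  const outLength = Math.round((samples.length * toRate) / fromRate);
  const out = new Int16Array(outLength);
  const step = fromRate / toRate;
  for (let i = 0; i < outLength; i++) {
    const pos = i * step;
    const left = Math.floor(pos);
    const right = Math.min(left + 1, samples.length - 1);
    const frac = pos - left;
    out[i] = Math.round(samples[left] * (1 - frac) + samples[right] * frac);
  }
  return out;
}
```

For example, `resampleLinear(pcm, 24000, 16000)` turns 100 ms of 24 kHz audio (2400 samples) into 1600 samples. If any of this logic is currently hardcoded around OpenAI's format, that's part of your migration budget.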

Every provider has outages.