TensorFlow is Google's open-source machine learning framework that dominates enterprise ML whether developers like it or not. Developed by the Google Brain team to handle Google's internal ML needs and open-sourced in November 2015, TensorFlow was built for production scale from day one - which means it probably won't completely shit itself when your demo goes viral.

The TensorFlow Ecosystem: More Than Just a Framework

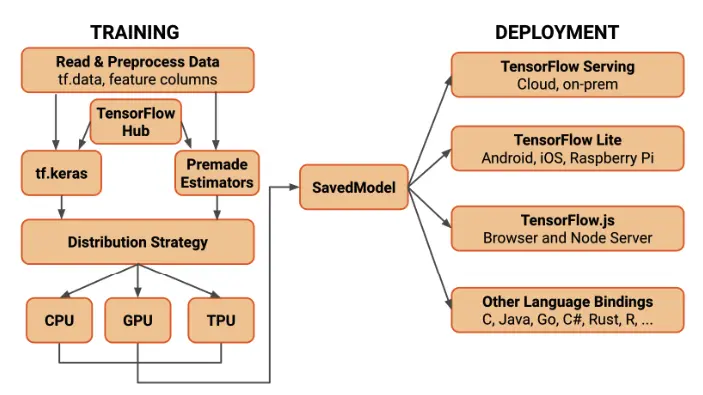

TensorFlow isn't just a single library; it's a complete ecosystem of tools designed to handle every aspect of machine learning development:

Core TensorFlow: The fundamental library that handles computational graphs, automatic differentiation, and GPU acceleration. The latest stable version, TensorFlow 2.20, dropped in August 2025 - and yeah, every major release breaks something that worked fine before.

Keras Integration: Since TensorFlow 2.0, Keras is baked in as the high-level API, which is the only reason most people can actually use TensorFlow without losing their minds. Before this, TensorFlow 1.x was about as user-friendly as raw assembly code.
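
To make the Keras point concrete, here is a minimal sketch of defining and training a model through tf.keras. The synthetic data, layer sizes, and training settings are illustrative assumptions, not recommendations.

```python
import numpy as np
import tensorflow as tf

# Synthetic data (illustrative only): 256 samples, 4 features, binary label.
rng = np.random.default_rng(0)
features = rng.normal(size=(256, 4)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("float32")

# A small fully connected classifier built with the Keras Sequential API.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Train briefly, then predict on a few rows.
history = model.fit(features, labels, epochs=3, batch_size=32, verbose=0)
predictions = model.predict(features[:5], verbose=0)
print(predictions.shape)
```

The whole define, compile, fit, predict loop fits in a dozen lines, which is roughly what made 2.x usable for people who never wanted to think about sessions and placeholders.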

TensorFlow Extended (TFX): Google's production platform for ML pipelines. Includes TensorFlow Serving for model deployment, plus data validation and preprocessing components that work great when you configure them correctly.
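
TensorFlow Serving loads models in the SavedModel format from a directory laid out as model_name/version_number. The sketch below exports a toy module in that layout and reloads it to check the serving signature. The Scaler class, the "scaler" model name, and the temporary export path are hypothetical and exist only for illustration; a real pipeline would export a trained model.

```python
import os
import tempfile
import tensorflow as tf


class Scaler(tf.Module):
    """Hypothetical toy model: multiplies its input by a stored scalar."""

    def __init__(self):
        super().__init__()
        self.scale = tf.Variable(2.0)

    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return {"scaled": x * self.scale}


# Serving expects <model_name>/<version>/; "1" is the version directory.
export_dir = os.path.join(tempfile.mkdtemp(), "scaler", "1")
module = Scaler()
tf.saved_model.save(
    module,
    export_dir,
    signatures={"serving_default": module.__call__.get_concrete_function()},
)

# Reload and call the signature the way a serving runtime would look it up.
loaded = tf.saved_model.load(export_dir)
infer = loaded.signatures["serving_default"]
result = infer(x=tf.constant([1.0, 2.0]))
print(result["scaled"].numpy())
```

Pointing a TensorFlow Serving instance at the parent "scaler" directory is the usual next step; that part is deployment configuration and is not shown here.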

Specialized Libraries: The ecosystem covers everything - TensorFlow Lite (rebranded as LiteRT in 2024) for mobile deployment, TensorFlow.js for browser applications, and TensorFlow Quantum for quantum ML research (if you're into that).

Why TensorFlow Became the Standard

TensorFlow's dominance in the machine learning space stems from several key advantages:

Built for Google Scale: Unlike PyTorch, which started in research labs, TensorFlow was built to handle Google Search and Gmail from day one. This means it probably won't collapse when you get your first big traffic spike - probably. Google actually uses this internally for billion-user services, so the deployment story is solid even if the developer experience occasionally makes you want to quit programming.

Scalability: TensorFlow excels at distributed training across multiple GPUs and machines. The tf.distribute.Strategy API lets you scale from single-GPU development to multi-node clusters with minimal changes to model code: you pick a strategy and build the model inside its scope.
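
As a rough sketch of what that looks like in practice, the example below uses tf.distribute.MirroredStrategy, which does synchronous data-parallel training across the GPUs on one machine and falls back to a single replica when no GPU is present. The model, the synthetic data, and the batch size are assumptions made for the example.

```python
import numpy as np
import tensorflow as tf

# One replica per visible GPU; a single CPU replica if there are none.
strategy = tf.distribute.MirroredStrategy()
print("Replicas in sync:", strategy.num_replicas_in_sync)

# Variables created inside the scope are mirrored across replicas.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(8,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="sgd", loss="mse")

# Synthetic regression data (illustrative only).
rng = np.random.default_rng(0)
x = rng.normal(size=(128, 8)).astype("float32")
y = x.sum(axis=1, keepdims=True).astype("float32")

# The batch size here is the global batch, split across replicas.
global_batch = 16 * strategy.num_replicas_in_sync
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(global_batch)

history = model.fit(dataset, epochs=2, verbose=0)
print(history.history["loss"])
```

The model definition is the same as it would be on one device; only the strategy object and the scope are new. Multi-node setups use other strategies and need cluster configuration that this sketch does not cover.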

Enterprise Support: You can actually call someone when TensorFlow breaks your production system at 3am, instead of posting on Stack Overflow and hoping. Google provides enterprise support through Google Cloud's Vertex AI (the successor to AI Platform), though their documentation assumes you have a PhD in distributed systems and unlimited time to decipher their jargon.

Hardware Optimization: TensorFlow leverages specialized hardware when it feels like cooperating. TPUs are blazing fast if you can get access and your code doesn't use any of the many TensorFlow operations that TPUs don't support. NVIDIA GPU support works great until driver updates break everything, and Intel oneDNN (formerly MKL-DNN) optimizations help CPU performance, but good luck debugging when they silently fail.
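
Before blaming the framework, it helps to check what hardware TensorFlow can actually see. This is a small sketch using tf.config; whether to enable memory growth is a judgment call and is shown only as one common option.

```python
import tensorflow as tf

# Ask TensorFlow which physical devices it detected.
cpus = tf.config.list_physical_devices("CPU")
gpus = tf.config.list_physical_devices("GPU")
print("CPUs:", len(cpus), "GPUs:", len(gpus))

# Optional: let GPU memory grow on demand instead of reserving it all upfront.
# This must happen before the GPUs are initialized, hence the guard.
for gpu in gpus:
    try:
        tf.config.experimental.set_memory_growth(gpu, True)
    except RuntimeError as err:
        print("Could not set memory growth:", err)
```

An empty GPU list on a machine that has a GPU usually points at a driver or CUDA mismatch rather than at your model code.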

Architecture and Performance

TensorFlow's architecture centers around computational graphs - directed graphs where nodes represent operations and edges represent data flow (tensors). This design enables several benefits:

Automatic Differentiation: TensorFlow computes gradients automatically through the tf.GradientTape API, which records operations during the forward pass and replays them in reverse to get the derivatives needed for training neural networks.
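
A minimal sketch of that mechanism, using a hand-picked function y = x^2 + 2x so the result can be checked by hand:

```python
import tensorflow as tf

x = tf.Variable(3.0)

# Operations on variables inside the context are recorded on the tape.
with tf.GradientTape() as tape:
    y = x * x + 2.0 * x  # y = x^2 + 2x

# dy/dx = 2x + 2, which is 8 at x = 3.
grad = tape.gradient(y, x)
print(float(grad))
```

A training loop does the same thing with a loss in place of y and the model's trainable variables in place of x, then hands the gradients to an optimizer.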

Graph Optimization: The Grappler optimization system automatically improves graph performance through techniques like operator fusion, constant folding, and memory optimization.
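
Grappler runs automatically on graphs built by tf.function, so most code never touches it. For the curious, individual passes can be toggled through tf.config.optimizer. The sketch below switches two passes on explicitly and runs a function with a constant subexpression that constant folding may precompute; the affine function is a made-up example, and the snippet does not inspect the optimized graph.

```python
import tensorflow as tf

# Explicitly enable two Grappler passes (they are normally on by default).
tf.config.optimizer.set_experimental_options({
    "constant_folding": True,
    "arithmetic_optimization": True,
})
options = tf.config.optimizer.get_experimental_options()
print(options)


@tf.function
def affine(x):
    # 2.0 * 3.0 does not depend on x, so it is a candidate for constant folding.
    return x * (tf.constant(2.0) * tf.constant(3.0)) + 1.0


result = affine(tf.constant(4.0))
print(float(result))
```

Turning a pass off is mostly a debugging tool, for example when you suspect an optimization of changing numerical behavior.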

Execution Modes: TensorFlow 2.x defaults to eager execution for intuitive debugging, while also supporting graph compilation via the @tf.function decorator for production performance.
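
The difference between the two modes is easiest to see with a Python side effect. In the sketch below, trace_log is a hypothetical helper list: appending to it happens only while TensorFlow traces the function into a graph, not on later calls that reuse that graph.

```python
import tensorflow as tf

trace_log = []  # hypothetical helper: records each time the function is traced


@tf.function
def squared_sum(x):
    trace_log.append("traced")  # Python side effect, runs only during tracing
    return tf.reduce_sum(tf.square(x))


# Eager execution: the op runs immediately and returns a concrete value.
eager_result = tf.reduce_sum(tf.square(tf.constant([1.0, 2.0, 3.0])))

# First call traces and compiles a graph; the second call has the same
# dtype and shape, so it reuses that graph without retracing.
a = squared_sum(tf.constant([1.0, 2.0, 3.0]))
b = squared_sum(tf.constant([4.0, 5.0, 6.0]))

print(float(eager_result), float(a), float(b), len(trace_log))
```

This is also the classic @tf.function gotcha: print statements and other Python side effects inside the function fire during tracing only, which is confusing the first time you try to debug a compiled function.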

TensorFlow usually keeps up with PyTorch in training speed, and actually beats it for production inference - assuming you can get the deployment working without pulling your hair out. The MLPerf benchmarks show TensorFlow doing well, but those are run by teams who know what they're doing and have unlimited time to tune everything.

Real-World Impact and Adoption

TensorFlow's adoption extends across industries and company sizes:

Technology Giants: Beyond Google, companies like Uber, Airbnb, and Twitter use TensorFlow for core business functions including recommendation systems, fraud detection, and personalization.

Scientific Research: TensorFlow powers research at institutions like CERN for physics simulations, NASA for astronomical data analysis, and healthcare organizations for medical imaging and drug discovery.

Startups and Scale: The framework scales from individual developers prototyping on laptops to enterprises processing petabytes of data. This scalability explains why TensorFlow appears in over 180,000 GitHub repositories as of 2025.

Current State and Future Direction

As of September 2025, TensorFlow maintains its position as one of the two dominant deep learning frameworks (alongside PyTorch). Google continues significant investment, with the 2.20.0 release introducing improvements to distributed training, better ARM CPU performance, and enhanced debugging tools.

The framework's future focuses on:

- Easier Deployment: Simplified mobile and edge deployment through TensorFlow Lite improvements

- Hardware Diversity: Broader support for emerging AI chips and accelerators

- Responsible AI: Built-in tools for fairness, explainability, and privacy preservation

- Developer Experience: Continued improvements to debugging, profiling, and error messaging

TensorFlow's combination of production readiness, comprehensive ecosystem, and industry backing ensures its continued relevance as machine learning becomes increasingly central to software development across all industries.

Google finally figured out that 1.x was a usability nightmare, so 2.x actually works like normal Python. PyTorch won over researchers because debugging doesn't make you want to quit programming, but TensorFlow kept the enterprise market locked down through battle-tested deployment and Google's massive investment in keeping it working.

Whether you're building recommendation engines, fraud detection systems, or computer vision applications, TensorFlow provides the full stack needed to go from prototype to production at scale - which is exactly why it dominates enterprise ML, despite making developers occasionally question their career choices during debugging sessions.