I've worked at five companies. Four of them still SSH into production to run database changes manually. It's 2025 and we're still copying SQL from Slack messages into a terminal window like savages.

Liquibase fixes this mess by letting you version control your schema changes like regular code. I've been using it for three years and it's prevented me from destroying production at least twice - once when it caught a changeset ID conflict that would have skipped half our schema updates.

The Problem: Database Changes Are Pure Pain

Most teams have automated deployments for their app code but still run database changes manually. You know the drill - some poor bastard has to SSH into production, run SQL scripts by hand, and hope nothing explodes. Last month I watched a senior dev accidentally DROP TABLE users instead of DROP TABLE user_sessions. Two hours of downtime while we restored from backup.

Database changes are the one thing we all pretend is "too risky" to automate, so we make them manually risky instead. While your code deploys in CI/CD pipelines with rollbacks and health checks, database changes still happen in a terminal window with crossed fingers and a "works on my machine" attitude.

How This Thing Actually Works (And Where It Breaks)

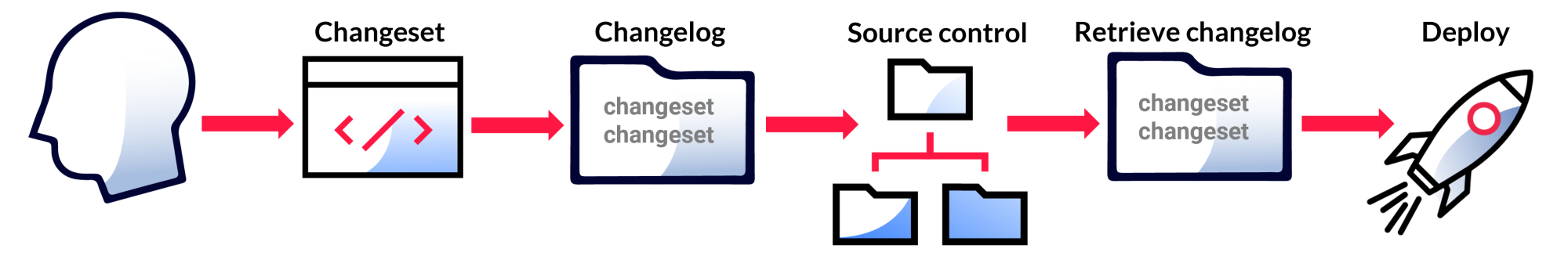

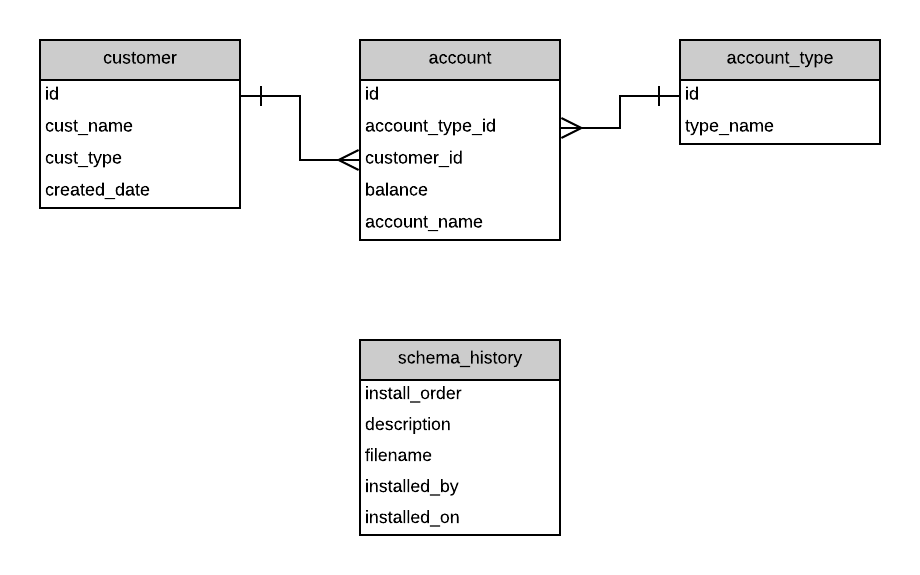

Liquibase Architecture Overview: The system tracks changes through versioned changelog files, applies them to your database via the CLI or API, and maintains state in dedicated tracking tables.

Liquibase tracks database changes in "changelog" files: basically XML, YAML, SQL, or JSON files that describe what needs to happen. Each change is a "changeset" with an ID; strictly speaking, Liquibase identifies a changeset by the combination of its ID, its author, and the changelog file path. Pro tip: those IDs matter a lot. I learned this the hard way when two developers used the same ID and our database shit itself. The Liquibase best practices guide has more details on avoiding this disaster.
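Liquibase's own validation is the real safety net here, but the identity rule is easy to see in a small sketch. This is a hypothetical pre-merge check, assuming changesets have already been parsed out of the changelog into (id, author, path) records; the ChangeSet type, find_duplicate_identifiers, and the file paths are made up for illustration.

```python
from collections import Counter
from typing import NamedTuple


class ChangeSet(NamedTuple):
    # Liquibase identifies a changeset by id + author + changelog path together.
    id: str
    author: str
    path: str


def find_duplicate_identifiers(changesets: list[ChangeSet]) -> list[ChangeSet]:
    """Return each identifier that appears more than once, in first-seen order."""
    counts = Counter(changesets)
    seen = set()
    dups = []
    for cs in changesets:
        if counts[cs] > 1 and cs not in seen:
            seen.add(cs)
            dups.append(cs)
    return dups


changesets = [
    ChangeSet("42", "alice", "db/changelog/release-1.yaml"),
    ChangeSet("43", "alice", "db/changelog/release-1.yaml"),
    ChangeSet("42", "alice", "db/changelog/release-1.yaml"),  # copy-pasted ID
    ChangeSet("42", "bob", "db/changelog/release-1.yaml"),    # different author: a distinct changeset
]
print(find_duplicate_identifiers(changesets))
```

Note that the bob entry is not flagged: same ID, different author, so it counts as a separate changeset.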

The tool creates tracking tables in your database (DATABASECHANGELOG and DATABASECHANGELOGLOCK). Do not fuck with these tables. Seriously. I once had a junior dev manually delete rows from DATABASECHANGELOG thinking it would "clean things up." Spent six hours figuring out why Liquibase thought half our schema didn't exist: with those tracking rows gone, it treated the matching changesets as never run. The tracking tables documentation explains exactly why these are sacred.
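Roughly, Liquibase decides what to run by comparing the changelog against the rows already in DATABASECHANGELOG. The sketch below simulates that with an in-memory SQLite table; it keeps only the ID, AUTHOR, and FILENAME columns (the real table has more, such as DATEEXECUTED and MD5SUM), and the pending helper is a hypothetical stand-in for Liquibase's internal logic, not its API.

```python
import sqlite3

# Simplified DATABASECHANGELOG with only the identity columns.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DATABASECHANGELOG (ID TEXT, AUTHOR TEXT, FILENAME TEXT)")

# Hypothetical changelog contents as (id, author, filename) tuples.
changelog = [
    ("1", "alice", "changelog.yaml"),
    ("2", "alice", "changelog.yaml"),
    ("3", "bob", "changelog.yaml"),
]


def pending(conn, changelog):
    """Changesets in the changelog that have no matching tracking row."""
    applied = set(conn.execute("SELECT ID, AUTHOR, FILENAME FROM DATABASECHANGELOG"))
    return [cs for cs in changelog if cs not in applied]


# Record all three as applied, as a successful update would.
conn.executemany(
    "INSERT INTO DATABASECHANGELOG (ID, AUTHOR, FILENAME) VALUES (?, ?, ?)", changelog
)
print(pending(conn, changelog))  # []

# The "clean things up" mistake: deleting a tracking row.
conn.execute("DELETE FROM DATABASECHANGELOG WHERE ID = '2'")
print(pending(conn, changelog))  # [('2', 'alice', 'changelog.yaml')]
```

After the delete, the next update would try to re-apply changeset 2 against a schema that already contains its changes, which is exactly the kind of mess that eats an afternoon.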

Here's what breaks regularly:

- Connection timeouts during large migrations (set your timeout to like 30 minutes, not 30 seconds); see the timeout parameters guide

- Lock table issues when deployments crash mid-run (you'll need to clear the lock manually, e.g. with the release-locks command)

- Case sensitivity problems between local dev (usually case-insensitive) and production; PostgreSQL, for example, folds unquoted identifiers to lowercase (see the PostgreSQL tutorial)

- Foreign key constraint violations when changesets are applied out of order; preconditions can guard against this (see the preconditions documentation)
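For the stuck-lock case, the standard fix is Liquibase's release-locks command, but only once you're sure no migration is actually still running. Here's a sketch of the check-then-release logic against a simplified SQLite copy of DATABASECHANGELOGLOCK (ID, LOCKED, LOCKGRANTED, LOCKEDBY). The stale_lock and release_lock helpers, the 30-minute threshold, and the LOCKEDBY value are hypothetical; release_lock is only a rough approximation of what the real command does to the lock row.

```python
import sqlite3
from datetime import datetime, timedelta

conn = sqlite3.connect(":memory:")
# Simplified lock table; timestamps stored as ISO strings since SQLite has no datetime type.
conn.execute(
    "CREATE TABLE DATABASECHANGELOGLOCK "
    "(ID INTEGER PRIMARY KEY, LOCKED INTEGER, LOCKGRANTED TEXT, LOCKEDBY TEXT)"
)

now = datetime(2025, 1, 1, 12, 0, 0)
# A lock left behind by a deployment that was killed three hours ago.
conn.execute(
    "INSERT INTO DATABASECHANGELOGLOCK VALUES (1, 1, ?, ?)",
    ((now - timedelta(hours=3)).isoformat(), "ci-runner-7 (10.0.0.12)"),
)


def stale_lock(conn, now, max_age):
    """Return (lockedby, age) if the lock has been held longer than max_age, else None."""
    row = conn.execute(
        "SELECT LOCKGRANTED, LOCKEDBY FROM DATABASECHANGELOGLOCK WHERE ID = 1 AND LOCKED = 1"
    ).fetchone()
    if row is None:
        return None
    age = now - datetime.fromisoformat(row[0])
    return (row[1], age) if age > max_age else None


def release_lock(conn):
    """Roughly what release-locks does: mark the lock row as free."""
    conn.execute(
        "UPDATE DATABASECHANGELOGLOCK "
        "SET LOCKED = 0, LOCKGRANTED = NULL, LOCKEDBY = NULL WHERE ID = 1"
    )


lock = stale_lock(conn, now, max_age=timedelta(minutes=30))
if lock:
    holder, age = lock
    print(f"Lock held by {holder} for {age}; confirm that process is dead before releasing.")
```

The LOCKEDBY value is the useful part: it tells you which host to go check before you clear anything.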

The Startup Time Problem Nobody Talks About

Liquibase is Java-based, so it takes fucking forever to start up. On our CI server, just launching the tool takes 15-20 seconds before it even connects to the database. This adds up when you're running migrations in multiple environments. Even the latest versions are still painfully slow - the JVM startup overhead is just brutal for database tooling.

Docker helps a bit since you can keep the container warm, but you're still looking at substantial startup time every single run. I've timed it: from launching the tool to an actual database connection averages 18 seconds on our AWS runners.
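If you want your own numbers instead of mine, a tiny timing harness is enough. This sketch averages wall-clock time over several runs and compares against a bare Python interpreter start. It assumes the liquibase CLI is on PATH (and skips that part if it isn't), and liquibase --version only measures CLI and JVM startup, not the database connection. The function name is hypothetical.

```python
import shutil
import statistics
import subprocess
import sys
import time


def average_startup_seconds(cmd: list[str], runs: int = 5) -> float:
    """Run cmd `runs` times and return the mean wall-clock duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises on failure, so a crashing command isn't counted as a fast run.
        subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)


if __name__ == "__main__":
    # Baseline for comparison: starting a bare Python interpreter.
    print(f"python baseline: {average_startup_seconds([sys.executable, '-c', 'pass']):.2f}s")
    # Assumption: the liquibase CLI is on PATH; skip quietly if it isn't.
    if shutil.which("liquibase"):
        print(f"liquibase --version: {average_startup_seconds(['liquibase', '--version']):.1f}s")
```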

Database Support (AKA "It Works Until It Doesn't")

Liquibase supports 60+ databases, including the usual suspects: PostgreSQL, MySQL, Oracle, SQL Server. It also works with newer stuff like MongoDB, Snowflake, and Databricks.

Reality check: Basic operations work everywhere. Advanced features? That's where you'll find gaps. PostgreSQL and Oracle get the best support. MySQL works great. SQL Server is decent but occasionally surprises you. MongoDB support is newer and has some rough edges.

Production Horror Stories (Learning from Pain)

Ticketmaster and IBM Watson Health use this in production, which gives me some confidence it can handle real workloads. Enterprise case studies show teams using Liquibase across thousands of databases. But I've still seen spectacular failures:

- Migration that took 14 hours on a 500GB table because we didn't properly index before the change

- Rollback that failed halfway through, leaving the database in an inconsistent state

- Lock table that got stuck for three hours because someone killed the process mid-migration

The trick is testing migrations on production-sized data first. Don't trust that tiny dev database - real data behaves differently. Most teams don't plan for rollbacks properly until it's too late. The migration best practices guide and rollback strategies documentation cover the things that actually matter in production.
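One way to stop rollback planning from being an afterthought is to flag changesets that Liquibase can't undo automatically and that don't declare an explicit rollback. The sketch assumes changesets are already parsed into a hypothetical ChangeSet record. NO_AUTO_ROLLBACK is a partial, illustrative list (dropTable, dropColumn, and raw sql are among the change types without automatic rollback), so check the change-type documentation for the full picture.

```python
from dataclasses import dataclass

# Illustrative, not exhaustive: change types Liquibase can't reverse on its own,
# so a changeset containing them needs an explicit rollback.
NO_AUTO_ROLLBACK = {"dropTable", "dropColumn", "sql"}


@dataclass
class ChangeSet:
    id: str
    change_types: list[str]
    has_explicit_rollback: bool = False


def missing_rollbacks(changesets):
    """IDs of changesets that contain a change with no automatic or explicit rollback."""
    return [
        cs.id
        for cs in changesets
        if not cs.has_explicit_rollback and NO_AUTO_ROLLBACK.intersection(cs.change_types)
    ]


release = [
    ChangeSet("101", ["createTable"]),
    ChangeSet("102", ["addColumn", "sql"]),
    ChangeSet("103", ["dropTable"], has_explicit_rollback=True),
    ChangeSet("104", ["dropColumn"]),
]
print(missing_rollbacks(release))  # ['102', '104']
```

This only tells you a rollback exists, not that it works; you still have to run it against production-sized data to know.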