So what really happened? Beyond the corporate speak about "learning integration," GitHub Copilot Workspace was a fascinating experiment that failed to understand what developers actually wanted.

I used the Workspace preview for a few weeks last year. Here's what it was actually like to use GitHub's AI development experiment before they killed it.

The Three-Agent Circus (Or: How to Turn 5 Minutes Into 2 Hours)

Workspace ran on GPT-4 Turbo with this three-agent clusterfuck that looked slick in demos. In October 2024, when I was trying to ship before a deadline, it made me want to throw my laptop:

Brainstorm Agent: This bastard would spend 3-4 minutes "analyzing your architectural patterns" for bugs that took 30 seconds to explain. I submitted a GitHub issue about useEffect causing infinite re-renders in React 18.2.0, and it came back with an 800-word dissertation on "state management paradigms" and "component lifecycle optimization strategies."

The bug? I forgot to add an empty dependency array. One fucking line: useEffect(() => { ... }, []).
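For anyone who hasn't been bitten by this one: an effect with no dependency array runs after every render, so an effect that sets state keeps triggering the next render. Here's a rough sketch of that rule as a toy simulation. It is not React's actual implementation, and `shouldRunEffect` and `countRenders` are names I made up for illustration.

```typescript
// Toy model of how a dependency array gates an effect. NOT React's real code.
type Deps = readonly unknown[] | undefined;

// An effect runs on the first render, on every render when there is no
// dependency array, or when any dependency changed (compared with Object.is).
function shouldRunEffect(prev: Deps, next: Deps, isFirstRender: boolean): boolean {
  if (isFirstRender) return true;
  if (prev === undefined || next === undefined) return true;
  if (prev.length !== next.length) return true;
  return next.some((dep, i) => !Object.is(dep, prev[i]));
}

// Simulates a component whose effect always stores a new state value,
// which schedules another render. The cap stands in for "forever".
function countRenders(deps: Deps, maxRenders = 50): number {
  let renders = 0;
  let prevDeps: Deps = undefined;
  let needsRender = true;
  while (needsRender && renders < maxRenders) {
    renders += 1;
    needsRender = false;
    if (shouldRunEffect(prevDeps, deps, renders === 1)) {
      needsRender = true; // the effect set state, so another render follows
    }
    prevDeps = deps;
  }
  return renders;
}

const withoutArray = countRenders(undefined); // keeps going until the cap (50)
const withEmptyArray = countRenders([]);      // 2: first render plus one re-render
```

With `[]` there is nothing that can change between renders, so the effect runs once and the loop stops.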

Plan Agent: This overachieving piece of shit would generate 15-step "implementation roadmaps" to fix a typo. I needed to change color: red to color: blue in a CSS file, and it suggested:

- Analyze current color scheme architecture

- Evaluate brand consistency implications

- Create color variable abstraction layer

- Implement design system tokens

- ...

- Deploy with comprehensive testing

For changing one fucking color value. And you couldn't just skip to step 15 - had to manually delete 14 steps of bullshit every goddamn time.

Implementation Agent: Started strong, then shit the bed when it hit real code. It would churn for 5 minutes, generate half a component, then throw this beauty:

Error: Context complexity threshold exceeded. Unable to maintain coherent implementation strategy.

Translation: "I got confused by your TypeScript interfaces and gave up."

Worst one was when it left me with a React component that had this gem:

    function UserProfile({ user }) {
      const [loading, setLoading] = useState(true);
      // TODO: Implement user data fetching logic
      // TODO: Add error handling
      // TODO: Implement loading states
      return <div>Profile goes here</div>;
    }

Thanks, asshole. Really earned that GPT-4 API cost there.
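For scale, the logic behind those three TODOs is not much code. This is a minimal sketch of it with React left out so it runs on its own. The `User` shape, `ProfileState`, `loadProfile`, and `renderText` are all hypothetical names of mine; nothing here is what Workspace generated or was supposed to generate.

```typescript
// Hypothetical user shape; the stub above never defined one.
interface User {
  id: string;
  name: string;
}

// One state per TODO: loading, error handling, and the fetched data.
type ProfileState =
  | { status: "loading" }
  | { status: "error"; message: string }
  | { status: "ready"; user: User };

// fetchUser is passed in so the sketch needs no network and no React.
async function loadProfile(
  userId: string,
  fetchUser: (id: string) => Promise<User>,
): Promise<ProfileState> {
  try {
    const user = await fetchUser(userId);
    return { status: "ready", user };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { status: "error", message };
  }
}

// What a component might show for each state, as plain text.
function renderText(state: ProfileState): string {
  switch (state.status) {
    case "loading":
      return "Loading profile...";
    case "error":
      return `Could not load profile: ${state.message}`;
    case "ready":
      return `Profile: ${state.user.name}`;
  }
}
```

In a real component you would start in the `loading` state, call something like `loadProfile` from an effect, and store the result in state.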

The Browser IDE from Hell

The browser environment felt like coding through molasses. Every keystroke had a 200-300ms delay because everything went through their containerized bullshit. My MacBook Pro M2 with 32GB RAM was stuttering on basic text editing while VS Code ran buttery smooth in another tab.

The integrated terminal was a special kind of torture. Running npm install took 30 seconds longer than local because of their "security sandboxing." Git commands would randomly timeout. And trying to debug a Node.js app? Good fucking luck seeing console logs in real time.

Mobile development was the biggest joke. I tried reviewing a PR on my iPhone 15 Pro once - squinting at a 200-line diff, trying to tap the exact pixel to comment on line 87. Nearly threw my phone into traffic. This GitHub issue has dozens of developers calling out the mobile experience as "unusable for anything beyond reading code."

The Production Disasters (AKA Why I Stopped Using This Shit)

Real failures that cost me actual hours in November 2024:

- Memory errors: Hit the context limit on a 2,000-line React app and it forgot the entire component structure mid-edit. Left me with imports for components that didn't exist anymore.

- Branch conflicts: Created a PR that conflicted with main because it was still working off a 3-day-old commit. Spent an hour manually merging conflicts that shouldn't have existed.

- Silent failures: Workspace would just... stop. No error message, no logs, just spinning cursors forever. Found out later it hit their 10-minute timeout limit.

- Syntax disasters: Generated this beautiful TypeScript:

    interface UserProps {
      name: string
      age: number
      // End of interface
    }
    email: string; // This broke the entire build
    }

Took me 20 minutes to find that orphaned line.

- Token limit hell: Hit GPT-4's context window on a simple Express API. Error message:

Context exceeds maximum tokens (128k). Please simplify your request.

Fuck you, I just wanted to add authentication.
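If you want a rough idea of whether a codebase will blow past a 128k window before a tool tells you, a back-of-the-envelope check is easy to write. This sketch assumes about 4 characters per token, which is only a rule of thumb (real tokenizers vary), and the 8,000-token headroom is a number I picked, not anything GitHub or OpenAI documented.

```typescript
// Assumption: ~4 characters per token. Real tokenizers differ, so this
// is an estimate, not a measurement.
const CHARS_PER_TOKEN = 4;
const CONTEXT_LIMIT_TOKENS = 128_000; // the 128k figure from the error message

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Sums the estimate over all files and checks it against the limit,
// leaving headroom for the prompt and the model's reply.
function fitsInContext(
  files: readonly string[],
  reservedTokens = 8_000, // hypothetical headroom, not a documented value
): { totalTokens: number; fits: boolean } {
  const totalTokens = files.reduce((sum, file) => sum + estimateTokens(file), 0);
  return { totalTokens, fits: totalTokens + reservedTokens <= CONTEXT_LIMIT_TOKENS };
}

// Stand-ins for file contents: 40,000 and 600,000 characters.
const small = fitsInContext(["x".repeat(40_000)]);  // ~10,000 tokens, fits
const huge = fitsInContext(["x".repeat(600_000)]);  // ~150,000 tokens, does not fit
```

By this estimate, 128k tokens is roughly half a megabyte of source, which makes the error on a simple Express API all the more baffling.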

The GitHub Actions integration was clever in theory but added latency to everything. Every code execution went through a full CI/CD pipeline, turning 30-second local tests into 5-minute waits.

Why It Never Worked (The 3AM Test)

Real scenario: Production API is down, throwing ECONNREFUSED errors, CEO is pinging Slack, and you've got 30 minutes before the morning standup where you have to explain why user signups are broken.

Workspace workflow:

- Open browser (30 seconds)

- Navigate to repository (another 30 seconds of loading)

- Explain the problem to Brainstorm Agent (2 minutes of typing)

- Wait for analysis (3 minutes of "thinking")

- Edit the overcomplicated plan (2 minutes)

- Watch Implementation Agent fail on the first database connection (5 minutes)

- Debug broken code it generated (15 minutes)

- Give up and fix it manually in VS Code (30 seconds)

VS Code workflow:

- Open file with the error (Cmd+P, type filename)

- Fix the fucking connection string

- Save and deploy

Total time: 45 seconds vs. nearly 30 minutes of Workspace hell.
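If you want to check the math, the Workspace steps can be tallied straight from the estimates in the list. The step labels below are shortened by me and the durations are the ones given above; the sum lands in the same ballpark as the total quoted.

```typescript
// Step durations in seconds, taken from the Workspace workflow list.
const workspaceSteps: ReadonlyArray<[string, number]> = [
  ["Open browser", 30],
  ["Navigate to repository", 30],
  ["Explain the problem", 120],
  ["Wait for analysis", 180],
  ["Edit the plan", 120],
  ["Watch implementation fail", 300],
  ["Debug generated code", 900],
  ["Fix manually in VS Code", 30],
];

const totalSeconds = workspaceSteps.reduce((sum, [, secs]) => sum + secs, 0);
const totalMinutes = totalSeconds / 60;        // 28.5
const vsCodeSeconds = 45;                      // the figure quoted for the local workflow
const slowdown = totalSeconds / vsCodeSeconds; // 38
```

More than half of the total is the 15 minutes spent debugging code the agent generated, which is the part a local fix skips entirely.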

Cursor understood this. They built AI that works within your existing workflow, not some separate browser experience that makes you context-switch every 5 minutes. Performance comparisons consistently favor Cursor for speed and reliability.

GitHub's mistake was thinking natural language programming meant replacing development workflows instead of enhancing them. The pivot to Coding Agent in May 2025 proved they finally got it. Multiple developer surveys showed Cursor outperforming Workspace in real-world usage.

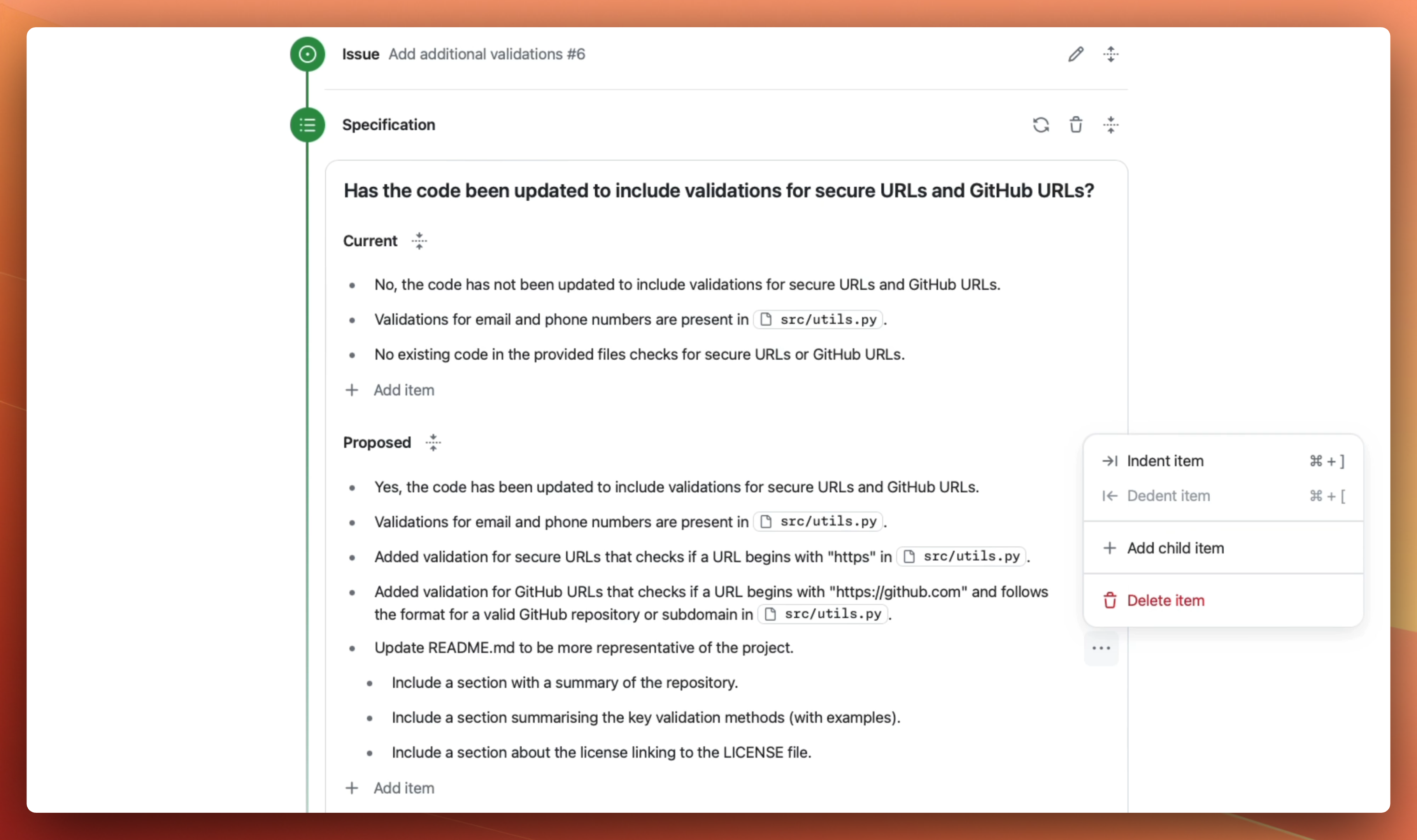

The Performance Reality

What GitHub marketed: "Build complete applications from natural language"

What actually happened: Spend 20 minutes explaining a bug to get a 50-line fix that needed manual debugging

What GitHub marketed: "Mobile-first development environment"

What actually happened: Squinting at tiny code diffs on your phone like an idiot

What GitHub marketed: "Collaborative AI development"

What actually happened: Sharing links to half-broken workspaces that colleagues couldn't properly access

GitHub Copilot Workspace included an integrated terminal, but it was laggy compared to local development environments.

The community feedback was pretty clear by early 2025: cool experiment, wouldn't use it for real work.

Meanwhile, Cursor was quietly building what developers actually wanted: AI that enhances your existing workflow instead of replacing it with some browser-based essay-writing exercise. AI coding tool comparisons in 2025 consistently rank Cursor among the top performers while Workspace was nowhere to be found.

By the time GitHub realized their approach was wrong, better AI coding tools had already captured developer mindshare. The developer community consensus was clear: Workspace was an interesting experiment, but nobody wanted to use it for real work.