After drowning in LangChain's callback hell for weeks, Dify actually makes sense. No more debugging nested promise chains in the middle of the night because someone thought callbacks were a good idea.

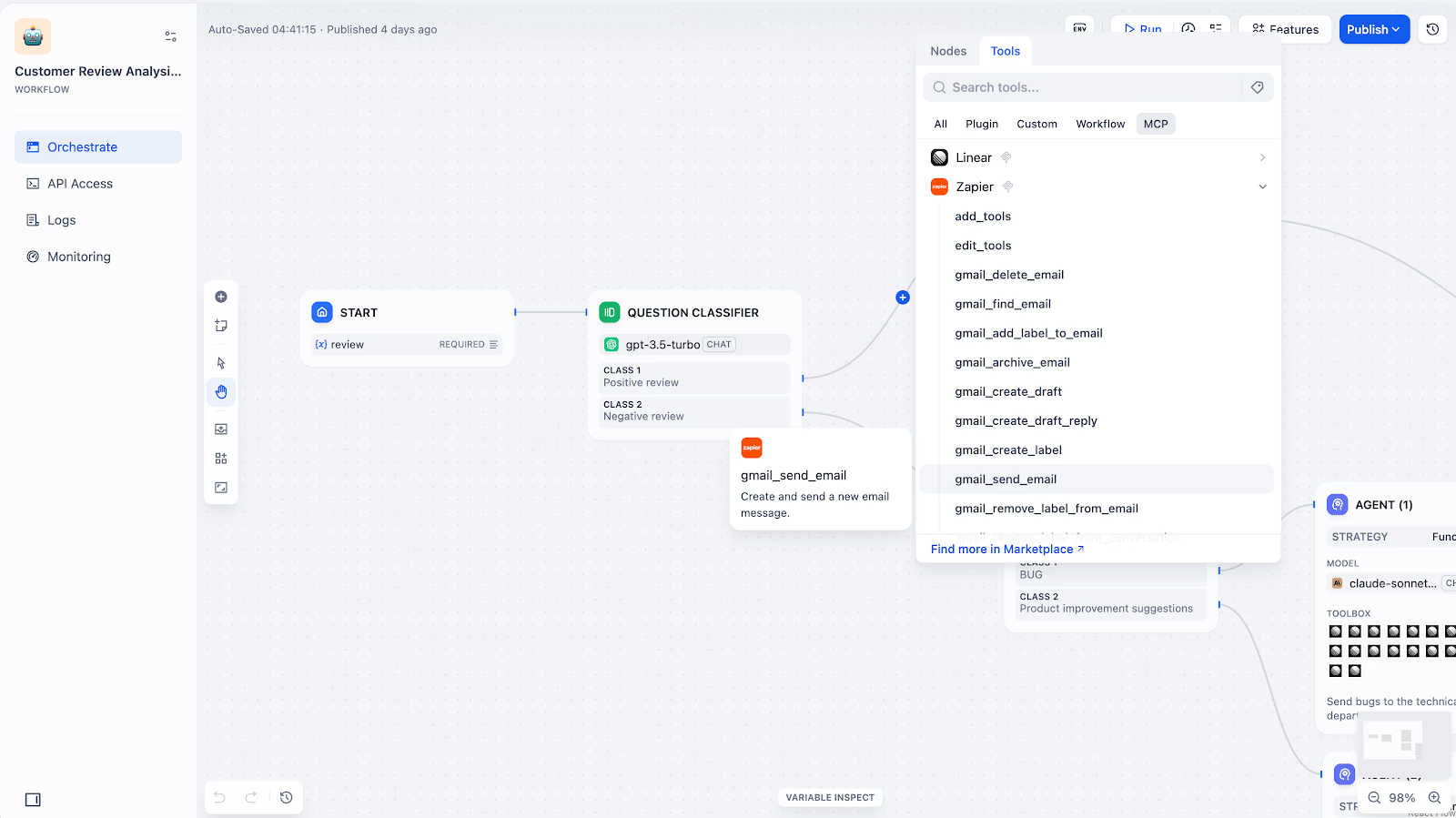

Here's the deal - Dify is a visual AI workflow builder that doesn't make you want to throw your laptop out the window. You drag nodes around instead of writing the same fucking REST API wrapper for the 50th time. It handles 100+ LLM providers so you're not locked into OpenAI's pricing shenanigans.

The Architecture Actually Makes Sense

They redesigned the backend in 2024 to be more modular, so components can fail independently without bringing down the whole system. That matters, because things do fail: vector database connections shit the bed every few weeks with connection timeout errors. The fix? Kill postgres, restart docker-compose, and pray your indices didn't get corrupted. At least it doesn't nuke your entire workflow like it did in v0.6.8.

The core features that actually matter:

- Visual Workflows: Drag-and-drop interface that shows you what's happening instead of hiding it in nested callbacks

- Built-in RAG: No more manually chunking documents and managing vector stores - just upload PDFs and it works

- Model Switching: Change from GPT-4 to Claude to local Llama without rewriting your entire codebase

- Real Debugging: You can actually see where your workflow fails instead of guessing from stack traces
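For a sense of what "manually chunking documents" means in the RAG bullet above, here's a minimal sketch of the kind of helper you end up hand-rolling. To be clear, this is not Dify's chunker and not LangChain's API. The function name `chunk_text` and the `chunk_size` / `overlap` defaults are made up for illustration, and real pipelines usually split on tokens or sentences rather than raw characters.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks, each sharing
    `overlap` characters with the previous chunk.

    Hypothetical helper for illustration only; the sizes are arbitrary.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text,
        # otherwise we'd emit a trailing chunk that is pure overlap.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    for piece in chunk_text("abcdefghij", chunk_size=4, overlap=1):
        print(piece)
```

And that's just the splitting. You still have to embed each chunk, store it, and keep the index in sync with the source files, which is the part a built-in RAG pipeline takes off your plate.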

Production Reality Check

Look, Dify isn't perfect. Memory usage went mental when Jenny uploaded that 200MB compliance PDF: it spiked from 2GB to 14GB of RAM and stayed there until we restarted. Docker setup breaks for weird reasons. Last Tuesday staging just died with "dify-worker exited with code 137" (that's 128 + 9, i.e. the process got a SIGKILL, which in Docker usually means it ran out of memory) and we had to delete node_modules and cry. API rate limits just fail instead of backing off gracefully, so you get "ERROR 429" and the whole pipeline dies.
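Until rate limits back off on their own, the usual workaround is to wrap whatever call is throwing the 429 in your own retry loop. Here's a rough sketch of exponential backoff with a bit of jitter. Everything in it is an assumption for illustration: `RateLimitError` is a stand-in exception (map your HTTP client's 429 onto it), `call_with_backoff` is a made-up name, and the retry counts and delays are numbers I picked, not anything Dify documents.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for an HTTP 429 from whatever client you use."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep):
    """Call fn(); on RateLimitError, wait and retry with exponential backoff.

    Delays grow as base_delay * 2**attempt, capped at max_delay, plus up to
    10% random jitter so parallel workers don't all retry at the same instant.
    `sleep` is injectable so the loop can be tested without actually waiting.
    """
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries, let the caller see the failure
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))


if __name__ == "__main__":
    state = {"calls": 0}

    def flaky_request():
        # Pretend the first two calls get rate limited.
        state["calls"] += 1
        if state["calls"] < 3:
            raise RateLimitError("429 Too Many Requests")
        return "ok"

    print(call_with_backoff(flaky_request, base_delay=0.1))
```

It won't save you if the limit is a hard daily quota, but for bursty traffic it turns "the whole pipeline dies" into "the pipeline gets a bit slower".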

But it's still better than writing everything from scratch. I spent two weeks building a custom RAG pipeline in LangChain that Dify replicated in two hours.

The observability features actually work - you can see token usage, response times, and error rates without setting up Grafana and crying. Self-hosting works if you don't want to send your data to their cloud.

Compare that to LangChain's over-engineered callback hell, Flowise's basic feature set, or LangFlow's habit of breaking your workflows on every update, and you'll understand why developers are switching. The Discord community actually helps solve problems, the documentation doesn't completely suck, and GitHub issues get real responses from maintainers.