UPDATE: As of July 23, 2025, Cody is enterprise-only. Sourcegraph killed the free and pro tiers. This review is still accurate for enterprise users, but individual developers need to look elsewhere.

Ask Copilot about your company's internal API and you'll get generic garbage. "Try using fetch() to call the API" - thanks genius, but which endpoint? What headers? What's the response format when authentication fails?

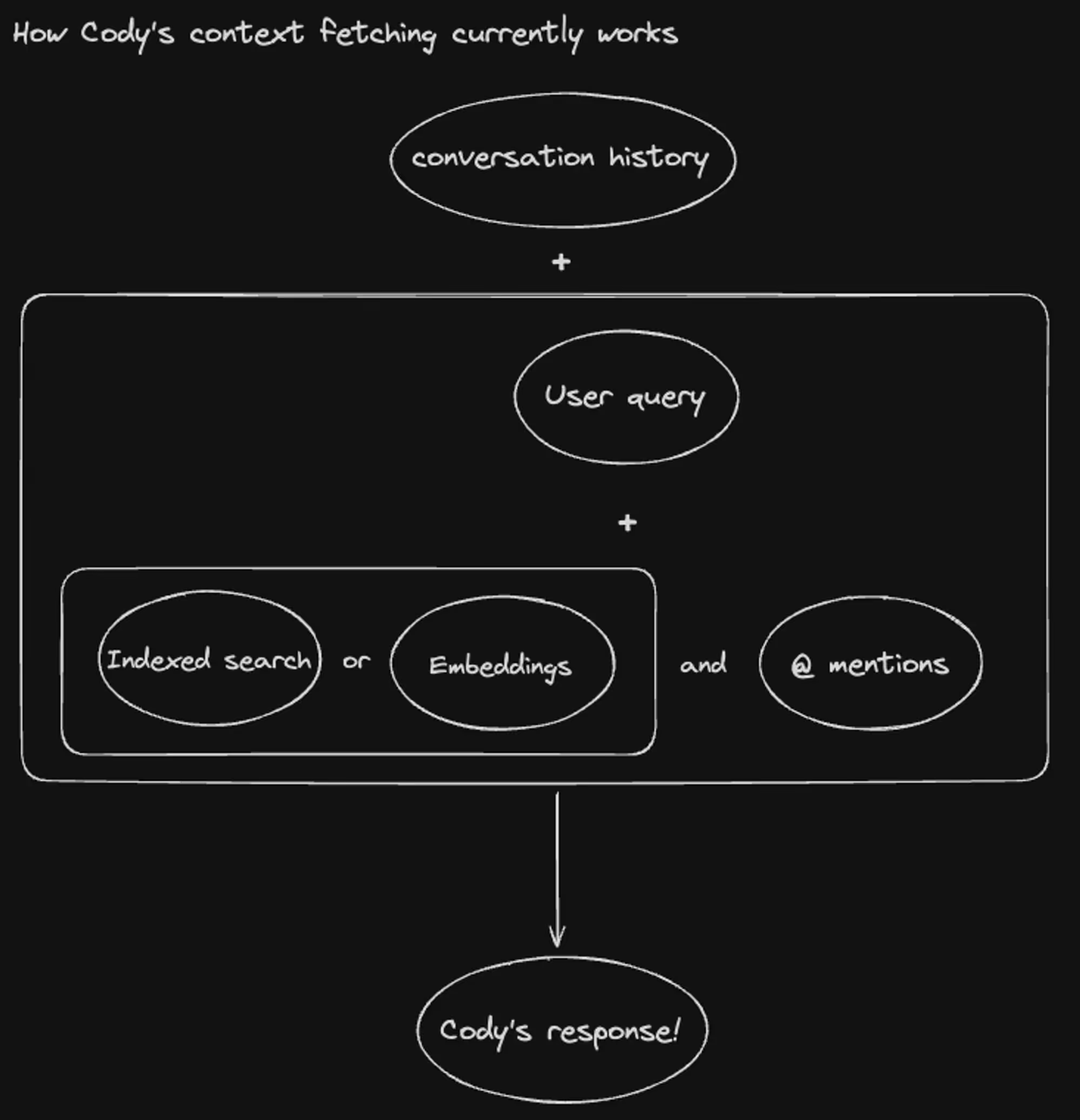

Sourcegraph has been building enterprise code search for years. Companies with monster codebases that would make VS Code cry. Now they've plugged that search engine directly into an AI that can actually see how your 47 microservices connect to each other.

Here's the difference: ask Cody to write error handling and it'll use the same patterns you already have in 73 other places in your codebase. It knows you use custom ApiError classes, that you log to Datadog with specific tags, and that your frontend expects {error: {code, message}} format.

Enterprise Setup Sucks But Works

Your security team will panic about sending code to external APIs. Mine spent 3 weeks asking questions like "what if Sourcegraph gets hacked?" Fair point, honestly.

You can run this whole thing on your own servers if you want. Deploy Sourcegraph first (that's the search engine), then bolt Cody on top. Took me a week and a half, mostly waiting for security approval and fighting with Kubernetes configs.

Memory usage is brutal. Plan for 60+ GB of RAM if you're indexing anything over 500k lines. Our 2M line monolith maxed out a 64GB box and we still had to restart the indexing job twice. Docker containers kept getting OOMKilled until we bumped the limits way up.

Pro tip: If you're on Kubernetes, the default resource limits will kill your indexing pods. Learned this the hard way after watching it fail at 90% completion three times.

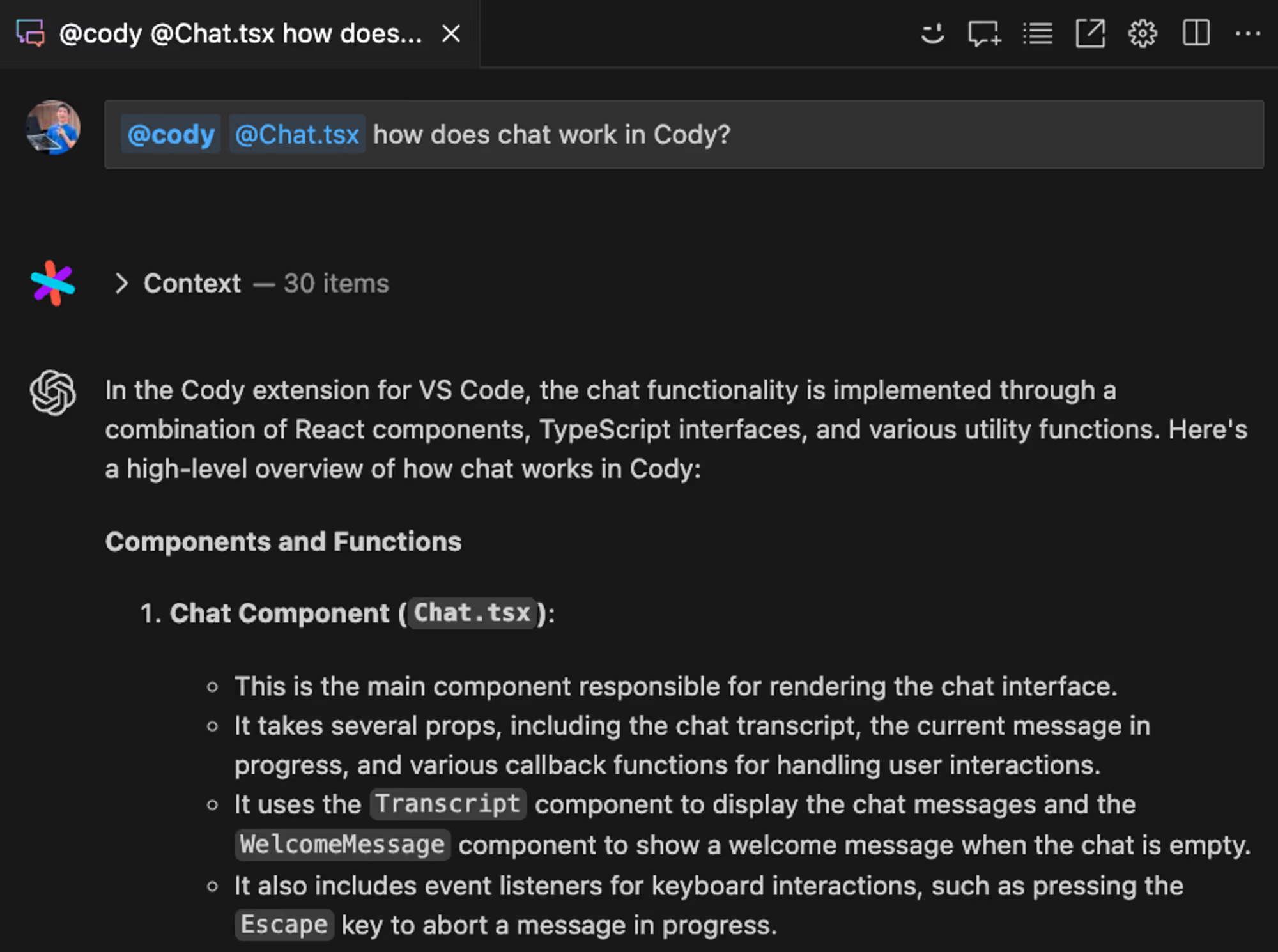

VS Code works great. IntelliJ is okay but feels like an afterthought. Don't bother with Eclipse unless you hate yourself. GitHub repos work perfectly, GitLab is fine, Bitbucket integration exists but has weird edge cases.

The Magic Actually Works

Ask Cody to write a function that calls your user service and it'll use the right endpoint URL, include the auth headers your API expects, and handle the response format you actually return. Because it's read your OpenAPI spec and seen 47 other places where you call that service.

Writing database queries? It knows you use Prisma, that your User table has a deletedAt column for soft deletes, and that you always eager-load the profile relationship. Not magic, just more context.

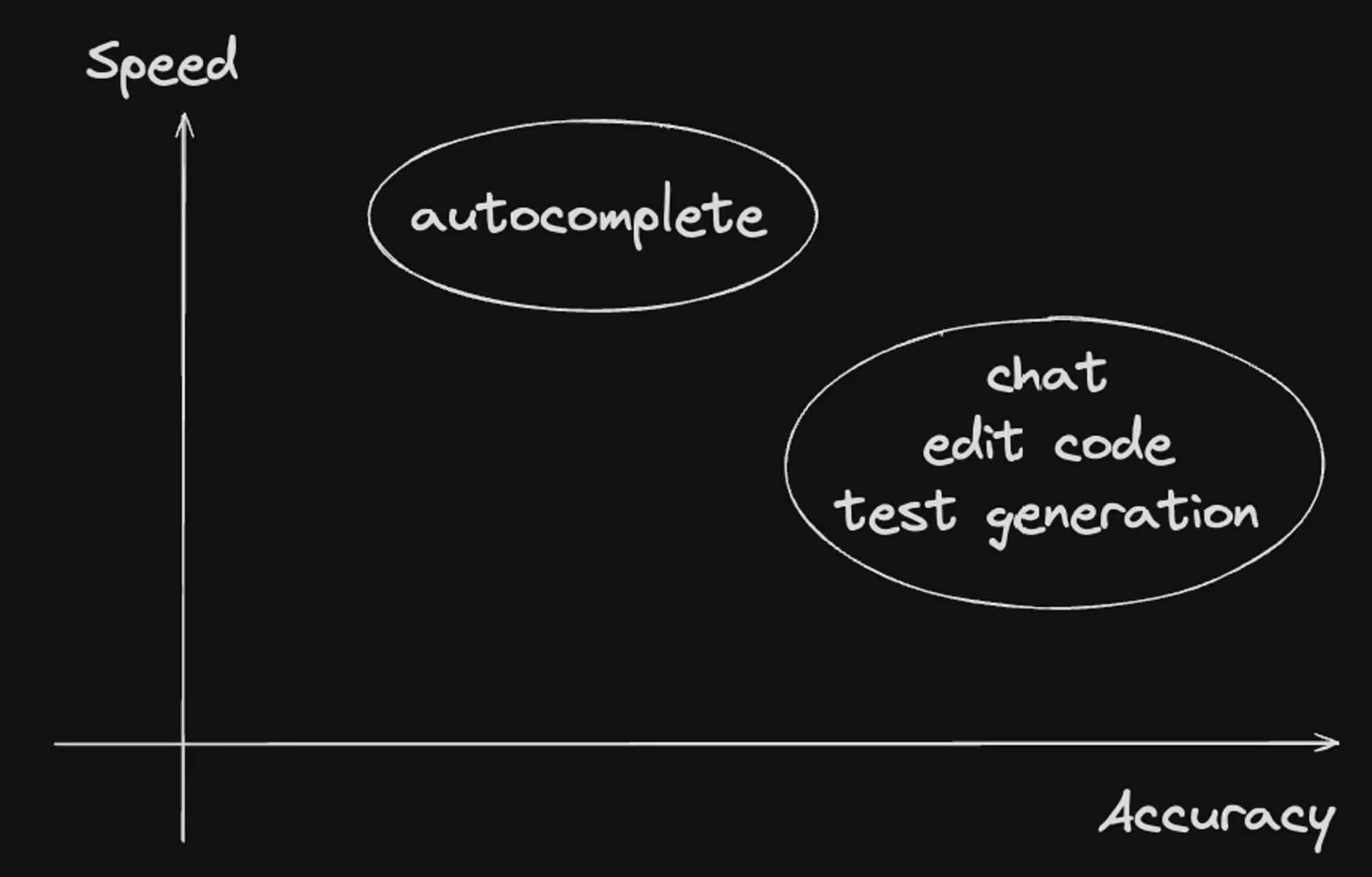

Indexing is slow as hell though. Took 8 hours to churn through our React monolith the first time. Had to re-run the whole thing when we moved auth logic around and renamed half our API endpoints.

It uses Claude 3.5 by default which is pretty good at understanding messy codebases. You can switch to GPT-4 if you want but honestly Claude works fine and doesn't hallucinate as much.

So how does this actually stack up against the competition? That's what everyone really wants to know.

Cloud version: 5 minutes to install the extension. Enterprise self-hosted: plan for 1-2 weeks of setup hell, depending on how paranoid your security team is and how many repos you're indexing. The actual indexing takes hours and will probably crash at least once if you have a big codebase.

Cloud version: 5 minutes to install the extension. Enterprise self-hosted: plan for 1-2 weeks of setup hell, depending on how paranoid your security team is and how many repos you're indexing. The actual indexing takes hours and will probably crash at least once if you have a big codebase.